Contents

Memory allocation scheme is a very important issue when developing efficient infrastructures. Normally, we consume memory either from heap or from stack. Heap is flexible but slow, stack is fast but limited, for example array size must be known at compile time. The goal is to combine the heap flexibility and stack speed.

Arena allocator is a well known technique. An arbitrary sized memory block is preallocated at some point of execution. A pointer is maintained to track the next allocation position within the block. Subsequent allocations simply move that pointer to the next allocation position. The entire block is deallocated at once eliminating the need of individual deallocations.

Motivation

Consider the following use case: you have a complex data structure that you only need to build once, and won't modify after that except to delete it.

Given this use case, the desired arena features are:

- One arena could service all the objects in the entire data structure. This gives you high locality and no bookkeeping overhead.

- The objects would not hold an allocator (reference to the arena). They only need to be built once.

- The arena would not be in global/static storage.

- The objects would all still have their destructors called.

...as discussed at Boost mailing list.

(I have no intention to write a boost compliant piece of code. I just like the way the motivation is expressed here.)

There are many excellent contributions in the C++ area of memory allocation.

It took me a while before I finally decided to post this article. It's clear that my approach is just a different implementation of ideas and concepts you can find in the articles above. But still it has three notable design decisions:

- Embedding of preallocated buffer inside

Arena - Departure from DTOR calling automation

- Same interface for allocations of any size (unlimited allocation space)

My Arena allocator was developed and tested under VS2008. I don't expect any surprises under other VS compilers. However, as David Mazie`res explains in his article, there are portability issues with the approach presented here. The main issue, as far as I understand, is how a specific compiler implements the operator new[].

Lets see what Visual Studio documentation says:

![operator new[] is out of game](data:image/gif;base64,R0lGODlhAQABAAAAACH5BAEKAAEALAAAAAABAAEAAAICTAEAOw==)

It seems that in Visual Studio, both operator new and operator new[] are identical. If only operator new is overloaded, it will be used for both kinds of allocation:

Thing* thing = new (arena) Thing;

Thing* things = new (arena) Thing [10];

So, my implementation just silently ignores the existence of operator new[].

Although my Arena implementation has passed various unit tests, the code is still fresh and not field-proved. Thus, treat the sources rather as a demonstration of arena allocator concept.

Embedding Objects

Obviously, Arena is intended for fast small allocations. It will be a very powerful property, if first time allocations don't require any heap operations.

Arena should have an allocation buffer as its own member.

MyArena<256> arena;

What we are saying by this line of code is MyArena has internal buffer of 256 bytes.

sizeof(MyArena<256>) == sizeof(Arena) + 256

template<int INIT_CAPACITY>

class MyArena : public Arena

{

char storage_[INIT_CAPACITY];

};

Automation of DTOR invocation comes on behalf of implementation complexity. Worse, syntax of operator new forces implementers to introduce macros like NEW and NEW_ARRAY as described here.

Does such an automation help a professional programmer? I believe, that if one realizes the need of a different allocation scheme, he is not a beginner. He surely can decide by himself if DTOR has to be called. What he can't do, is to synchronize DTOR call with "arena-is-gone" moment.

Arena should provide a "DTOR-registration" mechanism, rather than calling DTOR automatically.

Thing* things = new (arena) Thing [3]; arena.DTOR(things, 3);

Indeed, Arena is optimized for small allocations. But, sometimes you just don't know the right size of preallocated buffer. Moreover, in rare cases, you intentionally may want a big allocation. Besides, Arena is an infrastructure class, we never know the exact usage curve.

Arena should grow when preallocated buffer is out of room.

double* arr = new (arena) double [10000];

MyArena<256> arena;

char* buf = new char[256];

Arena arena(buf, sizeof(buf));

Arena arena(256);

Arena arena;

MyArena<256> arena;

Thing* thing = new (arena) Thing;

MyArena<256> arena;

int* arr = new (arena) int [n];

MyArena<256> arena;

double* arr = new (arena) double [1024*1024];

Destroying Objects

MyArena<256> arena;

Thing* thing = new (arena) Thing;

arena.DTOR(thing);

MyArena<256> arena;

Thing* imgs = new (arena) Thing [n];

arena.DTOR(imgs, n);

Operator New Overloading

Yes, we have to overload it...

Just a quick recall of operator new overloading syntax:

Thing* thing = new Thing;

In this line of code, two things actually happen. Memory for a class Thing is allocated, then CTOR of Thing is called. By overloading operator new we get explicit control over the first action - memory allocation.

We define two overloads of operator new:

void* operator new (size_t size, Arena& arena);

void* operator new (size_t size, Arena* arena);

So the following examples behave as expected:

Arena arena; Thing* thing = new (arena) Thing;

Arena* arena = new Arena; Thing* thing = new (arena) Thing;

The topic of this article is Arena allocator rather then operator new overloading. So, I will not go further into the syntax. You can always refresh the details in documentation or here.

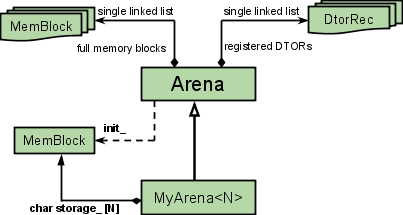

Arena class implements the whole functionality. MyArena<N> is just a container of "preallocated" buffer. Arena allocates memory from cur_ memory block. When cur_ block is full, it is pushed on the front of list of full memory blocks. Arena stores the "preallocated" buffer in init_ member. That block is never freed by arena, since it is created outside of arena. - When DTOR is registered, a templated DTOR callback is instantiated. A new

DtorRec is pushed on the front of DTOR records list.

MyArena<256> arena;

int* iarr = new (arena) int [10];

char* msg = new (arena) char [32];

Thing* thing = new (arena) Thing;

arena.DTOR(thing);

These lines of code lead to memory layout as follows:

More complex allocation scenarios may lead to the following memory layout:

Note, there is a MAX_SMALL_ALLOC constant. If memory allocation request exceeds this limit, allocation is propagated to default operator new - heap. A block header is placed in front of the allocated memory to maintain a linked list of allocated blocks.

Two Boost facilities are in use:

- Intrusive single direction linked list

- Boost asserts

The Arena is completely implemented inside "arena_allocator.h". A set of use cases are implemented in "arena_allocator.cpp". You don't need to link it in order to use Arena allocator.

It took me several years to come to Arena implementation presented here. I wrote STL allocators, object pools, pools of object pools, etc. Each implementation I made left me with the feeling that I was continuously missing some point. And then I realized a simple thing: memory allocation and object lifetime are two orthogonal things. Yes, it's obvious, and is written in every book related to memory allocation techniques. Still, it is sad to say, this knowledge was formal and not related to my real-life-programming for a long time.

Thing* thing = new Thing; ... delete thing;

Once again, the code above deals with two orthogonal things. Managing the memory of a Thing and managing the lifetime of a Thing. A typical design tries to separate these issues: Memory management goes to various allocators, while lifetime management goes to various smart pointers.

Personally, I have never used auto_ptr or any other smart pointer. IMHO, extensive use of auto_ptr means that either the program has poor memory management design, or C++ is not a good choice for that specific program.

Working on infrastructures, I always deal with the same memory usage pattern: objects "live" in batches. Inside a batch, objects are created one-by-one. Later a batch is deleted at once. In most cases, lifetime of such a batch is represented by some "upper" class. My point is: that class should have Arena allocator as its member.

So, the lifetime of all objects of a program form a set of "contexts". Each of these "contexts" should have its own memory allocator - Arena.

Scope is just a special case of a context I talked above. It is a pretty simple context, formed by two curly brackets {}.

void foo()

{

MyArena<256> arena;

int* arr = new (arena) int [10]; Thing* thing = new (arena) Thing; }

Many implementers make an optimized memory allocator a TLS (thread local storage) variable. I also used to do it. However, in favor of context thinking, I realized, that what actually should be made a TLS value is a specific context, rather then allocator itself. If such a context has its own allocator, we get an implicit TLS-based-allocator.

Arena allocator presented here is a kind of "enabling technology" class for me. It serves me both as a memory allocator and as a lifetime manager. It helps to distinguish "collaboration contexts" - sets of related objects. And finally, Arena provides a uniform interface to the memory management throughout the program.

Arena makes my programming easier. That's the way I like it.

History

- 25th November, 2009: Initial post