A high-performance application doesn't happen by accident. It requires a serious engineering effort that starts well before a single line of code is written and continues long after the product is released to the public.

Many organizations still follow the old mindset of either doing no performance testing at all or doing it only if time is left at the end of the release cycle. With more applications moving to complex, cloud-hosted, multi-tenant application servers, it has become imperative for developers to pay attention to the "cost" of their code from the very beginning of the development cycle.

This article showcases how Visual Studio features like PerfTips, Diagnostics Hub and Performance Analyzer can help developers measure the cost of their code in a meaningful manner in the context of developing cloud-focused applications. I’ll start with the conventional approaches to investigating performance-related issues.

Performance Defined

Performance engineering can be defined in many ways. Here is how Wikipedia defines it:

"Performance engineering encompasses the techniques applied during a systems development life cycle to ensure the non-functional requirements for performance (such as throughput, latency, or memory usage) will be met."

This definition is a good start, but performance engineering is a complex domain that can mean different things to different people depending on their background. There is, however, broad consensus that application performance has a direct impact on the revenue and operating costs of a product.

The key aspects of performance engineering revolve around the idea of measurements. Certain performance goals are defined for the application and appropriate measurements are performed from time to time to see if those goals are met. If needed, corrective actions are taken and then the process of taking the same measurements and corrective actions is repeated until the target is achieved.
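The measure, compare, correct loop described above can be sketched in a few lines. This is a minimal illustration written in Python purely for brevity, not the tooling used in this article; the `Goal` type, the metric names and every number are hypothetical, and both metrics are assumed to be "lower is better".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    metric: str   # name of the measurement, e.g. "latency_ms"
    limit: float  # largest acceptable value (lower is better)


def unmet_goals(goals, measurements):
    """Return the goals whose measured value exceeds its limit."""
    return [g for g in goals if measurements[g.metric] > g.limit]


# Hypothetical targets and one hypothetical round of measurements.
goals = [Goal("latency_ms", 500.0), Goal("memory_mb", 300.0)]
measurements = {"latency_ms": 1850.0, "memory_mb": 240.0}

missed = unmet_goals(goals, measurements)
for goal in missed:
    print(f"{goal.metric}: measured {measurements[goal.metric]}, goal {goal.limit}")
```

Each round of corrective work would end with a fresh set of measurements passed through the same check, and the loop stops once no goal is reported as missed.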

Application Performance in the Cloud

Cloud computing has revolutionized the way organizations perform their day-to-day operations, irrespective of their size, business type or geography. Over the years, cloud-based solutions have evolved into platforms such as Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS) and Software-as-a-Service (SaaS). The industry has seen wide adoption of these platforms, not just to reduce the cost of ownership for businesses but also to provide better availability, scalability and reliability to their customers.

However, there are no free lunches. A cloud-based solution has its own fault lines: noisy neighbours and cascading effects are some of the common problems associated with it. For software developers, this means a heavier responsibility to ensure that the code they write scales well against much higher payloads. They need to be highly cognizant of the cost of their code because, with cloud offerings, the scale can change massively, which makes the cost of code even more prominent.

Scope

When it comes to writing performance-centric code, one of the fundamental principles is best described in one of Vance Morrison's old articles, Measure Early and Measure Often. However, many developers either ignore these principles or struggle to put them into practice in their daily workflows using the tools of their choice. When I try to apply Vance's ideas to performance-centric design, I always go back to the following excerpt from Joe Duffy's blog post, The 'premature optimization is evil' myth. I highly recommend reading the full post to get the context behind this quote.

"To be successful at this, you’ll need to know what things cost. If you don’t know what things cost, you’re just flailing in the dark, hoping to get lucky."

This is a great point that needs to be understood and followed rigorously. Putting it into practice, however, has its challenges. When measuring the performance of an application, many developers add log entries to get a "feel" for the code's performance. More sophisticated users may use a Stopwatch-type mechanism in an attempt to get a more precise measurement. Both of these approaches have their share of limitations and constraints.
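For readers who have not used the stopwatch approach, here is a minimal sketch of it. It is written in Python for brevity, with `time.perf_counter` playing the role that a stopwatch class plays elsewhere; the `stopwatch` helper and the `calculate_route` function are hypothetical stand-ins, not code from the sample application.

```python
import time
from contextlib import contextmanager


@contextmanager
def stopwatch(label, timings):
    """Record the wall-clock seconds spent inside the `with` block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start


def calculate_route(steps):
    """Hypothetical stand-in for the work behind a Get Directions click."""
    return sum(i * i for i in range(steps))


timings = {}
with stopwatch("calculate_route", timings):
    calculate_route(200_000)

print(f"calculate_route took {timings['calculate_route'] * 1000:.1f} ms")
```

The sketch also hints at the limitations: the timing code has to be added by hand around every region of interest, and a single wall-clock reading says nothing about CPU or memory consumption.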

There are many effective and powerful tools available that can be used to measure the cost of your application in development, QA or production environments. However, as a software developer, you need to be aware of that cost during your day-to-day development job using the same tools that you use to write and debug the code.

Sample Application Setup

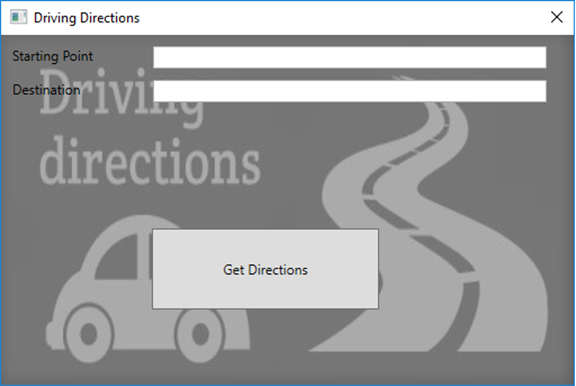

The approach for this article is to showcase a sample application with performance issues, then demonstrate possible ways of getting to the bottom of those issues. The sample application is a simple WPF application that provides driving directions between a starting address and a destination.

You won’t be able to see this in the illustration, but for the sake of this example the problem is that once a user clicks the Get Directions button, it takes a while before the driving directions are displayed in the application. In addition, after clicking this button, it looks like the application consumes a significant amount of resources.

Memory consumption also appears to creep up during the same time frame.

It is important to observe the application's behavior several times to make sure the issue is consistent across a reasonable sample set. It is equally important to note that looking at the CPU and memory usage graphs in Task Manager is not a reliable way to diagnose performance, memory or CPU issues. There can be legitimate reasons why one or more applications are consuming these resources. As a developer, it is imperative to have a good understanding of the cost of the code you write.
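One way to make repeated observation less subjective is to record memory after each run and check for a trend. The following is a minimal sketch in Python using the standard `tracemalloc` module, which tracks only Python-level allocations; `get_directions` and the `_retained` list are hypothetical and simulate an operation that keeps its results alive.

```python
import tracemalloc

_retained = []  # hypothetical leak: results that are kept and never released


def get_directions():
    """Hypothetical stand-in for one click of the Get Directions button."""
    _retained.append(bytearray(100_000))


def memory_after_each_run(operation, runs=5):
    """Run `operation` repeatedly and record traced memory (bytes) after each run."""
    tracemalloc.start()
    try:
        readings = []
        for _ in range(runs):
            operation()
            current, _peak = tracemalloc.get_traced_memory()
            readings.append(current)
        return readings
    finally:
        tracemalloc.stop()


readings = memory_after_each_run(get_directions)
grows_every_run = all(later > earlier for earlier, later in zip(readings, readings[1:]))
print(readings, "steady growth" if grows_every_run else "no steady growth")
```

Growth on every run is a hint worth investigating, not proof of a leak: caches and lazily initialized data can also grow legitimately before levelling off.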

Conventional Approaches to Troubleshooting

There are many commercial and free tools that can help troubleshoot the application and find the root cause of the memory and CPU consumption. WinDbg, DebugDiag, ProcDump and PerfView are a few free tools from Microsoft that are generally well regarded because they can be used flexibly during development, testing and staging, or even when investigating issues in the field. Not all of these tools are used in this section, but the ones that are should give a sense of what they can offer.

PerfView

Let’s first review the traces collected by the PerfView tool. This tool can be downloaded from Microsoft's GitHub repository. It provides different mechanisms for analyzing CPU-related and memory-related issues.

This figure shows the application traces collected and analyzed by PerfView. The CallTree view shows how CPU consumption is distributed across threads. As you can see, the first thread (Id 3204) is responsible for 40% of the application's CPU consumption. This view also shows the other threads (Ids 10484, 932, 10140) and their CPU consumption.

In this figure, I drilled down into the first thread (Id 3204). It shows that the vast majority of the 40% CPU usage by this thread is consumed by the application’s RouteViewer.DisplayDirection and RouteCalculator.CalculateRoute methods.

Similarly, this figure highlights two of the other threads (Ids 10484, 932). It also shows significant CPU usage by the application’s RouteCalculator.XmlDataProcessor methods.

It is quite clear that PerfView can help pinpoint the section of code responsible for high CPU usage.
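To build some intuition for the per-thread and per-method percentages in a view like this, here is a simplified model of how periodic CPU samples can be turned into shares. It is a sketch in Python with made-up sample data and a hypothetical `cpu_share_by` helper; it does not describe how PerfView itself is implemented.

```python
from collections import Counter


def cpu_share_by(samples, key_index):
    """Return {key: percent of samples}, where each sample is (thread_id, method)."""
    counts = Counter(sample[key_index] for sample in samples)
    total = sum(counts.values())
    return {key: 100.0 * n / total for key, n in counts.most_common()}


# Made-up samples: each tuple is one periodic snapshot of (thread id, running method).
samples = (
    [(1, "DisplayDirection")] * 3
    + [(1, "CalculateRoute")] * 1
    + [(2, "XmlDataProcessor")] * 4
    + [(3, "Idle")] * 2
)

by_thread = cpu_share_by(samples, 0)
by_method = cpu_share_by(samples, 1)
print(by_thread)
print(by_method)
```

The more samples that land in a thread or method, the larger its reported share, which is why drilling down from a thread to its methods narrows the search to the code that was actually running.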

DebugDiag

Let’s turn our attention to figuring out which objects are responsible for the high memory consumption. The following figure shows the DebugDiag report that was generated from memory snapshots of the same process.

This report shows that the overall GC heap size is approximately 2 GB. It also lists the most expensive objects in terms of memory consumption. XmlElement is at the top of that list, responsible for about 80% of the memory consumption. This gives developers a clear pointer to the area of the application that could be responsible for the high memory usage.
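The kind of summary such a report presents, memory grouped by object type and sorted by size, can be approximated in a few lines. The following is a rough sketch in Python over a hypothetical list of objects; it uses shallow sizes from `sys.getsizeof` only and is not how DebugDiag computes its figures.

```python
import sys
from collections import defaultdict


def bytes_by_type(objects):
    """Sum the shallow size of each object under its type name, largest first."""
    totals = defaultdict(int)
    for obj in objects:
        totals[type(obj).__name__] += sys.getsizeof(obj)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical "heap": a few large buffers and some small objects.
heap = [bytearray(50_000) for _ in range(4)] + ["route", "map", 42, 3.14]

report = bytes_by_type(heap)
total = sum(size for _, size in report)
for type_name, size in report:
    print(f"{type_name:<10} {size:>8} bytes  {100.0 * size / total:5.1f}%")
```

A type that dominates the list, as XmlElement does in the report above, is usually the first place to look.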

Conclusion

Given the firepower of PerfView and DebugDiag, we have only scratched the surface of what these tools can do to find the root cause of the problem with the sample application. They can be used not only to retrieve more detailed information, but also to troubleshoot far more complex problems.

However, most developers don’t use tools such as PerfView or DebugDiag in their day-to-day work. They instead use Visual Studio for writing, compiling, troubleshooting and testing their code. That poses a dilemma for developers: how to tackle complex issues, take the required measurements and perform corrective actions as part of their day-to-day work, without leaving the comfort of Visual Studio.

In a future article, we will use Visual Studio to find the source of the problem much earlier in the development cycle.

History

- 11th June, 2019: Initial version