MemCache++ is a lightweight, type-safe, simple-to-use and full-featured Memcache client.

It was developed by Dean Michael Berris, a C++ fanatic who loves working on network libraries (cpp-netlib.github.com) and currently works at Google Australia. He is also part of the Google delegation to the ISO C++ Committee. You can read more of his published works at deanberris.github.com and his C++ blog at www.cplusplus-soup.com.

Studying well-designed libraries is a good way to master C++ concepts. The goal of this article is to explore some of the memcache++ design choices that make it easy to understand and use.

Let’s explore the memcache++ design by analyzing it with CppDepend.

Namespace Modularity

Namespaces are a good way to modularize an application. Unfortunately, they are underused in C++ projects; a look at a few random open-source C++ projects confirms this. Moreover, when we search for a definition of a C++ namespace, the common one looks like this:

"A namespace defines a new scope. Members of a namespace are said to have namespace scope. They provide a way to avoid name collisions."

Collision avoidance is often presented as the primary motivation rather than modularity, unlike in C# and Java, where namespaces are more often cited as a way to modularize an application.

However, some modern C++ libraries such as Boost use namespaces to structure the library well, and encourage developers to use them.

What about the namespace modularity of memcache++?

Here’s the dependency graph between memcache++ namespaces:

Namespaces are used for two main reasons:

- To modularize the library.

- To hide implementation details, as with the “memcache::detail” namespace. This approach is useful for telling library users that they don’t need to use the types inside this namespace directly. In C#, the “internal” keyword does this job, but in C++ there’s no way to hide public types from the library user.
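A minimal sketch of the “detail” convention follows. The namespace mylib and both functions are hypothetical (not memcache++ code); the key rule they check (non-empty, at most 250 bytes, no whitespace or control characters) follows the memcached text protocol. The helper remains reachable as mylib::detail::is_valid_key_char, which is exactly the limitation described above: the namespace only documents intent.

```cpp
#include <cctype>
#include <string>

namespace mylib {

// Everything in mylib::detail is an implementation detail. C++ cannot hide it
// from users; the namespace name only signals "do not use directly".
namespace detail {

// Hypothetical helper: memcached keys must not contain whitespace or control characters.
inline bool is_valid_key_char(char c) {
    const unsigned char u = static_cast<unsigned char>(c);
    return !std::isspace(u) && !std::iscntrl(u);
}

}  // namespace detail

// Public API: users call this and never need to name mylib::detail themselves.
inline bool is_valid_key(const std::string& key) {
    if (key.empty() || key.size() > 250) return false;  // memcached key length limit
    for (char c : key) {
        if (!detail::is_valid_key_char(c)) return false;
    }
    return true;
}

}  // namespace mylib
```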

memcache++ exploits the namespace concept gracefully; however, a dependency cycle exists between memcache and memcache::detail. To remove it, we first look for the types in memcache that are used by memcache::detail.

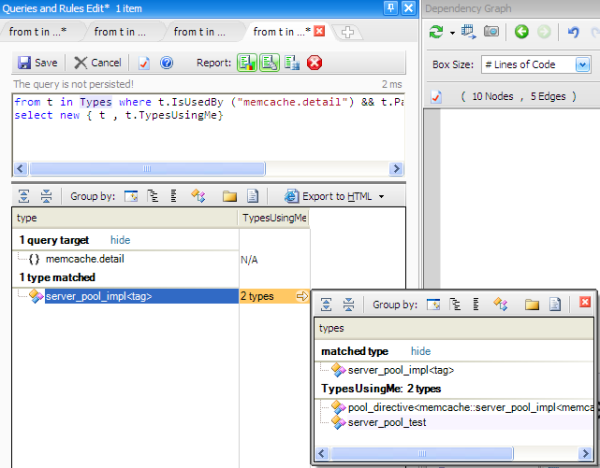

To do so, we can execute the following CQLinq query:

```
from t in Types where t.IsUsedBy("memcache.detail") && t.ParentNamespace.Name=="memcache"
select new { t,t.TypesUsingMe }
```

Here’s the result of the query:

To remove the dependency cycle, we can move pool_directive and server_pool_test from memcache into memcache::detail.
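The layering after such a move can be sketched as below. The namespace mc and the types server_info and server_pool are hypothetical stand-ins, not the actual memcache++ types: the point is only that mc::detail refers to its own declarations, so the dependency between the two namespaces runs in a single direction.

```cpp
#include <cstddef>
#include <string>
#include <vector>

namespace mc {
namespace detail {

// Hypothetical type that used to live in mc and was used by mc::detail.
// Moving it here removes the detail -> mc edge of the cycle.
struct server_info {
    std::string host;
    unsigned short port;
};

// detail code now only uses its own types (and the standard library).
inline std::size_t count_servers_on_port(const std::vector<server_info>& servers,
                                         unsigned short port) {
    std::size_t n = 0;
    for (const auto& s : servers)
        if (s.port == port) ++n;
    return n;
}

}  // namespace detail

// Public code may keep using detail types: this edge is now one-way.
struct server_pool {
    std::vector<detail::server_info> servers;

    std::size_t on_default_port() const {
        return detail::count_servers_on_port(servers, 11211);  // memcached default port
    }
};

}  // namespace mc
```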

Generic or OOP?

In the C++ world, two schools are very popular: object-oriented programming and generic programming. Each approach has its advocates, and this article explains the tension between them.

Which approach does memcache++ use?

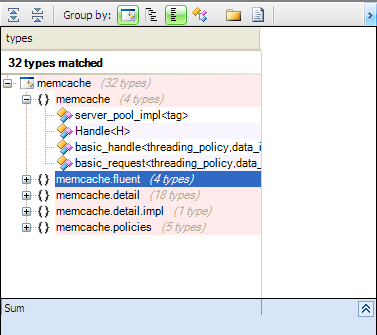

To answer this question, let’s first search for generic types:

```
from t in Types where t.IsGeneric && !t.IsThirdParty select t
```

What about the non-generic ones?

```
from t in Types where !t.IsGeneric && !t.IsGlobal && !t.IsNested && !t.IsEnumeration && t.ParentProject.Name=="memcache"
select t
```

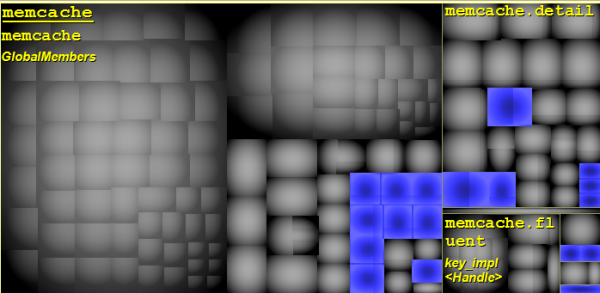

Almost all non-generic types are exception classes. To get a better idea of how much of the library they represent, the treemap view is very useful.

The blue rectangles represent the result of the CQLinq query, and as we can see, non-generic types make up only a small part of the library.

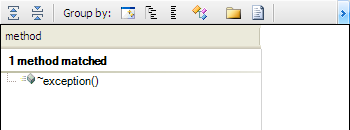

Finally, we can search for generic methods:

```
from m in Methods where m.IsGeneric && !m.IsThirdParty select m
```

As we can observe, memcache++ mostly uses generics, but that alone is not enough to confirm that it follows the C++ generic approach. A good indicator is the use of inheritance and dynamic polymorphism: OOP relies on them heavily, whereas the generic approach uses inheritance sparingly and avoids dynamic polymorphism.
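To make this indicator concrete, here is a minimal sketch, with hypothetical names unrelated to memcache++, of the same behavior written both ways: once through a virtual function chosen at run time, and once through a template parameter resolved at compile time. The static_assert lines show that only the OOP version carries a vtable.

```cpp
#include <string>
#include <type_traits>

// OOP style: the behavior is selected at run time through a virtual call.
struct serializer {
    virtual ~serializer() = default;
    virtual std::string serialize(int value) const = 0;
};

struct decimal_serializer : serializer {
    std::string serialize(int value) const override { return std::to_string(value); }
};

inline std::string store_oop(const serializer& s, int value) {
    return s.serialize(value);  // dynamic dispatch through the vtable
}

// Generic style: no base class, no virtual function; any type with a
// matching serialize member works.
struct decimal_format {
    std::string serialize(int value) const { return std::to_string(value); }
};

template <class Format>
std::string store_generic(const Format& f, int value) {
    return f.serialize(value);  // bound statically when store_generic<Format> is instantiated
}

static_assert(std::is_polymorphic<serializer>::value, "OOP version needs a vtable");
static_assert(!std::is_polymorphic<decimal_format>::value, "generic version does not");
```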

Let’s search for types that have base classes:

```
from t in Types where t.BaseClasses.Count()>0 && !t.IsThirdParty && t.ParentProject.Name=="memcache"
select t
```

It’s normal for exception classes to use inheritance, but what about the other classes? Do they use inheritance for dynamic polymorphism? To answer this question, let’s search for all virtual methods:

```
from m in Methods where m.IsVirtual select m
```

Only the exception class has a virtual method; for the few other classes that use inheritance, the main motivation is to reuse the code of the base class.

If dynamic polymorphism is not used, what solution is adopted when we need different behavior for specific classes?

The common solution in the generic approach is to use policies. Here’s a short definition from Wikipedia:

"The central idiom in policy-based design is a class template (called the host class), taking several type parameters as input, which are instantiated with types selected by the user (called policy classes), each implementing a particular implicit interface (called a policy)."
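As an illustration of that definition, here is a minimal policy-based host class. The names client, std_hash_policy and first_server_policy are hypothetical; the hash policy echoes the idea of a hash_policy parameter, but this is not memcache++’s implementation. Both policies satisfy the same implicit interface (a static pick function), and neither inherits from a common base.

```cpp
#include <cstddef>
#include <functional>
#include <string>

// Policy classes: each implements the same implicit interface, a static
// function mapping a key to a server index in [0, server_count).
struct std_hash_policy {
    static std::size_t pick(const std::string& key, std::size_t server_count) {
        return std::hash<std::string>{}(key) % server_count;
    }
};

struct first_server_policy {  // deterministic choice, e.g. for tests
    static std::size_t pick(const std::string&, std::size_t) { return 0; }
};

// Host class: the policy is a template parameter with a default.
// Assumption for this sketch: server_count is greater than zero.
template <class HashPolicy = std_hash_policy>
class client {
public:
    explicit client(std::size_t server_count) : server_count_(server_count) {}

    std::size_t server_for(const std::string& key) const {
        return HashPolicy::pick(key, server_count_);
    }

private:
    std::size_t server_count_;
};
```

client<> uses the default policy, while client<first_server_policy> swaps in a different behavior without any change to client itself and without a virtual call.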

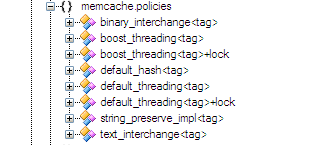

memcache++ has many policies inside the memcache::policies namespace.

Let’s look at an example from memcache++ to better understand policy-based design.

memcache++ uses the basic_handle type to implement all the commands, such as add, set, get and delete, on the cache. This class is declared as follows:

```cpp
template <
    class threading_policy = policies::default_threading,
    class data_interchange_policy = policies::binary_interchange,
    class hash_policy = policies::default_hash
>
struct basic_handle {
    // ... commands such as add, set, get and delete
};
```

memcache++ is thread-safe, so in a multithreaded context it has to manage synchronization. By default, threading_policy is “default_threading”, which adds no special treatment; for multithreaded use, the policy to choose is “boost_threading”.

Let’s take a look at the implementation of the connect method.

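```cpp
void connect(boost::uint64_t timeout = MEMCACHE_TIMEOUT) {
    // The policy's lock guards the rest of the function;
    // with default_threading its constructor does nothing.
    typename threading_policy::lock scoped_lock(*this);
    // Connect every server in the pool, using the given timeout.
    for_each(servers.begin(), servers.end(), connect_impl(service_, timeout));
}
```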

If threading_policy is “default_threading”, the first line has no effect because the lock constructor does nothing; if it is “boost_threading”, the lock uses Boost to synchronize between threads.

Using policies gives us more flexibility to implement different behaviors, and they are not very difficult to understand and use.
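The same mechanism can be sketched with the standard library. This is an analogue under stated assumptions, not memcache++ code: std::mutex and std::lock_guard stand in for the Boost.Thread primitives, and the names no_threading, std_threading and handle are hypothetical. Unlike the connect example, the lock here is constructed from the host’s mutex rather than from *this.

```cpp
#include <cstddef>
#include <mutex>
#include <string>
#include <vector>

// Single-threaded policy: an empty "mutex" and a lock whose constructor does nothing.
struct no_threading {
    struct mutex_type {};
    struct lock {
        explicit lock(mutex_type&) {}
    };
};

// Multithreaded policy: lock_guard locks in its constructor and unlocks in its destructor.
struct std_threading {
    using mutex_type = std::mutex;
    using lock = std::lock_guard<std::mutex>;
};

template <class threading_policy = no_threading>
class handle {
public:
    void add_server(const std::string& name) {
        typename threading_policy::lock scoped_lock(mutex_);  // no-op or real lock
        servers_.push_back(name);
    }

    std::size_t server_count() const {
        typename threading_policy::lock scoped_lock(mutex_);
        return servers_.size();
    }

private:
    mutable typename threading_policy::mutex_type mutex_;  // mutable: locked in const members
    std::vector<std::string> servers_;
};
```

handle<> pays nothing for locking, while handle<std_threading> serializes add_server and server_count across threads; the calling code is identical in both cases.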

Generic Functors

memcache++ implements many commands to interact with the cache, such as add, get, set and delete. The Command pattern is a good fit for such a case.

memcache++ implements this pattern using generic functors. Here’s a CQLinq query that lists all the functors:

```
from t in Types where t.Methods.Where(a=>a.IsOperator && a.Name.Contains("()")).Count()>0
select t
```

A functor encapsulates a function call together with its state; it can be used to defer a call until later and to act as a callback. Generic functors add more flexibility to ordinary functors.
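A minimal sketch of commands as generic functors, under stated assumptions: set_command, delete_command, make_set and the map-based fake_cache are hypothetical and do not talk to a memcached server. Each functor stores its arguments when built and does the work only when invoked, so commands of different types can be queued in std::function objects and run later.

```cpp
#include <functional>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical in-memory stand-in for a cache server.
using fake_cache = std::map<std::string, std::string>;

// Generic functor: captures the command's state (key and value) at construction
// and performs the command only when called.
template <class T>
struct set_command {
    std::string key;
    T value;

    void operator()(fake_cache& cache) const {
        std::ostringstream out;
        out << value;  // T only needs to be streamable
        cache[key] = out.str();
    }
};

struct delete_command {
    std::string key;
    void operator()(fake_cache& cache) const { cache.erase(key); }
};

// Deduces T from the value: make_set("k", 42) yields a set_command<int>.
template <class T>
set_command<T> make_set(std::string key, T value) {
    return set_command<T>{std::move(key), std::move(value)};
}

// Deferred execution: queued commands run in order, like callbacks.
inline void run_all(const std::vector<std::function<void(fake_cache&)>>& queue,
                    fake_cache& cache) {
    for (const auto& cmd : queue) cmd(cache);
}
```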

Interface exposed

How a library exposes its capabilities is very important: it affects both its flexibility and its ease of use.

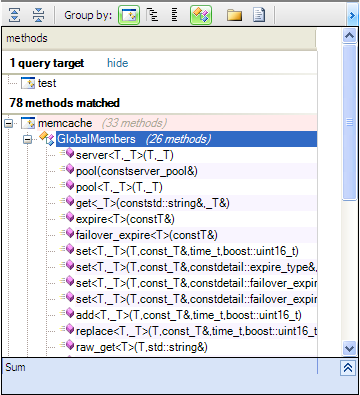

To find out, let’s look at the communication between the test project and the memcache++ library.

```
from m in Methods where m.IsUsedBy ("test")
select m
```

The test project mainly uses generic methods to invoke memcache++ functionality. What are the benefits of using template methods? Why not use classes or functions?

In the OOP approach, the library interface is composed of classes and functions, and well-designed libraries use abstract classes as contracts to enforce low coupling. This solution is very interesting, but it has some drawbacks:

- The interface becomes more complicated and may change many times. To explain this, take the add method exposed by memcache++ as an example: without the generic approach, many methods must be added, one for each specific type (int, double, string,…).

However, the generic add method is declared as add<T>, where T is the type. In this case only one method is needed, and even if we want to support another type, no change is required in the interface.

- The interface is less flexible. For example, suppose we expose a method like this:

calculate(IAlgo* algo)

The user must pass an object of a class inheriting from IAlgo. If instead we use generics and declare it as calculate<T>, the user only has to provide a class with the needed methods, without necessarily inheriting from IAlgo; and if IAlgo changes to IAlgo2 because some methods are added, users of the library will not be impacted.
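Both points can be sketched in a few lines. tiny_cache, doubler and the run member are hypothetical; add follows memcached’s add semantics (store only if the key is absent), and calculate is the generic counterpart of calculate(IAlgo* algo) from the text. A single add<T> accepts any streamable type, and doubler is accepted by calculate without inheriting from any interface.

```cpp
#include <map>
#include <sstream>
#include <string>

// One generic add<T> instead of add_int, add_double, add_string, ...
// Supporting a new streamable type requires no change to this interface.
class tiny_cache {
public:
    template <class T>
    bool add(const std::string& key, const T& value) {
        if (data_.count(key)) return false;  // "add" only stores absent keys
        std::ostringstream out;
        out << value;
        data_[key] = out.str();
        return true;
    }

    std::string raw(const std::string& key) const {
        auto it = data_.find(key);
        return it == data_.end() ? std::string() : it->second;
    }

private:
    std::map<std::string, std::string> data_;
};

// Generic replacement for calculate(IAlgo* algo): Algo only needs a
// run(int) member; no common base class is required.
template <class Algo>
int calculate(const Algo& algo, int input) {
    return algo.run(input);
}

struct doubler {  // does not inherit from any IAlgo
    int run(int x) const { return 2 * x; }
};
```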

Ideally, the interface exposed by a library should not have any breaking changes, and users should not be impacted when changes are introduced in the library. The generic approach is the most suitable for such constraints because it is very tolerant of change.

External API used

Here are the external types used by memcache++:

memcache++ mostly uses Boost and the STL to achieve its needs. Here are some of the Boost features used:

- multithreading

- algorithm

- spirit

- asio

- unit testing

and, of course, the well-known shared_ptr for the RAII idiom.

For the STL, the containers are used the most.
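As a reminder of what RAII with shared_ptr provides, here is a minimal sketch using std::shared_ptr, the standard counterpart of boost::shared_ptr. The connection type and its counter are hypothetical, used only to observe when the resource is released.

```cpp
#include <memory>

// Hypothetical resource; the counter lets callers observe acquisition and release.
struct connection {
    int* open_count;
    explicit connection(int* counter) : open_count(counter) { ++*open_count; }
    ~connection() { --*open_count; }  // release happens in the destructor
};

// Shared ownership: the connection is destroyed when the last shared_ptr
// owning it goes away, including during stack unwinding after an exception.
inline std::shared_ptr<connection> open_connection(int& counter) {
    return std::make_shared<connection>(&counter);
}
```

The connection is released exactly once, when its last owner goes out of scope, without any explicit cleanup call.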

So, finally, what are the advantages of using the generic approach?

- The first indicator of the efficiency of the memcache++ design choices is the number of lines of code (LOC): only around 600. This result is due to two main reasons:

– using the generic approach removes boilerplate code.

– leveraging the richness of Boost and the STL.

- The second strength is its flexibility: any change impacts only a minimal portion of the code.

Drawbacks of this kind of approach:

Many developers find the generic approach very complicated, and the code can become very difficult to understand.

What should we do: use the generic approach or not?

Even if it is very difficult to design an application with this approach, it is much easier to use libraries built with it, such as the STL or Boost.

So even if we want to avoid the risk of designing our application with this modern approach, it is a good idea to use libraries like the STL or Boost.

Filed under:

CodeProject