Introduction

OLAF (Online Automated Forensics) is an automated digital forensics tool which uses computer vision and machine learning to automatically classify images and documents a user downloads while browsing the internet.

OLAF monitors browsers and well-known user folders for file-system activity and uses a two-stage detection process. The first stage runs local image-analysis algorithms to determine whether a downloaded image is text-rich or is likely a photo containing human faces. Photographic images are then sent to Azure Cognitive Services' Computer Vision API, which analyzes and classifies the content, including whether the photo may contain sexual or adult content. For images that are not photos, OLAF runs OCR on the image to extract any text and sends it to Azure Cognitive Services' Text Analytics API to extract information such as the entities mentioned. OLAF captures the precise date and time an image artifact was created on a PC, together with the artifact itself and attributes describing its content, which can then be used as forensic evidence in an investigation into violations of computer use policies.

For PCs in places like libraries or schools or internet cafes, OLAF can help enforce an organization's computer use policies on intellectual property and piracy, adult or sexual images, hate speech or incitements to violence and terrorism, and other disallowed content downloaded by users. For businesses, OLAF can detect the infiltration of documents which may contain the intellectual property of competitors and put the organization in legal jeopardy.

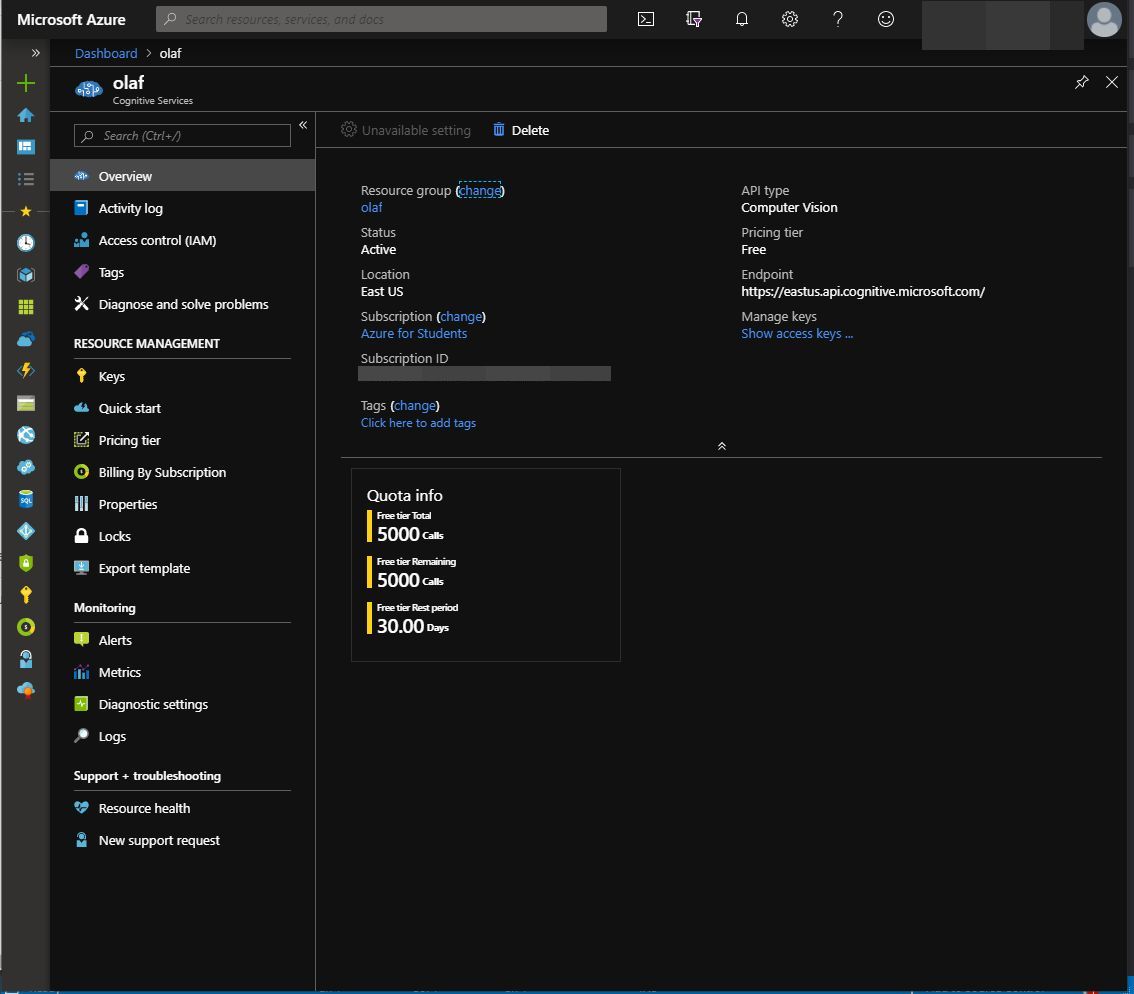

In this article, I will describe some of the steps and challenges in building a real-world image classification application in .NET. I will talk about using two popular open-source machine learning libraries - Tesseract OCR and Accord.NET - as well as a cloud-based machine learning service - the Computer Vision API from Azure Cognitive Services - to analyze and classify images. I won't say much here about image classification algorithms or setting up Azure Cognitive Services accounts. Instead, I will focus on the process of putting together different image classification and machine learning software components, and on the design decisions and tradeoffs that arise along the way. Setting up an Azure Cognitive Services resource is pretty simple and can be done in about five minutes from the Azure Portal.

Like many Azure services, the Computer Vision API has a free tier of usage so anyone with a valid Azure subscription including the free Azure for Students subscription can try it out or run an application like OLAF.

Background

Of course, technological issues aside, OLAF can seem to be just a sophisticated way of spying on a user. Whether on a public PC or not, no one likes the idea of a program looking over their shoulder at what they view online. But as far as technology is concerned, the most reliable position is that if something can be done then someone is going to do it, so worrying about the misuse of the things you create is largely pointless. It is better to learn how something like user-activity monitoring can be done, and publishing it as open source means other people can study it and verify that the information being collected about the user's activity is at least being used in a legitimate way.

You can count at least ten commercial user monitoring applications available for purchase today and the number of commercial companies making proprietary user monitoring software is only growing. Open-source user monitoring software like OLAF can provide a somewhat more ethical and secure way of user monitoring where such monitoring does have a legitimate purpose and value.

Overview

OLAF is a .NET application written in C# targeting .NET Framework 4.5+. Although envisioned as a desktop Windows application, nothing in the core libraries is desktop- or Windows-specific, and OLAF could conceivably run on other desktop platforms like Linux or on mobile phones using Xamarin.

OLAF runs in the background monitoring the user's browser and the files he or she is downloading to specific folders.

When a file download of an image is detected, OLAF runs different image classification algorithms on the artifact. First, it determines if the image likely contains text using the Tesseract OCR library. Then it determines if the image is likely to contain a human face using the Viola-Jones object detection framework. If the image is likely a photo with human faces, OLAF sends the image to the Azure Computer Vision API for more advanced classification. In this way, we can avoid calls to the Azure API until it is absolutely necessary.

The video above shows a short demonstration of the basic features of OLAF. First, I navigate to and download an image of a sports car to my downloads folder. The image classification part of OLAF runs locally and determines that the image does not contain any human faces and the processing pipeline ends.

05:51:48<03> [DBG] Read 33935 bytes from "C:\\Users\\Allister\\Downloads\\hqdefault.jpg".

05:51:48<03> [DBG] Wrote 33935 bytes to "c:\\Projects\\OLAF\\data\\artifacts\\20190628_UserDownloads_636973760617841436\\3_hqdefault.jpg".

05:51:48<08> [DBG] "FileImages" consuming message 3.

05:51:48<08> [INF] Extracting image from artifact 3 started...

05:51:48<08> [DBG] Extracted image from file "3_hqdefault.jpg" with dimensions: 480x360 pixel format: Format24bppRgb hres: 96 yres: 96.

05:51:48<08> [INF] Extracting image from artifact 3 "completed" in 17.4 ms

05:51:48<08> [INF] "FileImages" added artifact id 4 of type "OLAF.ImageArtifact" from artifact 3.

05:51:48<09> [DBG] "TesseractOCR" consuming message 4.

05:51:48<08> [DBG] Pipeline ending for artifact 3.

05:51:48<09> [DBG] Pix has width: 480 height: 360 depth: 32 xres: 0 yres: 300.

05:51:48<09> [INF] Tesseract OCR (fast) started...

05:51:48<09> [INF] Artifact id 4 is likely a photo or non-text image.

05:51:49<09> [INF] Tesseract OCR (fast) "completed" in 171.4 ms

05:51:49<10> [DBG] "ViolaJonesFaceDetector" consuming message 4.

05:51:49<10> [INF] Viola-Jones face detection on image artifact 4. started...

05:51:49<10> [INF] Found 0 candidate face object(s) in artifact 4.

05:51:49<10> [INF] Viola-Jones face detection on image artifact 4. "completed" in 96.9 ms

05:51:49<11> [DBG] "MSComputerVision" consuming message 4.

05:51:49<11> [DBG] Not calling MS Computer Vision API for image artifact 4 without face object candidates.

When I download an image of celebrity Rashida Jones, the image classification algorithm detects that the downloaded image has a human face and then sends the image to the Azure Computer Vision API which analyzes the image and adds tags with all the objects it detected in the image including a score on whether it thinks the image is adult content.

05:52:13<03> [DBG] Waiting a bit for file to complete write...

05:52:13<03> [DBG] Read 62726 bytes from "C:\\Users\\Allister\\Downloads\\rs_1024x759-171120092206-1024.Rashida-Jones-Must-Do-Monday.jl.112017.jpg".

05:52:13<03> [DBG] Wrote 62726 bytes to "c:\\Projects\\OLAF\\data\\artifacts\\20190628_UserDownloads_636973760617841436\\5_rs_1024x759-171120092206-1024.Rashida-Jones-Must-Do-Monday.jl.112017.jpg".

05:52:13<08> [DBG] "FileImages" consuming message 5.

05:52:13<08> [INF] Extracting image from artifact 5 started...

05:52:13<08> [DBG] Extracted image from file "5_rs_1024x759-171120092206-1024.Rashida-Jones-Must-Do-Monday.jl.112017.jpg" with dimensions: 1024x759 pixel format: Format24bppRgb hres: 72 yres: 72.

05:52:13<08> [INF] Extracting image from artifact 5 "completed" in 34.4 ms

05:52:14<08> [INF] "FileImages" added artifact id 6 of type "OLAF.ImageArtifact" from artifact 5.

05:52:14<08> [DBG] Pipeline ending for artifact 5.

05:52:14<09> [DBG] "TesseractOCR" consuming message 6.

05:52:14<09> [DBG] Pix has width: 1024 height: 759 depth: 32 xres: 0 yres: 300.

05:52:14<09> [INF] Tesseract OCR (fast) started...

05:52:14<09> [INF] Artifact id 6 is likely a photo or non-text image.

05:52:14<09> [INF] Tesseract OCR (fast) "completed" in 99.8 ms

05:52:14<10> [DBG] "ViolaJonesFaceDetector" consuming message 6.

05:52:14<10> [INF] Viola-Jones face detection on image artifact 6. started...

05:52:14<10> [INF] Found 1 candidate face object(s) in artifact 6.

05:52:14<10> [INF] Viola-Jones face detection on image artifact 6. "completed" in 173.6 ms

05:52:14<11> [DBG] "MSComputerVision" consuming message 6.

05:52:14<11> [INF] Artifact 6 is likely a photo with faces detected; analyzing using MS Computer Vision API.

05:52:14<11> [INF] Analyze image using MS Computer Vision API. started...

05:52:16<11> [INF] Analyze image using MS Computer Vision API. "completed" in 2058.9 ms

05:52:16<11> [INF] Image categories: ["people_portrait/0.7265625"]

05:52:16<11> [INF] Image properties: Adult: False/0.00276160705834627 Racy: False/0.00515600480139256 Description:["indoor", "person", "sitting", "holding", "woman", "box", "looking", "front", "man", "laptop", "table", "smiling", "shirt", "white", "large", "computer", "yellow", "young", "food", "refrigerator", "cat", "standing", "sign", "kitchen", "room", "bed"]

05:52:16<12> [DBG] "AzureStorageBlobUpload" consuming message 6.

05:52:16<12> [INF] Artifact id 6 not tagged for preservation.

05:52:16<12> [DBG] Pipeline ending for artifact 6.

Azure Computer Vision is able to detect both 'racy' images and images which contain nudity.

23:35:11<03> [DBG] Waiting a bit for file to complete write...

23:35:11<03> [DBG] Read 7247 bytes from "C:\\Users\\Allister\\Downloads\\download (1).jpg".

23:35:11<03> [DBG] Wrote 7247 bytes to "c:\\Projects\\OLAF\\data\\artifacts\\20190628_UserDownloads_636973760617841436\\1_download (1).jpg".

23:35:11<08> [DBG] "FileImages" consuming message 1.

23:35:11<08> [INF] Extracting image from artifact 1 started...

23:35:11<08> [DBG] Extracted image from file "1_download (1).jpg" with dimensions: 194x259 pixel format: Format24bppRgb hres: 96 yres: 96.

23:35:11<08> [INF] Extracting image from artifact 1 "completed" in 14.6 ms

23:35:11<09> [DBG] "TesseractOCR" consuming message 2.

23:35:11<08> [INF] "FileImages" added artifact id 2 of type "OLAF.ImageArtifact" from artifact 1.

23:35:11<08> [DBG] Pipeline ending for artifact 1.

23:35:11<09> [DBG] Pix has width: 194 height: 259 depth: 32 xres: 0 yres: 300.

23:35:11<09> [INF] Tesseract OCR (fast) started...

23:35:11<09> [INF] Artifact id 2 is likely a photo or non-text image.

23:35:11<09> [INF] Tesseract OCR (fast) "completed" in 176.5 ms

23:35:11<10> [DBG] "ViolaJonesFaceDetector" consuming message 2.

23:35:11<10> [INF] Viola-Jones face detection on image artifact 2. started...

23:35:11<10> [INF] Found 1 candidate face object(s) in artifact 2.

23:35:11<10> [INF] Viola-Jones face detection on image artifact 2. "completed" in 86.0 ms

23:35:11<11> [DBG] "MSComputerVision" consuming message 2.

23:35:11<11> [INF] Artifact 2 is likely a photo with faces detected; analyzing using MS Computer Vision API.

23:35:11<11> [INF] Analyze image using MS Computer Vision API. started...

23:35:14<11> [INF] Analyze image using MS Computer Vision API. "completed" in 2456.2 ms

23:35:14<11> [INF] Image categories: ["people_/0.99609375"]

23:35:14<11> [INF] Image properties: Adult: False/0.0119723649695516 Racy: True/0.961882710456848 Description:["clothing", "person", "woman", "posing", "young", "smiling", "underwear", "carrying", "holding", "dress", "standing", "white", "water", "board", "suitcase", "wedding", "bed"]

23:35:14<12> [DBG] "AzureStorageBlobUpload" consuming message 2.

Design

The core internal design centers around an asynchronous messaging queue that is used by different parts of the application to communicate in an efficient way without blocking a particular thread. Since OLAF is designed to run continuously in the background and has to perform some potentially computationally intensive operations, performance is a key consideration of the overall design.
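
OLAF's own queue implementation is not shown in this article, but the general pattern can be sketched with the standard BlockingCollection class. The SketchMessage and QueueSketch names below are hypothetical and exist only for this illustration: a producer adds messages without waiting for them to be processed, while a consumer drains the queue on a background task.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical message type; OLAF's real Message class is not shown here.
public class SketchMessage
{
    public SketchMessage(long id, string text) { Id = id; Text = text; }
    public long Id { get; }
    public string Text { get; }
}

public static class QueueSketch
{
    // Enqueues messageCount messages and returns the ids in the order
    // the single background consumer processed them.
    public static List<long> Run(int messageCount)
    {
        var consumed = new List<long>();
        using (var queue = new BlockingCollection<SketchMessage>())
        {
            Task consumer = Task.Run(() =>
            {
                // Blocks until a message arrives; the loop ends once
                // CompleteAdding has been called and the queue is empty.
                foreach (SketchMessage m in queue.GetConsumingEnumerable())
                {
                    consumed.Add(m.Id);
                }
            });

            // The producer only adds to the queue and never waits on processing.
            for (int i = 1; i <= messageCount; i++)
            {
                queue.Add(new SketchMessage(i, "file created"));
            }

            queue.CompleteAdding();
            consumer.Wait();
        }
        return consumed;
    }
}
```

With one consumer and the default first-in, first-out backing store, messages are handled in the order they were added. A design with several consumers per message type would need to decide how ordering and hand-off between components work.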

The app is structured as a pipeline where artifacts generated by user activity are processed through OCR, image classification and other services. Each component of the pipeline is a C# class which inherits from the base OLAFApi class.

public abstract class OLAFApi<TApi, TMessage> where TMessage : Message

Each component declares the kind of queue message it is interested in processing or sending. For instance, the DirectoryChangesMonitor component is declared as:

public class DirectoryChangesMonitor : FileSystemMonitor<FileSystemActivity,

FileSystemChangeMessage, FileArtifact>

This component listens to FileSystemChangeMessage queue messages and after preserving the file artifact places a FileArtifact message on the queue indicating that a file artifact is ready for processing by the other pipeline components.

Pipeline components are built on a set of base classes declared in the OLAF.Base project. There are 3 major categories of components: Activity Detectors, Monitors, and Services.

Activity Detectors

Activity detectors interface with the operating system to detect user activity like file downloads.

public abstract class ActivityDetector<TMessage>:

OLAFApi<ActivityDetector<TMessage>, TMessage>, IActivityDetector

where TMessage : Message

Activity Detectors only place messages on the message queue and do not listen to any messages from the queue. For instance, the FileSystemActivity detector is declared as:

public class FileSystemActivity : ActivityDetector<FileSystemChangeMessage>, IDisposable

File-system activity is detected via the standard .NET FileSystemWatcher class. When a file is created, a message is enqueued:

private void FileSystemActivity_Created(object sender, FileSystemEventArgs e)

{

EnqueueMessage(new FileSystemChangeMessage(e.FullPath, e.ChangeType));

}

which allows another component to process the actual file that was created. Activity detectors are designed to be lightweight and to execute quickly, since they represent OLAF's point of contact with the operating system and may run inside operating system hooks that need to execute quickly and without errors. Activity detectors simply place messages on the queue and return to idle, allowing them to handle a potentially large number of activity notifications coming from the operating system. Actual processing of the messages and creation of the image artifacts is handled by the Monitor components.
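
To make the "enqueue and return" idea concrete, here is a minimal, self-contained sketch of a watcher that does nothing in its event handler except record the path of the new file. DownloadWatcherSketch and its Pending queue are hypothetical names for this example and are not part of OLAF.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;

public sealed class DownloadWatcherSketch : IDisposable
{
    private readonly FileSystemWatcher watcher;

    // Paths of newly created files waiting to be picked up by another component.
    public ConcurrentQueue<string> Pending { get; } = new ConcurrentQueue<string>();

    public DownloadWatcherSketch(string directory)
    {
        // The directory must already exist or the constructor throws.
        watcher = new FileSystemWatcher(directory)
        {
            NotifyFilter = NotifyFilters.FileName,
            IncludeSubdirectories = false
        };
        watcher.Created += OnCreated;
        watcher.EnableRaisingEvents = true;
    }

    // Kept deliberately tiny: no file I/O happens in the handler itself.
    // Public here only so the handler can be exercised directly.
    public void OnCreated(object sender, FileSystemEventArgs e)
    {
        Pending.Enqueue(e.FullPath);
    }

    public void Dispose()
    {
        watcher.Dispose();
    }
}
```

Keeping the handler this small matters because FileSystemWatcher has a finite internal buffer, and slow handlers make it more likely that notifications are dropped under heavy activity.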

Monitors

Monitors listen for activity detector messages on the queue and create the initial artifacts that will be processed through the pipeline based on the information supplied by the activity detectors.

public abstract class Monitor<TDetector, TDetectorMessage, TMonitorMessage> :

OLAFApi<Monitor<TDetector, TDetectorMessage, TMonitorMessage>, TMonitorMessage>,

IMonitor, IQueueProducer

where TDetector : ActivityDetector<TDetectorMessage>

where TDetectorMessage : Message

where TMonitorMessage : Message

For instance, the DirectoryChangesMonitor class is declared as:

public class DirectoryChangesMonitor :

FileSystemMonitor<FileSystemActivity, FileSystemChangeMessage, FileArtifact>

This monitor listens for FileSystemChangeMessage messages indicating a file was downloaded and first copies the file to an internal OLAF data folder so that the file is preserved even if the user deletes it afterwards. It then places a FileArtifact message on the queue indicating that an artifact is available for processing by the image classification and other services listening on the queue.
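
The preservation step can be sketched roughly as follows. This is an assumption-laden illustration rather than OLAF's actual code: ArtifactPreserverSketch, the retry count, and the delay are all hypothetical, and the only details taken from the logs above are that the copy is prefixed with the artifact id and that the monitor may wait for the download to finish writing.

```csharp
using System;
using System.IO;
using System.Threading;

public static class ArtifactPreserverSketch
{
    // Copies sourcePath into artifactsDirectory as "<artifactId>_<file name>"
    // and returns the path of the copy. I/O failures are retried a few times.
    public static string Preserve(string sourcePath, string artifactsDirectory,
        long artifactId, int maxAttempts = 5, int delayMs = 100)
    {
        Directory.CreateDirectory(artifactsDirectory);
        string target = Path.Combine(artifactsDirectory,
            artifactId + "_" + Path.GetFileName(sourcePath));

        for (int attempt = 1; ; attempt++)
        {
            try
            {
                // On Windows, opening with FileShare.Read fails with an IOException
                // while another process still has the file open for writing.
                using (var source = new FileStream(sourcePath, FileMode.Open,
                    FileAccess.Read, FileShare.Read))
                using (var dest = new FileStream(target, FileMode.Create,
                    FileAccess.Write))
                {
                    source.CopyTo(dest);
                }
                return target;
            }
            catch (IOException) when (attempt < maxAttempts)
            {
                // Wait a bit for the write to complete, then try again.
                // On the final attempt the exception propagates to the caller.
                Thread.Sleep(delayMs);
            }
        }
    }
}
```

Copying the bytes immediately, instead of storing only the original path, is what lets the artifact survive the user deleting or renaming the download.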

Services

Services are the components that actually perform analysis on the image and other artifacts generated by user activity.

public abstract class Service<TClientMessage, TServiceMessage> :

OLAFApi<Service<TClientMessage, TServiceMessage>, TServiceMessage>, IService

where TClientMessage : Message

where TServiceMessage : Message

For instance, the TesseractOCR service is declared as:

public class TesseractOCR : Service<ImageArtifact, Artifact>

This service consumes an ImageArtifact but as it can produce different artifacts depending on the results of the OCR operation, it only uses a generic Artifact in its signature indicating the output queue message type. The BlobDetector service consumes image artifacts and also produces image artifacts.

public class BlobDetector : Service<ImageArtifact, ImageArtifact>

Each service processes artifacts received in the queue and outputs an artifact enriched with any information that the service was able to add via text extraction or other machine learning applications. This artifact can then be further processed by other services in the queue.
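
The way the two type parameters constrain what a service consumes and produces can be shown with a stripped-down sketch. None of these types are OLAF's: SketchArtifact, SketchImageArtifact, SketchService, SizeTagger, and OrientationTagger are hypothetical stand-ins, and the real services communicate through the queue instead of by direct method calls.

```csharp
using System;
using System.Collections.Generic;

public class SketchArtifact
{
    public long Id { get; set; }
    public Dictionary<string, string> Tags { get; } = new Dictionary<string, string>();
}

public class SketchImageArtifact : SketchArtifact
{
    public int Width { get; set; }
    public int Height { get; set; }
}

// TIn is the artifact type a service consumes; TOut is the type it produces.
public abstract class SketchService<TIn, TOut>
    where TIn : SketchArtifact
    where TOut : SketchArtifact
{
    public abstract TOut Process(TIn artifact);
}

// Consumes and produces image artifacts, like BlobDetector's signature.
public class SizeTagger : SketchService<SketchImageArtifact, SketchImageArtifact>
{
    public override SketchImageArtifact Process(SketchImageArtifact artifact)
    {
        artifact.Tags["size"] = artifact.Width + "x" + artifact.Height;
        return artifact;
    }
}

// Consumes image artifacts but only promises a generic artifact as output,
// like TesseractOCR's signature.
public class OrientationTagger : SketchService<SketchImageArtifact, SketchArtifact>
{
    public override SketchArtifact Process(SketchImageArtifact artifact)
    {
        artifact.Tags["orientation"] =
            artifact.Width >= artifact.Height ? "landscape" : "portrait";
        return artifact;
    }
}
```

Because each stage adds to the same artifact instead of replacing it, a later stage sees everything the earlier stages found, which is the enrichment behaviour described above.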

OCR

OLAF uses the popular Tesseract OCR library for detecting and extracting any text in an image the user downloads. We use the tesseract.net and leptonica.net .NET libraries, which wrap Tesseract and the Leptonica image processing library that Tesseract uses. Although Azure's Computer Vision API also has OCR capabilities, using Tesseract locally saves the cost of calling the Azure service for each image artifact being processed. The TesseractOCR service processes an image artifact as follows. First, we create a Leptonica image from the image artifact posted to the queue.

protected override ApiResult ProcessClientQueueMessage(ImageArtifact message)

{

BitmapData bData = message.Image.LockBits(

new Rectangle(0, 0, message.Image.Width, message.Image.Height),

ImageLockMode.ReadOnly, message.Image.PixelFormat);

int w = bData.Width, h = bData.Height, bpp =

Image.GetPixelFormatSize(bData.PixelFormat) / 8;

unsafe

{

TesseractImage.SetImage(new UIntPtr(bData.Scan0.ToPointer()),

w, h, bpp, bData.Stride);

}

Pix = TesseractImage.GetInputImage();

Debug("Pix has width: {0} height: {1} depth: {2} xres: {3} yres: {4}.",

Pix.Width, Pix.Height, Pix.Depth,

Pix.XRes, Pix.YRes);

Then we run the recognizer on the image:

List<string> text;

using (var op = Begin("Tesseract OCR (fast)"))

{

TesseractImage.Recognize();

ResultIterator resultIterator = TesseractImage.GetIterator();

text = new List<string>();

PageIteratorLevel pageIteratorLevel = PageIteratorLevel.RIL_PARA;

do

{

string r = resultIterator.GetUTF8Text(pageIteratorLevel);

if (r.IsEmpty()) continue;

text.Add(r.Trim());

}

while (resultIterator.Next(pageIteratorLevel));

If Tesseract recognizes fewer than seven text sections, then the service decides the image is likely a photo or non-text image. Services automatically pass artifacts they receive to other services that are listening on the queue, so in this case we don't have to do anything further. If there are seven or more text sections, then the service creates an additional TextArtifact and posts it to the queue.

if (text.Count > 0)

{

string alltext = text.Aggregate((s1, s2) => s1 + " " + s2).Trim();

if (text.Count < 7)

{

Info("Artifact id {0} is likely a photo or non-text image.",

message.Id);

}

else

{

message.OCRText = text;

Info("OCR Text: {0}", alltext);

}

}

else

{

Info("No text recognized in artifact id {0}.", message.Id);

}

op.Complete();

}

message.Image.UnlockBits(bData);

if (text.Count >= 7)

{

TextArtifact artifact = new TextArtifact(message.Name + ".txt", text);

EnqueueMessage(artifact);

Info("{0} added artifact id {1} of type {2} from artifact {3}.",

Name, artifact.Id, artifact.GetType(),

message.Id);

}

return ApiResult.Success;

}
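
The decision rule buried in the listing above can be isolated into a small pure function, which makes the cut-off easier to test and tune. OcrOutcome and OcrHeuristicSketch are hypothetical names for this sketch; the threshold of seven is the one used in the listing.

```csharp
using System;
using System.Collections.Generic;

public enum OcrOutcome { NoText, LikelyPhoto, TextRich }

public static class OcrHeuristicSketch
{
    // Same cut-off as the listing above: seven or more sections is text-rich.
    public const int TextRichThreshold = 7;

    public static OcrOutcome Classify(IReadOnlyCollection<string> sections)
    {
        if (sections == null) throw new ArgumentNullException(nameof(sections));
        if (sections.Count == 0) return OcrOutcome.NoText;
        return sections.Count < TextRichThreshold
            ? OcrOutcome.LikelyPhoto
            : OcrOutcome.TextRich;
    }
}
```

Counting recognized sections is a cheap heuristic and will misjudge some images, for example a photo of a street full of signs. The value of seven should be treated as a tunable setting and not as a property of Tesseract.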

Face Detection

The ViolaJonesFaceDetector service attempts to guess if an image artifact contains a human face. This service uses the Accord.NET machine learning framework for .NET and the HaarObjectDetector class which implements the Viola-Jones object-detection algorithm for human faces. If the detector detects human faces in the image artifact, it adds this information to the artifact.

protected override ApiResult ProcessClientQueueMessage(ImageArtifact artifact)

{

if (artifact.HasOCRText)

{

Info("Not using face detector on text-rich image artifact {0}.", artifact.Id);

return ApiResult.Success;

}

Bitmap image = artifact.Image;

using (var op = Begin("Viola-Jones face detection on image artifact {0}.",

artifact.Id))

{

Rectangle[] objects = Detector.ProcessFrame(image);

if (objects.Length > 0)

{

if (!artifact.DetectedObjects.ContainsKey(ImageObjectKinds.FaceCandidate))

{

artifact.DetectedObjects.Add(ImageObjectKinds.FaceCandidate,

objects.ToList());

}

else

{

artifact.DetectedObjects

[ImageObjectKinds.FaceCandidate].AddRange(objects);

}

}

Info("Found {0} candidate face object(s) in artifact {1}.",

objects.Length, artifact.Id);

op.Complete();

}

return ApiResult.Success;

}

The information on detected human faces is now available in the artifact to other services listening to the queue.

Computer Vision API

The MSComputerVision service uses the Azure Cognitive Services Computer Vision API SDK, which is available on NuGet. Like most other Azure SDKs, setting up and using this Azure API from .NET is very easy. Once we have a valid Computer Vision API key, we create an API client in our service's Init() method:

public override ApiResult Init()

{

try

{

ApiKeyServiceClientCredentials c =

new ApiKeyServiceClientCredentials(ApiAccountKey);

Client = new ComputerVisionClient(c);

Client.Endpoint = ApiEndpointUrl;

return SetInitializedStatusAndReturnSucces();

}

catch(Exception e)

{

Error(e, "Error creating Microsoft Computer Vision API client.");

Status = ApiStatus.RemoteApiClientError;

return ApiResult.Failure;

}

}

If the artifact being processed does not have any detected face objects, then we do not call the Computer Vision API. This reduces the cost of calling the Azure API to only those artifacts we think are photos of human beings.

protected override ApiResult ProcessClientQueueMessage(ImageArtifact artifact)

{

if (!artifact.HasDetectedObjects(ImageObjectKinds.FaceCandidate) ||

artifact.HasOCRText)

{

Debug("Not calling MS Computer Vision API for image artifact {0} " +

"without face object candidates.", artifact.Id);

}

else if (artifact.FileArtifact == null)

{

Debug("Not calling MS Computer Vision API for non-file image artifact {0}.",

artifact.Id);

}

else

{

To call the API, we open a stream over the artifact's data (from memory if it is already loaded, otherwise from the file) and pass it to the API client, which calls the API and returns a Task<ImageAnalysis> instance with the result of the asynchronous operation:

Info("Artifact {0} is likely a photo with faces detected; " +

"analyzing using MS Computer Vision API.", artifact.Id);

ImageAnalysis analysis = null;

using (var op = Begin("Analyze image using MS Computer Vision API."))

{

try

{

using (Stream stream = artifact.FileArtifact.HasData ?

(Stream) new MemoryStream(artifact.FileArtifact.Data)

: new FileStream(artifact.FileArtifact.Path, FileMode.Open))

{

Task<ImageAnalysis> t1 = Client.AnalyzeImageInStreamAsync(stream,

VisualFeatures, null, null, cancellationToken);

analysis = t1.Result;

op.Complete();

}

}

catch (Exception e)

{

Error(e, "An error occurred during image analysis " +

"using the Microsoft Computer Vision API.");

return ApiResult.Failure;

}

}

The image analysis instance contains the categories the Computer Vision API classified the image into:

if (analysis.Categories != null)

{

Info("Image categories: {0}", analysis.Categories.Select

(c => c.Name + "/" + c.Score.ToString()));

foreach (Category c in analysis.Categories)

{

artifact.Categories.Add(new ArtifactCategory(c.Name, null, c.Score));

}

}

It also contains scores indicating whether the image was classified as racy or as having adult content:

Info("Image properties: Adult: {0}/{1} Racy: {2}/{3} Description:{4}",

analysis.Adult.IsAdultContent, analysis.Adult.AdultScore,

analysis.Adult.IsRacyContent,

analysis.Adult.RacyScore, analysis.Description.Tags);

artifact.IsAdultContent = analysis.Adult.IsAdultContent;

artifact.AdultContentScore = analysis.Adult.AdultScore;

artifact.IsRacy = analysis.Adult.IsRacyContent;

artifact.RacyContentScore = analysis.Adult.RacyScore;

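// Note: as written this is a self-assignment and has no effect; the description

// was presumably meant to be copied onto the artifact.

analysis.Description = analysis.Description;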

}

return ApiResult.Success;

}

All the information provided by the image classifiers of the Computer Vision API is added to the artifact and is now available to other services further down the queue for further processing.
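
As one example of such further processing, a downstream service could use the flags and scores to decide whether an artifact should be kept. The rule below is purely hypothetical: PreservationPolicySketch, its parameters, and the 0.5 threshold are assumptions made for illustration and do not describe how OLAF actually tags artifacts for preservation.

```csharp
using System;

public static class PreservationPolicySketch
{
    // Hypothetical rule: keep the artifact if the API flagged it as adult or
    // racy, or if either score reaches the given threshold (0.0 to 1.0).
    public static bool ShouldPreserve(bool isAdult, double adultScore,
        bool isRacy, double racyScore, double threshold = 0.5)
    {
        if (threshold < 0.0 || threshold > 1.0)
        {
            throw new ArgumentOutOfRangeException(nameof(threshold));
        }
        return isAdult || isRacy
            || adultScore >= threshold
            || racyScore >= threshold;
    }
}
```

Keeping the policy separate from the API call means an organization can tighten or relax the threshold to match its own computer use policy without touching the classification code.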

History

- 1st July, 2019: Initial version