Table of Contents

- Introduction

- Background

- Calling WinRT APIs from C# Desktop Applications

- Sensor Overview

- An accelerometer

- A gyroscope

- A compass

- A light sensor

- An orientation sensor

- A simplified orientation sensor

- An inclinometer

- Using the code

Introduction

When developing apps for Windows 8 on Intel devices, you have to decide which type of Windows 8 app you want to build. This article helps developers write sensor applications for the Windows 8 Desktop using the WinRT sensor APIs. Two questions arise:

Why use WinRT APIs in a Windows 8 Desktop application?

How do you use WinRT APIs in a Windows 8 Desktop application to work with sensors?

This article addresses these questions.

Background

I was trying to access some of the sensors that are built into this Intel Ultrabook that runs Windows 8. However, while there's support for Location Sensors built into the .NET 4 libraries on Windows 7 and up, I want to access the complete Sensor and Location Platform that is built into Windows 8 itself. Those APIs are available via COM and I could call them via COM, but calling them via the WinRT layer is so much nicer. Plus, this is kind of why WinRT exists.

This got me thinking about WinRT. Just as the C language has the C Runtime, which provides a set of supporting functions and defines a calling convention for them, Windows has the Windows Runtime (WinRT), which does the same for Windows and its languages. The runtime and its APIs include metadata about calling conventions, which makes WinRT APIs easier to call than COM.

Calling WinRT APIs from C# Desktop Applications

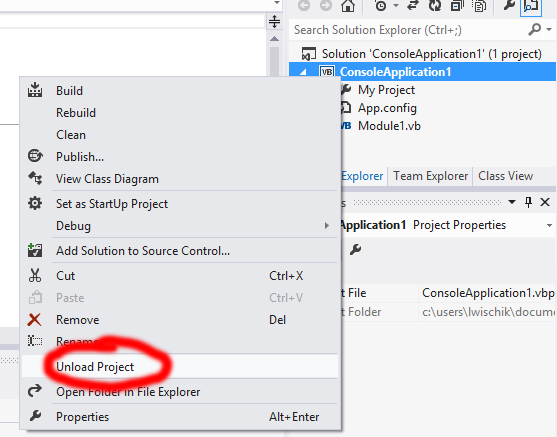

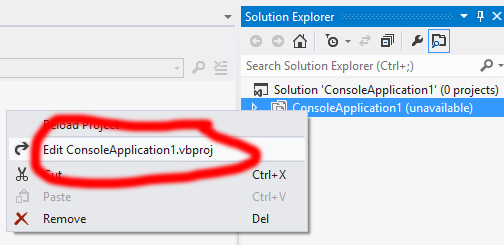

In desktop projects, the Core tab doesn’t appear by default. You can choose to code against the Windows Runtime by opening the shortcut menu for the project node, choosing Unload Project, adding the PropertyGroup snippet shown below to the project file, opening the shortcut menu for the project node again, and then choosing Reload Project.

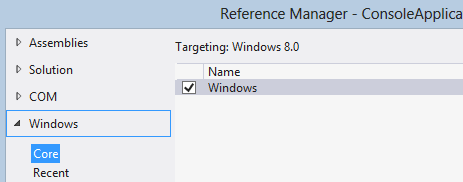

Now, when the user invokes the Reference Manager dialog box from the project, the Core tab will appear.

    <PropertyGroup>
      <TargetPlatformVersion>8.0</TargetPlatformVersion>
    </PropertyGroup>

However, when I compile the app, I get an error on the line where I'm trying to hook up an event handler. The "+=" syntactic sugar for adding a multicast delegate isn't working.

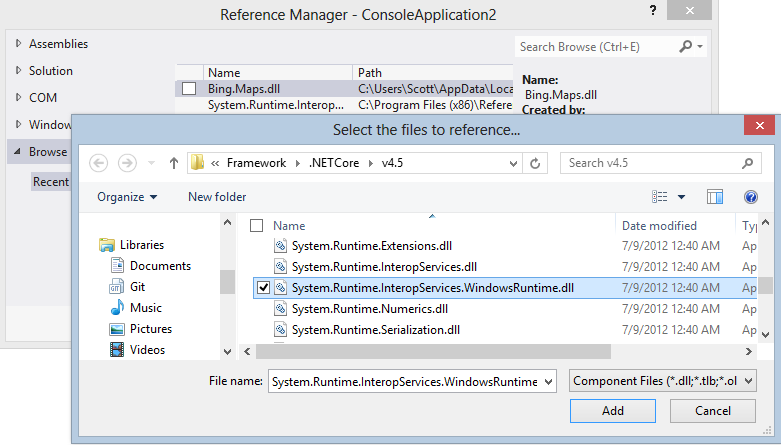

To fix this and load the assemblies that support calling WinRT from my Desktop application, I need to add references to System.Runtime and System.Runtime.InteropServices.WindowsRuntime.dll. On my system they are in C:\Program Files (x86)\Reference Assemblies\Microsoft\Framework\.NETCore\v4.5.

Add Reference > Browse > C:\Program Files (x86)\Reference Assemblies\Microsoft\Framework\.NETCore\v4.5\System.Runtime.WindowsRuntime.dll

Sensor Overview

Basically, an Intel Ultrabook ships with four sensor devices:

- An ambient light sensor

- An accelerometer

- A gyroscope

- A magnetometer

Given the existence of these four sensors on a hardware layer, we may exploit them in various ways on an application layer:

- An accelerometer

- A gyroscope

- A compass

- A light sensor

- An orientation sensor

- A simplified orientation sensor

- An inclinometer

Positioning in a 3D Cartesian coordinate system

Whenever we intend to sense values related to a device's actual position in the physical world, we need a geometrical model to refer to. For instance, we may want to distinguish whether a device is being moved from left to right or up and down. This implies that we need to align the device with a set of axes in three-dimensional space.

Regardless of which 3D sensor interface we might think of, we can always take three axes as a basis:

- X: the long side (left/right)

- Y: the short side (up/down)

- Z: “into” the screen

Sensor interfaces & functionality

As MSDN already provides sample C# code that illustrates how to use WinRT's sensor API, and that code is fairly straightforward, this section focuses exclusively on what each sensor actually does. The code walkthrough later in this article covers only the accelerometer interface (the downloadable source code contains all sensors), but since all the sensors listed below are used analogously, readers should be able to apply the other sensors without considerable overhead.

Accelerometer

| Returned property | Measurement | Unit | Type |
| --- | --- | --- | --- |
| X | Acceleration in relation to free-fall | G-force | Double |
| Y | Acceleration in relation to free-fall | G-force | Double |
| Z | Acceleration in relation to free-fall | G-force | Double |

The accelerometer senses the device's acceleration in relation to free-fall. This means that we cannot (at least not on Earth or without a zero-gravity simulation) reach a state where the results are zero for all three axes at the same time. For instance, assuming that our Surface tablet is lying on its back, the returned z-value will be -1, as 1 g is the standard g-force pulling our device towards the center of the earth (similarly, the z-value will be +1 if the device is lying on its front).
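The observation above can be checked numerically. The following is a minimal sketch, not part of the article's source code: the class and method names (`AccelerometerMath`, `Magnitude`, `IsAtRest`) and the 0.1 g tolerance are assumptions chosen for illustration. It computes the length of the acceleration vector, which should stay close to 1 g whenever the device is held still, whatever its orientation.

```csharp
using System;

// Hypothetical helper for illustration; not part of the article's download.
public static class AccelerometerMath
{
    // Length of the acceleration vector in g.
    public static double Magnitude(double x, double y, double z)
    {
        return Math.Sqrt(x * x + y * y + z * z);
    }

    // True when the vector length is within `tolerance` g of 1 g,
    // i.e. gravity is the only significant force acting on the device.
    // The default tolerance is an arbitrary illustrative value.
    public static bool IsAtRest(double x, double y, double z, double tolerance = 0.1)
    {
        return Math.Abs(Magnitude(x, y, z) - 1.0) <= tolerance;
    }
}
```

With the reading described above for a tablet lying on its back (0, 0, -1), `IsAtRest` returns true; a reading of (0, 0, 0) would correspond to free-fall and returns false.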

Gyrometer

| Returned property | Measurement | Unit | Type |
| --- | --- | --- | --- |
| X | Angular velocity | Degrees per second | Double |
| Y | Angular velocity | Degrees per second | Double |
| Z | Angular velocity | Degrees per second | Double |

As the gyrometer (also referred to as a gyroscope) senses our device's angular velocity while it is being moved, all three axes' values will be zero when we hold it motionless. By contrast, only the x value will change when we flip our Surface's screen open and shut again without moving it in any other direction (as we are rotating the device about its x-axis).
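Because the gyrometer reports a rate (degrees per second) rather than an angle, an application that wants to know how far the device has turned has to accumulate the readings over time. The sketch below shows that accumulation for a single axis. The names (`GyrometerMath`, `Integrate`) are hypothetical, and the sample interval is assumed to be known to the caller.

```csharp
using System;

// Hypothetical helper for illustration; not part of the article's download.
public static class GyrometerMath
{
    // Adds the rotation covered during one sample interval to a running angle.
    // angleDegrees:      angle accumulated so far
    // degreesPerSecond:  angular velocity reported for one axis
    // elapsedSeconds:    time since the previous reading
    public static double Integrate(double angleDegrees, double degreesPerSecond, double elapsedSeconds)
    {
        return angleDegrees + degreesPerSecond * elapsedSeconds;
    }
}
```

Calling `Integrate` once per reading with the x-axis velocity would track how far the screen has been flipped open. A motionless device reports zero velocity, so the accumulated angle stays unchanged. In practice small measurement errors add up over time, so this simple approach drifts.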

Compass

| Returned property | Measurement | Unit | Type |
| --- | --- | --- | --- |
| Magnetic north | Angle relative to direction of magnetic north | Degrees | Double |
| True north | Angle relative to direction of true north | Degrees | Double? |

While the built-in magnetometer can determine magnetic north (and consequently the device's current angle relative to it), this single sensor cannot also sense geographical (true) north, since magnetic and true north usually differ. As the Surface RT tablet has no GPS sensor, we initially assumed that the compass interface might use the tablet's internet connection to approximate true north; unfortunately, we did not receive a value.
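Since the true-north value is nullable (Double? in the table above) and may simply be missing, calling code needs a fallback. The following sketch shows one possible way to handle that, together with a conversion of a heading in degrees to an eight-point compass label. All names here (`CompassMath`, `PreferTrueNorth`, `ToCardinal`) are hypothetical and only illustrate the idea.

```csharp
using System;

// Hypothetical helper for illustration; not part of the article's download.
public static class CompassMath
{
    private static readonly string[] Names = { "N", "NE", "E", "SE", "S", "SW", "W", "NW" };

    // Uses the true-north heading when the sensor supplies one,
    // otherwise falls back to the magnetic-north heading.
    public static double PreferTrueNorth(double magneticNorth, double? trueNorth)
    {
        return trueNorth ?? magneticNorth;
    }

    // Maps a heading in degrees to the nearest of eight compass points.
    public static string ToCardinal(double headingDegrees)
    {
        // Bring the heading into the range [0, 360), also for negative input.
        double normalized = ((headingDegrees % 360.0) + 360.0) % 360.0;
        // Each compass point covers a 45-degree sector centred on its direction.
        int sector = (int)Math.Round(normalized / 45.0, MidpointRounding.AwayFromZero) % 8;
        return Names[sector];
    }
}
```

In an application, the two arguments of `PreferTrueNorth` would come from the compass reading's magnetic-north and true-north properties.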

Light sensor

Please note that the Surface's light sensor is positioned to the left of the front camera. Hold your hand in front of the sensor and you will see that it delivers different readings.

Orientation sensor

| Returned property | Measurement | Unit | Type |
| --- | --- | --- | --- |
| Quaternion | Orientation | 4×1 matrix | SensorQuaternion |
| Rotation matrix | Orientation | 3×3 matrix | SensorRotationMatrix |

The orientation sensor interface exposes a quaternion and a rotation matrix indicating the device's current orientation, both of which can, for example, be used to rotate view elements accordingly. As diving deeper into computer graphics would go beyond the scope of this article, we will refrain from a detailed explanation of the underlying mathematical concepts (nevertheless, please feel free to look up those concepts yourself).
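For readers who want a starting point, the two representations carry the same information: a unit quaternion (w, x, y, z) can be converted into a 3×3 rotation matrix with the standard textbook formula. The sketch below implements that formula under the usual right-handed convention. The names (`OrientationMath`, `ToRotationMatrix`) are hypothetical, and whether the sensor's own rotation matrix uses exactly the same sign and axis conventions is an assumption that has not been verified here.

```csharp
using System;

// Hypothetical helper for illustration; not part of the article's download.
public static class OrientationMath
{
    // Returns the 3x3 rotation matrix (row, column) for a unit quaternion (w, x, y, z),
    // using the standard right-handed convention.
    public static double[,] ToRotationMatrix(double w, double x, double y, double z)
    {
        return new double[,]
        {
            { 1 - 2 * (y * y + z * z), 2 * (x * y - z * w),     2 * (x * z + y * w)     },
            { 2 * (x * y + z * w),     1 - 2 * (x * x + z * z), 2 * (y * z - x * w)     },
            { 2 * (x * z - y * w),     2 * (y * z + x * w),     1 - 2 * (x * x + y * y) }
        };
    }
}
```

The identity quaternion (1, 0, 0, 0) yields the identity matrix, which corresponds to "no rotation".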

Simple orientation sensor

| Returned property | Type | Members |
| --- | --- | --- |
| Simple orientation | Enum | NotRotated |
| | | Rotated90DegreesCounterclockwise |
| | | Rotated180DegreesCounterclockwise |
| | | Rotated270DegreesCounterclockwise |
| | | Faceup |
| | | Facedown |

This sensor simplifies sensing the device's current orientation by automatically converting complex numeric sensor values (as provided by the actual orientation sensor) to a set of discrete, human-readable states.
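Because the result is a small set of discrete states, handling it usually comes down to a switch statement. The sketch below illustrates this with a stand-in enum that mirrors the member names listed in the table; `SimpleOrientationState`, `SimpleOrientationText`, and `Describe` are hypothetical names used so the example is self-contained, and a real application would switch on the enum supplied by the sensor API instead.

```csharp
using System;

// Stand-in enum mirroring the member names in the table above (an assumption
// made to keep the example self-contained; not the WinRT type itself).
public enum SimpleOrientationState
{
    NotRotated,
    Rotated90DegreesCounterclockwise,
    Rotated180DegreesCounterclockwise,
    Rotated270DegreesCounterclockwise,
    Faceup,
    Facedown
}

// Hypothetical helper for illustration; not part of the article's download.
public static class SimpleOrientationText
{
    // Converts a discrete orientation state into display text.
    public static string Describe(SimpleOrientationState state)
    {
        switch (state)
        {
            case SimpleOrientationState.NotRotated: return "Not rotated";
            case SimpleOrientationState.Rotated90DegreesCounterclockwise: return "Rotated 90 degrees counterclockwise";
            case SimpleOrientationState.Rotated180DegreesCounterclockwise: return "Rotated 180 degrees counterclockwise";
            case SimpleOrientationState.Rotated270DegreesCounterclockwise: return "Rotated 270 degrees counterclockwise";
            case SimpleOrientationState.Faceup: return "Lying face up";
            case SimpleOrientationState.Facedown: return "Lying face down";
            default: return "Unknown";
        }
    }
}
```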

Inclinometer

| Returned property | Measurement | Unit | Type |
| --- | --- | --- | --- |
| Pitch | Angle relative to x-axis | Degrees | Double |
| Roll | Angle relative to y-axis | Degrees | Double |
| Yaw | Angle relative to z-axis | Degrees | Double |

As the inclinometer reports the device's angle relative to each axis, the question of the device's assumed initial position arises: to establish a distinct initial position, the inclinometer uses the magnetometer. Thus, allowing for a certain variance, we may observe that Pitch=Roll=Yaw=0 if our device is perfectly aligned with magnetic north (similarly, that Yaw=0 if MagneticNorth=0).

Using the code

For teaching purposes, I used all seven sensors in a single application. The code can be separated into the following parts:

- Create a class that initializes the sensor object.

- Inherit from the Control class so that the class can be used as a control.

- Declare dependency properties so that property changes are notified.

- Subscribe to the sensor's ReadingChanged event.

- Update the UI asynchronously when a reading changes.

Step 1:

SensorBase.cs

SensorBase is an abstract class that inherits from the Control class and defines the ReportInterval dependency property.

    using System.Windows;
    using System.Windows.Controls;

    public abstract class SensorBase : Control
    {
        // Backing dependency property for ReportInterval; the callback runs on every change.
        protected static readonly DependencyProperty ReportIntervalProperty = DependencyProperty.Register(
            "ReportInterval",
            typeof (int),
            typeof (SensorBase),
            new PropertyMetadata(1, ReportIntervalPropertyPropertyChanged));

        public int ReportInterval
        {
            get { return (int) GetValue(ReportIntervalProperty); }
            set { SetValue(ReportIntervalProperty, value); }
        }

        // Each concrete sensor control applies the new interval to its own sensor.
        protected abstract void OnReportIntervalChanged(DependencyObject d, DependencyPropertyChangedEventArgs e);

        private static void ReportIntervalPropertyPropertyChanged(DependencyObject d,
            DependencyPropertyChangedEventArgs e)
        {
            var sender = d as SensorBase;
            if (sender != null)
            {
                sender.OnReportIntervalChanged(d, e);
            }
        }
    }

Step 2:

AccelerometerSensor.cs

The AccelerometerSensor class inherits from SensorBase. It first obtains the sensor object and then subscribes to its ReadingChanged event.

    private readonly Accelerometer _accelerometer;

    public AccelerometerSensor()
    {
        // GetDefault() returns null when the device has no accelerometer.
        _accelerometer = Accelerometer.GetDefault();
        if (_accelerometer != null)
        {
            _accelerometer.ReadingChanged += AccelerometerReadingChanged;
        }
    }

Step 3:

Declare dependency properties for the X, Y, and Z axes of the accelerometer.

    public static readonly DependencyProperty XProperty = DependencyProperty.Register(
        "X",
        typeof (double),
        typeof (AccelerometerSensor),
        new PropertyMetadata(0.0));

    public static readonly DependencyProperty YProperty = DependencyProperty.Register(
        "Y",
        typeof (double),
        typeof (AccelerometerSensor),
        new PropertyMetadata(0.0));

    public static readonly DependencyProperty ZProperty = DependencyProperty.Register(
        "Z",
        typeof (double),
        typeof (AccelerometerSensor),
        new PropertyMetadata(0.0));

The corresponding X, Y, and Z properties are defined as follows:

    public double X
    {
        get { return (double) GetValue(XProperty); }
        set { SetValue(XProperty, value); }
    }

    public double Y
    {
        get { return (double) GetValue(YProperty); }
        set { SetValue(YProperty, value); }
    }

    public double Z
    {
        get { return (double) GetValue(ZProperty); }
        set { SetValue(ZProperty, value); }
    }

Step 4:

It’s time to read the sensor's data asynchronously.

    private async void AccelerometerReadingChanged(Accelerometer sender, AccelerometerReadingChangedEventArgs args)
    {
        // The event may be raised on a thread other than the UI thread,
        // so the values are assigned through the control's dispatcher.
        await this.Dispatcher.BeginInvoke(DispatcherPriority.Normal, (Action)(() =>
        {
            X = args.Reading.AccelerationX;
            Y = args.Reading.AccelerationY;
            Z = args.Reading.AccelerationZ;
        }));
    }

In the code above, the AccelerometerReadingChanged method is declared async and awaits the dispatcher operation, so the handler does not block the thread that raised the event. Dispatcher.BeginInvoke marshals the assignment of the acceleration values onto the UI thread that owns the control.
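Step 1 declares OnReportIntervalChanged as abstract, but the walkthrough does not show an override. One thing such an override typically has to handle is that a sensor cannot report faster than its hardware minimum. The sketch below shows only that clamping logic as a pure function; `ReportIntervalHelper` and `Clamp` are hypothetical names, and how the author's override actually behaves is not known from the article.

```csharp
using System;

// Hypothetical helper for illustration; not part of the article's download.
public static class ReportIntervalHelper
{
    // Returns the interval (in milliseconds) to apply to the sensor:
    // the requested value, but never below the minimum the sensor supports.
    public static uint Clamp(int requestedMilliseconds, uint minimumMilliseconds)
    {
        // The dependency property is an int, so guard against negative input
        // before converting to the unsigned type used by the sensor.
        if (requestedMilliseconds < 0)
        {
            requestedMilliseconds = 0;
        }
        return Math.Max((uint)requestedMilliseconds, minimumMilliseconds);
    }
}
```

An override in AccelerometerSensor could, for example, pass the new property value and the sensor's MinimumReportInterval to this helper and assign the result to the sensor's ReportInterval, after checking that the sensor object is not null.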

Step 5:

Now the Accelerometer sensor control is ready. We can place it in the UI and use its X, Y, and Z values anywhere in the program.

    xmlns:local="clr-namespace:SensorsUltrabook"

    <local:AccelerometerSensor Template="{StaticResource AccelerometerSensorTemplate}" />

Don’t forget to define the control template that is applied to the Accelerometer control.

    <ControlTemplate x:Key="AccelerometerSensorTemplate" TargetType="local:AccelerometerSensor">
        <StackPanel Orientation="Vertical" Margin="15,15,15,15">
            <TextBlock Text="ACCELEROMETER" />
            <Image Source="{StaticResource AccelerometerImage}" Width="288" Height="300"/>
            <StackPanel Orientation="Horizontal">
                <TextBlock Text=" x:" />
                <TextBlock Text="{Binding RelativeSource={RelativeSource TemplatedParent}, Path=X}" />
            </StackPanel>
            <StackPanel Orientation="Horizontal">
                <TextBlock Text=" y:" />
                <TextBlock Text="{Binding RelativeSource={RelativeSource TemplatedParent}, Path=Y}" />
            </StackPanel>
            <StackPanel Orientation="Horizontal">
                <TextBlock Text=" z:" />
                <TextBlock Text="{Binding RelativeSource={RelativeSource TemplatedParent}, Path=Z}" />
            </StackPanel>
        </StackPanel>
    </ControlTemplate>

In the source code, each sensor is implemented as a control in its own .cs file.