Background

In the wake of mass shootings where the alleged perpetrators may have posted their manifestos or intentions on Internet forums like 8chan and 4chan or on social media sites like Twitter, there has been an increased call for technology that can monitor and detect possible threats through signals on social media. In July, the FBI issued an RFP for tools that can perform these functions.

Quote:

The FBI issued a request for proposals for a subscription-based social media alerting tool that will filter and evaluate big data from Twitter, Facebook, Instagram and other social media platforms while ensuring privacy and civil liberties compliance requirements are met. It wants a web-based system – also accessible through a mobile app – that can identify possible threats and assist with current missions in near real time through alerting, analysis, and geolocation services.

Quote:

“With increased use of social media platforms by subjects of current FBI investigations and individuals that pose a threat to the United States, it is critical to obtain a service which will allow the FBI to identify relevant information from Twitter, Facebook, Instagram, and other Social media platforms in a timely fashion,” the agency said in the RFP. “Consequently, the FBI needs near real-time access to a full range of social media exchanges in order to obtain the most current information available in furtherance of its law enforcement and intelligence missions.”

Quite apart from the technical challenges, many will find the ethics of this technology dubious. Recently, an open-source Chef developer pulled his source code from GitHub when he discovered that Chef Software had a contract with ICE, and the company has since stated it will not renew such contracts because of its developers' views on the morality of the agency's actions. There is a big discussion happening in tech now among open-source developers about companies that do business with government agencies they find objectionable, and about the ethics of using software for face recognition and other forms of digital surveillance and monitoring.

But, as with my other projects, my own view is that pragmatism is the better approach. So many commercial entities have the money and resources to build closed-source communication-monitoring systems like these that it's not clear what positive impact a moratorium on developing such systems would have. These kinds of problems are very interesting to solve from a technical point of view and require a diverse set of skills and knowledge, from machine learning and natural-language processing to database and cloud system design. Free or open-source software allows others to study how it works in order to address security or ethical concerns. Free software means giving others the freedom to study and make use of what you create within the terms of a free software licence, regardless of your own political or ethical convictions.

Design

Canaan (Computer-Assisted News Aggregation and Analysis) is an in-development open-source .NET framework for aggregating and analyzing social and traditional news. Canaan lets you build pipelines that aggregate and extract news posts and articles from different sources, apply text analytics, natural-language processing and machine learning to the news content, and store the resulting artifacts in different kinds of local and cloud storage.

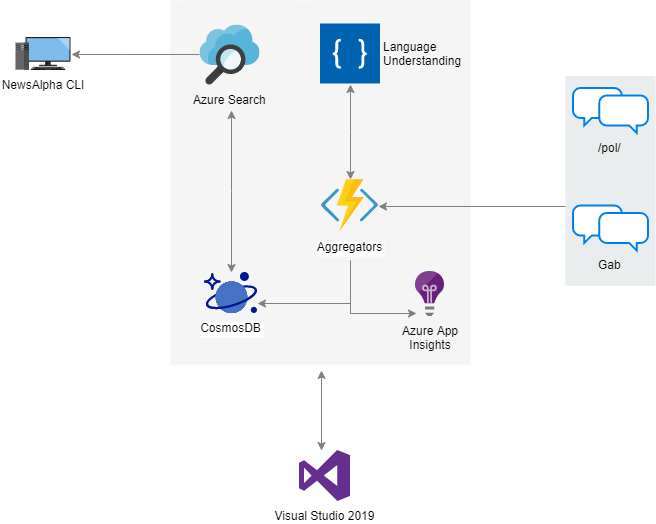
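To make the aggregate, analyze and store flow concrete, here is a minimal, self-contained sketch of how a pipeline could wire components together. It is not Canaan's API: the `NewsPost` and `SketchPipeline` types are hypothetical, and plain delegates stand in for aggregator, analyzer and storage components to keep the example short.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical post type for illustration; Canaan's real Post class differs.
public class NewsPost
{
    public string Id { get; set; } = "";
    public string Text { get; set; } = "";
    public Dictionary<string, object> Annotations { get; } = new Dictionary<string, object>();
}

// Hypothetical pipeline sketch: aggregate posts, run every analyzer on each post, then store it.
// Delegates stand in for aggregator, analyzer and storage components.
public class SketchPipeline
{
    private readonly Func<Task<IReadOnlyList<NewsPost>>> aggregate;
    private readonly IReadOnlyList<Func<NewsPost, Task>> analyzers;
    private readonly Func<NewsPost, Task> store;

    public SketchPipeline(Func<Task<IReadOnlyList<NewsPost>>> aggregate,
        IEnumerable<Func<NewsPost, Task>> analyzers, Func<NewsPost, Task> store)
    {
        this.aggregate = aggregate;
        this.analyzers = analyzers.ToList();
        this.store = store;
    }

    // Runs one aggregation cycle and returns the number of posts stored.
    public async Task<int> RunOnceAsync()
    {
        var posts = await aggregate();
        foreach (var post in posts)
        {
            foreach (var analyze in analyzers)
                await analyze(post); // each analyzer adds annotations to the post in place
            await store(post);
        }
        return posts.Count;
    }
}
```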

NewsAlpha is implemented as a set of Canaan pipelines that aggregate news posts from social media sites, apply NLU tasks like intent and entity recognition, and then store the results in a CosmosDB database. An NLU model trained with Azure LUIS recognizes statements that express an intent to harm others or oneself; a numeric confidence score for this threat intent, along with other annotations, is attached to each post.

Azure Search is then used to create a full-text index over the results. This index can be queried by the NewsAlpha frontends (currently the CLI, and in future a web interface), allowing the operator to rapidly search millions of social news posts and annotations. Aggregators and indexers run frequently, typically every five minutes, so social news posts can be analyzed and queried very shortly after they are posted.

NewsAlpha currently aggregates posts from 4Chan's /pol/ forum and the Gab microblogging site. Aggregators for other sites like Reddit and Twitter will be added in the future.

Implementation

Components

Each Canaan component is simply a class that inherits from the base Api class. The base class provides common functions like logging, timing and configuration management, as well as common patterns for things like initialization and calling remote services (like Azure Storage). It is designed to get the boilerplate out of the way so that the code for each component can be written as easily and clearly as possible. For example, the constructor for the CosmosDB storage component is:

```csharp
public CosmosDB(string endpointUrl, string authKey,
    string databaseId, CancellationToken ct) : base(ct)
{
    EndpointUrl = endpointUrl;
    AuthKey = authKey;
    Client = new CosmosClient(EndpointUrl, AuthKey,
        new CosmosClientOptions()
        {
            ApplicationName = "Canaan",
            ConnectionMode = ConnectionMode.Direct,
            MaxRetryAttemptsOnRateLimitedRequests = 30,
            MaxRetryWaitTimeOnRateLimitedRequests = TimeSpan.FromSeconds(30),
            ConsistencyLevel = ConsistencyLevel.Session
        });
    DatabaseId = databaseId;
    Info("Created client for CosmosDB database {0} at {1}.",
        DatabaseId, Client.Endpoint);
    Initialized = true;
}

public CosmosDB(string databaseId, CancellationToken ct) :
    this(Config("CosmosDB:EndpointUrl"), Config("CosmosDB:AuthKey"), databaseId, ct) {}
```

and a call to retrieve an item from a CosmosDB database looks like:

```csharp
public async Task<T> GetAsync<T>(string containerId, string partitionKey, string itemId)
{
    ThrowIfNotInitialized();
    var container = Client.GetContainer(DatabaseId, containerId);
    // Point read: fetch a single item by its id and partition key.
    var r = await container.ReadItemAsync<T>(itemId, new PartitionKey(partitionKey),
        cancellationToken: CancellationToken);
    return r.Resource;
}
```
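The Api base class itself isn't listed here. As a rough sketch, assuming only the members that the snippets in this article use (`Initialized`, `ThrowIfNotInitialized`, a constructor `CancellationToken`, `Info`, and `Begin`/`Complete` operation timing), a base class providing that boilerplate could look like the following. `ApiSketch`, `Operation` and the example `EchoService` component are hypothetical names, and console output stands in for Canaan's real logger.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical base class sketch; not Canaan's actual Api class.
public abstract class ApiSketch
{
    protected ApiSketch(CancellationToken ct) => CancellationToken = ct;

    // Shared token that derived components pass to remote calls.
    public CancellationToken CancellationToken { get; }

    // Derived constructors set this once their clients are ready.
    public bool Initialized { get; protected set; }

    protected void ThrowIfNotInitialized()
    {
        if (!Initialized)
            throw new InvalidOperationException($"{GetType().Name} is not initialized.");
    }

    // Console output stands in for a real logging backend.
    protected void Info(string format, params object[] args) =>
        Console.WriteLine(format, args);

    // Starts a timed operation; disposing it logs the outcome and elapsed time.
    protected Operation Begin(string format, params object[] args) =>
        new Operation(string.Format(format, args));

    public sealed class Operation : IDisposable
    {
        private readonly Stopwatch timer = Stopwatch.StartNew();
        private readonly string description;
        private bool completed;

        internal Operation(string description) => this.description = description;

        // Call before disposing to mark the operation as successful.
        public void Complete() => completed = true;

        public void Dispose()
        {
            timer.Stop();
            var outcome = completed ? "completed" : "abandoned";
            Console.WriteLine($"{description} {outcome} in {timer.ElapsedMilliseconds} ms.");
        }
    }
}

// Hypothetical example component built on the sketch.
public class EchoService : ApiSketch
{
    public EchoService(string endpoint, CancellationToken ct) : base(ct)
    {
        Endpoint = endpoint;
        Initialized = !string.IsNullOrEmpty(endpoint);
    }

    public string Endpoint { get; }

    public string Echo(string text)
    {
        ThrowIfNotInitialized();
        using (var op = Begin("Echo {0} characters", text.Length))
        {
            Info("Echoing to {0}", Endpoint);
            op.Complete();
            return text;
        }
    }
}
```

Keeping initialization checks and timing in one base class means each component only contains the code that is specific to the service it wraps.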

The policy on exception handling is that all exceptions are allowed to bubble up from lower-level components to pipelines, which are the highest-level Canaan components and the place where application logic can decide how to handle operational errors. This lets Canaan components be written with a minimum of try/catch handlers and makes the code easier to write and read.
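A minimal sketch of this policy, using hypothetical names rather than Canaan code: the component-level method throws freely, and only the pipeline-level loop decides what a failure means. Here the loop logs and skips a bad post but lets cancellation stop the whole run; word counting is just a stand-in for a real analyzer.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

public static class ErrorPolicySketch
{
    // Component level: no try/catch, exceptions bubble up to the caller.
    public static Task<int> CountWordsAsync(string text, CancellationToken ct)
    {
        ct.ThrowIfCancellationRequested();
        if (string.IsNullOrWhiteSpace(text))
            throw new ArgumentException("Post text is empty.", nameof(text));
        // A null separator array splits on whitespace.
        var words = text.Split((char[])null, StringSplitOptions.RemoveEmptyEntries);
        return Task.FromResult(words.Length);
    }

    // Pipeline level: the one place that decides how to handle failures.
    public static async Task<(List<int> Results, int Failures)> RunAsync(
        IEnumerable<string> posts, CancellationToken ct)
    {
        var results = new List<int>();
        int failures = 0;
        foreach (var post in posts)
        {
            try
            {
                results.Add(await CountWordsAsync(post, ct));
            }
            catch (OperationCanceledException)
            {
                throw; // cancellation stops the whole run
            }
            catch (Exception e)
            {
                failures++; // a bad post is logged and skipped
                Console.WriteLine($"Skipping post: {e.Message}");
            }
        }
        return (results, failures);
    }
}
```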

Canaan components used by NewsAlpha fall into the following categories:

- Aggregators

- Extractors

- Analyzers

- Storage

- Pipelines

Aggregators

Aggregators ingest social news posts from forums and social media sites. Usually (but not always), social news posts can be obtained either from the site's own JSON API or from an RSS or Atom XML feed. The 4chan aggregator uses 4chan's JSON API to retrieve the threads and posts on a particular board. For instance, the code to get the JSON list of current threads for a board looks like:

```csharp
// Uses Newtonsoft.Json.Linq (JArray) to parse the response.
public async Task<IEnumerable<NewsThread>> GetThreads(string board)
{
    using (var op = Begin("Get threads for board {0}", board))
    {
        var r = await HttpClient.GetAsync($"https://a.4cdn.org/{board}/threads.json",
            CancellationToken);
        r.EnsureSuccessStatusCode();
        var json = await r.Content.ReadAsStringAsync();
        // threads.json is an array of pages, each holding a "threads" array.
        var pages = JArray.Parse(json);
        var threads = new List<NewsThread>();
        foreach (dynamic page in pages)
        {
            int p = 1; // Position counts threads within the current page.
            foreach (dynamic thread in page.threads)
            {
                var t = new NewsThread()
                {
                    Source = $"4ch.{board}",
                    No = thread.no,
                    // YY is defined elsewhere in the aggregator class (not shown).
                    Id = ((string)thread.no) + "-" + YY,
                    Position = p++,
                    LastModified = DateTimeOffset.FromUnixTimeSeconds(
                        (long)thread.last_modified).UtcDateTime,
                    ReplyCount = thread.replies
                };
                threads.Add(t);
            }
        }
        op.Complete();
        return threads;
    }
}
```

Since posts are usually returned as HTML, aggregators rely on other Canaan components to extract the text or other content that can be analyzed and stored.
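As a rough illustration of the kind of work an extractor does, the helper below turns a 4chan-style HTML comment into plain text: `<br>` tags become newlines, the remaining tags are stripped, and HTML entities such as `&gt;` are decoded. It is a hypothetical regex-based sketch that is only suitable for simple fragments, not Canaan's extractor; arbitrary HTML should go through a real parser like CsQuery.

```csharp
using System.Net;
using System.Text.RegularExpressions;

public static class HtmlTextSketch
{
    private static readonly Regex LineBreak = new Regex(@"<br\s*/?>", RegexOptions.IgnoreCase);
    private static readonly Regex Tag = new Regex(@"<[^>]+>");

    // Converts a simple HTML fragment (such as a forum post body) to plain text.
    public static string ToPlainText(string html)
    {
        if (string.IsNullOrEmpty(html)) return string.Empty;
        var text = LineBreak.Replace(html, "\n");  // keep the line structure
        text = Tag.Replace(text, "");              // drop all other tags
        return WebUtility.HtmlDecode(text).Trim(); // decode entities only after stripping tags
    }
}
```

Decoding entities after the tags are removed means an escaped `&lt;script&gt;` in a post survives as literal text instead of being mistaken for markup.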

Extractors

Extractors extract text, images, links and other content from HTML. For example, the WebScraper class has the following method for extracting links from an HTML fragment using the CsQuery library:

```csharp
// Requires the CsQuery package (using CsQuery;).
public static Link[] ExtractLinksFromHtmlFrag(string html)
{
    // Assigning a string to a CQ object parses it as HTML.
    CQ dom = html;
    var links = dom["a"];
    if (links != null && links.Count() > 0)
    {
        return links.Select(l => new Link()
        {
            // Keep the href as a Uri if it parses; otherwise leave it null.
            Uri = l.HasAttribute("href") &&
                Uri.TryCreate(l.GetAttribute("href"), UriKind.RelativeOrAbsolute, out Uri u)
                    ? u : null,
            HtmlAttributes = l.Attributes.ToDictionary(a => a.Key, a => a.Value),
            InnerHtml = l.InnerHTML,
            InnerText = l.InnerText
        }).ToArray();
    }
    else
    {
        return Array.Empty<Link>();
    }
}
```

Text and links extracted from social news posts are then processed and enriched by the analyzer components. In future, extractors for images and documents like PDFs will be added.
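Because `ExtractLinksFromHtmlFrag` parses `href` values with `UriKind.RelativeOrAbsolute`, relative links stay relative. A consumer would probably want to resolve them against the URL of the page they came from before storing or following them. A minimal sketch using only `System.Uri` (the `ResolveLink` helper is hypothetical); one caveat worth testing is that on Linux and macOS, .NET may parse a root-relative href such as `/pol/` as an absolute `file://` URI, which this helper would then pass through unchanged.

```csharp
using System;

public static class LinkSketch
{
    // Resolves a possibly relative link against the page it was found on.
    // Absolute links are returned unchanged; a null link stays null.
    public static Uri ResolveLink(Uri pageUri, Uri link)
    {
        if (link == null) return null;
        return link.IsAbsoluteUri ? link : new Uri(pageUri, link);
    }
}
```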

Analyzers

Analyzers perform tasks like semantic tagging, entity extraction, intent detection, sentiment analysis and other NLP and NLU activities on the text of social news posts. NewsAlpha currently uses Azure's Language Understanding (LUIS) service.

Using the LUIS web interface, an NLU model is trained with sentences that are likely to indicate threats (together with sentences that are likely not threats). The model also recognizes entities like dates and geographic locations. The model is published to an HTTP endpoint that can be accessed with the Azure LUIS SDK. For example, the constructor for the AzureLUIS class looks like:

```csharp
// Uses Microsoft.Azure.CognitiveServices.Language.LUIS.Runtime.
public AzureLUIS(string endpointUrl, string authKey,
    string appId, CancellationToken ct) : base(ct)
{
    EndpointUrl = endpointUrl ?? throw new ArgumentNullException(nameof(endpointUrl));
    AuthKey = authKey ?? throw new ArgumentNullException(nameof(authKey));
    AppId = appId ?? throw new ArgumentNullException(nameof(appId));
    var credentials = new ApiKeyServiceClientCredentials(AuthKey);
    Client = new LUISRuntimeClient(credentials,
        new System.Net.Http.DelegatingHandler[] { });
    Client.Endpoint = EndpointUrl;
    Initialized = true;
}
```

and the model is applied to the text of each social news post:

```csharp
public async Task<Post> GetPredictionForPost(Post post)
{
    var prediction = new Prediction(Client);
    // LUIS queries are limited to 500 characters, so longer posts are truncated.
    var query = post.Text.Length <= 500 ? post.Text : post.Text.Substring(0, 500);
    var result = await prediction.ResolveAsync(AppId, query);
    // Record the text of every entity LUIS recognized.
    var entities = new List<string>();
    foreach (var e in result.Entities)
    {
        entities.Add(e.Entity);
    }
    post.Entities = entities;
    // Only annotate the post when "threat" is the top-scoring intent.
    if (result.TopScoringIntent != null &&
        string.Equals(result.TopScoringIntent.Intent, "threat", StringComparison.OrdinalIgnoreCase))
    {
        post.ThreatIntent = result.TopScoringIntent.Score.Value;
    }
    return post;
}
```

For posts where the "threat" intent scores higher than the "None" intent (that is, where "threat" is the top-scoring intent), the intent score is added to the post as an annotation, i.e. an additional property.
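The annotation rule can be sketched independently of the LUIS SDK. Assuming the intent scores arrive as a map from intent name to score (a simplification of the LUIS response object), a post receives a threat score only when "threat" is the top-scoring intent, which in a model with just "threat" and "None" is the same as outscoring "None". `ThreatAnnotation.GetThreatScore` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ThreatAnnotation
{
    // Returns the "threat" score if it is the top-scoring intent, otherwise null.
    // Intent names are compared case-insensitively.
    public static double? GetThreatScore(IReadOnlyDictionary<string, double> intentScores)
    {
        if (intentScores == null || intentScores.Count == 0) return null;
        var top = intentScores.OrderByDescending(kv => kv.Value).First();
        return string.Equals(top.Key, "threat", StringComparison.OrdinalIgnoreCase)
            ? top.Value
            : (double?)null;
    }
}
```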

LUIS is a "no-code" NLU service that is rated as one of the most accurate compared with other online and offline NLU services like IBM Watson and AI.ai. Although you can't use code to create and tweak your model, the luis.ai web console provides everything you need to train it, including pre-built entities that don't require additional training.

One of the best features of LUIS is the ability to use utterances received at the HTTP endpoint in real time to train your model. LUIS lets you update, retrain and deploy your model without having to go offline at any time. But in spite of its accuracy and flexibility, the major downside of LUIS is its cost. At NewsAlpha's scale, where hundreds of utterances need to be analyzed every minute, LUIS becomes expensive. Future development will look at using offline NLU libraries like Snips NLU for entity and intent extraction.
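To put "hundreds of utterances every minute" in perspective: assuming a steady 300 utterances per minute (an illustrative figure, not a measurement), a 30-day month comes to almost 13 million LUIS requests.

$$
300 \times 60 \times 24 \times 30 = 12\,960\,000 \ \text{requests per month}
$$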

Storage

Storage components use different kinds of databases and other storage to store and retrieve news content, metadata and annotations. The CosmosDB storage component uses the Azure CosmosDB SDK to create and retrieve entities. For example, the method for retrieving a set of entities from a CosmosDB container looks like:

```csharp
public async Task<IEnumerable<T>> GetAsync<T>(string containerId, string partitionKey,
    string query, Dictionary<string, object> parameters)
{
    ThrowIfNotInitialized();
    var container = Client.GetContainer(DatabaseId, containerId);
    // Build a parameterized query, e.g. "SELECT * FROM c WHERE c.id = @id".
    var q = new QueryDefinition(query);
    if (parameters != null)
    {
        foreach (var p in parameters)
        {
            q = q.WithParameter(p.Key, p.Value);
        }
    }
    // Restrict the query to one partition and page through all the results.
    var r = container.GetItemQueryIterator<T>(q,
        requestOptions: new QueryRequestOptions()
        {
            PartitionKey = new PartitionKey(partitionKey)
        });
    var items = new List<T>();
    while (r.HasMoreResults)
    {
        var page = await r.ReadNextAsync(CancellationToken);
        items.AddRange(page.Resource);
    }
    return items;
}
```

NewsAlpha uses CosmosDB to store social news posts and threads together with the metadata and annotations added by analyzers. For example, the 4chan thread mentioned in the Media Matters article is stored as a JSON document in the threads container of the socialnews database.

The socialnews CosmosDB database currently holds about 2M social news posts and provides good query performance at low cost. Storage components for other databases like SQL Server and SQLite will be added in the future.

Indexes

Social news posts and their annotations stored in CosmosDB are indexed via an Azure Search service.

Azure Search is a managed cloud search service that uses the open-source Apache Lucene library for full-text search. Using the Azure Search web interface, you specify which fields from your data source should be indexed.

Currently, we have about 1.5M posts in our 4Chan index dating from around the 7th of September. The NewsAlpha search indexes allow users to run complex queries and filters over these posts with a very quick response time.
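An Azure Search index can also be queried directly over its REST API with `GET https://<service>.search.windows.net/indexes/<index>/docs`. The sketch below only builds such a query URL. The service name, the index name, the `ThreatIntent` field (which would have to be marked filterable in the index definition) and the `2019-05-06` API version are assumptions, and a real request would also need an `api-key` header.

```csharp
using System;
using System.Collections.Generic;

public static class SearchQuerySketch
{
    // Builds a GET URL for the Azure Search "Search Documents" REST operation.
    public static string BuildSearchUrl(string service, string index, string search,
        string filter = null, int top = 50, string apiVersion = "2019-05-06")
    {
        var query = new List<string>
        {
            "api-version=" + Uri.EscapeDataString(apiVersion),
            "search=" + Uri.EscapeDataString(search),
            "$top=" + top
        };
        // OData filter expression, e.g. "ThreatIntent gt 0.5".
        if (!string.IsNullOrEmpty(filter))
            query.Add("$filter=" + Uri.EscapeDataString(filter));
        var queryString = string.Join("&", query);
        return $"https://{service}.search.windows.net/indexes/{index}/docs?{queryString}";
    }
}
```

For example, `BuildSearchUrl("newsalpha", "4chan-posts", "attack", "ThreatIntent gt 0.5", 10)` combines a full-text term with a filter on the annotation score, which is the kind of query the NewsAlpha frontends issue.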

Pipelines

A pipeline is a high-level wiring together of Canaan components. For example, the FourChanAzure pipeline ingests threads and posts for a given 4Chan board using the FourChan aggregator, adds annotations for entities and threat intent from the AzureLUIS analyzer, and then stores the data in the socialnews CosmosDB database where it will be indexed by Azure Search and can then be searched via the NewsAlpha CLI. The GabAzure pipeline does the same for Gab posts. The Update method of the GabAzure pipeline looks like:

```csharp
public async Task Update()
{
    ThrowIfNotInitialized();
    Info("Listening to Gab live stream for 100 seconds.");
    var posts = await Aggregator.GetUpdates(100);
    // NLU annotation is switched on by any non-empty value of this setting.
    if (!string.IsNullOrEmpty(Config("CognitiveServices:EnableNLU")))
    {
        using (var op = Begin("Get intents for {0} posts from Azure LUIS",
            posts.Count()))
        {
            foreach (var post in posts)
            {
                await NLU.GetPredictionForPost(post);
                if (post.Entities.Count > 0)
                {
                    Info("Detected {0} entities in post {1}.",
                        post.Entities.Count, post.Id);
                }
                if (post.ThreatIntent > 0.0)
                {
                    Info("Detected threat intent {0:0.00} in post {1}.",
                        post.ThreatIntent, post.Id);
                }
            }
            op.Complete();
        }
    }
    using (var op = Begin("Insert {0} posts into container {1} in database {2}",
        posts.Count(), "posts", "socialnews"))
    {
        // Insert four posts at a time into the "posts" container, partition "gab".
        foreach (var b in posts.Batch(4))
        {
            await Task.WhenAll(b.Select(p => Db.CreateAsync("posts", "gab", p)));
        }
        op.Complete();
    }
}
```
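`posts.Batch(4)` caps the number of concurrent inserts at four, so CosmosDB is not hit with every post at once. `Batch` is not part of the .NET base class library (MoreLINQ provides one, and .NET 6 added `Enumerable.Chunk`), so here is a minimal stand-in together with the batched insert loop. `BatchSketch` and its `insertAsync` delegate, which takes the place of `Db.CreateAsync`, are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class BatchSketch
{
    // Splits a sequence into consecutive batches of at most `size` items.
    // Because this is an iterator, the size check runs on first enumeration.
    public static IEnumerable<List<T>> Batch<T>(this IEnumerable<T> source, int size)
    {
        if (size <= 0) throw new ArgumentOutOfRangeException(nameof(size));
        var batch = new List<T>(size);
        foreach (var item in source)
        {
            batch.Add(item);
            if (batch.Count == size)
            {
                yield return batch;
                batch = new List<T>(size);
            }
        }
        if (batch.Count > 0) yield return batch;
    }

    // Inserts items with at most `size` requests in flight at any time.
    public static async Task InsertInBatchesAsync<T>(IEnumerable<T> items, int size,
        Func<T, Task> insertAsync)
    {
        foreach (var batch in items.Batch(size))
            await Task.WhenAll(batch.Select(insertAsync));
    }
}
```

Waiting for a whole batch before starting the next is simple but leaves slots idle while the slowest insert finishes; a `SemaphoreSlim` would keep four requests in flight continuously, at the cost of a little more code.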

NewsAlpha's aggregators run as scheduled (timer-triggered) Azure Functions, meaning they are triggered on a set schedule (say, every five minutes) rather than by HTTP requests. Using Azure Functions eliminates the need for a backend framework like ASP.NET Core and greatly simplifies both the implementation and the testing of aggregators.
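Azure Functions timer triggers take a six-field NCRONTAB schedule ({second} {minute} {hour} {day} {month} {day-of-week}), so "every five minutes" is written `0 */5 * * * *`. To illustrate what that schedule means, here is a plain C# helper that computes the next run time strictly after a given UTC time. It is a hypothetical sketch; the Functions runtime does its own scheduling.

```csharp
using System;

public static class ScheduleSketch
{
    // Next occurrence of "0 */5 * * * *": the first time strictly after `now`
    // whose minute is a multiple of 5 and whose second is 0.
    public static DateTime NextFiveMinuteRun(DateTime now)
    {
        var interval = TimeSpan.FromMinutes(5);
        // Day boundaries are whole multiples of five minutes in ticks,
        // so rounding down to the interval lands on :00, :05, :10, ...
        long ticksPastBoundary = now.Ticks % interval.Ticks;
        return new DateTime(now.Ticks - ticksPastBoundary + interval.Ticks, now.Kind);
    }
}
```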

Logging

Logging is a critical part of cloud apps and is often the only practical way to monitor services that run on remote infrastructure. Canaan has dedicated components for logging, and NewsAlpha aggregators rely on the logging infrastructure of Azure Functions. We can use Canaan's Serilog logger component with a Serilog event sink configured to write to the TraceWriter available to each Azure Function:

```csharp
// `log` is the TraceWriter that the Azure Functions runtime passes to the function.
Serilog.Core.Logger logger = new LoggerConfiguration()
    .WriteTo.Console(Serilog.Events.LogEventLevel.Debug)
    .MinimumLevel.Debug()
    .Enrich.FromLogContext()
    .WriteTo.TraceWriter(log)
    .CreateLogger();
Api.SetLogger(new SerilogLogger(logger));
```

With this configuration, NewsAlpha's aggregators log all events through the Azure Functions logging infrastructure.

Interfaces

NewsAlpha currently has a CLI, which can be downloaded from the releases page of the GitHub repo.

Although CLIs are faster to use and more responsive than web interfaces, a web interface for querying the NewsAlpha indexes will be developed to reach the broadest audience.

Performance

The index itself performs well: querying an index of over 1M 4Chan posts takes no more than a few seconds, and is even faster once the index server is warmed up.

But as seen in the demo video, the model isn't yet very good at detecting actual threats, due to a lack of training examples and the wide variance in what people say on forums. NLU models typically require hundreds of examples or more to perform well, especially over such a wide variance of utterances.

Conclusion

NewsAlpha is still at the alpha stage of development, but both the frontend and the backend are functional and usable, and I believe the basic design is sound. The major need right now is an offline NLU framework for threat-intent detection, as I could not afford to run the LUIS service for more than a few days. I plan to continue work on NewsAlpha and Canaan and to expand into indexing other forums and social media sites like Reddit's /The_Donald/ and Twitter.

History

- First draft submitted

- Corrected typos

- Updated content

- Formatting fixes and typos