Introduction

This is the fourth tutorial I am writing this year. For this one, I wanted to research one of my favorite subjects: full-text search engines. The most commonly used one is Apache Lucene. I have used it before and wrote a tutorial about using it with Hibernate. This time, I want to explore it on its own, without mixing in other technologies. I will build a simple Java console application that performs three functions:

- Index some documents

- Run a full-text search to find the target documents

- Get a document by its unique identifier

The program also performs some miscellaneous functions, such as deleting all documents from the index or deleting only specific documents from the index.

The program uses a file system directory as the index repository. The version of Apache Lucene used here is 8.2.0, and the lucene-core library is the only Lucene dependency needed to get all of this to work.

Background

Working with Lucene may seem complicated, but it is quite simple if you compare it with a relational database. Let me lay out some terminology. First, there is the concept of an index. An index is like a database: it can store a lot of documents. A document is like a row in a table, and just as a row has one or more columns, a document has one or more fields. These fields play the role of the columns in a relational database table.

So, adding a document is like inserting a row into a table, and finding documents in an index is like querying a table for the rows that match some criteria. When you query a table in a relational database, you specify the criteria against its columns. Finding documents in an index works the same way: you specify search terms against the fields of the documents.

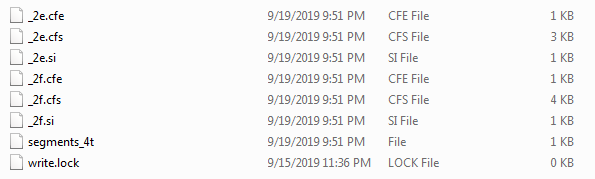

For this sample application, I will use the file system to store the document index. After documents are added into an index, you will see that the directory looks like this:

Let's start with how indexing a document works.

Indexing a Document

In order to perform a full-text search, the first thing you have to do is add some documents into the index. The Apache Lucene library provides two key types for this: Document and IndexableField. A document contains multiple indexable fields, and once a document with its fields has been created, it can be added into the full-text search index. IndexableField is an interface; the concrete field classes that implement it (through the Field base class) include TextField, StringField, StoredField, IntPoint, FloatPoint, IntRange, FloatRange, and many others. For this tutorial, I mainly use TextField and StringField, plus one StoredField for the document date.

The reason there are so many field types is that different types of values need to be analyzed differently, yet they can still be added into the same searchable index as part of a single document. The difference between TextField and StringField is that the value of a TextField is broken into words (tokens). In English, a sentence consists of words separated by spaces and punctuation marks. If a sentence is stored in a TextField, all the words are extracted (and, with the StandardAnalyzer, lowercased), and each one becomes a searchable token; Lucene associates the document with all of these words. If the sentence is stored in a StringField, the entire sentence is treated as one token, so only an exact match of the whole value finds the document. Numeric fields such as IntPoint treat their values as numbers, so they can be queried with exact matches, greater-than, less-than, and other range-based comparisons to locate documents.
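To make the TextField/StringField difference concrete, here is a minimal sketch, assuming lucene-core 8.x on the classpath. It indexes the same value once into a TextField and once into a StringField in an in-memory ByteBuffersDirectory, then counts hits for a few TermQuery lookups. The class name and the TEXT and EXACT field names are made up for this illustration. A TermQuery is not analyzed, so it has to match an indexed token exactly.

```java
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

public class TextVsStringFieldDemo
{
    // Builds an in-memory index with one document holding the same value in two fields.
    static Directory buildIndex(String value) throws IOException
    {
        Directory dir = new ByteBuffersDirectory();
        try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer())))
        {
            Document doc = new Document();
            doc.add(new TextField("TEXT", value, Field.Store.YES));    // analyzed into lowercase words
            doc.add(new StringField("EXACT", value, Field.Store.YES)); // indexed as one unchanged token
            writer.addDocument(doc);
        } // close() commits by default
        return dir;
    }

    // Counts documents whose field contains exactly this token (a TermQuery is not analyzed).
    static int countHits(Directory dir, String field, String token) throws IOException
    {
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            return new IndexSearcher(reader).count(new TermQuery(new Term(field, token)));
        }
    }

    public static void main(String[] args) throws IOException
    {
        Directory dir = buildIndex("Index File Sample");
        System.out.println(countHits(dir, "TEXT", "file"));               // 1: "file" is one of the tokens
        System.out.println(countHits(dir, "TEXT", "File"));               // 0: the tokens were lowercased
        System.out.println(countHits(dir, "EXACT", "file"));              // 0: no word-level tokens
        System.out.println(countHits(dir, "EXACT", "Index File Sample")); // 1: the whole value matches
    }
}
```

The same idea explains the term queries used for the CATEGORY and AUTHOREMAIL fields later in the search code.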

Let's take a look at how to create an indexable document. Here is the code:

```java
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StoredField;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexableField;

public Document createIndexDocument(IndexableDocument docToAdd)
{
    Document retVal = new Document();

    // StringField: indexed as one untokenized value, so only exact matches find it
    IndexableField docIdField = new StringField("DOCID",
        docToAdd.getDocumentId(),
        Field.Store.YES);

    // TextField: analyzed into individual word tokens
    IndexableField titleField = new TextField("TITLE",
        docToAdd.getTitle(),
        Field.Store.YES);

    // Searchable, but the original text is not kept in the index
    IndexableField contentField = new TextField("CONTENT",
        docToAdd.getContent(),
        Field.Store.NO);

    IndexableField keywordsField = new TextField("KEYWORDS",
        docToAdd.getKeywords(),
        Field.Store.YES);

    IndexableField categoryField = new StringField("CATEGORY",
        docToAdd.getCategory(),
        Field.Store.YES);

    IndexableField authorNameField = new TextField("AUTHOR",
        docToAdd.getAuthorName(),
        Field.Store.YES);

    // StoredField: kept for retrieval only; it is not indexed, so it cannot be searched
    long createTime = docToAdd.getDocumentDate().getTime();
    IndexableField documentTimeField = new StoredField("DOCTIME", createTime);

    IndexableField emailField = new StringField("AUTHOREMAIL",
        docToAdd.getAuthorEmail(),
        Field.Store.YES);

    retVal.add(docIdField);
    retVal.add(titleField);
    retVal.add(contentField);
    retVal.add(keywordsField);
    retVal.add(categoryField);
    retVal.add(authorNameField);
    retVal.add(documentTimeField);
    retVal.add(emailField);

    return retVal;
}
```

The above code snippet is not hard to understand. It creates a Document object, then creates a number of IndexableField objects and adds them all to the Document, which is then returned from the method. This method can be found in the file "FileBasedDocumentIndexer.java".

In the above code snippet, I have used TextField and StringField. The constructors of these two types take three parameters. The first is the field name, the second is the value of the field, and the last is an enum value (Field.Store.YES or Field.Store.NO) that indicates whether to store the value in the index or simply index it and throw the original value away. The difference is that when you retrieve a document, you get back the values of its stored fields only. The DOCTIME field is a different case: a StoredField is stored but not indexed, so its value can be retrieved but not searched.
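Here is a small sketch of what Field.Store changes in practice, again assuming lucene-core 8.x and an in-memory ByteBuffersDirectory (the class name and the sample values are illustrative only). The CONTENT field is indexed with Field.Store.NO, so a search on one of its words still finds the document, but the loaded document has no CONTENT value.

```java
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

public class StoredFieldDemo
{
    // Indexes one document, searches CONTENT for a word, and returns {TITLE, CONTENT}
    // as read back from the index, or null if nothing matched.
    static String[] searchAndLoad(String word) throws IOException
    {
        Directory dir = new ByteBuffersDirectory();
        try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer())))
        {
            Document doc = new Document();
            doc.add(new TextField("TITLE", "Medical gloves", Field.Store.YES));
            doc.add(new TextField("CONTENT", "Surgical gloves are generally sterile.", Field.Store.NO));
            writer.addDocument(doc);
        }
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            IndexSearcher searcher = new IndexSearcher(reader);
            TopDocs hits = searcher.search(new TermQuery(new Term("CONTENT", word)), 1);
            if (hits.scoreDocs.length == 0)
            {
                return null;
            }
            // Only stored fields are present on a document loaded from the index
            Document found = searcher.doc(hits.scoreDocs[0].doc);
            return new String[] { found.get("TITLE"), found.get("CONTENT") };
        }
    }

    public static void main(String[] args) throws IOException
    {
        String[] values = searchAndLoad("sterile");
        System.out.println("TITLE   = " + values[0]); // Medical gloves
        System.out.println("CONTENT = " + values[1]); // null: indexed, but not stored
    }
}
```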

The next step is the actual indexing of the document. Here is the code:

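```java
import java.nio.file.Paths;

import org.apache.lucene.document.Document;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;

String indexDirectory;

...

public void indexDocument(Document docToAdd) throws Exception
{
    IndexWriter writer = null;
    try
    {
        Directory indexWriteToDir =
            FSDirectory.open(Paths.get(indexDirectory));
        // The no-argument IndexWriterConfig uses StandardAnalyzer
        writer = new IndexWriter(indexWriteToDir, new IndexWriterConfig());
        writer.addDocument(docToAdd);
        writer.flush();
        writer.commit();
    }
    finally
    {
        // Always release the writer (and its index write lock), even on failure
        if (writer != null)
        {
            writer.close();
        }
    }
}
```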

The above code snippet uses the FSDirectory class's static method open() to get a reference to the index directory, which is an object of type Directory (a Lucene type). Next, I instantiate an IndexWriter object. Its constructor takes two arguments: the first is the Directory object, and the second is a configuration object of type IndexWriterConfig. I use the default configuration, which uses the StandardAnalyzer. The StandardAnalyzer splits text on Unicode word boundaries and lowercases the words, which works well for English text. There are many other types of analyzers; you can add an additional jar (for example, lucene-analyzers-common) to use them, or implement your own analyzer if you want to. For the sake of simplicity, this tutorial uses only the StandardAnalyzer.
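If you do want your own analyzer, one way to build it (a sketch, assuming lucene-core 8.x, where StandardTokenizer and LowerCaseFilter both live in the core jar) is to subclass Analyzer and chain a tokenizer with filters. The class and method names below are hypothetical. Whatever analyzer you choose, the same one should be used for indexing (through IndexWriterConfig) and for building queries, otherwise the indexed tokens and the query tokens may not line up.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.LowerCaseFilter;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.standard.StandardTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.index.IndexWriterConfig;

public class CustomAnalyzerDemo
{
    // A hand-built analyzer: StandardTokenizer splits text into words, LowerCaseFilter lowercases them.
    static Analyzer lowercaseWordsAnalyzer()
    {
        return new Analyzer()
        {
            @Override
            protected TokenStreamComponents createComponents(String fieldName)
            {
                Tokenizer source = new StandardTokenizer();
                TokenStream result = new LowerCaseFilter(source);
                return new TokenStreamComponents(source, result);
            }
        };
    }

    // Runs an analyzer over some text and collects the tokens it produces.
    static List<String> tokens(Analyzer analyzer, String text) throws IOException
    {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("CONTENT", text))
        {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken())
            {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    public static void main(String[] args) throws IOException
    {
        Analyzer analyzer = lowercaseWordsAnalyzer();
        // Punctuation is dropped and the words are lowercased
        System.out.println(tokens(analyzer, "Medical Gloves: examination, surgical"));
        // Hand the analyzer to the writer instead of relying on the default StandardAnalyzer
        IndexWriterConfig config = new IndexWriterConfig(analyzer);
        System.out.println(config.getAnalyzer() == analyzer);
    }
}
```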

In indexDocument(), the next step is the call to the IndexWriter's addDocument() method, which adds the document into the full-text search index; this is where the document indexing happens. It is followed by calls to flush() and commit() to make sure the changes are fully committed to the index. Strictly speaking, commit() alone would be enough, because it flushes any pending changes before committing them.

I wrapped the whole operation in a try-finally block without a catch block, so that any exception is passed on to the caller to handle. The finally block closes the writer to release its resources. If you run the sample project and this method finishes, you will see something similar to the screenshot above.
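For comparison, here is a sketch of the same indexing step written with Java 7 try-with-resources, which closes both the IndexWriter and the Directory (the original method never closes the Directory). It is an alternative under my own assumptions, not the sample project's code: the class name, the Path parameter, and the countDocuments() helper are hypothetical, lucene-core 8.x is assumed, and the demo writes into a fresh temporary folder.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;

public class TryWithResourcesIndexer
{
    // Same steps as indexDocument(); resources are closed in reverse order: writer, then directory.
    static void indexDocument(Path indexDirectory, Document docToAdd) throws IOException
    {
        try (Directory dir = FSDirectory.open(indexDirectory);
             IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig()))
        {
            writer.addDocument(docToAdd);
            writer.commit(); // commit() flushes pending changes itself
        }
    }

    // Opens a short-lived reader to count the live documents in the index.
    static int countDocuments(Path indexDirectory) throws IOException
    {
        try (Directory dir = FSDirectory.open(indexDirectory);
             DirectoryReader reader = DirectoryReader.open(dir))
        {
            return reader.numDocs();
        }
    }

    public static void main(String[] args) throws IOException
    {
        Path tmp = Files.createTempDirectory("lucene-demo");
        Document doc = new Document();
        doc.add(new StringField("DOCID", "77bbd895bb6f4c16bb637a44d8ea6f1e", Field.Store.YES));
        indexDocument(tmp, doc);
        indexDocument(tmp, doc);
        System.out.println(countDocuments(tmp)); // 2: addDocument() does not check DOCID for duplicates
    }
}
```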

Now that we know how to index a document, next we will take a look at how to search for this document.

Full Text Search to Locate Documents

Before I present the code, I would like to explain my design intention. I want to search all fields of the documents in the index. If any field matches the full-text search criteria, the document is considered found.

Here is the code:

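```java
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexableField;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.BooleanClause.Occur;
import org.apache.lucene.search.BooleanQuery;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.QueryBuilder;

public List<FoundDocument> searchForDocument(String searchVal)
{
    List<FoundDocument> retVal = new ArrayList<FoundDocument>();
    // try-with-resources closes the reader and the directory once the search is done
    try (Directory dirOfIndexes = FSDirectory.open(Paths.get(indexDirectory));
         DirectoryReader reader = DirectoryReader.open(dirOfIndexes))
    {
        IndexSearcher searcher = new IndexSearcher(reader);

        // TextFields: analyze the search value and match it as a phrase
        QueryBuilder bldr = new QueryBuilder(new StandardAnalyzer());
        Query q1 = bldr.createPhraseQuery("TITLE", searchVal);
        Query q2 = bldr.createPhraseQuery("KEYWORDS", searchVal);
        Query q3 = bldr.createPhraseQuery("CONTENT", searchVal);
        // StringFields: match the whole, unanalyzed value exactly
        Query q4 = new TermQuery(new Term("CATEGORY", searchVal));
        Query q5 = bldr.createPhraseQuery("AUTHOR", searchVal);
        Query q6 = new TermQuery(new Term("AUTHOREMAIL", searchVal));

        // SHOULD on every clause: a match in any one field is enough (logical OR)
        BooleanQuery.Builder chainQryBldr = new BooleanQuery.Builder();
        chainQryBldr.add(q1, Occur.SHOULD);
        chainQryBldr.add(q2, Occur.SHOULD);
        chainQryBldr.add(q3, Occur.SHOULD);
        chainQryBldr.add(q4, Occur.SHOULD);
        chainQryBldr.add(q5, Occur.SHOULD);
        chainQryBldr.add(q6, Occur.SHOULD);
        BooleanQuery finalQry = chainQryBldr.build();

        TopDocs allFound = searcher.search(finalQry, 100);
        if (allFound.scoreDocs != null)
        {
            for (ScoreDoc doc : allFound.scoreDocs)
            {
                System.out.println("Score: " + doc.score);
                int docidx = doc.doc;
                Document docRetrieved = searcher.doc(docidx);
                if (docRetrieved != null)
                {
                    FoundDocument docToAdd = new FoundDocument();
                    IndexableField field = docRetrieved.getField("TITLE");
                    if (field != null)
                    {
                        docToAdd.setTitle(field.stringValue());
                    }
                    field = docRetrieved.getField("DOCID");
                    if (field != null)
                    {
                        docToAdd.setDocumentId(field.stringValue());
                    }
                    field = docRetrieved.getField("KEYWORDS");
                    if (field != null)
                    {
                        docToAdd.setKeywords(field.stringValue());
                    }
                    field = docRetrieved.getField("CATEGORY");
                    if (field != null)
                    {
                        docToAdd.setCategory(field.stringValue());
                    }
                    if (docToAdd.validate())
                    {
                        retVal.add(docToAdd);
                    }
                }
            }
        }
    }
    catch (Exception ex)
    {
        ex.printStackTrace();
    }
    return retVal;
}
```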

This code snippet can be split into several parts. The first part opens the directory of the full-text search index:

```java
Directory dirOfIndexes =
    FSDirectory.open(Paths.get(indexDirectory));
IndexSearcher searcher = new IndexSearcher(DirectoryReader.open(dirOfIndexes));
```

This part is similar to the way the directory is opened for adding a document to the index, but instead of an IndexWriter, I use an IndexSearcher. The searcher itself does not use an analyzer; the analyzer comes into play when the query is built. For that, the QueryBuilder is given a StandardAnalyzer, the same analyzer the default IndexWriterConfig used at indexing time, so the search text is tokenized the same way as the indexed text.

Next, I need to create a query. The query specifies that if any field contains the phrase passed in as the method's parameter, the document is considered found. Since I have multiple fields, I create the query like this:

```java
QueryBuilder bldr = new QueryBuilder(new StandardAnalyzer());
Query q1 = bldr.createPhraseQuery("TITLE", searchVal);
Query q2 = bldr.createPhraseQuery("KEYWORDS", searchVal);
Query q3 = bldr.createPhraseQuery("CONTENT", searchVal);
Query q4 = new TermQuery(new Term("CATEGORY", searchVal));
Query q5 = bldr.createPhraseQuery("AUTHOR", searchVal);
Query q6 = new TermQuery(new Term("AUTHOREMAIL", searchVal));

BooleanQuery.Builder chainQryBldr = new BooleanQuery.Builder();
chainQryBldr.add(q1, Occur.SHOULD);
chainQryBldr.add(q2, Occur.SHOULD);
chainQryBldr.add(q3, Occur.SHOULD);
chainQryBldr.add(q4, Occur.SHOULD);
chainQryBldr.add(q5, Occur.SHOULD);
chainQryBldr.add(q6, Occur.SHOULD);
BooleanQuery finalQry = chainQryBldr.build();
```

The above code snippet first creates six Query objects, one for each searchable field of the document. As you can see, I used two different kinds of Query. The first is a phrase query, which analyzes the input string into tokens and matches documents where those tokens appear consecutively, in the same order, in the field value. The second is a term query, which matches one exact, unanalyzed value. The reason for the two types is that a phrase query generally does not work on StringField fields: the analyzer splits and lowercases the input, while a StringField stores the whole value as a single, unmodified token. So I use a term query to match the input search value against the StringField fields. This simple approach is enough to make the query work.
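Because QueryBuilder analyzes the input first, what createPhraseQuery() returns depends on the tokens it gets: a PhraseQuery for several words, a plain TermQuery for a single word, and null when nothing survives analysis (for example, a search string made only of punctuation). A null query cannot be added to a BooleanQuery.Builder, so the sketch below skips such clauses. It assumes lucene-core 8.x and reuses three of the article's field names; addIfPresent() and anyFieldQuery() are hypothetical helpers, not part of the sample project.

```java
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.BooleanClause.Occur;
import org.apache.lucene.search.BooleanQuery;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.util.QueryBuilder;

public class QueryBuilderDemo
{
    static final QueryBuilder BUILDER = new QueryBuilder(new StandardAnalyzer());

    // Hypothetical helper: adds the clause only if the builder actually produced a query.
    static void addIfPresent(BooleanQuery.Builder chain, Query query, Occur occur)
    {
        if (query != null)
        {
            chain.add(query, occur);
        }
    }

    // OR-combines phrase queries on two TextFields with an exact match on a StringField.
    static BooleanQuery anyFieldQuery(String searchVal)
    {
        BooleanQuery.Builder chain = new BooleanQuery.Builder();
        addIfPresent(chain, BUILDER.createPhraseQuery("TITLE", searchVal), Occur.SHOULD);
        addIfPresent(chain, BUILDER.createPhraseQuery("CONTENT", searchVal), Occur.SHOULD);
        // A TermQuery is constructed directly, so it is never null
        addIfPresent(chain, new TermQuery(new Term("CATEGORY", searchVal)), Occur.SHOULD);
        return chain.build();
    }

    public static void main(String[] args)
    {
        System.out.println(BUILDER.createPhraseQuery("TITLE", "available as either").getClass().getSimpleName()); // PhraseQuery
        System.out.println(BUILDER.createPhraseQuery("TITLE", "Gloves").getClass().getSimpleName());              // TermQuery
        System.out.println(BUILDER.createPhraseQuery("TITLE", "!!!"));                                            // null
        System.out.println(anyFieldQuery("!!!").clauses().size());             // 1: only the CATEGORY clause
        System.out.println(anyFieldQuery("surgical gloves").clauses().size()); // 3
    }
}
```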

Once I have constructed the queries, I need a master query that combines all six. The logic is: if field #1 matches the input value, or field #2 matches, or field #3 matches, ..., or field #6 matches, then the document should be retrieved. I create such a master query with BooleanQuery.Builder. Once I have created the builder, I add the six queries one by one, each with Occur.SHOULD. Occur.SHOULD behaves like the logical operator "OR". If you want something equivalent to the logical operator "AND", use Occur.MUST instead. For my scenario, Occur.SHOULD is what I need.
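A quick way to see the OR/AND difference is to run both against a tiny index. This sketch (hypothetical class name, in-memory ByteBuffersDirectory, lucene-core 8.x assumed) indexes two titles and combines term queries for "fox" and "dog" with either Occur.SHOULD or Occur.MUST.

```java
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.BooleanClause.Occur;
import org.apache.lucene.search.BooleanQuery;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

public class OccurDemo
{
    // Two documents: one mentions a fox, the other a dog.
    static Directory buildIndex() throws IOException
    {
        Directory dir = new ByteBuffersDirectory();
        try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer())))
        {
            for (String title : new String[] { "quick brown fox", "lazy dog" })
            {
                Document doc = new Document();
                doc.add(new TextField("TITLE", title, Field.Store.YES));
                writer.addDocument(doc);
            }
        }
        return dir;
    }

    // Combines "fox" and "dog" with the given Occur and counts matching documents.
    static int count(Directory dir, Occur occur) throws IOException
    {
        BooleanQuery.Builder chain = new BooleanQuery.Builder();
        chain.add(new TermQuery(new Term("TITLE", "fox")), occur);
        chain.add(new TermQuery(new Term("TITLE", "dog")), occur);
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            return new IndexSearcher(reader).count(chain.build());
        }
    }

    public static void main(String[] args) throws IOException
    {
        Directory dir = buildIndex();
        System.out.println("SHOULD (OR): " + count(dir, Occur.SHOULD)); // 2: either word is enough
        System.out.println("MUST (AND):  " + count(dir, Occur.MUST));   // 0: no title has both words
    }
}
```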

The last line of the query-building snippet, chainQryBldr.build(), constructs the final master query. Then I need to run the query against the index. Here is how to do it:

```java
TopDocs allFound = searcher.search(finalQry, 100);
```

I use the search() method of IndexSearcher to find the most relevant documents. This method takes two parameters: the first is the master query, and the second is the maximum number of documents to return. The returned TopDocs holds the top-scoring matches in its scoreDocs array, ordered from most to least relevant. Each entry is a ScoreDoc holding an integer document number (doc) and a score, which indicates how relevant the document is to the search criteria.
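To illustrate the second parameter of search(), this sketch (hypothetical class name, in-memory index, lucene-core 8.x assumed) indexes three titles that all contain "gloves", asks for at most two hits, and compares that with IndexSearcher.count(), which counts every match.

```java
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

public class TopDocsDemo
{
    static final TermQuery GLOVES = new TermQuery(new Term("TITLE", "gloves"));

    static Directory buildIndex() throws IOException
    {
        Directory dir = new ByteBuffersDirectory();
        try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer())))
        {
            String[] titles = { "gloves", "sterile gloves", "surgical gloves and sterile gowns" };
            for (String title : titles)
            {
                Document doc = new Document();
                doc.add(new TextField("TITLE", title, Field.Store.YES));
                writer.addDocument(doc);
            }
        }
        return dir;
    }

    // Returns at most maxHits matches, best score first.
    static TopDocs top(Directory dir, int maxHits) throws IOException
    {
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            return new IndexSearcher(reader).search(GLOVES, maxHits);
        }
    }

    // Counts all matches, regardless of any limit.
    static int countAll(Directory dir) throws IOException
    {
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            return new IndexSearcher(reader).count(GLOVES);
        }
    }

    public static void main(String[] args) throws IOException
    {
        Directory dir = buildIndex();
        TopDocs found = top(dir, 2);
        for (ScoreDoc sd : found.scoreDocs)
        {
            System.out.println("doc=" + sd.doc + " score=" + sd.score);
        }
        System.out.println("returned " + found.scoreDocs.length + " of " + countAll(dir) + " matches");
    }
}
```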

Now that I have a collection of matching documents, I fetch each one and extract the information I need. Here is the full code:

```java
if (allFound.scoreDocs != null)
{
    for (ScoreDoc doc : allFound.scoreDocs)
    {
        System.out.println("Score: " + doc.score);
        int docidx = doc.doc;
        Document docRetrieved = searcher.doc(docidx);
        if (docRetrieved != null)
        {
            FoundDocument docToAdd = new FoundDocument();
            IndexableField field = docRetrieved.getField("TITLE");
            if (field != null)
            {
                docToAdd.setTitle(field.stringValue());
            }
            field = docRetrieved.getField("DOCID");
            if (field != null)
            {
                docToAdd.setDocumentId(field.stringValue());
            }
            field = docRetrieved.getField("KEYWORDS");
            if (field != null)
            {
                docToAdd.setKeywords(field.stringValue());
            }
            field = docRetrieved.getField("CATEGORY");
            if (field != null)
            {
                docToAdd.setCategory(field.stringValue());
            }
            if (docToAdd.validate())
            {
                retVal.add(docToAdd);
            }
        }
    }
}
```

The above code snippet iterates through the found documents. For each one, I first print the relevance score. Next, I take the integer document number via doc.doc. Finally, I use the searcher object to retrieve the document by that number. Here is the code snippet:

```java
System.out.println("Score: " + doc.score);
int docidx = doc.doc;
Document docRetrieved = searcher.doc(docidx);
```

Once I have retrieved the document, I extract the field values and store them in my own document object. This is the code that does it:

```java
if (docRetrieved != null)
{
    FoundDocument docToAdd = new FoundDocument();
    IndexableField field = docRetrieved.getField("TITLE");
    if (field != null)
    {
        docToAdd.setTitle(field.stringValue());
    }
    field = docRetrieved.getField("DOCID");
    if (field != null)
    {
        docToAdd.setDocumentId(field.stringValue());
    }
    field = docRetrieved.getField("KEYWORDS");
    if (field != null)
    {
        docToAdd.setKeywords(field.stringValue());
    }
    field = docRetrieved.getField("CATEGORY");
    if (field != null)
    {
        docToAdd.setCategory(field.stringValue());
    }
    if (docToAdd.validate())
    {
        retVal.add(docToAdd);
    }
}
```

This is the end of the most exciting part of this tutorial. Next, I will go over some miscellaneous operations.

Some Other Fun Stuff

We all know that in relational databases, each row in a table usually has a unique identifier. When I use Lucene, I do the same: I use one field of the document to store a unique identifier. I use Java's UUID class to create a GUID value, take its string representation, and remove the dash characters. Here is an example of a GUID value I use:

77bbd895bb6f4c16bb637a44d8ea6f1e
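One thing a relational database does for you that Lucene does not: Lucene does not enforce uniqueness on a field like DOCID. Calling addDocument() twice with the same ID stores two documents. IndexWriter.updateDocument(Term, doc) deletes any documents matching the term and then adds the new one, which keeps the ID unique. The sketch below (hypothetical class name, in-memory index, lucene-core 8.x assumed) generates an ID with the same UUID recipe and compares both approaches.

```java
import java.io.IOException;
import java.util.UUID;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

public class DocIdDemo
{
    // Same recipe as the article: a random UUID with the dashes removed (32 lowercase hex characters).
    static String newDocId()
    {
        return UUID.randomUUID().toString().replace("-", "");
    }

    // Indexes two revisions with the same DOCID and returns how many documents remain.
    static int indexTwice(String docId, boolean useUpdate) throws IOException
    {
        Directory dir = new ByteBuffersDirectory();
        try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer())))
        {
            for (int i = 0; i < 2; i++)
            {
                Document doc = new Document();
                doc.add(new StringField("DOCID", docId, Field.Store.YES));
                doc.add(new TextField("TITLE", "revision " + i, Field.Store.YES));
                if (useUpdate)
                {
                    // Deletes documents whose DOCID term matches, then adds this one
                    writer.updateDocument(new Term("DOCID", docId), doc);
                }
                else
                {
                    // No uniqueness check: this simply adds another document
                    writer.addDocument(doc);
                }
            }
        }
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            return reader.numDocs();
        }
    }

    public static void main(String[] args) throws IOException
    {
        String id = newDocId();
        System.out.println("id: " + id);
        System.out.println("addDocument twice:    " + indexTwice(id, false)); // 2
        System.out.println("updateDocument twice: " + indexTwice(id, true));  // 1
    }
}
```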

Assume the field that stores the unique identifier is called "DOCID". Here is how I locate a document with a given ID:

```java
public Document getDocumentById(String docId)
{
    Document retVal = null;
    // try-with-resources closes the reader and the directory when the lookup is done
    try (Directory dirOfIndexes = FSDirectory.open(Paths.get(indexDirectory));
         DirectoryReader reader = DirectoryReader.open(dirOfIndexes))
    {
        IndexSearcher searcher = new IndexSearcher(reader);
        // DOCID is a StringField, so match the exact, unanalyzed value
        Query idQury = new TermQuery(new Term("DOCID", docId));
        TopDocs foundDocs = searcher.search(idQury, 1);
        if (foundDocs != null)
        {
            if (foundDocs.scoreDocs != null && foundDocs.scoreDocs.length > 0)
            {
                System.out.println("Score: " + foundDocs.scoreDocs[0].score);
                // Load the stored fields while the reader is still open
                retVal = searcher.doc(foundDocs.scoreDocs[0].doc);
            }
        }
    }
    catch (Exception ex)
    {
        ex.printStackTrace();
    }
    return retVal;
}
```

This is pretty similar to the search method presented in the previous section. First, I open the Directory that contains the full-text index, then I create an IndexSearcher. Using this searcher, I run a term query that matches the input GUID against just one field, "DOCID". Because DOCID is a StringField, a term query is the natural fit, and it matches the way deleteDocuments() locates documents below. (A phrase query built with the StandardAnalyzer also happens to work for these IDs, because a lowercase, dash-free hex string analyzes into a single unchanged token, but that depends on the ID format.) I ask for at most one document, so this is a one-result-or-nothing query: whatever is found is the document I expect. Once the document is found, I use its integer document number to retrieve it as a Lucene Document.

Next, I would like to discuss two operations that are useful for cleaning up: deleting a single document from the full-text index, and deleting all documents in the index. Both are easy to perform. First, let's check how to delete a single document from the index. Here is the code:

```java
public void deleteDocument(String docId) throws Exception
{
    IndexWriter writer = null;
    try
    {
        Directory indexWriteToDir =
            FSDirectory.open(Paths.get(indexDirectory));
        writer = new IndexWriter(indexWriteToDir, new IndexWriterConfig());
        // Removes every document whose DOCID term equals docId exactly
        writer.deleteDocuments(new Term("DOCID", docId));
        writer.flush();
        writer.commit();
    }
    finally
    {
        if (writer != null)
        {
            writer.close();
        }
    }
}
```

This code snippet shows why it is important to have a unique identifier for each document. It calls the IndexWriter's deleteDocuments() method, which uses a Term object to find all the documents that match the term and removes all of them. In the above code, I again use the "DOCID" field to find the document that matches the unique identifier. The method is not limited to deleting one document: if the Term matches multiple documents, all of them are removed. Note that a Term is matched exactly, without analysis, which suits a StringField such as DOCID.
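To check that one Term can remove several documents, this sketch (hypothetical class name, in-memory index, lucene-core 8.x assumed) adds three documents whose CATEGORY is a StringField, two of them sharing the value "Index File Sample", and deletes by that exact value.

```java
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

public class DeleteByTermDemo
{
    // Adds three documents, deletes by one CATEGORY value, and returns the remaining count.
    static int remainingAfterDelete(String categoryToDelete) throws IOException
    {
        Directory dir = new ByteBuffersDirectory();
        try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(new StandardAnalyzer())))
        {
            String[] categories = { "Index File Sample", "Index File Sample", "Once upon a Time" };
            for (String category : categories)
            {
                Document doc = new Document();
                doc.add(new StringField("CATEGORY", category, Field.Store.YES));
                writer.addDocument(doc);
            }
            // Every document whose CATEGORY token equals the value is removed
            writer.deleteDocuments(new Term("CATEGORY", categoryToDelete));
            writer.commit();
        }
        try (DirectoryReader reader = DirectoryReader.open(dir))
        {
            return reader.numDocs();
        }
    }

    public static void main(String[] args) throws IOException
    {
        System.out.println(remainingAfterDelete("Index File Sample")); // 1
        System.out.println(remainingAfterDelete("index file sample")); // 3: exact match only
    }
}
```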

Similarly, deleting all documents from the index takes just a call to deleteAll(). Here is how it can be done:

```java
public void deleteAllIndexes() throws Exception
{
    IndexWriter writer = null;
    try
    {
        Directory indexWriteToDir =
            FSDirectory.open(Paths.get(indexDirectory));
        writer = new IndexWriter(indexWriteToDir, new IndexWriterConfig());
        writer.deleteAll();
        writer.flush();
        writer.commit();
    }
    finally
    {
        if (writer != null)
        {
            writer.close();
        }
    }
}
```

In both methods, I open the directory and create an IndexWriter object with the directory object and the default configuration. Then I call the delete method of the IndexWriter object. Lastly, I flush the IndexWriter and commit the changes.

That is it! These are all the little trinkets needed for basic document indexing and full-text search. They are not much, but they work.

Test Run

The big question now is how to test all of this code. In my sample application, I have a class called IndexingMain. Inside, there is the main entry point and a bunch of helper methods. Let me begin with the method that creates a document. Here it is:

```java
public static IndexableDocument prepareDocForTesting(String docId)
{
    IndexableDocument doc = new IndexableDocument();
    Calendar cal = Calendar.getInstance();
    // Calendar months are zero-based, so this is 2018-09-21 13:13:13
    cal.set(2018, 8, 21, 13, 13, 13);
    doc.setDocumentId(docId);
    doc.setAuthorEmail("testuser@lucenetest.com");
    doc.setAuthorName("Lucene Test User");
    doc.setCategory("Index File Sample");
    doc.setContent("There are two main types of medical gloves: "
        + "examination and surgical. Surgical gloves have more "
        + "precise sizing with a better precision and sensitivity "
        + "and are made to a higher standard. Examination gloves "
        + "are available as either sterile or non-sterile, while "
        + "surgical gloves are generally sterile.");
    doc.setDocumentDate(cal.getTime());
    doc.setKeywords("Joseph, Brian, Clancy, Connery, Reynolds, Lindsay");
    doc.setTitle("Quick brown fox and the lazy dog");
    return doc;
}
```

IndexableDocument is a document type I created, so I have to convert objects of my type into Apache Lucene Document objects. This is done with the following code snippet, which you can find in the main entry point:

```java
FileBasedDocumentIndexer indexer = new FileBasedDocumentIndexer("c:/DevJunk/Lucene/indexes");
...
Document lucDoc1 = indexer.createIndexDocument(doc1);
indexer.indexDocument(lucDoc1);
```

The last line in the above code snippet indexes the document into the Lucene file index. Now that we can index a document, it is time to see how search works. Here is the helper method:

```java
public static void testFindDocument(String searchTerm)
{
    LuceneDocumentLocator locator = new LuceneDocumentLocator("c:/DevJunk/Lucene/indexes");
    List<FoundDocument> foundDocs = locator.searchForDocument(searchTerm);
    if (foundDocs != null)
    {
        for (FoundDocument doc : foundDocs)
        {
            System.out.println("------------------------------");
            System.out.println("Found document...");
            System.out.println("Document Id: " + doc.getDocumentId());
            System.out.println("Title: " + doc.getTitle());
            System.out.println("Category: " + doc.getCategory());
            System.out.println("Keywords: " + doc.getKeywords());
            System.out.println("------------------------------");
        }
    }
}
```

Here is how this helper method is used in the main entry:

```java
...
System.out.println("********************************");
System.out.println("Search first document");
testFindDocument("available as either");
System.out.println("********************************");
...
```

Lastly, I created a helper method that finds a document by its "DOCID". Here it is:

```java
public static Document testGetDocumentById(String docId)
{
    LuceneDocumentLocator locator = new LuceneDocumentLocator("c:/DevJunk/Lucene/indexes");
    Document retVal = locator.getDocumentById(docId);
    if (retVal != null)
    {
        System.out.println("Get Document by Id [" + docId + "] found.");
    }
    else
    {
        System.out.println("Get Document by Id [" + docId + "] **not** found.");
    }
    return retVal;
}
```

Here is how to use this helper method in a test:

```java
...
testGetDocumentById(id1);
...
```

Here is the main entry point of the sample application:

```java
public static void main(String[] args)
{
    UUID x = UUID.randomUUID();
    String id1 = x.toString();
    id1 = id1.replace("-", "");
    System.out.println("Document #1 with id [" + id1 + "] has been created.");

    x = UUID.randomUUID();
    String id2 = x.toString();
    id2 = id2.replace("-", "");
    System.out.println("Document #2 with id [" + id2 + "] has been created.");

    IndexableDocument doc1 = prepareDocForTesting(id1);
    IndexableDocument doc2 = prepare2ndTestDocument(id2);

    FileBasedDocumentIndexer indexer =
        new FileBasedDocumentIndexer("c:/DevJunk/Lucene/indexes");
    try
    {
        indexer.deleteAllIndexes();

        Document lucDoc1 = indexer.createIndexDocument(doc1);
        indexer.indexDocument(lucDoc1);
        System.out.println("********************************");
        System.out.println("Search first document");
        testFindDocument("available as either");
        System.out.println("********************************");

        Document lucDoc2 = indexer.createIndexDocument(doc2);
        indexer.indexDocument(lucDoc2);
        testGetDocumentById(id1);
        System.out.println("********************************");
        System.out.println("Search second document");
        testFindDocument("coocoobird@moomootease.com");
        System.out.println("********************************");
        testGetDocumentById(id2);

        indexer.deleteAllIndexes();
    }
    catch (Exception ex)
    {
        ex.printStackTrace();
        return;
    }
}
```

The sample application includes an Apache Maven pom.xml. To build the application, just run:

```
mvn clean install
```

If you wish, you can generate Eclipse project files from this Maven pom.xml and then import the project into Eclipse. To create the Eclipse project files, run:

```
mvn eclipse:eclipse
```

When you run the application, you will see output like this:

```
Document #1 with id [ae1541e5051743e5af310bcfb50a19e8] has been created.
Document #2 with id [c1f20e79043d4b40aa2b9f3ac74e287b] has been created.
********************************
Search first document
Score: 0.39229375
------------------------------
Found document...
Document Id: ae1541e5051743e5af310bcfb50a19e8
Title: Quick brown fox and the lazy dog
Category: Index File Sample
Keywords: Joseph, Brian, Clancy, Connery, Reynolds, Lindsay
------------------------------
********************************
Score: 0.3150669
Get Document by Id [ae1541e5051743e5af310bcfb50a19e8] found.
********************************
Search second document
Score: 0.3150669
------------------------------
Found document...
Document Id: c1f20e79043d4b40aa2b9f3ac74e287b
Title: The centre of kingfisher diversity is the Australasian region
Category: Once upon a Time
Keywords: Liddy, Yellow, Fisher, King, Stevie, Nickolas, Feng Feng
------------------------------
********************************
Score: 0.3150669
Get Document by Id [c1f20e79043d4b40aa2b9f3ac74e287b] found.
```

The output is messy, but it proves that all the methods I created above work as expected. There might be some bugs in the code; I hope you can catch some. At the very least, feel free to change the search strings passed to testFindDocument(...), like this:

```java
...
testFindDocument("<Your test search string here>");
...
```

Summary

Finally, it is time to write the summary of this tutorial. This has not been the fun kind of tutorial I used to write. I was not very familiar with the subject matter, and I struggled. In the end, the outcome looks OK, and I am fairly happy with it.

In this tutorial, I have discussed the following topics:

- How to open a directory as a document index

- How to index a document into a document index

- How to search documents in a document index. It is simple, but it does the job.

- How to locate a document by its unique identifier

- How to delete documents by their unique identifier, and how to delete all documents

I still had fun writing this tutorial, and I hope you enjoy it as well.

History

- 09/24/2019 - Initial draft