Introduction

In this article I will describe an end-to-end system for exploring and analyzing large amounts of climate data using SAP's HANA in-memory database and Amazon's SageMaker cloud-hosted Jupyter notebooks. HANA's ability to store and query terabytes of data in RAM and its integration of graph and spatial database engines makes it a great back-end database platform for exploratory and statistical data analysis of the large datasets from diverse domains encountered in studying climate-change. Jupyter notebooks are the go-to web-based environments today for data exploration and visualization, and HANA's client libraries integrate directly with Python data frame libraries like Pandas. This allows Jupyter notebook users to execute queries that run server-side on the HANA instance and populate a Pandas data frame on the client-side, which can then be used with the dozens of Python data science and machine learning libraries available today. The combination of the ease of use and rich data exploration and visualization capabilities of Jupyter and Python, together with the low-latency in-memory queries of HANA over large datasets makes this an ideal data science system for anyone interested in studying climate change.

We will use a cloud-hosted 32GB instance of SAP HANA running on Amazon's EC2 service to ingest data from the Global Historical Climatology Network project which aggregates and makes publicly available weather station observations from all over the world spanning more than two centuries. We will use the freely available SAP HANA Studio as our main desktop IDE to administer and develop on our cloud-hosted HANA database server. Finally we'll user Jupyter notebooks as the user-facing front-end of our system to connect to and run queries on our climate data and create models for prediction.

Background

Climate Change

Climate change is one of the defining global issues of our time and many scientists, civilians and political leaders believe we must rapidly implement fundamental changes to our present way of life in order to avoid catastrophe in the near future. Studying climate change and its consequences means working with huge amounts of data gathered from diverse areas of human life: from metereological observations to geographical data to economic and population data.

At the same time there are many skeptics of climate change. People have questioned everything from the theoretical assumptions and models climate scientists use to the accuracy and validity of the data collected to the political motivations of those scientists and citizens advocating the need for serious revisions in our economic systems and way of life.

A big part of the reason we should trust science comes from it's reproducibility. Using the same data that climate and other scientists use, we should be able to arrive at the same conclusions that scientists do. The kinds of questions about climate world-wide we would like to answer using the available data are:

- Are global temperatures rising per year?

- How does global temperature vary in each century of human history?

- What areas of the world are temperatures rising most?

Before the advent of cloud computing and open source software frameworks for data science and machine learning, analyzing the available climate data would have been a daunting task requiring a supercomputer and incurring a considerable cost. Today, anyone from climate change believer to skeptic can spin up a virtual machine on AWS with any one of the many publicly available datasets on climate, and crunch the same numbers that climate scientists do to try to reproduce their findings.

GHCN

The Global Historical Climatology Network project aggragates climate data from all over the world and makes it available to the public via an FTP server hosting collections of CSV files. The GHCN-Daily database is a daily-updated dataset of weather station observations collected over a period of more than two centuries.

Quote:

The GHCN-Daily database collates daily weather station data from across the world and is likely the most comprehensive global dataset for daily in situ climate measurements (Menne et al., 2012b). Since the overview by Menne et al. (2012b), an additional 38 countries and territories have been added to the database (Table 1). The collection now encompasses data from 218 countries and territories, with some records going back as far as 1763. GHCN-Daily is updated operationally, although the station record is source-dependent. A relatively dense network of upto-date data is obtainable for Australia, Japan, Europe and North America. For some regions, most notably for Brazil, India and South Africa, historical data availability from GHCN-Daily is extensive, while updated data are only provided for a small subset of weather stations

-Jasmine B. D. Jaffrés GHCN-Daily: a treasure trove of climate data awaiting discovery

GHCN-Daily has far more detail and data than other GHCN datasets like GHCN-Monthly which contains only monthly mean temperature readings. Using SAP HANA and freely available data science libraries like Pandas and scikit-learn and data science environments like Jupyter, anyone can analyze and visualize the data GHCN collects.

SAP HANA

HANA is an in-memory column-oriented database which makes it ideal for aggregate calculations on numeric data. From an administrator's POV, SAP HANA let's us eschew different database systems (like OLAP cubes) for different users or projects. We can use HANA's calculation and analytic views to create low-latency views over a single source of data that serve different needs from data exploration to aggregate and statistical calculations. Climate or other data that is updated daily can be inserted into our database and will be reflected in our queries without the need to rebuild cubes or other analysis structures. From a developer's POV, SAP offers free desktop and web-based developer environments like HANA Studio and Web Studio and HANA has a huge set of database features and data modelling capabilities for building data-centric applications.

Amazon SageMaker

SageMaker is Amazon's hosted Jupyter notebook service which also comes with an Amazon managed machine learning service which lets you create and manage an entire ML workflow from data-labelling to training to prediction. SageMaker notebooks come with data science and machine learning libraries like Pandas, scikit, TensorFlow, PyTorch et.al ready to use. These libraries can talk to our HANA database and execute server-side queries via the HANA Python client library which maps Pandas data frame operations like selecting and filtering to SQL queries to be executed by the server.

Installation

The three main installation tasks for our system are creating our EC2 instance on AWS to run our HANA server, installing the HANA Studio IDE on our desktop machine, and creating our Amazon Sagemaker notebook instance which includes the HANA Python database client library.

SAP HANA on AWS

SAP provides several AMIs with HANA express edition pre-installed. The 32GB image allows you to create databases using up to 32GB of RAM and is free to use; you only pay for the cost of using the EC2 instance and other infrastructure costs like storage on AWS. This lets you try out a fully functional version of HANA relatively cheaply without needing to install it on a server of your own. The HANA instance can be spun up pretty quickly from the AWS marketplace on a m4.2xlarge instance which gives you a Xeon E5-2686 processor with 8 VCPUs and 32GB of RAM. The setup instructions SAP provides for the remaining configuration required are pretty thourogh and should have the server up and running pretty quickly. There are some additional points that should be added:

- Make sure you allocate a volume for you machine with enough EBS storage for the data you want to import into HANA. For large data files > 100GB the default volume size that EC2 uses is not enough.

- You should create an entry for the hostname hxehosts in your HOSTS file that points to the public IP of your EC2 machine, and use this hostname for all network connections to the HANA database either from the IDE or web browser.

- If you only need SSH access from the workstation you are currently on then restrict inbound SSH traffic in your EC2 security group to only your IP

- If you want to run the SAP HANA Web IDE you may need to open additional ports in your EC2 security group.

SAP HANA Studio

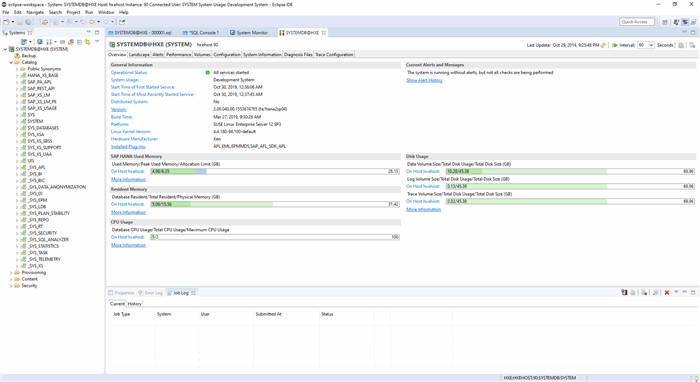

SAP HANA Studio is the Eclipse-based IDE for both administering and developing on SAP HANA. HANA Studio can connect both to local server instances as well as instances hosted on AWS

HANA Studio is freely available to download and install and only requires a JVM like Oracle Java 8 and a recent Eclipse release. Once it is up and running you can connect to your EC2 instance running HANA using the SYSTEM user you configured during the HANA setup steps on the EC2 server. Make sure you select to use SSL on you client connection from HANA Studio to your database but uncheck the option to validate the SSL certificate since you will be initially using a self-signed certificate on your newly-configured server.

In order to conect to our HANA instance running in the cloud we must make sure that our EC2 instance is assigned a security group with the correct inbound rules. And just as in Ryan's tutorial if you want to use Web Studio or other web-based HANA tools you must add an entry into your HOSTS file or provide another way to resolve the hostname hxehost to the public IP address of your HANA server.

Amazon SageMaker

Jupyter notebook instances can be created from the AWS console.

Lifecycle configurations allows us to execute code or shell scripts when a notebook is created or run. In theory we can use a lifecycle configuration to install the HANA Python client libraries before the notebooks run, but in practice I was unable to get working as there are known issues with installing Python packages outside the notebook environment. However we can easily install the packages we need in a cell at the top of our notebooks. The ml.t2.medium notebook instance has 4GB of memory which is enough to run queries against our database.

Development

Bulk importing data into HANA

The GHCN FTP server has a folder called superghcnd with single compressed CSV file containing the entire GHCN-Daily dataset up to the current date. The entire GCHN-Daily dataset is more than 100GB when uncompressed snd since we only have limited RAM on our HANA server we can't ingest the entire dataset. Fortunately on *nix we have command-line tools that can easily slice the file into smaller subsets without needing to read the entire file in memory. From a shell on our HANA server we can download an uncompress the superghcnd CSV file using curl and tar. Then can count how many rows there are in our CSV file using wc:

hxeadm@hxehost:~> cd /import

hxeadm@hxehost:/import> wc -l superghcnd.csv

2911313414 superghcnd.csv

hxeadm@hxehost:/import>

So it's about 3 billion rows in the file. The recommendation for HANA is to reserve 50% RAM for your main tables and the rest for views. To be safe we will only ingest 10GB of data right now which is roughly 300 million rows. We will use the head command to grab the top 300 million rows from the original file and write that to a new CSV file:

hxeadm@hxehost:/import> head -n 300000000 superghcnd.csv > superghcnd_1.csv

hxeadm@hxehost:/import> ls

superghcnd.csv superghcnd_1.csv superghcnd_2.csv

hxeadm@hxehost:/import> ls -alF

total 109215760

drwxr-xrwx 2 root root 4096 Oct 30 19:31 ./

drwxr-xr-x 26 root root 4096 Oct 30 15:44 ../

-rw-r--rw- 1 hxeadm sapsys 101365375181 Oct 30 01:15 superghcnd.csv

-rw-r----- 1 hxeadm sapsys 10471526993 Oct 30 02:28 superghcnd_1.csv

So we now have a 10 GB file of data we can import into our 32GB HANA instance which should leave use enough RAM for views and queries. We can copy this file to the work directory of our HANA instance which is typically /usr/sap/HXE/HDB90/work. By default HANA allows data import from flat files from this folder. We must then create the table that will hold the imported data. An easy way to do this is to use the Import wizard in HANA Studio to load a small CSV file with the same schema as our dataset:

The wizard gives us a nice GUI for defining mappings from the fields in our CSV file to table columns. HANA Studio will generate the table definitions that best match the imported data and we can use the GUI to tweak the definitions to what we want:

We can use the GUI to define a table with the same schema as our CSV file and import a small amount of data from our developer machine which we then delete leaving the table definition intact. The DDL for our table definition looks like:

CREATE COLUMN TABLE "GHCN"."DAILY" (

"ID" NVARCHAR(11) COMMENT 'Station ID',

"ODATE" DATE CS_DAYDATE COMMENT 'Observation Date',

"ELEMENT" NVARCHAR(4) COMMENT 'Element',

"OVALUE" INTEGER CS_INT COMMENT 'Observation Value',

"M" NVARCHAR(1) COMMENT 'Measurement flag',

"Q" NVARCHAR(1) COMMENT 'Quality flag',

"S" NVARCHAR(1) COMMENT 'Source flag'

) UNLOAD PRIORITY 5 AUTO MERGE

But this won't work for our main 10 GB import file which resides on the remote server. The main HANA bulk import facility is invoked through the IMPORT SQL statement

IMPORT FROM CSV FILE '/usr/sap/HXE/HDB90/work/superghcnd_1.csv' INTO GHCN.DAILY

WITH RECORD DELIMITED BY '\n'

FIELD DELIMITED BY ','

COLUMN LIST ("ID", "ODATE", "ELEMENT", "OVALUE" "M", "Q", "S")

FAIL ON INVALID DATA;

The IMPORT statement takes a lot of options that can improve the performance of the bulk import (like how many threads to use) but this code snippet is sufficient to import our 300 million records into HANA in a reasonable amount of time. We can then query the amount of rows in the GHCN.DAILY table on the server from the command-line using the hdbsql tool:

hxeadm@hxehost:/usr/sap/HXE/HDB90> hdbsql -n hxehost:39013 -i 90 -u SYSTEM

Password:

Welcome to the SAP HANA Database interactive terminal.

Type: \h for help with commands

\q to quit

hdbsql SYSTEMDB=> SELECT COUNT(1) FROM GHCN.DAILY;

COUNT(1)

300000000

1 row selected (overall time 2808.359 msec; server time 629 usec)

With our data import complete we can move to building packages of column views in our database which provide the main analysis structures for our queries.

Using the SageMaker Jupyer notebook front-end

Setup

HANA

The only thing we need to do on the database side is create a HANA user for our notebooks which can be done easily inside HANA Studio:

At minimum the user needs to have SELECT permission on the GHCN.DAILY table and SELECT on the column views in the _SYS_.BIC schema where we created under our ghcn package

Jupyter

When a notebook starts up we should install all the necessary Python packages into our notebook instance. We will need the HANA database client (hdbcli) which is available on PyPi and installable via pip. We also need to install the hana_ml library which has the Pandas data frame library integration with the HANA client. hana_ml doesn't seem to available on PyPi so it must be installed manually. For convenience I put the package archive in an S3 bucket so it can be downloaded when needed and installed from there

!pip install hdbcli > /dev/null 2>&1

!if [ ! -f hana_ml-1.0.7.tar.gz ]; then wget https://allisterb-hana.s3.us-east-2.amazonaws.com/hana_ml-1.0.7.tar.gz > /dev/null 2>&1; fi

!pip install hana_ml-1.0.7.tar.gz > /dev/null 2>&1

!pip show hana

Once the necessary packages are installed we can test the database connection from the notebook. First we create a small script called db.py to store our connection settings:

hxehost = my server ip

user = my user

passwd = my pass

Then we can test the connection:

import db

import sys

from hdbcli import dbapi

conn = dbapi.connect(db.hxehost, 39013, db.user, db.passwd)

print (conn.isconnected())

with conn.cursor() as cursor:

cursor.execute("SELECT COUNT(1) FROM GHCN.DAILY")

result = cursor.fetchone()

print (f'{result[0]} rows in table.')

Which gives the output:

True

300000000 rows in table.

Now we're ready to start querying!

import pandas as pd

from hana_ml import dataframe

conn = dataframe.ConnectionContext(db.hxehost, 39013, db.user, db.passwd)

df = conn.table('DAILY', schema='GHCN')

df.head(5).collect()

The DataFrame object executes SQL statements on the server corresponding to data frame operations on the client e.g if we can ask the DataFrame object what the SQL statement is which corresponds to calling the head() function:

df.head(5).select_statement

which prints this out:

'SELECT TOP 5 * FROM (SELECT * FROM "GHCN"."DAILY") dt'

Calling the collect() method on a DataFrame object actually sends the SQL query to the server. We can also query the calculation view for daily observations in the ghcn package we created :

df = conn.table("ghcn/DAILY_STATION_OBSERVATION", schema="_SYS_BIC")

df.head(5).collect()

which gives us this result: