In my previous tome, 16 Days: A TypeScript Application from Concept to Implementation, one of the features I wanted to add was the ability to export the local storage to the server. I ended up implementing two ways of doing this -- one by simply sending the audit log transactions, and the second by sending all the data in all the stores to be imported as a complete snapshot of the local storage. It is this second option that I want to explore here in a much shorter article as it took me down the rabbit hole of working with transactional updates (meaning, the ability to rollback all the table insert operations) within the context of asynchronous AJAX calls.

There are several approaches to solving the problem of exporting (on the client side) and importing (on the server side) data that spans tables. The salient point here is that if any one AJAX call fails for whatever reason, the entire transaction should be rolled back. For example:

- Implement a purely server-side mechanism for rolling back the transaction when a particular AJAX call fails and handling subsequent AJAX calls after failure.

- Send all the data for each table in one potentially large AJAX call.

- Send the data in each table as synchronous AJAX calls so they can be called sequentially.

- Using

when/then functions in jQuery, or if you prefer not to use jQuery, using Promise to send each table's data synchronously. - Again using jQuery's

when/then functions, send each table's data asynchronously and use a jQuery's "master Deferred" (aka a master Promise) to handle success/failure.

I chose option #5 as:

- Option 1 actually needs to be implemented in option 5.

- Option 2 is too easy. Claiming that the JSON may be too large is not a good argument because the data for an individual table may be very large and this point is something to consider regardless of a "send everything" or "send tables one at time" with regards to server-side maximum JSON length.

- Option 3 defeats the purpose of the A in AJAX: Asynchronous.

- Option 4 again defeats the purpose of asynchronous as it turns the requests into synchronous calls.

And quite frankly, I chose option 5 because it was the more challenging implementation.

Note that there is no prerequisite that you have to read the article mentioned above as this is more about an implementation approach with concrete examples rather than about my crazy TypeScript application generator.

Since my back-end doesn't have any referential integrity (foreign keys) I'm not concerned with the order in which each table's data is sent, nor do I turn off integrity checking as part of the import process.

There is no source code download mainly because you can copy and paste the code from the article and it's not packaged as a library which would require a certain level of Inversion of Control to implement the actual "what should I do when I get the request" as a callback. The source code is available here though for the entire application. Long live Copy & Paste!

The goal therefore is very simple.

- The client informs the server that it's about to make a bunch of AJAX calls that should be wrapped in a transaction.

- The client is responsible for determining whether all the AJAX calls succeed or one of them fails.

- On success, the client tells the server to commit the transactions.

- On failure, the client tells the server to rollback the transactions. The client also attempts to cancel any pending AJAX calls.

From the server's perspective:

- The server opens a connection to the database and creates a transaction object. This is "keyed" by the user's ID on the assumption that the user will be initiating only one transactional operation at a time.

- Upon receiving the AJAX call, the server processes the call and returns an error if an exception occurs. The server does not initiate rolling back the transactions. It certainly could, and probably should, but I wanted to explore the behavior of the client-server application from the perspective of the client requesting the rollback rather than the server assuming the rollback should occur. Idealistically, maybe the client wants to try to recover from the failure, but this is pretty much pie-in-the-sky thinking.

- The server commits the transactions when requested by the client.

- The server rolls back the transactions when requested by the client.

The idea here is that the server is as dumb as possible. It:

- Doesn't know how many AJAX calls it will receive.

- Doesn't make assumptions about how to handle an exception.

The export method should be straight forward:

- Send a

BeginTransaction call and wait for it to complete. The reason should be obvious -- we need the server to open the DB connection and create a SqlTransaction object. - Make all the AJAX calls.

- Either request the transactions be committed if all succeeded, or rolled back on any single failure.

The code:

public ExportAll(entities: string[]): void {

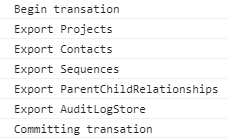

console.log("Begin transaction");

jQuery.when(jQuery.post(this.UrlWithUserId("BeginTransaction"))).then(() => {

let calls: JQueryXHR[] = [];

entities.forEach(e => this.ExportStore(calls, e));

this.ExportStore(calls, "Sequences");

this.ExportStore(calls, "ParentChildRelationships");

this.ExportStore(calls, "AuditLogStore");

jQuery.when.apply(this, calls).then(

() => {

console.log("Committing transaction");

jQuery.post(this.UrlWithUserId("CommitTransaction"));

},

(d) => {

console.log("Rollback: ");

console.log(d);

calls.forEach(c => c.abort());

jQuery.post(this.UrlWithUserId("RollbackTransaction"));

}

);

});

}

So that you don't have to read the previous article:

entities is simply a list of "store" names- Each store contains data associated with a table of that store's name.

userId is something managed by the class that wraps this function. Just treat it as a unique identifier for the transaction.

The actual AJAX calls look like this:

private ExportStore(calls: JQueryXHR[], storeName: string): void {

let storeData = this.storeManager.GetStoreData(storeName);

let xhr = undefined;

if (storeData.length > 0) {

console.log(`Export ${storeName}`);

xhr = jQuery.post(

this.UrlWithUserId("ImportEntity"),

JSON.stringify({ storeName: storeName, storeData: storeData }),

);

calls.push(xhr);

}

}

Note that the fail option is not implemented here, though it certainly could be. Also note that the array calls is being populated by this method as we have this if (storeData.length > 0) statement that would otherwise need to be in the caller, and I wanted the caller to be very simple.

With regards to the use of jQuery's when, then, it's very important to note this from the jQuery when documentation (my bolding):

In the multiple-Deferreds case where one of the Deferreds is rejected, jQuery.when() immediately fires the failCallbacks for its master Deferred. Note that some of the Deferreds may still be unresolved at that point. The arguments passed to the failCallbacks match the signature of the failCallback for the Deferred that was rejected. If you need to perform additional processing for this case, such as canceling any unfinished Ajax requests, you can keep references to the underlying jqXHR objects in a closure and inspect/cancel them in the failCallback.

The apply usage is a common practice to iterate over an array for a given function and is not jQuery specific. Read more here.

The first thing we need is a way to save the transaction and connection information as the separate AJAX requests come in, and this storage mechanism needs to be thread safe:

private static ConcurrentDictionary<Guid, (SqlTransaction t, SqlConnection c)> transactions =

new ConcurrentDictionary<Guid, (SqlTransaction, SqlConnection)>();

The routes are defined as:

router.AddRoute<RequestCommon>("POST", "/BeginTransaction", BeginTransaction, false);

router.AddRoute<RequestCommon>("POST", "/CommitTransaction", CommitTransaction, false);

router.AddRoute<RequestCommon>("POST", "/RollbackTransaction", RollbackTransaction, false);

router.AddRoute<EntityData>("POST", "/ImportEntity", ImportEntity, false);

where we have:

public class RequestCommon : IRequestData

{

public Guid UserId { get; set; }

public string StoreName { get; set; }

}

and:

public class EntityData : RequestCommon

{

public List<JObject> StoreData = new List<JObject>();

}

The begin, commit, and rollback transaction handlers are straight forward. For this discussion, please be aware that web requests are running in separate threads:

Task.Run(() => ProcessContext(context));

private static IRouteResponse BeginTransaction(RequestCommon req)

{

var conn = OpenConnection();

var transaction = conn.BeginTransaction();

transactions[req.UserId] = (transaction, conn);

return RouteResponse.OK();

}

private static IRouteResponse CommitTransaction(RequestCommon req)

{

transactions[req.UserId].t.Commit();

transactions[req.UserId].c.Close();

transactions.Remove(req.UserId, out _);

return RouteResponse.OK();

}

private static IRouteResponse RollbackTransaction(RequestCommon req)

{

lock (schemaLocker)

{

Console.WriteLine($"Abort {req.UserId}");

transactions[req.UserId].t.Rollback();

transactions[req.UserId].c.Close();

transactions.Remove(req.UserId, out _);

}

return RouteResponse.OK();

}

That lock is pretty important -- each AJAX request is being handled in its own thread and we can't have one request processing an import while the client requests a rollback because some other request failed. Either the rollback needs to wait until an import request completes, or an import request is held off until the rollback completes.

So the fun begins here:

private static IRouteResponse ImportEntity(EntityData entity)

{

IRouteResponse resp = RouteResponse.OK();

lock (schemaLocker)

{

if (transactions.ContainsKey(entity.UserId))

{

try

{

var transaction = transactions[entity.UserId].t;

var conn = transactions[entity.UserId].c;

entity.StoreData.ForEach(d =>

InsertRecord(conn, transaction, entity.UserId, entity.StoreName, d));

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

resp = RouteResponse.ServerError(new { Error = ex.Message });

}

}

}

return resp;

}

We execute a lock which has two purposes:

- If rollback occurred while an import request was in-flight, the transaction and connection are no longer valid, so we need to check that the transaction identifier still exists with

if (transactions.ContainsKey(entity.UserId)) - While it apparently is safe to execute multiple inserts in separate threads on a shared transaction object, it's not possible to do this without a more advanced locking mechanism to ensure that a rollback doesn't occur in a separate thread.

The effect of this lock statement though is important -- it results in the AJAX calls executing sequentially, not simultaneously. So that simple locking mechanism needs to be revisited.

Unfortunately, this is no easy task. The lock above ensures that SqlConnection object, which appears to be shared between threads, is actually used serially, not concurrently. From MSDN SQL:

...the actual ado.net public objects (connection, command, reader, etc) are NOT thread safe, so you cannot share a given instance of a connection, command, etc. across threads unless you guarantee (with synchronization or any other means) that you won't touch them concurrently from different threads.

So this makes it even more complicated to handle commit/rollback when the actual transactions are occurring on separate threads!

If you're curious what that insert statement actually looks like, here it is:

private static void InsertRecord(

SqlConnection conn,

SqlTransaction t,

Guid userId,

string storeName,

JObject obj)

{

Assert.That(schema.ContainsKey(storeName), $"{storeName} is not a table in the database.");

Assert.ThatAll(

obj.Properties(),

f => schema[storeName].Contains(f.Name, ignoreCaseComparer),

f => $"{f.Name} is not a valid column name.");

Dictionary<string, string> fields = new Dictionary<string, string>();

obj.Properties().ForEach(p => fields[p.Name] = p.Value.ToString());

string columnNames = String.Join(",", fields.Select(kvp => $"[{kvp.Key}]"));

string paramNames = String.Join(",", fields.SelectWithIndex((kvp, idx) => $"@{idx}"));

var sqlParams = fields.SelectWithIndex((kvp, idx) =>

new SqlParameter($"@{idx}", kvp.Value)).ToList();

sqlParams.Add(new SqlParameter("@userId", userId));

string sql = $"INSERT INTO [{storeName}]

(UserId, {columnNames}) VALUES (@userId, {paramNames})";

Execute(conn, sql, sqlParams.ToArray(), t);

}

This is a specialized piece of code based on my "schema generated on the fly" approach (for that, you will have to read the article I referenced in the introduction). The Assert calls verify that actual table and column names are being used to prevent SQL injection, as storeName and columnNames are not parameters but injected as part of the actual SQL statement.

When all is happy with the world, we see this:

And when there is an exception, we see this:

Notice how four of the AJAX calls could be cancelled. Because this is all asynchronous, the results will vary. For example, here three AJAX calls were processed and returned exceptions and two were able to be cancelled:

I really don't want to deal with the complexity of the lack of thread safety (which makes sense) in a SqlConnection instance and the only solution that I can sort of see would be to create a separate SqlConnection for each thread, probably with its own SqlTransaction instance, and then collect those transactions and commit or roll them back. Even if the SqlTransaction instance can be shared, this would mean keeping open SqlConnection's until all the AJAX calls had been processed and were ready to commit. And that is very dubious given the limit of the connection pool.

So instead, moving the lock to a better location and keeping track of whether we're in a rollback state or in the middle of processing an AJAX call is definitely a performance improvement. Keep in mind that the rollback might be received in the middle of processing another import, and any imports currently in process should be terminated as quickly as possible.

So let's start with a wrapper class (sadly, we can't use tuples since they are "value" types) to keep track of the connection, transaction, and who is using what counts:

public class Transaction

{

public SqlTransaction t;

public SqlConnection c;

public long rollbackCount;

public long transactionCount;

public Transaction(SqlTransaction t, SqlConnection c)

{

this.t = t;

this.c = c;

}

}

Next, the insert process does a couple things:

- It increments in a thread safe manner the "transactions are occurring for an entity" counter.

- It checks if the rollback counter is non-zero (it'll only be 0 or 1.)

- The lock is only around the actual

Insert call which technically frees up some other thread to do something. Given that tasks are CPU bound, this shouldn't involve thread context switching.

Here's the code:

private static IRouteResponse ImportEntity(EntityData entity)

{

IRouteResponse resp = RouteResponse.OK();

var tinfo = transactions[entity.UserId];

var transaction = tinfo.t;

var conn = tinfo.c;

try

{

Interlocked.Increment(ref tinfo.transactionCount);

Console.WriteLine($"{tinfo.transactionCount} {tinfo.rollbackCount} {tinfo.c.State}");

for (int n = 0; n < entity.StoreData.Count && Interlocked.Read

(ref tinfo.rollbackCount) == 0; ++n)

{

lock (schemaLocker)

{

InsertRecord(conn, transaction, entity.UserId,

entity.StoreName, entity.StoreData[n]);

}

}

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

resp = RouteResponse.ServerError(new { Error = ex.Message });

}

finally

{

Interlocked.Decrement(ref tinfo.transactionCount);

}

return resp;

}

And now, the rollback doesn't require even performing a lock -- in fact, doing so would be unproductive because we want the rollback function to "signal" that a rollback is in process. Here, the rollback waits for the ImportEntity insert loop to terminal before performing the rollback:

private static IRouteResponse RollbackTransaction(RequestCommon req)

{

var tinfo = transactions[req.UserId];

Interlocked.Increment(ref tinfo.rollbackCount);

while (Interlocked.Read(ref tinfo.transactionCount) > 0)

{

Thread.Sleep(1);

}

Console.WriteLine($"Abort {req.UserId}");

transactions[req.UserId].t.Rollback();

transactions[req.UserId].c.Close();

transactions.Remove(req.UserId, out _);

return RouteResponse.OK();

}

This works quite well to immediately terminal a long-running insert operation due to a lot of records whenever another AJAX import call causes an exception.

At the end of the day, there really is no easy solution using .NET's SqlConnection and SqlTransaction objects to manage transactions for SQL operations across threads. What's presented here is a workaround that is optimized as best as possible but relies on synchronization with regards to the use of the SqlConnection instance. Probably the simplest way to work around the issues is to not use these class at all and manage connection pooling outside of the context of a using new SqlConnection statement, as this closes the connection when the using exits and invalidates the transaction. Using the OdbcConnection class doesn't really help because what basically is needed is a single connection to which SQL statements can simply be streamed "thread safely", which serializes the statements, yes, but avoid all the silliness of having to implement a lock. Well heck, such an class could be written even for SqlConnection. Maybe I'll look at that at some point, as it would demystify the behavior of the current implementation.

- 10th November, 2019: Initial version