Introduction

In this series, I will outline the results of a comparison between five different CI/CD services, namely CircleCI, Gitlab, Travis CI, Buddy and AWS CodePipeline:

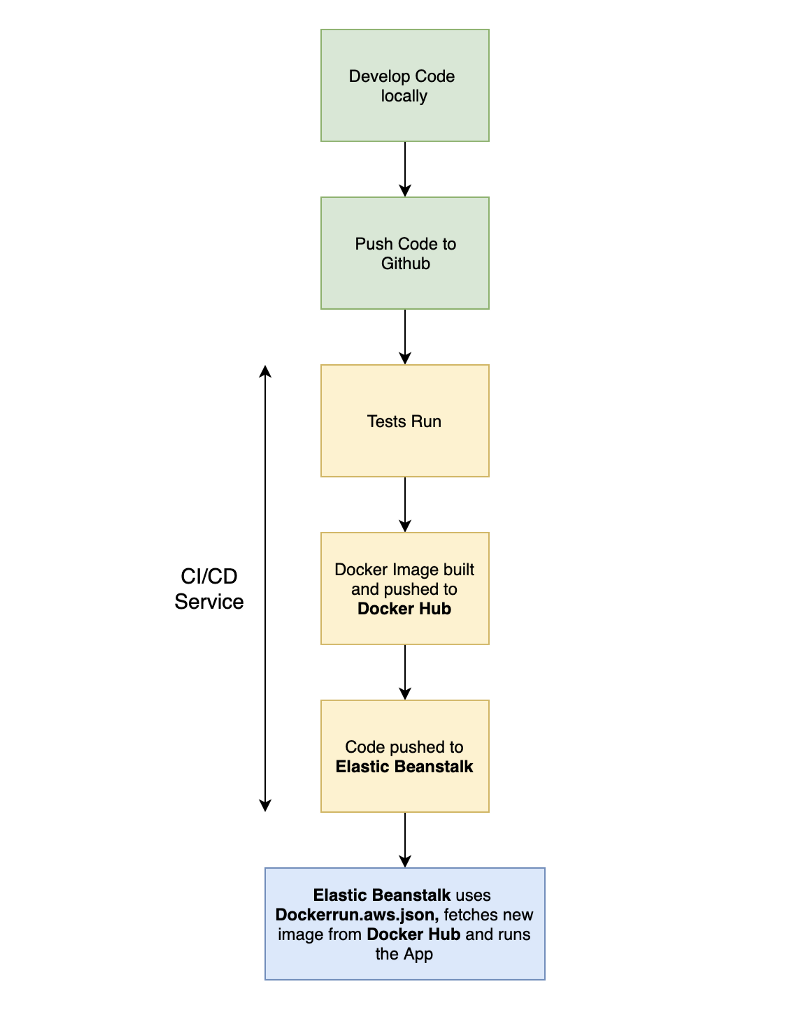

For this comparison, I will use each service to run tests and deploy a Dockerised Node application to AWS Elastic Beanstalk. I will detail how to configure the CI/CD process with each service and I will provide some performance statistics that compare the services.

Test Project and Process

The repository hosting the project tested and deployed by the various services can be found here. The application is a small Node/Express application that is Dockerised and has a single test. Clone this application and push it up to your own Github repository as we will use it frequently throughout this article.

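The process followed for each service is the same: install the dependencies and run the tests, build the Docker image and push it to Docker Hub, and finally deploy the application to Elastic Beanstalk.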

Results

The results can be seen in the table below. I encourage you to follow the instructions to replicate these results yourself. A few notes:

The configuration time is subjective, as it depends on your familiarity with each tool. For transparency, I had already set up pipelines with Buddy before, as you can see in my other blog posts. That said, the ability to set up pipelines visually drastically decreases the configuration time.

The run times are from when I first ran these pipelines and can be seen in the screenshots later in this post. I welcome suggestions on how to improve them. All run times come from the default machines that these services provide. These can, of course, be configured, and a pricing comparison is outside the scope of this article.

For my use case, Buddy is the clear winner. As I am not a DevOps specialist, setting up CI/CD pipelines visually really appealed to me, and I found it extremely easy to configure. Buddy also had the quickest run time, but I suspect that readers will soon suggest ways to improve my existing pipelines. Buddy is, however, not as well known as the other services, so you should examine its offering carefully before choosing it over its competitors.

In second place, I would recommend AWS CodePipeline, as it was surprisingly easy to configure, which has not always been my experience with AWS. CodePipeline is deeply integrated with other AWS services, which makes it immensely powerful. If you are already using AWS heavily, I would thoroughly recommend this service.

I personally found Gitlab the hardest of all the services to use, as it was not as well documented as the others. Travis and CircleCI were very well documented and, if I wasn’t using AWS or couldn’t use a smaller company like Buddy, I would definitely consider these services.

Please comment if you have any suggestions; I would love to regularly update this series of articles with further optimizations. If you disagree with my findings, please let me know. Finally, please try to replicate these results yourself and share your experiences of using these tools in your own company.

Methodology

Creating AWS User

For this article, we will need to create an AWS user so that the CI/CD services can programmatically access Elastic Beanstalk. Navigate to the IAM service in the AWS Management Console:

Click Users.

Click Add user:

Name your user whatever you wish. In this example, I named mine CIComparisonBlogUser and selected Programmatic access as the access type before clicking Next.

We then need to add a policy for this user. Click Attach existing policies directly and search for AWSElasticBeanstalkFullAccess, attach the policy and click Next.

Review your user and save the Access key ID and Secret access key in a secure location. These credentials will be used when setting up each CI service.
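If you prefer to script these console steps, the sketch below shows roughly equivalent calls made with boto3's IAM client (create_user, attach_user_policy and create_access_key). It illustrates the idea and is not part of the article's setup. The function name is hypothetical, and running it for real assumes credentials that are allowed to manage IAM users. Creating an access key is what grants the user programmatic access. No console password is set.

```python
# Hypothetical helper that scripts the IAM console steps above.
# In real use, pass boto3.client("iam"). Any object exposing the same three
# methods also works, which lets the function be exercised without AWS.

# AWS managed policy attached in the console walkthrough.
POLICY_ARN = "arn:aws:iam::aws:policy/AWSElasticBeanstalkFullAccess"


def create_ci_user(iam, user_name="CIComparisonBlogUser"):
    """Create an IAM user, attach the Elastic Beanstalk policy and return its keys."""
    iam.create_user(UserName=user_name)
    iam.attach_user_policy(UserName=user_name, PolicyArn=POLICY_ARN)
    # The response nests the new credentials under "AccessKey".
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    return key["AccessKeyId"], key["SecretAccessKey"]
```

With credentials configured, calling create_ci_user(boto3.client("iam")) returns the same two values the console shows. Store them as securely as you would the console's Access key ID and Secret access key.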

Creating Elastic Beanstalk App

We will need to create an application on Elastic Beanstalk that all the services can deploy to. Navigate to AWS Elastic Beanstalk, where you should see the following starter screen:

Click Get started:

Name your application (I named mine CI Comparison Blog), select Docker as the platform and choose Sample application which we will use for now. Click Create application and go make some coffee as it will take some time to spin up:

Click the provided URL to view the sample application:

Creating Docker Hub Repository

The final step needed for all services is the creation of a Docker Hub repository. Head over to https://hub.docker.com/, log in and you should see the following splash screen:

Click Repositories and click Create Repository:

Name your repository whatever you wish (I named mine ci-comparison-blog) and click Create.

You should see your created repository. The key part to remember is your equivalent of andrewbestbier/ci-comparison-blog:
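Every pipeline below hard-codes andrewbestbier/ci-comparison-blog in its docker build and docker push commands, so it helps to know what that reference is made of. It consists of your Docker Hub username (the namespace), a slash, the repository name and a tag. Docker uses latest when no tag is given. The hypothetical helper below assembles such a reference and rejects components that fail a simplified form of Docker's lowercase naming rule. Docker's full grammar also allows a few separators that this sketch does not.

```python
import re

# Simplified repository-name component rule: lowercase letters and digits,
# optionally separated by single ".", "_" or "-" characters.
_COMPONENT = re.compile(r"[a-z0-9]+(?:[._-][a-z0-9]+)*")


def image_reference(namespace: str, repository: str, tag: str = "latest") -> str:
    """Return 'namespace/repository:tag', rejecting components that fail the simplified rule."""
    for part in (namespace, repository):
        if not _COMPONENT.fullmatch(part):
            raise ValueError(f"invalid Docker Hub name component: {part!r}")
    return f"{namespace}/{repository}:{tag}"
```

For example, image_reference("andrewbestbier", "ci-comparison-blog") gives the fully tagged form of the reference the configs below use. Substitute your own username and repository name.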

Setting up a CircleCI Pipeline

To set up a CircleCI pipeline, head over to https://circleci.com/ and click Log in with Github (you will need to authorise CircleCI through Github).

Next click Settings and Projects and find your Github repository (mine is called ci-comparison-blog) before clicking the settings cog on the right:

Click Environment variables and add your AWS and Docker Hub credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, DOCKER_USER and DOCKER_PASS). These will be used later on:
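The same four variables are configured in every service in this article, and a typo in one of them usually surfaces later as a confusing docker login or eb deploy failure. One option is a pre-flight check that runs as the first pipeline step. The sketch below is hypothetical and not in the repository. It reports variables that are missing or empty and prints only their names, never their values.

```python
import os
from typing import List, Mapping

# The four variables this article configures in each CI service.
REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "DOCKER_USER", "DOCKER_PASS")


def missing_ci_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the required variables that are unset or empty, in a fixed order."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def main() -> None:
    missing = missing_ci_vars()
    if missing:
        # Names only, so secret values never reach the build log.
        raise SystemExit("Missing CI variables: " + ", ".join(missing))
    print("All CI variables are set")
```

You could save this as a hypothetical check_env.py and invoke main() under the usual if __name__ == "__main__": guard. It could then be the first command in any of the pipeline configs below.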

Next click Add Projects on the sidebar, search for your Github repository and click Set Up Project:

You will then see a screen providing instructions on how to set your project up. The most important instruction is “Create a folder named .circleci and add a file config.yml”.

If you look at our Github repository, you can see that I have already created this file:

version: 2
jobs:
  test:
    working_directory: ~/app
    docker:
      - image: circleci/node:latest # (1)
    steps:
      - checkout
      - run:
          name: Update npm
          command: 'sudo npm install -g npm@latest'
      - restore_cache: # (2)
          key: dependency-cache-{{ checksum "package-lock.json" }}
      - run:
          name: Install npm dependencies
          command: npm install
      - save_cache:
          key: dependency-cache-{{ checksum "package-lock.json" }}
          paths:
            - ./node_modules
      - run:
          name: Run tests # (3)
          command: 'npm run test'
  docker-deploy-image:
    working_directory: ~/app
    machine:
      docker_layer_caching: true # (4)
    steps:
      - checkout
      - run: | # (5)
          docker build -t andrewbestbier/ci-comparison-blog .
          echo "$DOCKER_PASS" | docker login -u "$DOCKER_USER" --password-stdin
          docker push andrewbestbier/ci-comparison-blog
  deploy-aws:
    working_directory: ~/app
    docker:
      - image: circleci/python:latest
    steps:
      - checkout
      - run: # (6)
          name: Installing deployment dependencies
          working_directory: /
          command: 'sudo pip install awsebcli --upgrade'
      - run: # (7)
          name: Deploying application to Elastic Beanstalk
          command: eb deploy
workflows:
  version: 2
  build-test-and-deploy:
    jobs:
      - test
      - docker-deploy-image:
          requires:
            - test
      - deploy-aws:
          requires:
            - docker-deploy-image

Let’s break down what’s happening during this build’s execution:

- The first job, test, runs in a Node Docker container.

- The node_modules folder is restored if it exists in the cache. Otherwise, the dependencies are installed and then saved to the cache.

- The tests are run.

- Docker layer caching is enabled to speed up image builds (https://circleci.com/docs/2.0/glossary/#docker-layer-caching).

- Next, the Docker image is built and pushed to Docker Hub using the DOCKER_USER and DOCKER_PASS environment variables. Remember to change the repository to the one you created.

- The Elastic Beanstalk CLI tool is installed.

- The CLI deploys the app to Elastic Beanstalk with eb deploy. This works because the EB CLI automatically picks up the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables that we configured in CircleCI.
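The restore_cache/save_cache pair hinges on the cache key. CircleCI documents the checksum template as a base64-encoded SHA-256 hash of the file's contents, so the key changes only when package-lock.json changes. The sketch below approximates that in Python to show why an unchanged lockfile reuses the cached node_modules while an edited one gets a fresh entry. It is not CircleCI's code, and the exact string CircleCI produces may differ in detail.

```python
import base64
import hashlib
from pathlib import Path


def dependency_cache_key(lockfile: Path, prefix: str = "dependency-cache-") -> str:
    """Approximate  dependency-cache-{{ checksum "package-lock.json" }}.

    Identical lockfile bytes always yield the same key; any change yields a new one.
    """
    digest = hashlib.sha256(lockfile.read_bytes()).digest()
    return prefix + base64.b64encode(digest).decode("ascii")
```

Because the key is derived from the lockfile rather than from package.json, dependency ranges that resolve to new versions still invalidate the cache once the lockfile is updated.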

You may be wondering how eb deploy knows where to deploy. The EB CLI reads its target from the .elasticbeanstalk/config.yml file in your Github repository, so you will need to modify that file to match the sample Elastic Beanstalk application you created:

branch-defaults:
  master:
    environment: CiComparisonBlog-env
environment-defaults:
  CiComparisonBlog-env:
    branch: null
    repository: null
global:
  application_name: CI Comparison Blog
  default_ec2_keyname: null
  default_platform: arn:aws:elasticbeanstalk:eu-west-2::platform/Docker running on 64bit Amazon Linux/2.12.17
  default_region: eu-west-2
  include_git_submodules: true
  instance_profile: null
  platform_name: null
  platform_version: null
  profile: null
  sc: git
  workspace_type: Application

To find your equivalent values, see the following screenshot and the AWS documentation here: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-regions-availability-zones.html
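To make the lookup concrete, here is a simplified model of how this file maps the current branch to a deployment target. It assumes the file has already been parsed into a dict (for example with PyYAML's yaml.safe_load). It illustrates the branch-defaults mapping and is not the EB CLI's actual implementation. When no environment is mapped for the branch, you can name it explicitly, as the Gitlab job later does with eb deploy CiComparisonBlog-env.

```python
from typing import Optional, Tuple


def resolve_eb_target(config: dict, branch: str) -> Tuple[str, Optional[str], str]:
    """Simplified model: return (application_name, environment or None, region)."""
    glob = config["global"]
    branch_defaults = config.get("branch-defaults") or {}
    # Only branches listed under branch-defaults have a default environment.
    environment = (branch_defaults.get(branch) or {}).get("environment")
    return glob["application_name"], environment, glob["default_region"]
```

With the file above, the master branch resolves to the CiComparisonBlog-env environment of the CI Comparison Blog application in eu-west-2.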

We are finally ready to go, so click Start Building in the CircleCI dashboard and, with a little luck, your project should test, build and deploy successfully:

Gitlab

First, head over to https://about.gitlab.com/ and sign in.

If this is your first time using Gitlab, you will see the following screen. Click Create a project:

Next click the CI/CD for external repo tab and connect to Github.

You will be prompted for a personal access token from Github with repo access. To do so, follow this short guide: https://help.github.com/en/enterprise/2.17/user/articles/creating-a-personal-access-token-for-the-command-line

Next, connect your repository:

You should then see a dashboard with your project details:

The next step is to add the environment variables. Click Settings on the side bar and then click CI/CD on the popup.

Scroll down and add your AWS and Docker Hub credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, DOCKER_USER and DOCKER_PASS).

The next step is to add a .gitlab-ci.yml file to the root of your repository. If you look in the Github repository, you will see the following file:

image: node:latest # (1)
stages:
  - build
  - test
  - docker-deploy-image
  - aws-deploy
cache:
  paths:
    - node_modules/ # (2)
install_dependencies:
  stage: build
  script:
    - npm install # (3)
  artifacts:
    paths:
      - node_modules/
testing:
  stage: test
  script: npm test # (4)
docker-deploy-image:
  stage: docker-deploy-image
  image: docker:dind
  services:
    - docker:dind
  script:
    - echo "$DOCKER_PASS" | docker login -u "$DOCKER_USER" --password-stdin # (5)
    - docker build -t andrewbestbier/ci-comparison-blog .
    - docker push andrewbestbier/ci-comparison-blog
aws-deploy:
  image: 'python:latest'
  stage: aws-deploy
  before_script:
    - 'pip install awsebcli --upgrade' # (6)
  script:
    - eb deploy CiComparisonBlog-env

Let’s break down what’s happening during this build’s execution:

- The base image is a Node Docker container.

- The node_modules folder is cached (and also passed to later jobs as an artifact).

- The packages are installed.

- The tests are run.

- Next, the Docker image is built and pushed to Docker Hub using the DOCKER_USER and DOCKER_PASS environment variables. Remember to change the repository to the one you created.

- The Elastic Beanstalk CLI tool is installed.

- The CLI deploys the app to Elastic Beanstalk with eb deploy. This works because the EB CLI automatically picks up the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables that we configured in Gitlab. Note that here we have to pass the environment name (CiComparisonBlog-env) to the command.
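Gitlab runs the stages listed under stages strictly in order. Jobs assigned to the same stage may run in parallel, and a later stage starts only after the earlier one has succeeded. The hypothetical helper below groups this article's jobs by stage to show the resulting order. It models only the ordering, not Gitlab's scheduler or its failure handling.

```python
from typing import Dict, List, Tuple


def plan_stages(stages: List[str], jobs: Dict[str, str]) -> List[Tuple[str, List[str]]]:
    """Return [(stage, [job, ...]), ...] in the order the stages are declared.

    jobs maps each job name to its stage. Jobs sharing a stage may run in parallel.
    """
    unknown = {name: stage for name, stage in jobs.items() if stage not in stages}
    if unknown:
        raise ValueError(f"jobs reference undeclared stages: {unknown}")
    return [(stage, [name for name, s in jobs.items() if s == stage]) for stage in stages]
```

For the file above, each of the four stages holds exactly one job, so the pipeline runs as a straight line: install_dependencies, testing, docker-deploy-image, then aws-deploy.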

We are finally ready to go, so push up a small irrelevant commit and, with a little luck, your project should test, build and deploy successfully:

Travis CI

First, head over to https://travis-ci.com and Sign in with Github:

You should then see a dashboard showing your connected projects (I have blocked out my other personal projects). Click the small + button to add a new project:

Next, search for your Github repository and click the Settings button:

Scroll down and, like the other services, add your AWS and Docker Hub credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, DOCKER_USER and DOCKER_PASS).

The next step is to add a .travis.yml file to the root of your repository. If you look in the Github repository, you will see the following file:

language: node_js # (1)
node_js:
  - 'node'
services:
  - docker # (2)
jobs:
  include:
    - stage: test
      script:
        - npm install # (3)
        - npm test # (4)
    - stage: docker-deploy-image # (5)
      script:
        - echo "$DOCKER_PASS" | docker login -u "$DOCKER_USER" --password-stdin
        - docker build -t andrewbestbier/ci-comparison-blog .
        - docker push andrewbestbier/ci-comparison-blog
    - stage: deploy
      script: skip
      deploy: # (6)
        provider: elasticbeanstalk
        access_key_id: $AWS_ACCESS_KEY_ID
        secret_access_key: $AWS_SECRET_ACCESS_KEY
        region: 'eu-west-2'
        app: 'CI Comparison Blog'
        env: 'CiComparisonBlog-env'
        bucket_name: 'elasticbeanstalk-eu-west-2-094505317841'
        bucket_path: 'CI Comparison Blog'

Let’s break down what’s happening during this build’s execution:

- The Node.js language environment is selected. The value 'node' means the latest stable Node release.

- The docker service is enabled so that docker commands can be used later.

- The packages are installed (Travis caches npm modules by default).

- The tests are run.

- Next, the Docker image is built and pushed to Docker Hub using the DOCKER_USER and DOCKER_PASS environment variables.

- This step is quite different from the other services, as Travis offers its own Elastic Beanstalk deployment provider rather than relying on the EB CLI. You simply specify the Elastic Beanstalk configuration details in the format Travis expects, and it takes care of the rest.
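The bucket_name value is not arbitrary. Elastic Beanstalk stores application versions in an S3 bucket that it creates per region and account, named elasticbeanstalk-<region>-<account-id>. The hypothetical helper below derives your equivalent value from your region and 12-digit AWS account ID.

```python
import re


def default_eb_bucket(region: str, account_id: str) -> str:
    """Return the name of the S3 bucket Elastic Beanstalk creates for a region and account."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return f"elasticbeanstalk-{region}-{account_id}"
```

Calling default_eb_bucket("eu-west-2", "094505317841") reproduces the bucket_name in the Travis config above.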

We are finally ready to go, so push up a small irrelevant commit and, with a little luck, your project should test, build and deploy successfully:

Buddy

Head over to Buddy and sign in with Github. Then click Create new project:

Buddy should intelligently work out that our project is an Express application. Click Add a new pipeline:

Specify a pipeline name (I picked CI Blog Post), select trigger On push and click Add a new pipeline.

We can then create a first action to install our dependencies and run the tests. Search for the Node.js action and click it.

You are then prompted for some bash commands to run. In our case, we want to run yarn install and yarn test. Then click Add this action:

Next we want to build a Docker image from our source code. Search for the Build Image action:

Buddy automatically detects your Dockerfile so just click Add this action:

Next, we want to push the image we just built up to Docker Hub. Search for the Push Image action:

Buddy automatically uses the image built in the previous action. Provide your Docker Hub username, password, repository and tags before clicking Add this action:

Finally, we want to deploy our files to Elastic Beanstalk, so search for the Elastic Beanstalk action:

A modal will appear requesting your AWS user Access Key and Secret Access Key:

Select the AWS Region and your application should automatically appear. Then click Add this action:

Your pipeline should look like this:

Click Run pipeline and it should test, build and deploy your application successfully:

If you wish, you can also set up this pipeline with a buddy.yml file in your root directory:

- pipeline: "CI Blog Post"
  trigger_mode: "ON_EVERY_PUSH"
  ref_name: "master"
  ref_type: "BRANCH"
  trigger_condition: "ALWAYS"
  actions:
    - action: "Execute: yarn test"
      type: "BUILD"
      working_directory: "/buddy/ci-comparison-blog"
      docker_image_name: "library/node"
      docker_image_tag: "10"
      execute_commands:
        - "yarn install"
        - "yarn test"
      setup_commands:
        - "npm install -g gulp grunt-cli"
      mount_filesystem_path: "/buddy/ci-comparison-blog"
      shell: "BASH"
      trigger_condition: "ALWAYS"
    - action: "Build Docker image"
      type: "DOCKERFILE"
      dockerfile_path: "Dockerfile"
      trigger_condition: "ALWAYS"
    - action: "Push Docker image"
      type: "DOCKER_PUSH"
      login: "andrewbestbier"
      password: "secure!KmF0va9L3z4s450LWVlvNdHi1+6Z6+45vQbkHS4bWFo="
      docker_image_tag: "latest"
      repository: "andrewbestbier/ci-comparison-blog"
      trigger_condition: "ALWAYS"
    - action: "Upload files to Elastic Beanstalk/CI Comparison Blog"
      type: "ELASTIC_BEANSTALK"
      application_name: "CI Comparison Blog"
      environment: "e-zpfbesiqpa"
      environment_name: "CiComparisonBlog-env"
      region: "eu-west-2"
      trigger_condition: "ALWAYS"
      integration_id: 65587

AWS CodePipeline

Log in to AWS and navigate to the CodePipeline service. Next, click Create pipeline:

Next, name your pipeline (I named mine CI-comparison) before clicking Next:

Next, you will need to add a pipeline source. Select Github, follow the login prompts, connect your repository, select the master branch and click Next:

We then need to add a build stage. Select AWS CodeBuild as a build provider and click Create project. This will open a new tab where you configure the build.

Name your build (I named mine CI-comparison) and scroll down the page.

Select Managed image, the Ubuntu operating system, the Standard runtime and the 2.0 image. Also enable the Privileged flag, as we need to build Docker images, before scrolling down further:

Next, add the DOCKER_USER and DOCKER_PASS environment variables:

Select Use a buildspec file, as we will be using a buildspec.yml file in our repository's root directory to specify the build steps. Finally, click Continue to CodePipeline.

The tab will close and you will be taken back to your CodePipeline configuration. Click Next:

Finally, we need to add a deploy stage. Select AWS Elastic Beanstalk as the deploy provider and find your application before clicking Next:

Review your pipeline and click Create pipeline:

Now view the buildspec.yml file in the root of our repository:

version: 0.2
phases:
  install:
    runtime-versions:
      nodejs: 10 # (1)
    commands:
      - echo "$DOCKER_PASS" | docker login -u "$DOCKER_USER" --password-stdin # (2)
  pre_build:
    commands:
      - npm install # (3)
      - npm test # (4)
  build:
    commands:
      - docker build -t andrewbestbier/ci-comparison-blog . # (5)
  post_build:
    commands:
      - docker push andrewbestbier/ci-comparison-blog

Let’s break down what’s happening during this build’s execution:

- The Node version is specified.

- The build logs in to our Docker Hub account using the DOCKER_USER and DOCKER_PASS environment variables.

- The packages are installed.

- The tests are run.

- Next, the Docker image is built and pushed to Docker Hub.
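CodeBuild runs the buildspec phases in a fixed sequence: install, pre_build, build, then post_build. The hypothetical helper below flattens a parsed phases mapping into the commands that run, in that order. It shows that the Docker Hub login in install happens before the tests and that the push comes last. It models only the success path, not CodeBuild's behaviour when a phase fails.

```python
from typing import Dict, List

# Order in which CodeBuild runs the buildspec phases used in this article.
PHASE_ORDER = ("install", "pre_build", "build", "post_build")


def command_sequence(phases: Dict[str, dict]) -> List[str]:
    """Flatten a parsed buildspec 'phases' mapping into its commands, phase by phase."""
    commands: List[str] = []
    for phase in PHASE_ORDER:
        # Missing phases, or phases without commands, contribute nothing.
        commands.extend(phases.get(phase, {}).get("commands", []))
    return commands
```

Keys such as runtime-versions are ignored here because they configure the build environment rather than adding commands.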

Finally, click Release change and it should test, build and deploy your application successfully.

History

- 11th November 2019: Initial post