Introduction

This is the second part of Simple Transaction: Microservices Sample Architecture for .NET Core Application. It continues the series by demonstrating how to build a command-driven, messaging-based solution using .NET Core. The sample application used in the previous article is extended with a few additional microservices.

The previous sample implements microservices for simple automated banking features (Balance, Deposit, Withdraw) in ASP.NET Core Web API with C#, Entity Framework and SQL Server. This second part is about designing a queue-based messaging solution using RabbitMQ to plug in a new feature: a background service generates the monthly account statement and stores it in a NoSQL database (MongoDB), from which it is served by a separate microservice.

Problem Description

Generating reports such as account statements on the fly from the transactional data in SQL Server would cause performance issues in a real-time system because of the large amount of data involved.

A better way to handle this problem is to separate generating the statement (Write / Command) from accessing it (Read / Query). This sample shows how to move the command responsibility into a background service and serve queries through a separate microservice. This addresses the performance problem and lets the system handle large volumes of data, because each service can be scaled independently depending on its load.

Application Architecture

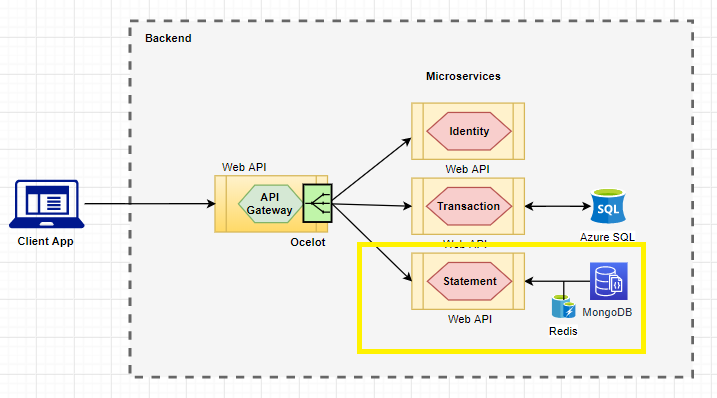

The diagram in this section shows the updated architecture. The highlighted part is the newly added microservice for accessing the account statement. This "Statement" service uses MongoDB as its persistent store and Redis Cache as a temporary store: data is loaded from MongoDB into the cache for a period of time and served from the cache on subsequent requests.
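The read path described above is commonly called the cache-aside pattern. Below is a minimal sketch of that idea, not the sample's actual code: `IStatementStore`, `InMemoryStatementStore` and `CachedStatementReader` are hypothetical names, the store stands in for MongoDB, and a plain dictionary stands in for Redis so the example runs without any external service.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction over the persistent store (MongoDB in the article).
public interface IStatementStore
{
    string Find(string accountNumber, string month);
}

// In-memory stand-in for the persistent store; counts reads so that
// cache hits and misses can be observed.
public class InMemoryStatementStore : IStatementStore
{
    private readonly Dictionary<string, string> _documents = new Dictionary<string, string>();

    public int Reads { get; private set; }

    public void Save(string accountNumber, string month, string document)
        => _documents[accountNumber + ":" + month] = document;

    public string Find(string accountNumber, string month)
    {
        Reads++;
        return _documents.TryGetValue(accountNumber + ":" + month, out var document)
            ? document
            : null;
    }
}

// Cache-aside reader: try the cache first, fall back to the store on a miss,
// then keep the result in the cache for a limited time.
public class CachedStatementReader
{
    private readonly IStatementStore _store;
    private readonly TimeSpan _timeToLive;
    private readonly Func<DateTime> _clock;

    // Stand-in for Redis: key -> (cached document, expiry time).
    private readonly Dictionary<string, (string Document, DateTime ExpiresAt)> _cache
        = new Dictionary<string, (string Document, DateTime ExpiresAt)>();

    public CachedStatementReader(IStatementStore store, TimeSpan timeToLive, Func<DateTime> clock)
    {
        _store = store;
        _timeToLive = timeToLive;
        _clock = clock;
    }

    public string Get(string accountNumber, string month)
    {
        string key = "statement:" + accountNumber + ":" + month;
        DateTime now = _clock();

        // Cache hit: the entry exists and has not expired yet.
        if (_cache.TryGetValue(key, out var entry) && entry.ExpiresAt > now)
        {
            return entry.Document;
        }

        // Cache miss: read from the store and remember the result for a while.
        string document = _store.Find(accountNumber, month);
        if (document != null)
        {
            _cache[key] = (document, now + _timeToLive);
        }
        return document;
    }
}
```

With a five-minute time-to-live, two consecutive calls to `Get` for the same account and month should reach the store only once. A call made after the entry has expired goes back to the store and refreshes the cache.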

Solution Design

The diagram below shows the high-level design of the background implementation that separates the command responsibility from querying data. It has three core components: writes are handled through command / message processing, and reads are handled by querying the stored data.

- Publisher API / Scheduler Service

- Receiver Service

- Statement API

Publisher API / Scheduler Service

This microservice publishes a command to the message bus (message queue) when the monthly account statement needs to be generated for a given month. It has an automated scheduler that triggers the statement generation process at a scheduled time, and it also exposes WebApi endpoints to trigger the process manually.

Receiver Service

The Receiver service is a listener: it listens to the message queue, consumes each incoming message and processes it. The Receiver communicates with other dependent services to get the data, generates the user's account statement for the given month and stores the document in MongoDB.

Statement API

This microservice exposes a WebApi endpoint that communicates with the Redis Cache and MongoDB through a data repository to access the account statement.

Development Environment

- Mongo DB as Persistent Store

- Redis Cache as Provisional Store

- RabbitMQ as Message Broker

- EasyNetQ as RabbitMQ Client

- NCrontab for time-based scheduling

- .NET Core

- IHostedService as background service

WebApi Endpoints

End-Points Implemented in "Publisher API" to Publish Command into Message Queue

- Route: "api/publish/statement" [HttpPost] - To publish a message into the queue to trigger the background process. Defaults to the previous month.

- Route: "api/publish/statement/{month}" [HttpPost] - To publish a message into the queue to trigger the background process for the given month.

- Route: "api/publish/statement/{month}/accountnumbers" [HttpPost] - To publish a message into the queue to trigger the background process for the given list of account numbers.

End-Point Implemented in "Statement API" to Access Account Statement

- Route: "api/statement/{month}" [HttpGet] - To access the account statement of the authenticated user for a given month.

- Route: "statement/{month}" [HttpGet] - To access the account statement of the authenticated user.

Solution Structure

The highlighted part in the below diagram shows the new services / components added to the sample.

Interaction Between the Components

The below diagram shows the interaction between the components / objects in the background service.

- Message Queues: The background services, "Publisher" and "Receiver", work with two queues. The first is the "Trigger" queue: the initial trigger / command from the scheduler is sent to it and processed by the "Receiver" service. The second is the "Statement" queue, which receives its input from the "Trigger" processor and is processed by the "Statement" processor.

- Message Types: Both "Publisher" and "Receiver" understand messages of type ICommand, which is defined in "Publish.Framework". Two message types are defined for the two queues: "TriggerMessage" and "StatementMessage".

- Publisher: Sends a "Trigger" message into the "Trigger" queue, either at the scheduled time (by the scheduler) or on demand when the API endpoint is called.

- Receiver: Consumes the "Trigger" message, prepares a "Statement" message for each user account in the list and publishes it into the next queue. The "Receiver" service then consumes each "Statement" message and calls the processor.

- Processor: Contains the logic to prepare the account statement.

- Document Repository: Contains the data logic to save the document to MongoDB.
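The two-queue workflow in the list above can be sketched with in-memory queues. This is an illustration under stated assumptions, not the sample's implementation: the real services exchange messages over RabbitMQ, the `ICommand` shown here is only a marker-interface stand-in for the one in "Publish.Framework", and the message properties (`Month`, `AccountNumber`) and the `InMemoryWorkflow` class are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the ICommand contract; its real members are not shown in the article.
public interface ICommand { }

// Hypothetical shape: asks for statements to be generated for a month.
public class TriggerMessage : ICommand
{
    public string Month { get; set; }
}

// Hypothetical shape: asks for one account's statement for a month.
public class StatementMessage : ICommand
{
    public string Month { get; set; }
    public string AccountNumber { get; set; }
}

public class InMemoryWorkflow
{
    // In-memory stand-ins for the "Trigger" and "Statement" queues.
    public Queue<TriggerMessage> TriggerQueue { get; } = new Queue<TriggerMessage>();
    public Queue<StatementMessage> StatementQueue { get; } = new Queue<StatementMessage>();

    // Stand-in for the document repository: records what the processor produced.
    public List<string> GeneratedStatements { get; } = new List<string>();

    // Publisher: sends a single trigger for the month.
    public void Publish(string month)
        => TriggerQueue.Enqueue(new TriggerMessage { Month = month });

    // Receiver, step 1: consume each trigger and fan out one
    // statement message per user account.
    public void ProcessTriggers(IReadOnlyList<string> accountNumbers)
    {
        while (TriggerQueue.Count > 0)
        {
            TriggerMessage trigger = TriggerQueue.Dequeue();
            foreach (string accountNumber in accountNumbers)
            {
                StatementQueue.Enqueue(new StatementMessage
                {
                    Month = trigger.Month,
                    AccountNumber = accountNumber
                });
            }
        }
    }

    // Receiver, step 2: consume each statement message and call the processor.
    public void ProcessStatements()
    {
        while (StatementQueue.Count > 0)
        {
            StatementMessage message = StatementQueue.Dequeue();
            // Placeholder for the processor and repository work.
            GeneratedStatements.Add(message.Month + ":" + message.AccountNumber);
        }
    }
}
```

Splitting the work into two message types keeps the trigger small and lets each account's statement be processed, retried or scaled independently of the others.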

Scheduled Background Task with Cron Expression

A cron expression is a compact format for specifying a time-based schedule on which to run a task. NCrontab is the NuGet package included in "Publisher.Service" to parse the expression and determine the next run time. The service is configured to trigger the background process at the start of every month to generate the account statement for the previous month.

Reference: Run scheduled background tasks in ASP.NET Core
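As a rough sketch of how the next run could be computed with NCrontab: the expression `0 0 1 * *` (midnight on the first day of each month) is an assumed value rather than the one from the sample's configuration, and the `StatementSchedule` class and the `yyyy-MM` month format are hypothetical. The block requires the NCrontab NuGet package.

```csharp
using System;
using System.Globalization;
using NCrontab;

public static class StatementSchedule
{
    // Five-field cron expression: minute, hour, day of month, month, day of week.
    // Assumed value: 00:00 on the first day of every month.
    public const string MonthlyExpression = "0 0 1 * *";

    // Returns the first scheduled occurrence after the given time.
    public static DateTime NextRun(DateTime from)
    {
        CrontabSchedule schedule = CrontabSchedule.Parse(MonthlyExpression);
        return schedule.GetNextOccurrence(from);
    }

    // The statement is generated for the month before the run date.
    // The "yyyy-MM" format is an assumption made for this sketch.
    public static string StatementMonthFor(DateTime runTime)
        => runTime.AddMonths(-1).ToString("yyyy-MM", CultureInfo.InvariantCulture);
}
```

For example, calling `StatementSchedule.NextRun(new DateTime(2019, 11, 22))` should give 1 December 2019 at midnight, and `StatementMonthFor` applied to that run date gives `2019-11`. A hosted background service would typically wait until the next run time, publish the trigger, and then compute the following occurrence.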

Communication Between the Microservices

Microservices need to communicate with each other, either to notify other services when changes happen or to fetch information they depend on. In this sample, both synchronous and asynchronous mechanisms are used for communication between microservices, wherever each is needed.

Message-based asynchronous communication is used to trigger an event. It is point-to-point communication with a single receiver: when a message is published to the queue, exactly one receiver consumes it for processing. The diagram below shows the asynchronous communication between "Publisher / Scheduler" and "Receiver" through the message bus.

The "Receiver" background service communicates synchronously with its dependent services (Identity and Transaction) through HTTP requests to get the actual data for background processing.

How to Run the Application

Follow the same instructions given in the previous article to run the application. In addition, the newly added services (Publisher, Receiver and Statement API) must also be up and running.

You need MongoDB and Redis Cache set up either in your local environment or in the cloud. Then update the MongoDB and Redis Cache connection settings in the appsettings.json files of "Receiver.Service" and "Statement.Service" accordingly.

For the database changes, refer to the database project included in this version of the code, which contains the updated script.

Console App: Gateway Client

Conclusion

You do not need two NoSQL databases for a single microservice to improve the performance of a system. In this case, MongoDB alone would be sufficient, but I have included Redis Cache to show an alternative way of achieving better performance for frequently accessed data.

History

- 22nd November, 2019: Initial version