In this article, you will learn about extending Kubernetes APIs, creating and deploying custom resource definitions, custom controllers, and much more.

Kubernetes already has a rich set of resources, starting from pods as building blocks to higher-level resources such as stateful sets and deployments. Modern cloud-native applications can be deployed in terms of Kubernetes resources and their high-level configuration options. However, they are not sufficient when human expertise and operations are required.

This article is an excerpt taken from the book Kubernetes Design Patterns and Extensions by Packt Publishing written by Onur Yılmaz. This book covers the best practices in Kubernetes with design patterns, basics of Kubernetes extension points, networking models in Kubernetes and much more.

Kubernetes enables extending its own API with new resources and operating them as Kubernetes-native objects with the following features:

- RESTful API: New resources are directly included in the RESTful API so that they are accessible with their special endpoints.

- Authentication and authorization: All requests for new resources go through the steps of authentication and authorization, like native requests.

- OpenAPI discovery: New resources can be discovered and integrated into OpenAPI specifications.

- Clients: Command-line tools such as kubectl and client libraries such as client-go can be used to interact with new resources.

Two major steps are involved when extending the Kubernetes API:

- Create a new Kubernetes resource to introduce the new API types

- Control and automate operations to implement custom logic as an additional API controller

Custom Resource Definitions

In Kubernetes, all of the resources have their REST endpoints in the Kubernetes API server. REST endpoints enable operations for specific objects, such as pods, by using /api/v1/namespaces/default/pods. Custom resources are the extensions of the Kubernetes API that can be dynamically added or removed during runtime. They enable users of the cluster to operate on extended resources.

Custom resources are defined in Custom Resource Definition (CRD) objects. Because CRDs are themselves built-in Kubernetes resources, new Kubernetes API endpoints can be added by using the Kubernetes API itself.
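The difference between the endpoint of a built-in resource and that of a custom resource can be sketched in Go. This is a minimal illustration, not code from the book: the helper name resourcePath is hypothetical, and the group and plural names are borrowed from the weather report example that follows. It relies on the API server convention that core resources are served under /api/<version>, while every other API group, including groups registered through CRDs, is served under /apis/<group>/<version>.

```go
package main

import (
	"fmt"
	"path"
)

// resourcePath returns the API server path of a namespaced resource collection.
// Core resources (empty group) live under /api/<version>; all other groups,
// including those added through CRDs, live under /apis/<group>/<version>.
// The function name is hypothetical and used only for illustration.
func resourcePath(group, version, namespace, plural string) string {
	prefix := path.Join("/apis", group, version)
	if group == "" {
		prefix = path.Join("/api", version)
	}
	return path.Join(prefix, "namespaces", namespace, plural)
}

func main() {
	// Built-in resource: pods in the default namespace.
	fmt.Println(resourcePath("", "v1", "default", "pods"))
	// Custom resource from the weather report example.
	fmt.Println(resourcePath("k8s.packt.com", "v1", "default", "weatherreports"))
}
```

The first call reproduces the pods path mentioned above; the second shows where instances of a custom resource in the k8s.packt.com group would be served once its CRD is registered.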

Creating and Deploying Custom Resource Definitions

Consider a client that wants to watch weather reports in a scalable, cloud-native way in Kubernetes. We are expected to extend the Kubernetes API so that the client and future applications can natively use weather report resources. We want to create CustomResourceDefinitions, deploy them to the cluster to check their effects, and use the newly defined resources to create extended objects.

Let's begin by implementing the following steps:

- Deploy the custom resource definition with kubectl using the following command:

kubectl apply -f k8s-operator-example/deploy/crd.yaml

Custom resource definitions are Kubernetes resources that enable the dynamic registration of new custom resources. An example custom resource for WeatherReport can be defined as in the k8s-operator-example/deploy/crd.yaml file, which is shown as follows:

    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: weatherreports.k8s.packt.com
    spec:
      group: k8s.packt.com
      names:
        kind: WeatherReport
        listKind: WeatherReportList
        plural: weatherreports
        singular: weatherreport
      scope: Namespaced
      version: v1

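Like all other Kubernetes resources, a CRD has an API version, kind, metadata, and specification. In addition, the specification of the CRD includes the definition of the new custom resource. For WeatherReport, a REST endpoint will be created under k8s.packt.com with the version v1, and its plural and singular forms will be used within clients. Note that the apiextensions.k8s.io/v1beta1 API used here was removed in Kubernetes 1.22; newer clusters expect apiextensions.k8s.io/v1, which replaces the single version field with a versions list and requires a schema for each version.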
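The identifiers in a CRD are related to each other: the metadata name has to be the plural name followed by the group, and objects of the new kind use group/version as their apiVersion. The following Go sketch makes those relationships explicit. The type crdSpec and the helper functions are hypothetical names introduced for illustration; they are not part of any Kubernetes library.

```go
package main

import "fmt"

// crdSpec holds the subset of CRD fields used in the weather report example.
// It is a hypothetical stand-in, not a Kubernetes API type.
type crdSpec struct {
	Group   string
	Version string
	Plural  string
}

// crdName returns the metadata.name a CRD must carry: <plural>.<group>.
func crdName(s crdSpec) string {
	return s.Plural + "." + s.Group
}

// instanceAPIVersion returns the apiVersion used by objects of the new kind.
func instanceAPIVersion(s crdSpec) string {
	return s.Group + "/" + s.Version
}

// checkName reports an error when a CRD name does not follow the naming rule.
func checkName(name string, s crdSpec) error {
	if want := crdName(s); name != want {
		return fmt.Errorf("metadata.name is %q, expected %q", name, want)
	}
	return nil
}

func main() {
	spec := crdSpec{Group: "k8s.packt.com", Version: "v1", Plural: "weatherreports"}
	fmt.Println(crdName(spec))
	fmt.Println(instanceAPIVersion(spec))
	// Using the singular form in the name violates the rule.
	fmt.Println(checkName("weatherreport.k8s.packt.com", spec))
}
```

A check of this kind is only a convenience; the API server performs the authoritative validation when the CRD is applied.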

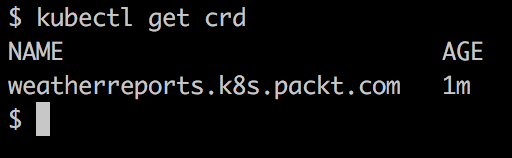

- Check the custom resources deployed to the cluster with the following command:

kubectl get crd

You will get the following output:

As shown in the preceding screenshot, the weather report CRD is defined with the plural name and group name.

- Check the REST endpoints of the API server for new custom resources:

kubectl proxy &

curl -s localhost:8001 |grep packt

You will get the following output:

New endpoints are created, which shows that the Kubernetes API server is already extended to work with our new custom resource, weatherreports.
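The same check can be done programmatically. The API server root returns a JSON document with a paths array, and the sketch below filters that array the way grep filters the curl output. It is an illustration under stated assumptions: the sample response is abbreviated and hand-written rather than captured from a cluster, and the names rootPaths and matchingPaths are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// rootPaths mirrors the JSON document served at the API server root,
// which lists the available paths under a "paths" key.
type rootPaths struct {
	Paths []string `json:"paths"`
}

// matchingPaths decodes the root document and keeps the paths that
// contain substr, similar to piping the curl output through grep.
func matchingPaths(data []byte, substr string) ([]string, error) {
	var root rootPaths
	if err := json.Unmarshal(data, &root); err != nil {
		return nil, err
	}
	var out []string
	for _, p := range root.Paths {
		if strings.Contains(p, substr) {
			out = append(out, p)
		}
	}
	return out, nil
}

// sampleRoot is an abbreviated, hand-written response used for illustration.
const sampleRoot = `{"paths": ["/api", "/api/v1", "/apis", "/apis/k8s.packt.com", "/apis/k8s.packt.com/v1", "/healthz"]}`

func main() {
	paths, err := matchingPaths([]byte(sampleRoot), "packt")
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	for _, p := range paths {
		fmt.Println(p)
	}
}
```

Against a real cluster, the bytes would come from an HTTP GET on the address that kubectl proxy listens on instead of the constant.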

- Check the weather report instances from Kubernetes clients such as kubectl:

kubectl get weatherreports

You will get the following output:

Although the No resources found output looks like an error, it only indicates that there are no live instances of the weatherreports resource yet, as expected. It also shows that, with no configuration beyond creating a CustomResourceDefinition, the Kubernetes API server is extended with new endpoints and clients are ready to work with the new custom resource.

After defining the custom resource, it is now possible to create, update, and delete WeatherReport resources. An example WeatherReport can be defined as in the k8s-operator-example/deploy/cr.yaml file:

    apiVersion: "k8s.packt.com/v1"
    kind: WeatherReport
    metadata:
      name: amsterdam-daily
    spec:
      city: Amsterdam
      days: 1

The WeatherReport resource has the same structure as built-in resources and consists of an API version, kind, metadata, and specification. In this example, the specification indicates that this resource is a weather report for the city of Amsterdam covering the last 1 day.
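Because the API server exchanges objects as JSON, a client can decode a WeatherReport with ordinary struct tags. The sketch below is a minimal illustration using only the Go standard library: the lowercase types are simplified stand-ins for the real metadata types from k8s.io/apimachinery, and the sample document is a hand-written JSON rendering of the manifest above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// objectMeta is a simplified stand-in for the Kubernetes object metadata;
// a real client would use the metav1 types from k8s.io/apimachinery.
type objectMeta struct {
	Name string `json:"name"`
}

// weatherReportSpec carries the two fields of the example specification.
type weatherReportSpec struct {
	City string `json:"city"`
	Days int    `json:"days"`
}

// weatherReport mirrors the four top-level parts of the resource.
type weatherReport struct {
	APIVersion string            `json:"apiVersion"`
	Kind       string            `json:"kind"`
	Metadata   objectMeta        `json:"metadata"`
	Spec       weatherReportSpec `json:"spec"`
}

// sample is the example manifest written as JSON for illustration.
const sample = `{
  "apiVersion": "k8s.packt.com/v1",
  "kind": "WeatherReport",
  "metadata": {"name": "amsterdam-daily"},
  "spec": {"city": "Amsterdam", "days": 1}
}`

// decodeWeatherReport parses a JSON document into a weatherReport value.
func decodeWeatherReport(data []byte) (weatherReport, error) {
	var wr weatherReport
	err := json.Unmarshal(data, &wr)
	return wr, err
}

func main() {
	wr, err := decodeWeatherReport([]byte(sample))
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Printf("%s %s: city=%s days=%d\n", wr.Kind, wr.Metadata.Name, wr.Spec.City, wr.Spec.Days)
}
```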

- Deploy the weather report example with the following command:

kubectl apply -f k8s-operator-example/deploy/cr.yaml

- Check for the newly created weather reports with the following command:

kubectl get weatherreports

You'll see the following output:

- Use the following commands for cleaning up:

kubectl delete -f k8s-operator-example/deploy/cr.yaml

kubectl delete -f k8s-operator-example/deploy/crd.yaml

Custom Controllers

A custom controller, also known as an operator, is an application in which domain knowledge and human expertise are converted into code. Operators work with custom resources and take the required actions when custom resources are created, updated, or deleted. The primary tasks of operators can be divided into three stages, Observe, Analyze, and Act, as shown in the following diagram:

The stages are explained as follows:

- Observe: Watches for changes on custom resources and related built-in resources such as pods.

- Analyze: Makes an analysis of observed changes and decides on which actions to take.

- Act: Takes actions based on the analysis and requirements and continues observing for changes.

For the weather report example, the operator pattern is expected to work as follows:

- Observe: Wait for weather report resource creation, update, and deletion.

- Analyze:

  - If a new report is requested, create a pod to gather weather report results and update weather report resources.

  - If the weather report is updated, update the pod to gather new weather report results.

  - If the weather report is deleted, delete the corresponding pod.

- Act: Take the actions from the Analyze step on the cluster and continue watching with Observe.
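The three stages above can be sketched as a small loop in Go. This is a simplified illustration rather than the operator built later in this article: events arrive on a channel instead of a Kubernetes watch, the cluster is replaced by an in-memory map, and all type and function names, as well as the report name berlin-weekly, are hypothetical. The event type strings match the ones used by Kubernetes watch events.

```go
package main

import "fmt"

type eventType string

// Event types as they appear in Kubernetes watch events.
const (
	added    eventType = "ADDED"
	modified eventType = "MODIFIED"
	deleted  eventType = "DELETED"
)

// event describes a change to one weather report, identified by name.
type event struct {
	Type   eventType
	Report string
}

type action string

const (
	createPod action = "create pod"
	updatePod action = "update pod"
	deletePod action = "delete pod"
	noAction  action = "none"
)

// analyze decides which action an observed event calls for.
func analyze(ev event) action {
	switch ev.Type {
	case added:
		return createPod
	case modified:
		return updatePod
	case deleted:
		return deletePod
	}
	return noAction
}

// act applies the action to an in-memory map standing in for the cluster.
// The map value counts how many times the pod was created or updated.
func act(pods map[string]int, ev event, a action) {
	switch a {
	case createPod:
		pods[ev.Report] = 1
	case updatePod:
		pods[ev.Report]++
	case deletePod:
		delete(pods, ev.Report)
	}
}

// run observes events until the channel is closed, analyzing and acting on each.
func run(events <-chan event, pods map[string]int) {
	for ev := range events { // Observe
		a := analyze(ev)  // Analyze
		act(pods, ev, a)  // Act
	}
}

func main() {
	events := make(chan event, 3)
	events <- event{added, "amsterdam-daily"}
	events <- event{modified, "amsterdam-daily"}
	events <- event{added, "berlin-weekly"}
	close(events)

	pods := map[string]int{}
	run(events, pods)
	fmt.Println(pods)
}
```

Keeping analyze free of side effects makes the decision logic easy to test separately from the code that touches the cluster.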

Operators are already being utilized in the Kubernetes environment since they enable complex applications to run on the cloud with minimum human interaction. Storage providers (Rook), database applications (MySQL, CouchDB, PostgreSQL), big data solutions (Spark), distributed key/value stores (Consul, etcd), and many more modern cloud-native applications are installed on Kubernetes by their official operators.

Operator Development

Operators are native Kubernetes applications, and they extensively interact with the Kubernetes API. Therefore, being compliant with the Kubernetes API and converting domain expertise into software with a straightforward approach is critical for operator development. With these considerations, there are two paths for developing operators, as explained in the following sections.

Kubernetes Sample Controller

The official Kubernetes repository maintains a sample controller that watches custom resources. It demonstrates how to register new custom resources and how to perform basic operations on them, such as creating, updating, or listing. It also implements controller logic to show how to take actions. Together, the repository and its interaction with the Kubernetes API form a complete example of how to create a Kubernetes-like custom controller.

Operator Framework

The Operator Framework was announced at KubeCon 2018 as an open source toolkit for managing Kubernetes-native applications. The Operator SDK is part of this framework, and it simplifies operator development by providing higher-level API abstractions and code generation. The Operator Framework and its toolset are open source and community maintained under the stewardship of CoreOS.

The complete life cycle of operator development is covered with the following main steps:

- Create an operator project: For the WeatherReport custom resource, an operator project in the Go language is created by using the Operator Framework SDK CLI.

- Define custom resource specification: The specification of the WeatherReport custom resource is defined in Go.

- Implement handler logic: The manual operations needed for weather report collection are implemented in Go.

- Build operator: The operator project is built using the Operator Framework SDK CLI.

- Deploy operator: The operator is deployed to the cluster, and it is tested by creating custom resources.

Creating and Deploying the Kubernetes Operator

A client wants to automate the operations of the weather report collection. They are currently connecting to third-party data providers and retrieving the results. In addition, they want to use cloud-native Kubernetes solutions in their clusters. We are expected to automate the operations of weather report data collection by implementing a Kubernetes operator. We'll create a Kubernetes operator by using the Operator Framework SDK and utilize it by creating a custom resource, custom controller logic, and finally, deploying into the cluster. Let's begin by implementing the following steps:

- Create the operator project using the Operator Framework SDK tools with the following command:

operator-sdk new k8s-operator-example --api-version=k8s.packt.com/v1 --kind=WeatherReport

This command creates a completely new Kubernetes operator project named k8s-operator-example that watches for changes to the WeatherReport custom resource, which is defined under k8s.packt.com/v1. The generated operator project is available under the k8s-operator-example folder.

- A custom resource definition has already been generated in the deploy/crd.yaml file. However, the specification of the custom resource is left empty so that it can be filled by the developer. Specifications and statuses of the custom resources are coded in Go, as shown in pkg/apis/k8s/v1/types.go:

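    // WeatherReport is the custom resource: standard type and object metadata
    // plus the user-supplied spec and the operator-managed status.
    type WeatherReport struct {
        metav1.TypeMeta   `json:",inline"`
        metav1.ObjectMeta `json:"metadata"`
        Spec              WeatherReportSpec   `json:"spec"`
        Status            WeatherReportStatus `json:"status,omitempty"`
    }

    // WeatherReportSpec holds the requested configuration of a report.
    type WeatherReportSpec struct {
        City string `json:"city"`
        Days int    `json:"days"`
    }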

In the preceding code snippet, WeatherReport consists of metadata, spec, and status, just like any built-in Kubernetes resource. WeatherReportSpec includes the configuration, which is City and Days in our example. WeatherReportStatus includes State and Pod to keep track of the status and the created pod for the weather report collection.

Note: You can refer to the complete code on GitHub.

- In this example, when a new WeatherReport object is created, we will create a pod that queries the weather service and writes the result to the console output. All of these steps are coded in the pkg/stub/handler.go file as follows:

    func (h *Handler) Handle(ctx types.Context, event types.Event) error {
        switch o := event.Object.(type) {
        case *apiv1.WeatherReport:
            // An empty state means no pod has been created for this report yet.
            if o.Status.State == "" {
                weatherPod := weatherReportPod(o)
                err := action.Create(weatherPod)
                if err != nil && !errors.IsAlreadyExists(err) {
                    logrus.Errorf("Failed to create weather report pod : %v", err)
                    return err
                }
                // The rest of the handler is omitted in this excerpt.
            }
        }
        return nil
    }

Note: You can refer to the complete code on GitHub.

- Build the complete project as a Docker image with the Operator SDK and toolset:

operator-sdk build <DOCKER_IMAGE:DOCKER_TAG>

The resulting Docker image is pushed to Docker Hub as onuryilmaz/k8s-operator-example for further usage in the cluster.

- Deploy the operator into the cluster with the following commands:

kubectl create -f deploy/crd.yaml

kubectl create -f deploy/operator.yaml

After the operator is deployed successfully, its logs can be checked as follows:

kubectl logs -l name=k8s-operator-example

The output is as follows:

- After deploying the custom resource definition and the custom controller, it is time to create some resources and collect the results. Create a new WeatherReport instance as follows:

kubectl create -f deploy/cr.yaml

With its successful creation, the status of the WeatherReport can be checked:

kubectl describe weatherreport amsterdam-daily

You will see the following output:

- Since the operator created a pod for the new weather report, we should see it in action and collect the results:

kubectl get pods

You'll see the following result:

- Get the result of the weather report with the following command:

kubectl logs $(kubectl get weatherreport amsterdam-daily -o jsonpath='{.status.pod}')

You'll see the following output:

- Clean up with the following commands:

kubectl delete -f deploy/cr.yaml

kubectl delete -f deploy/operator.yaml

kubectl delete -f deploy/crd.yaml

Summary

To summarize, in this article, we learned about extending Kubernetes APIs, creating and deploying custom resource definitions, custom controllers, and much more. To read more about Kubernetes with design patterns and understand the basics of Kubernetes extension points, please read our book Kubernetes Design Patterns and Extensions by Packt Publishing.

History

- 3rd December, 2019: Initial version