Welcome back to a new post on thoughts-on-cpp.com. This time we will take a closer look at a possible implementation of a matrix and at decomposition algorithms used for solving systems of linear equations. But before we dive into the decomposition methods, we first need a little excursion into the performance of the different containers we could use to store the data. As always, you can find the respective repository on GitHub. So let’s have some fun with matrices.

Implementing a matrix

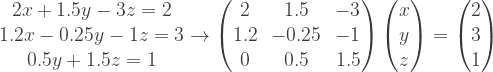

So let’s start by defining what we mean when we talk about matrices. Matrices are rectangular representations of the coefficients of linear equation systems of $m$ linear equations with $n$ unknowns such as

$$
\begin{aligned}
a_{11} x_1 + a_{12} x_2 + \dots + a_{1n} x_n &= b_1 \\
a_{21} x_1 + a_{22} x_2 + \dots + a_{2n} x_n &= b_2 \\
&\;\;\vdots \\
a_{m1} x_1 + a_{m2} x_2 + \dots + a_{mn} x_n &= b_m
\end{aligned}
$$

A special subset of matrices are invertible matrices with $n$ linear equations and $n$ unknowns, which we will discuss in this post. Matrices are a key concept in linear algebra and are used in almost every area of mathematics to represent linear transformations such as the rotation of vectors in three-dimensional space. Knowing this, representing the data as a two-dimensional vector feels natural, not only because of the structure of the data but also because of how the data is accessed. As a result, a first rough implementation of a matrix could look like this
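A minimal sketch of such a first implementation is shown here. It is not the original code of the repository; the class layout and all names except operator(), AssertData() and operator*() are assumptions made for illustration. The markers (1) to (4) follow the numbering used in the description.

```cpp
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Sketch of a square matrix stored as a two-dimensional std::vector.
// Illustrative only: member names and layout are assumptions.
template <typename T>
class Matrix {
public:
    explicit Matrix(std::size_t size) : data_(size, std::vector<T>(size, T{})) {}

    explicit Matrix(std::vector<std::vector<T>> data) : data_(std::move(data)) {
        AssertData();
    }

    // (1) mutable element access: m(row, column) = value
    T& operator()(std::size_t row, std::size_t column) { return data_[row][column]; }

    // (2) read-only element access, usable through a const reference
    const T& operator()(std::size_t row, std::size_t column) const {
        return data_[row][column];
    }

    std::size_t size() const { return data_.size(); }

private:
    // (3) every row must have as many entries as there are rows
    void AssertData() const {
        for (const auto& row : data_) {
            if (row.size() != data_.size()) {
                throw std::invalid_argument("matrix must be square");
            }
        }
    }

    std::vector<std::vector<T>> data_;
};

// (4) naive matrix multiplication with three nested loops
template <typename T>
Matrix<T> operator*(const Matrix<T>& lhs, const Matrix<T>& rhs) {
    if (lhs.size() != rhs.size()) throw std::invalid_argument("size mismatch");
    const std::size_t n = lhs.size();
    Matrix<T> result(n);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            for (std::size_t k = 0; k < n; ++k)
                result(i, j) += lhs(i, k) * rhs(k, j);
    return result;
}
```

With this sketch, `a(0, 1) = 2` writes an element and `a * a` multiplies two matrices of the same size.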


To get a simpler and more natural way to access the data, the implementation provides two overloads of the function call operator() (1,2), which turn the matrix into a function object (functor). Two overloads are necessary because we not only want to manipulate the underlying data of the matrix but also want to be able to pass the matrix as a constant reference to other functions. The private member function AssertData() (3) guarantees that we only allow square matrices. For comparison, the matrix provides a ‘naive’ implementation of matrix multiplication, realized with an overloaded operator*() (4), whose algorithm has a computational complexity of $O(n^3)$. Asymptotically faster algorithms exist, such as Strassen’s algorithm with a computational complexity of about $O(n^{2.807})$, or even more sophisticated ones. For our purpose, we only need the matrix multiplication to explore the performance of the matrix implementation a little and to verify the matrix decomposition methods.

Bounds of different matrix multiplication algorithms (Wikipedia)

Analysis of Performance

Until now we still don’t know whether a two-dimensional vector is really a good choice for representing the data with regard to computational performance, or whether another structure would be better. Looking at other linear algebra libraries, such as LAPACK or Intel’s MKL, we might get the idea that the ‘naive’ approach of storing the data in a two-dimensional vector is suboptimal. Instead, it is a good idea to store the data completely in a one-dimensional structure, such as the STL containers std::vector or std::array, or a raw C array. To clarify this question, we will examine the possibilities and test them with quick-bench.com.

Analysis of std::vector<T>

std::vector<T> is a container that stores its data dynamically (1,2,3) on the heap, so that the size of the container can be defined at runtime.


std::vector<T> implementation from libstdc++

The excerpt of libstdc++ also illustrates the implementation of the access operator[] (4). Because std::vector<T> allocates its memory on the heap, it has to resolve every element access via a pointer to the address of the data. This indirection over a pointer isn’t really a big deal concerning performance, as illustrated in the section Heap vs. Stack further down the article. The real problem arises with a two-dimensional std::vector<T> in the matrix multiplication operator*() (5), where the access to the right-hand side, rhs(j,k), violates the row-major order of C++: the inner loop walks down a column and therefore jumps from row to row. A possible solution could be to transpose the rhs matrix upfront, or to store the complete data in a one-dimensional std::vector<T> with a slightly more advanced function call operator() which retrieves the data.
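Both ideas can be combined in a few lines. The following is only a sketch under assumptions: the type FlatMatrix and the functions transpose() and multiply() are hypothetical names, not part of the post's repository. The element (row, column) of an n × n matrix is mapped to the offset row * n + column, and the right-hand side is transposed once so that the innermost loop reads both operands along their rows.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical square matrix stored row by row in one contiguous block.
struct FlatMatrix {
    std::size_t n;
    std::vector<double> data;

    explicit FlatMatrix(std::size_t size) : n(size), data(size * size, 0.0) {}

    // Row-major mapping: element (row, column) lives at offset row * n + column.
    double& operator()(std::size_t row, std::size_t column) {
        return data[row * n + column];
    }
    const double& operator()(std::size_t row, std::size_t column) const {
        return data[row * n + column];
    }
};

FlatMatrix transpose(const FlatMatrix& m) {
    FlatMatrix t(m.n);
    for (std::size_t i = 0; i < m.n; ++i)
        for (std::size_t j = 0; j < m.n; ++j)
            t(j, i) = m(i, j);
    return t;
}

// Still O(n^3), but the innermost loop now walks both operands row-wise.
FlatMatrix multiply(const FlatMatrix& lhs, const FlatMatrix& rhs) {
    const FlatMatrix rhsT = transpose(rhs);
    FlatMatrix result(lhs.n);
    for (std::size_t i = 0; i < lhs.n; ++i) {
        for (std::size_t j = 0; j < lhs.n; ++j) {
            double sum = 0.0;
            for (std::size_t k = 0; k < lhs.n; ++k) {
                sum += lhs(i, k) * rhsT(j, k);
            }
            result(i, j) = sum;
        }
    }
    return result;
}
```

Whether the extra transpose pays off depends on the matrix size and the cache hierarchy, so it should be measured rather than assumed.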

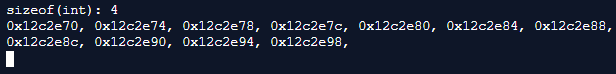

std::vector<T> fulfills the requirements of a ContiguousContainer, which means that it stores its data in contiguous memory locations. Because of that, std::vector<T> is a cache-friendly container (locality of reference), which makes it fast to use. The example below illustrates the contiguous memory locations of a std::vector<int> with a 4-byte integer.
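The contiguity can also be checked directly. The two helper functions below are hypothetical names chosen for this sketch; they compare the addresses of neighbouring elements and assume a vector with at least two elements for byte_stride().

```cpp
#include <cstddef>
#include <vector>

// True if every element sits directly behind its predecessor in memory.
bool is_contiguous(const std::vector<int>& v) {
    for (std::size_t i = 1; i < v.size(); ++i) {
        if (&v[i] != &v[i - 1] + 1) return false;
    }
    return true;
}

// Distance in bytes between the first two elements (requires v.size() >= 2).
std::ptrdiff_t byte_stride(const std::vector<int>& v) {
    return reinterpret_cast<const char*>(&v[1]) -
           reinterpret_cast<const char*>(&v[0]);
}
```

On a platform with a 4-byte int, byte_stride() returns 4, which matches the picture of consecutive 4-byte slots.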


std::vector<int>

Contiguous memory locations of a std::vector with a 4-byte integer


A slightly extended example with a two-dimensional std::vector points out the problem we will have when storing the matrix data in this type of container. As soon as a std::vector<T> has more than one dimension, every row is a separate allocation, so locality of reference is lost between the rows of the matrix and the container is no longer cache-friendly. This gets worse with every additional dimension added to the std::vector<T>.


std::vector<T> example

Multi-dimensional std::vector with a 4-byte integer and 24-byte row


Analysis of std::array<T, size>

std::array<T, size> is a container that stores its elements directly inside the object, so a local std::array lives on the stack. Because no dynamic allocation is involved, the size of std::array<T, size> needs to be defined at compile time. The excerpt of libstdc++ illustrates the implementation of std::array<T, size>. std::array<T, size> is in principle a convenience wrapper around a classical C array (1).
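A short sketch makes the compile-time size visible. The names kSize, Row, SmallMatrix and trace() are assumptions for illustration. The static_assert holds on the common standard library implementations, but the C++ standard does not strictly guarantee it.

```cpp
#include <array>
#include <cstddef>

// The size is a template argument and therefore a compile-time constant.
constexpr std::size_t kSize = 3;
using Row = std::array<double, kSize>;
using SmallMatrix = std::array<Row, kSize>;  // 3 x 3 matrix built from arrays

// On common implementations the wrapper adds no storage to the C array.
static_assert(sizeof(Row) == sizeof(double[kSize]),
              "std::array is expected to be as large as the wrapped C array");

// Sum of the main diagonal elements.
double trace(const SmallMatrix& m) {
    double sum = 0.0;
    for (std::size_t i = 0; i < kSize; ++i) sum += m[i][i];
    return sum;
}
```

Because kSize must be known to the compiler, this layout cannot be used when the matrix size is only available at runtime.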


std::array<T, size> implementation from libstdc++

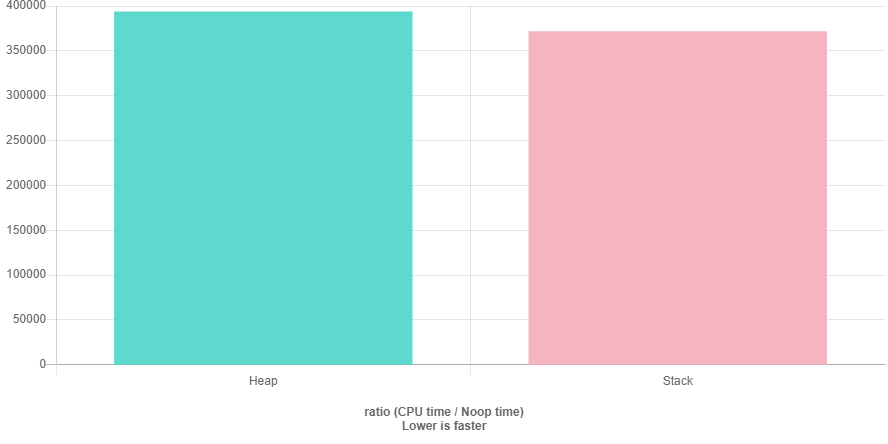

Heap vs. Stack

The code below shows a simple test of the performance difference between memory allocated on the heap and memory allocated on the stack. The difference can be explained by the fact that access to heap memory needs an additional level of indirection, a pointer to the address of the data element in memory, which slows heap access down a little bit.


Benchmark results with GCC-9.1 and O3 optimization of heap and stack (quick-bench.com)


Benchmarks

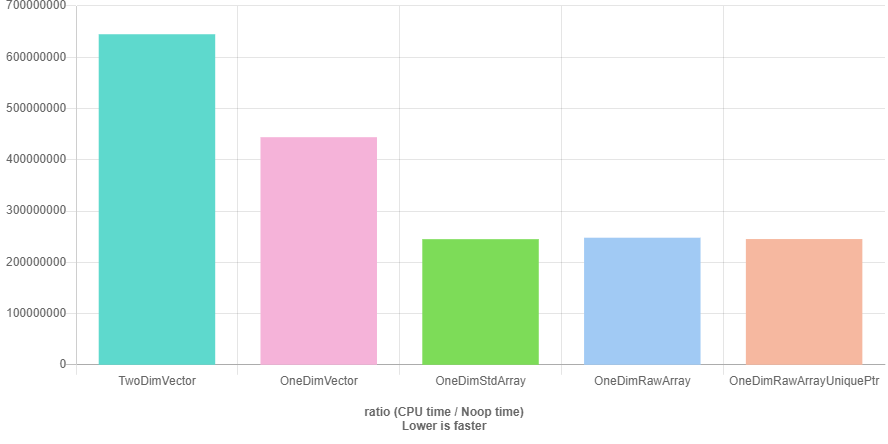

The code below benchmarks the four different ways to store the data (two- and one-dimensional std::vector, std::array, C array) and in addition shows the performance of a C array wrapped in std::unique_ptr<T[]>. All benchmarks are done with Clang-8.0 and GCC-9.1.


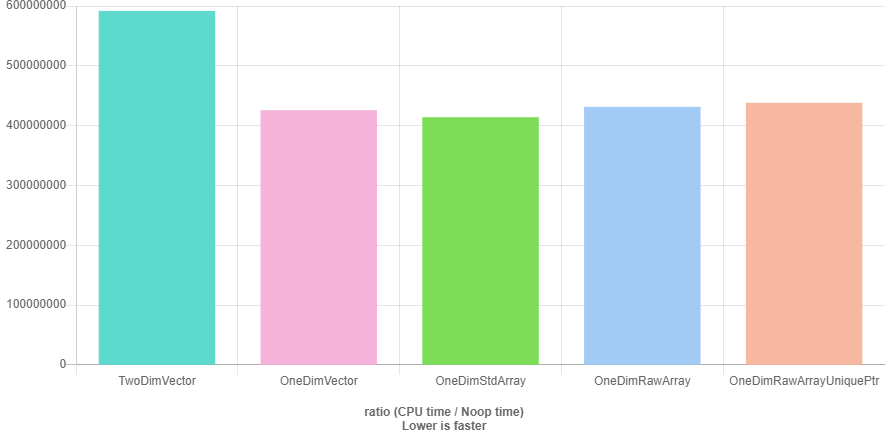

The graphs below confirm what we already suspected: the performance of a two-dimensional std::vector is worse than the performance of a one-dimensional std::vector, std::array, C array, and std::unique_ptr<T[]>. GCC seems to be a bit better (or more aggressive) at code optimization than Clang, but I’m not sure how comparable performance tests between GCC and Clang are on quick-bench.com.

Benchmark results with gcc-9.1 and O3 optimization (quick-bench.com)


Benchmark results with clang-8.0 and O3 optimization (quick-bench.com)


If we chose Clang as the compiler, it wouldn’t make a difference whether we take a std::vector or one of the others, but for GCC it does. std::array is not an option because we want to define the size of the matrix at runtime. For our further examination of a possible matrix implementation we could choose a C array, but for simplicity and safer memory management we will use std::unique_ptr<T[]>. Storing the data in a std::vector<T> and accessing it via data() would also be an option.

Resulting Basis Matrix Implementation

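The following is a minimal sketch of what such a basis matrix could look like on top of std::unique_ptr<T[]>. It is not the code from the repository; the member names and the copy strategy are assumptions. Because std::unique_ptr is move-only, the sketch provides an explicit deep copy.

```cpp
#include <algorithm>
#include <cstddef>
#include <memory>
#include <utility>

// Sketch of a square matrix whose elements live in one heap block
// owned by std::unique_ptr<T[]>. Names are illustrative assumptions.
template <typename T>
class Matrix {
public:
    // std::make_unique<T[]> value-initializes, so all elements start at T{}.
    explicit Matrix(std::size_t size)
        : size_(size), data_(std::make_unique<T[]>(size * size)) {}

    // Deep copy: allocate a new block and copy all elements.
    Matrix(const Matrix& other) : Matrix(other.size_) {
        std::copy_n(other.data_.get(), size_ * size_, data_.get());
    }

    // Copy-and-swap assignment.
    Matrix& operator=(Matrix other) noexcept {
        std::swap(size_, other.size_);
        std::swap(data_, other.data_);
        return *this;
    }

    // Row-major mapping of (row, column) to the one-dimensional block.
    T& operator()(std::size_t row, std::size_t column) {
        return data_[row * size_ + column];
    }
    const T& operator()(std::size_t row, std::size_t column) const {
        return data_[row * size_ + column];
    }

    std::size_t size() const { return size_; }

private:
    std::size_t size_;
    std::unique_ptr<T[]> data_;
};
```

The memory is released automatically when the matrix goes out of scope, which is the main advantage over a raw C array.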

Decomposition Methods

Let’s finally start discussing different matrix decomposition methods after all these preceding performance considerations.

LU-Decomposition

As long as we can guarantee that all main diagonal elements (also called pivot elements) are unequal to zero at any iteration $k$ of the decomposition, $a_{kk}^{(k)} \neq 0$, we can use a simple LU-Decomposition.

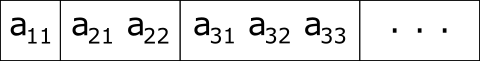

The LU-Decomposition (also known as Gaussian elimination) method decomposes a given matrix A into two resulting matrices with $A = LU$: the lower (L) matrix, which contains the quotients and main diagonal elements of value 1, and the upper (U) matrix, which contains the resulting elements. Through back substitution, the upper matrix can be used to get the solution of the linear equation system.

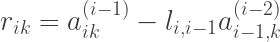

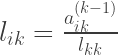

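At first (1) the main diagonal elements of the L matrix need to be initialized with 1. The quotients (2) of the L matrix can then be calculated by

$$
l_{ik} = \frac{a_{ik}^{(k)}}{a_{kk}^{(k)}}, \qquad i = k+1, \dots, n
$$

And the results (3) of the matrix U can afterward be calculated by

$$
a_{ij}^{(k+1)} = a_{ij}^{(k)} - l_{ik} \, a_{kj}^{(k)}, \qquad i, j = k+1, \dots, n
$$

Here $a_{ij}^{(k)}$ denotes the element at the start of iteration $k$, with $a_{ij}^{(1)} = a_{ij}$, and row $k$ of U consists of the elements $u_{kj} = a_{kj}^{(k)}$.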

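A compact sketch of these three steps, without any pivoting, could look as follows. The minimal Matrix struct, the LU struct and the function name lu_decompose() are assumptions made to keep the example self-contained; the markers (1) to (3) follow the description above.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical minimal square matrix (row-major, one-dimensional storage).
struct Matrix {
    std::size_t n;
    std::vector<double> data;
    explicit Matrix(std::size_t size) : n(size), data(size * size, 0.0) {}
    double& operator()(std::size_t r, std::size_t c) { return data[r * n + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n + c]; }
};

struct LU {
    Matrix L;
    Matrix U;
};

// LU-Decomposition without pivoting: A = L * U.
LU lu_decompose(const Matrix& A) {
    const std::size_t n = A.n;
    LU result{Matrix(n), A};  // U starts as a copy of A
    Matrix& L = result.L;
    Matrix& U = result.U;
    for (std::size_t k = 0; k < n; ++k) {
        L(k, k) = 1.0;  // (1) unit main diagonal of L
        if (U(k, k) == 0.0) throw std::domain_error("zero pivot element");
        for (std::size_t i = k + 1; i < n; ++i) {
            L(i, k) = U(i, k) / U(k, k);  // (2) quotient
            for (std::size_t j = k; j < n; ++j) {
                U(i, j) -= L(i, k) * U(k, j);  // (3) resulting elements of U
            }
        }
    }
    return result;
}
```

For the matrix with rows (4, 3) and (6, 3), the sketch yields the quotient 1.5 in L and the rows (4, 3) and (0, -1.5) in U.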

An example of a matrix A and its decomposition matrices L and U would look like the following.

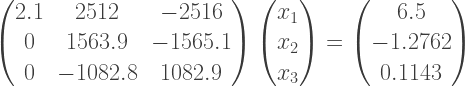

We now have an algorithm to decompose an invertible matrix and solve the linear equation system. So let’s say we have one of these invertible matrices with non-zero main diagonal pivot elements. It should now be no problem to solve the linear equation system with the algorithm described above. Let’s find out and say we have to solve the following linear equation system, for which the exact results are known, with an accuracy of 5 digits:

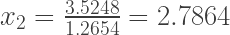

After solving the linear equation system we can get the results for both unknowns by back substitution.

Unfortunately, one of the results is quite off-target. The problem is a loss of significance, caused by the difference between two values of almost the same size. To solve this problem we need the partial pivoting strategy, which exchanges the current pivot row with the row that holds the largest absolute value in the pivot column. OK, let’s try again with the example above, but this time we exchange both rows to have the maximum values at the main diagonal pivots:

Again, after solving the linear equation system we can get the results for both unknowns by back substitution.
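The effect of a tiny pivot element can be reproduced with a small sketch. Note that the system used here is a hypothetical example in double precision, not the 5-digit example of this post: $10^{-20} x_1 + x_2 = 1$ and $x_1 + x_2 = 2$, whose exact solution is very close to $x_1 = 1$ and $x_2 = 1$. The function solve2x2() is an assumed helper that performs one elimination step and the back substitution, optionally with a row exchange.

```cpp
#include <array>
#include <cmath>
#include <utility>

using Matrix2 = std::array<std::array<double, 2>, 2>;
using Vector2 = std::array<double, 2>;

// Solves a 2x2 system by one elimination step and back substitution.
// With pivot == true, the rows are exchanged if the second row has the
// larger absolute value in the first column (partial pivoting).
Vector2 solve2x2(Matrix2 a, Vector2 b, bool pivot) {
    if (pivot && std::abs(a[1][0]) > std::abs(a[0][0])) {
        std::swap(a[0], a[1]);
        std::swap(b[0], b[1]);
    }
    const double q = a[1][0] / a[0][0];        // quotient of the L matrix
    const double u22 = a[1][1] - q * a[0][1];  // remaining element of U
    const double c2 = b[1] - q * b[0];         // transformed right-hand side
    const double x2 = c2 / u22;                // back substitution
    const double x1 = (b[0] - a[0][1] * x2) / a[0][0];
    return {x1, x2};
}
```

Without the row exchange, the quotient is about $10^{20}$, the original coefficients of the second row are rounded away, and the back substitution returns $x_1 = 0$. With the row exchange, the same function returns $x_1 = 1$ and $x_2 = 1$.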

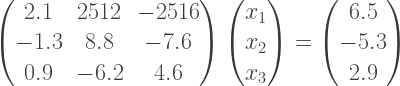

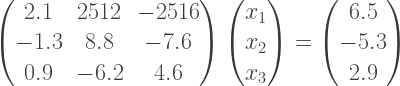

Now the results are much better. But unfortunately, we can show that partial pivoting according to the largest element in the column is not always sufficient either. Let’s say we have the following linear equation system

And after solving the linear equation system and applying partial pivoting before each iteration, the solution looks like

As a result, after the back substitution, the computed values differ noticeably from the exact results. Again the solution is off-target. The difference between the exact and the numerically calculated solution can be explained by the big coefficients after the first iteration, which already lead to a loss of information due to rounding. Additionally, intermediate values of almost the same size again lead to a loss of significance. The reason behind this behavior is the small value of the first pivot element at the first iteration step compared to the other values of the first row. The matrix was neither strictly nor weakly diagonally dominant.

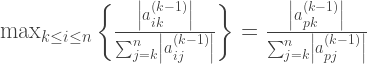

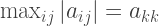

A solution to this problem is the relative scaled pivoting strategy. Before each iteration, this pivoting strategy scales indirectly by choosing as pivot the element whose value is maximal relative to the sum of the values of the elements of its row.

As long as $p \neq k$, row $p$ gets exchanged with row $k$. The exchange of rows can be represented by a permutation matrix $P$, and therefore $PA = LU$.


After initializing the U matrix with the A matrix and the permutation matrix P with an identity matrix, the algorithm calculates (1) the sum of all elements of row i over the columns that still have to be processed and afterward (2) the quotient q. As long as the maximum is not zero and $pk \neq p$, rows pk and p of the L, U and P matrices are swapped.
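A possible sketch of this strategy is shown below. It rests on several assumptions that are not confirmed by the original code: the row sum in step $k$ is taken over the absolute values of the columns $j \ge k$, the quotient is $q_i = |u_{ik}| / \sum_{j \ge k} |u_{ij}|$, and the names Matrix, PLU, identity(), swap_rows() and plu_decompose() are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical minimal square matrix (row-major, one-dimensional storage).
struct Matrix {
    std::size_t n;
    std::vector<double> data;
    explicit Matrix(std::size_t size) : n(size), data(size * size, 0.0) {}
    double& operator()(std::size_t r, std::size_t c) { return data[r * n + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n + c]; }
};

Matrix identity(std::size_t n) {
    Matrix m(n);
    for (std::size_t i = 0; i < n; ++i) m(i, i) = 1.0;
    return m;
}

void swap_rows(Matrix& m, std::size_t r1, std::size_t r2) {
    for (std::size_t j = 0; j < m.n; ++j) std::swap(m(r1, j), m(r2, j));
}

struct PLU {
    Matrix P;
    Matrix L;
    Matrix U;
};

// LU-Decomposition with relative scaled pivoting: P * A = L * U.
PLU plu_decompose(const Matrix& A) {
    const std::size_t n = A.n;
    PLU r{identity(n), Matrix(n), A};
    for (std::size_t k = 0; k < n; ++k) {
        double max = 0.0;
        std::size_t p = k;
        for (std::size_t i = k; i < n; ++i) {
            double sum = 0.0;  // (1) row sum of absolute values, columns j >= k
            for (std::size_t j = k; j < n; ++j) sum += std::abs(r.U(i, j));
            // (2) pivot candidate relative to its row sum
            const double q = sum > 0.0 ? std::abs(r.U(i, k)) / sum : 0.0;
            if (q > max) {
                max = q;
                p = i;
            }
        }
        if (max == 0.0) throw std::domain_error("matrix is singular");
        if (p != k) {  // exchange the rows in all three matrices
            swap_rows(r.P, p, k);
            swap_rows(r.L, p, k);
            swap_rows(r.U, p, k);
        }
        for (std::size_t i = k + 1; i < n; ++i) {
            r.L(i, k) = r.U(i, k) / r.U(k, k);
            for (std::size_t j = k; j < n; ++j) r.U(i, j) -= r.L(i, k) * r.U(k, j);
        }
    }
    for (std::size_t k = 0; k < n; ++k) r.L(k, k) = 1.0;  // unit diagonal of L
    return r;
}
```

For the matrix with rows (2, 100) and (1, 1), plain partial pivoting would keep the first row as pivot row because 2 > 1, whereas the scaled strategy exchanges the rows because 1/2 is larger than 2/102.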

The results after back substitution illustrate the superiority of an LU-Decomposition algorithm with a relative scaled pivoting strategy compared to an LU-Decomposition with a plain diagonal pivoting strategy. Because of the rounding errors of the LU-Decomposition, with and without relative scaled pivoting, an iterative refinement is necessary, which is not part of this post.

Cholesky-Decomposition

Many problems solved with matrices, such as the Finite Element Method, depend on the law of conservation of energy. Important properties of these matrices are that they are symmetric and positive definite. A matrix is positive definite if its corresponding quadratic form is positive for every non-zero vector:

$$
Q(x) = x^T A x > 0 \qquad \text{for all } x \neq 0
$$

That means that the elements of a symmetric positive definite matrix necessarily fulfill the criteria

- $a_{ii} > 0$ for all $i$
- $a_{ij}^2 < a_{ii} a_{jj}$ for all $i \neq j$
- there is one $k$ with $\max_{i,j} |a_{ij}| = a_{kk}$

A symmetric positive definite matrix can be decomposed with the Cholesky-Decomposition algorithm, which results in a lower triangular matrix L. L multiplied by its transpose results in A, that is $A = L L^T$.

The formulas for the elements of the Cholesky-Decomposition are closely related to those of the LU-Decomposition, but because of the symmetry, the algorithm only needs to take care of the elements on and below the diagonal.

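A sketch of the algorithm, based on the standard textbook formulas $l_{kk} = \sqrt{a_{kk} - \sum_{j<k} l_{kj}^2}$ and $l_{ik} = \left(a_{ik} - \sum_{j<k} l_{ij} l_{kj}\right) / l_{kk}$ for $i > k$, could look like this. The minimal Matrix struct and the function name cholesky() are assumptions made to keep the example self-contained.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical minimal square matrix (row-major, one-dimensional storage).
struct Matrix {
    std::size_t n;
    std::vector<double> data;
    explicit Matrix(std::size_t size) : n(size), data(size * size, 0.0) {}
    double& operator()(std::size_t r, std::size_t c) { return data[r * n + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n + c]; }
};

// Cholesky-Decomposition: returns the lower triangular L with A = L * L^T.
// Only the elements of A on and below the diagonal are read.
Matrix cholesky(const Matrix& A) {
    const std::size_t n = A.n;
    Matrix L(n);
    for (std::size_t k = 0; k < n; ++k) {
        double diagonal = A(k, k);
        for (std::size_t j = 0; j < k; ++j) diagonal -= L(k, j) * L(k, j);
        if (diagonal <= 0.0) {
            throw std::domain_error("matrix is not positive definite");
        }
        L(k, k) = std::sqrt(diagonal);
        for (std::size_t i = k + 1; i < n; ++i) {
            double sum = A(i, k);
            for (std::size_t j = 0; j < k; ++j) sum -= L(i, j) * L(k, j);
            L(i, k) = sum / L(k, k);
        }
    }
    return L;
}
```

For the symmetric matrix with rows (4, 12, -16), (12, 37, -43) and (-16, -43, 98), the resulting L has the rows (2, 0, 0), (6, 1, 0) and (-8, 5, 3).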

Both algorithms, LU- and Cholesky-Decomposition, have a computational complexity of $O(n^3)$. But because of the symmetry, the Cholesky-Decomposition needs around half of the operations compared to the LU-Decomposition for a large number of elements. If the symmetry of the symmetric positive definite matrices were also exploited by a more advanced storage scheme, it would be possible to reduce the space complexity significantly as well.
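One common way to exploit the symmetry is packed storage of the lower triangle. The class below is only a sketch with assumed names: it keeps $n(n+1)/2$ instead of $n^2$ elements and maps both (row, column) and (column, row) to the same slot.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical symmetric matrix that stores only the lower triangle,
// row by row: (0,0), (1,0), (1,1), (2,0), (2,1), (2,2), ...
class SymmetricMatrix {
public:
    explicit SymmetricMatrix(std::size_t size)
        : n_(size), data_(size * (size + 1) / 2, 0.0) {}

    double& operator()(std::size_t r, std::size_t c) { return data_[index(r, c)]; }
    double operator()(std::size_t r, std::size_t c) const { return data_[index(r, c)]; }

    std::size_t size() const { return n_; }
    std::size_t stored_elements() const { return data_.size(); }

private:
    // Row r of the lower triangle starts at offset r * (r + 1) / 2.
    static std::size_t index(std::size_t r, std::size_t c) {
        if (r < c) std::swap(r, c);  // (r, c) and (c, r) share one slot
        return r * (r + 1) / 2 + c;
    }

    std::size_t n_;
    std::vector<double> data_;
};
```

For a 3 × 3 matrix this stores 6 instead of 9 values; for large matrices the memory demand is roughly halved.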

Storing symmetric matrices with reduced space complexity

tl;dr

Matrices are a key concept in solving linear equation systems. Efficient implementations of matrices consider not only the computational complexity but also the space complexity of the matrix data. If the size of a matrix is already known at compile time, std::array<T, size> can be a very efficient choice. If the number of elements needs to be defined at runtime, either a std::vector<T> or a raw C array (or, respectively, std::unique_ptr<T[]>) could be the container of choice. To choose a fitting decomposition algorithm, the characteristics of the linear equation system need to be known. Luckily this is the case for many applications.

Did you like the post?

What are your thoughts? Did you like the post?

Feel free to comment and share this post.
