Introduction

Recently, I came across a problem where the client reported severe performance degradation of their OLAP system. Most of the reports that were running were either timing out or were returning data after a long time. The problem started right after the client had undergone an upgrade, that included the following:

- Software change, that includes a higher version of the product that my company develops

- Migration of OLAP server to a new server with additional memory and CPU

- Version change of SQL Server from 2014 edition to 2016 edition (SP2-CU3)

Here are the specifications of the old and new servers:

Old Server

- OS: 2012 R2 Std

- System Model: Proliant DL360 Gen9

- CPU: 28 (CPU E5-2690 v4 @ 2.60GHz)

- RAM: 768 GB

New Server

- OS: 2016 Std

- System Model: Proliant DL360 Gen10

- CPU: 36(Gold 6154 CPU @ 3.00GHz)

- RAM: 1024GB

Investigating the Issue

I started the investigation by looking through some of the useful DMVs, like sys.dm_exec_requests, sys.sysprocesses, etc. My initial review revealed pressure on the temp db. Almost all the processes that were running slow were waiting on PAGELATCH wait type.

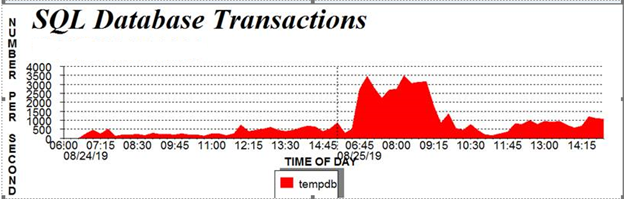

In fact, other than the above wait type, the fact that tempdb was unable to keep up the transaction pressure was also evident by the transaction volume that was hitting tempdb. See the images below (Figure 1 and Figure 2).

Figure 1 - Database transactions

At peak, the transaction rates on temp db were close to 4k/sec.

Figure 2 - tempdb allocation vs the-then tempdb allocation of some of the processes that were running

I started checking if the temp db files were set up correctly in relation to the CPUs. I found that there were only 8 temp db files on a 36 CPU server. This was one of the causes of the slowness, and I immediately requested the OP DBAs to increase this to 18, following a standard that my organization follows. We create files equal to ½ of the total CPUs and as needed thereafter.

Though the system performance improved, there were still complaints of slowness as users had to wait for a fair amount of time to have their requests processed. Everything seemed normal with no major blocking, memory crunch, CPU spike or the disk latency. However, a closer look at the CPU showed it was seen ranging from 40% to 50% (Figure 3), even during peak activity, whereas, on a normal condition, an average utilization of 80%-85% is fairly normal.

Figure 3 - CPU utilization

When looking at the Task Manager, it was found that around 50% of the CPU were getting threads (Fig 4).

Figure 4 - Task Manager view of CPUs

Some followup discussions with the hardware team revealed that SQL Server was unable to send threads across all CPUs due to a non-core-based licensing policy. The problem was every SQL Server 2016 install that was done used older Server+CAL license media. This edition is limited to use 20 cores on the host machine.

To fix this, we needed to use core-based licensing instead for all new installs. To fix this problem in existing servers, a downtime window was approved by the client as it would have required a SQL restart.

An example of the change that was implemented:

Pre Change

- Description: Microsoft SQL Server 2016

- ProductName: SQL Server 2016

- Type: RTM

- Version:13

- SPLevel: 0

- Installation Edition: Enterprise: Server+CAL

Post Change

- Description: Microsoft SQL Server 2016

- ProductName: SQL Server 2016

- Type: RTM

- Version:13

- SPLevel: 0

- Installation Edition: Enterprise: Core-based Licensing

The above change resulted in threads being spread across multiple processors, and, the system performance increased massively.

This revelation also led to the correction of the overall CPU licensing policies and tempdb set up during installation, which served as a proactive approach in addressing future client issues, that possibly could have come at later dates.

Additionally, two of the client stored procedures were identified as a 'heavy hitter', in terms of CPU and IO consumption, and, efforts were put in to replace them with an optimized version, that had a better coding approach. The older version of these stored procedures were performing a very expensive operation, through a single transaction, and, this was broken down to smaller batches, along with replacement of CTEs with temp tables.

The above issue was quite interesting and a learning experience for me, and, I felt this was a right medium where I could share it.