Machine learning (ML) with neural networks is enabling exciting new inference capabilities for software. Typically, ML models have run in the cloud, which meant that, to make a classification or prediction, you needed to send the text, sound, or images over the network to an external vendor. The performance of such solutions strongly depends on the network bandwidth and latency. Moreover, sending your data to an external service introduces potential privacy concerns.

To solve those problems, AI is now moving to edge devices. Arm is leading this revolution by providing accelerated hardware IPs and middle-layer solutions.

Arm NN, Arm’s open-source software tool, helps developers build an application once and port it to different platforms seamlessly and effortlessly.

Arm NN and the Arm Compute Library are open-source libraries for optimizing ML on Arm-based processors and IoT edge devices. ML workloads can run entirely on edge devices, allowing sophisticated AI-enabled software to run almost anywhere, even without network access.

In practice, this means you can train your models using the most popular ML frameworks. You can then load models and optimize them on your edge device to perform fast inference, eliminating network and privacy problems.

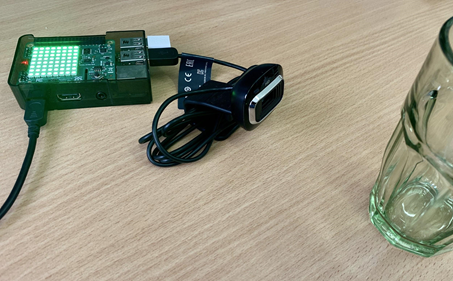

To demonstrate ML with edge computing resources, we'll use Arm NN APIs to classify images of trash from a webcam attached to a Raspberry Pi. The Raspberry Pi will display classification results.

What do we need for this tutorial?

Here's what we used to create the device and application for this tutorial:

- A Raspberry Pi device. The tutorial has been verified with Pi 2, Pi 3, and Pi 4 Model B.

- A MicroSD card

- A USB or MIPI camera module for the Raspberry Pi

- If you want to build your own Arm NN library, you also need a Linux host machine or a computer with Linux virtual environment installed.

- Glass, paper, cardboard, plastic, metal or any trash that your Raspberry Pi can help you classify!

Device Setup

I used the Raspberry Pi 4 Model B with a quad-core ARM Cortex A72 CPU, 1GB onboard memory, an 8GB MicroSD card, a WiFi dongle, a USB camera (Microsoft HD-3000).

Before powering up the device, you need to install Raspbian on the MicroSD card as described on the Setting up your Raspberry Pi tutorial. I used NOOBS for easy setup.

I then booted my Raspberry Pi, configured Raspbian, and set up virtual network computing (VNC) for remote access to my Raspberry Pi. You’ll find detailed instructions for setting up the VNC at the RaspberryPi.org VNC (Virtual Network Computing) page.

After configuring the hardware, I started on the software. The solution is comprised of three components:

- camera.hpp implements helper methods for capturing images from a webcam.

- ml.hpp contains methods to load the ML model and classify trash images based on camera input.

- main.cpp contains the main method, which wires the above components together. This is the entry point of the application.

All of these components are discussed below. Everything you see here was created with the Geany editor, which comes with the default Raspbian installation.

Setting up the Camera

To get images from the webcam, I used OpenCV, the computer vision (CV) open-source library. This library provides a convenient interface to capture and process images. You can easily use the same API across different applications and devices, ranging from IoT to mobile to desktop.

The easiest way to incorporate OpenCV into your IoT Raspbian apps is to install the libopencv-dev package through apt-get:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install libopencv-dev

After the packages are downloaded and installed, you’re ready to capture images from a webcam. I started by implementing two methods: grabFrame and showFrame (see camera.hpp in the companion code):

Mat grabFrame()

{

// Open default camera

VideoCapture cap(0);

// If camera was open, get the frame

Mat frame;

if (cap.isOpened())

{

cap >> frame;

imwrite("image.jpg", frame);

}

else

{

printf("No valid camera\n");

}

return frame;

}

void showFrame(Mat frame)

{

if (!frame.empty())

{

imshow("Image", frame);

waitKey(0);

}

}

The first method, grabFrame, opens the default web camera (index of 0) and captures a single frame. Note that the C++ OpenCV interface uses the Mat class to represent images, so grabFrame returns objects of this type. The raw image data can be accessed by reading the data member of the Mat class.

The second method, showFrame, is used to display the captured image. To that end, showFrame uses OpenCV’s imshow method to create a window in which the image will be displayed. I then invoke the waitKey method, which forces the image window to be displayed until the user hits a key on the keyboard. I used the waitKey parameter to specify the timeout. Here, I used an infinite timeout, represented by 0.

To test the above methods, I invoked them within the main method (main.cpp):

int main()

{

// Grab image

Mat frame = grabFrame();

// Display image

showFrame(frame);

return 0;

}

To build the app, I used the g++ command and linked the OpenCV libraries through pkg-config:

g++ main.cpp -o trashClassifier 'pkg-config --cflags --libs opencv'

Afterward, I executed the app to capture a single image:

Trash Dataset and Model Training

I trained the TensorFlow classification model using an existing dataset created by Gary Thung and available from his trashnet Github repo.

To train the model, I followed the steps of the TensorFlow image classification tutorial. However, I trained the model on images of 256x192 pixels — half the width and height of the original images from the trash dataset. Here is what our model consists of:

# Building the model

model = Sequential()

# 3 convolutional layers

model.add(Conv2D(32, (3, 3), input_shape = (IMG_HEIGHT, IMG_WIDTH, 3)))

model.add(Activation("relu"))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(64, (3, 3)))

model.add(Activation("relu"))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(64, (3, 3)))

model.add(Activation("relu"))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Dropout(0.25))

# 2 hidden layers

model.add(Flatten())

model.add(Dense(128))

model.add(Activation("relu"))

model.add(Dense(128))

model.add(Activation("relu"))

# The output layer with 6 neurons, for 6 classes

model.add(Dense(6))

model.add(Activation("softmax"))

The model achieved approximately 83% accuracy. We use tf.lite.TFLiteConverter to convert it to TensorFlow Lite format trash_model.tflite.

converter = tf.lite.TFLiteConverter.from_keras_model_file('model.h5')

model = converter.convert()

file = open('model.tflite' , 'wb')

file.write(model)

Setting up the Arm NN SDK

The next step is to prepare the Arm NN SDK. To generate the Arm NN library for your Raspberry Pi, you can either follow the Arm Cross-compile Arm NN and Tensorflow for the Raspberry Pi tutorial or run the automated script from the Arm Tool-Solutions Github repository to cross-compile the SDK. The Arm NN 19.08 binary tar file for Raspberry Pi can be found on GitHub.

No matter which approach you choose, copy the resulting tar (armnn-dist) to your Raspberry Pi. In this case, I use the VNC to transfer files between my development PC and the Raspberry Pi.

Next, set the LD_LIBRARY_PATH environment variable to point to the armnn/lib subfolder of armnn-dist:

export LD_LIBRARY_PATH=/home/pi/armnn-dist/armnn/lib

Here, I assume that the armnn-dist is located under the home/pi folder.

Use Arm NN for on-device ML inference

Load the model output labels

You must use the model output labels to interpret the outputs of the model. In our code, we create a string vector to store the 6 classes.

const std::vector<std::string> modelOutputLabels = {"cardboard", "glass", "metal", "paper", "plastic", "trash"};

Load and pre-process an input image

We must pre-process images before the model can use them as inputs. The pre-processing method that we use depends on the framework, model, or model data type.

Our model has an input of a Conversion 2D layer, with id ‘conv2d_input’. Its output is a softmax activation layer, with id ‘activation_5/Softmax’. The model properties are extracted by using Tensorboard, a visualization tool provided by TensorFlow for model inspection.

const std::string inputName = "conv2d_input";

const std::string outputName = "activation_5/Softmax";

const unsigned int inputTensorWidth = 256;

const unsigned int inputTensorHeight = 192;

const unsigned int inputTensorBatchSize = 32;

const armnn::DataLayout inputTensorDataLayout = armnn::DataLayout::NHWC;

Note that the batch size used for training is equal to 32, so you need to provide at least 32 images for training and validation.

The following code loads and pre-processes an image the camera captures:

// Load and preprocess input image

const std::vector<TContainer> inputDataContainers =

{ PrepareImageTensor<uint8_t>("image.jpg" ,

inputTensorWidth, inputTensorHeight,

normParams,

inputTensorBatchSize,

inputTensorDataLayout) } ;

All logic related to loading the ML model and making predictions is contained in the ml.hpp file,

Create a parser and load the network

The next step when working with Armn NN is to create a parser object that will be used to load your network file. Arm NN has parsers for a variety of model file types, including TFLite, ONNX, Caffe etc. Parsers handle creation of the underlying Arm NN graph so you don't need to construct your model graph by hand.

In this example, we are using TFLite model so we create the TfLite parser to load the model from the specified path.

The most important method from ml.hpp is loadModelAndPredict. It first creates the TensorFlow model parser:

// Import the TensorFlow model.

// Note: use CreateNetworkFromBinaryFile for .tflite files.

armnnTfLiteParser::ITfLiteParserPtr parser =

armnnTfLiteParser::ITfLiteParser::Create();

armnn::INetworkPtr network =

parser->CreateNetworkFromBinaryFile("trash_model.tflite");

Next, I invoke the armnnTfLiteParser::ITfLiteParser::Create method and use this parser to load trash_model.tflite.

Once the model is parsed, you create the bindings to those layers using the GetNetworkInputBindingInfo / GetNetworkOutputBindingInfo method:

// Find the binding points for the input and output nodes

const size_t subgraphId = 0;

armnnTfParser::BindingPointInfo inputBindingInfo =

parser->GetNetworkInputBindingInfo(subgraphId, inputName);

armnnTfParser::BindingPointInfo outputBindingInfo =

parser->GetNetworkOutputBindingInfo(subgraphId, outputName);

You must prepare a container to receive the output of the model. The output tensor size is equal to the number of model output labels. This is implemented as below:

// Output tensor size is equal to the number of model output labels

const unsigned int outputNumElements = modelOutputLabels.size();

std::vector<TContainer> outputDataContainers = { std::vector<uint8_t>(outputNumElements)};

Choose backends, create runtime, and optimize the model

You must optimize your network and load it onto a compute device. The Arm NN SDK supports optimized execution backends on Arm CPU, Mali GPU, and DSP devices. Backends are identified by a string that must be unique across backends. You can specify one or more backend in order of preference.

In our code, Arm NN decides which layers are supported by the backend. First the CPU is checked. If one or more of the layers can't be run on CPU, it will fall back first to the reference implementation.

After specifying the backend list, you can create a runtime and optimize the network in the runtime context. The backends may choose to implement backend-specific optimizations. Arm NN splits the graph into subgraphs based on backends, it calls an optimize subgraph function on each of them, and substitutes the corresponding sub-graph in the original graph with its optimized version when possible.

Once this is done, LoadNetwork creates the backend-specific workloads for the layers, it creates a backend specific workload factory and calls this to create the workloads. The input image is wrapped in a const tensor and bound to the input tensor.

// Optimize the network for a specific runtime compute

// device, e.g. CpuAcc, GpuAcc

armnn::IRuntime::CreationOptions options;

armnn::IRuntimePtr runtime = armnn::IRuntime::Create(options);

armnn::IOptimizedNetworkPtr optNet = armnn::Optimize(*network,

{armnn::Compute::CpuAcc, armnn::Compute::CpuRef},

runtime->GetDeviceSpec());

The Arm NN SDK inference engine provides a bridge between existing neural network frameworks and Arm Cortex-A CPUs, Arm Mali GPUs, and DSPs. Running ML inference with Arm NN SDK ensures the ML algorithms will be optimized for the underlying hardware.

After optimization, you load the network to the runtime:

// Load the optimized network onto the runtime device

armnn::NetworkId networkIdentifier;

runtime->LoadNetwork(networkIdentifier, std::move(optNet));

Then, perform predictions using the EnqueueWorkload method:

// Predict

armnn::Status ret = runtime->EnqueueWorkload(networkIdentifier,

armnnUtils::MakeInputTensors(inputBindings, inputDataContainers),

armnnUtils::MakeOutputTensors(outputBindings, outputDataContainers));

The last step gives you the prediction result.

std::vector<uint8_t> output = boost::get<std::vector<uint8_t>>(outputDataContainers[0]);

size_t labelInd = std::distance(output.begin(), std::max_element(output.begin(), output.end()));

std::cout << "Prediction: ";

std::cout << modelOutputLabels[labelInd] << std::endl;

In the above example, the only aspect that’s inherently related to the ML framework is the part where you load the model and configure bindings. Everything else is independent of the ML framework. Hence, you can easily switch among various ML models without modifying other parts of your application.

Putting Things Together and Building the App

Finally, I put all of the components together within the main method (main.cpp):

#include "camera.hpp"

#include "ml.hpp"

int main()

{

// Grab frame from the camera

grabFrame(true);

// Load ML model and predict

loadModelAndPredict();

return 0;

}

Note that grabFrame has an extra parameter. When this parameter is true, the camera image is converted to grayscale and resized to 256x192 pixels to match the input format of the ML model, and the converted image is then passed to the loadModelAndPredict method.

To build the app, use the g++ command and link OpenCV and the Arm NN SDK:

g++ main.cpp -o trashClassifier 'pkg-config --cflags --libs opencv' -I/home/pi/armnn-dist/armnn/include -I/home/pi/armnn-dist/boost/include -L/home/pi/armnn-dist/armnn/lib -larmnn -lpthread -linferenceTest -lboost_system -lboost_filesystem -lboost_program_options -larmnnTfLiteParser -lprotobuf

Again, I assume that Arm NN SDK is in the home/pi/armnn-dist folder. Launch the application and take a photo of some cardboard.

pi@raspberrypi:~/ $ ./trashClassifier

ArmNN v20190800

Running network...

Prediction: cardboard

If, during app execution, you see the message, "error while loading shared libraries: libarmnn.so: cannot open shared object file: No such file or directory," check to make sure your LD_LIBRARY_PATH is set correctly.

Wrapping Up

You could also improve the app by triggering image capture and recognition via an external signal. To do this, you’d modify the loadAndPredict method from the ml.hpp module. You need to separate model loading from the prediction (inference).

This article shows how you can move AI from the cloud to the edge. Arm leads in this transition with hardware that’s tailored for inference and software components to help you implement your solutions no matter which ML framework you choose: TensorFlow, TensorFlow Core, Caffe, or any other ML framework.

You can also refer to Arm NN how to guides for additional details:

About Arm

Check out the Arm solutions page to learn more about what we are doing in AI and ML. If you are a business that wants to partner with Arm, check out our Arm AI Partner Program.