Introduction

At 4DotNet, we have an annual developer day: one day a year, we all come together to challenge ourselves with one of the latest technologies or features. While we were looking for a good challenge, I remembered a demo project by Microsoft that describes a game in which players are instructed to take a picture of a particular object. The object changes every round. Players grab their mobile device and take a picture of that object. The first player to do so wins the round, and a new instruction with a different object is sent out. The technology behind this game (validating whether the picture contains the requested object) is Azure Cognitive Services (ACS). Because I had never worked on a project that uses ACS, I thought this would be a nice case for our developer day, so I proposed that several teams re-create this game. Then our CEO came in and said that it would be fun to build the game, but not challenging enough, because it “can't be that hard to call an API in Azure”. I agreed with him, but since I had (again) never used ACS, I was still curious about it, so I started a small project to see how it works. So here we go…

Getting ACS Up & Running

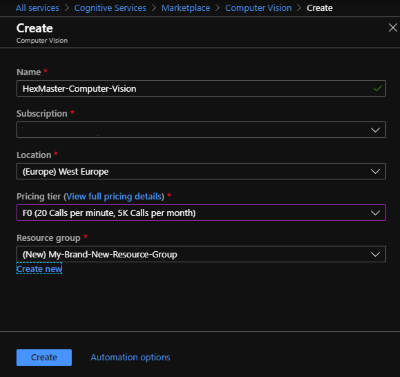

First, I logged in to the Azure Portal to see what it takes to spin up a Cognitive Service. As soon as you do, you're confronted with a wide variety of services to choose from:

- Bing Autosuggest
- Bing Custom Search
- Bing Entity Search
- Bing Search
- Bing Spell Check
- Computer Vision
- Content Moderator
- Custom Vision
- …
- Immersive Reader (preview)
- Language Understanding

Today, 20 very smart features can be enabled under the hood of Cognitive Services, so you need to know which direction you're heading. For example, if you want to use face recognition, you could go for Computer Vision or Custom Vision, which allow you to interpret pictures and images. However, ACS also contains a service called ‘Face’ that is far more suitable for this particular job. So be aware that a lot of services are available to you, and pick wisely. ;)


For this demo, I wanted to upload pictures and have the service tell me what's in them, so I chose ‘Computer Vision’. As you can see, you can create one free Cognitive Service instance. Yes!! It's free!! That holds as long as you make fewer than 5K calls a month and fewer than 20 calls per minute. So for testing and playing around, this free instance should be fine.
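A loop of test uploads can easily exceed the free tier's per-minute limit. As a minimal sketch, assuming you want to guard that 20-calls-per-minute limit on the client side, you could use a sliding-window counter. `FreeTierThrottle` and `TryAcquire` are hypothetical names, not part of any Azure SDK. The class is not thread-safe, and it does not track the monthly quota.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: keeps a client under a per-minute call limit
// (default 20 calls per minute, matching the free tier described above).
// Not thread-safe; the monthly quota is not tracked.
public sealed class FreeTierThrottle
{
    private readonly int _maxCalls;
    private readonly TimeSpan _window;
    private readonly Queue<DateTime> _calls = new Queue<DateTime>();

    public FreeTierThrottle(int maxCalls = 20, TimeSpan? window = null)
    {
        _maxCalls = maxCalls;
        _window = window ?? TimeSpan.FromMinutes(1);
    }

    // Returns true (and records the call) if another call fits in the
    // sliding window ending at 'now'. Otherwise returns false.
    public bool TryAcquire(DateTime now)
    {
        // Drop timestamps that have slid out of the window.
        while (_calls.Count > 0 && now - _calls.Peek() >= _window)
        {
            _calls.Dequeue();
        }

        if (_calls.Count >= _maxCalls)
        {
            return false;
        }

        _calls.Enqueue(now);
        return true;
    }
}
```

Before each request, call `TryAcquire(DateTime.UtcNow)`. When it returns false, skip or delay the call instead of letting the service reject it.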

Developing the Cool Stuff

OK, so now the service is running and everything should be set. When you navigate to the Computer Vision instance in the Azure portal, you'll see a key (Key1) and an endpoint. You need both to call the service and get a meaningful response.

I opened Visual Studio (2019, updated to the latest version so I can run Azure Functions on .NET Core 3.0). Then I started a new Azure Functions project. I opened the local.settings.json file and edited it so it looks like this:

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
    "COMPUTER_VISION_SUBSCRIPTION_KEY": "your-key",
    "COMPUTER_VISION_ENDPOINT": "your-endpoint"
  }
}
```

Of course, make sure you replace your-key and your-endpoint with the values you find in the Azure Portal. Next, I created a new function with a BLOB trigger. The Computer Vision service accepts images of up to 4 MB, so if you plan to shoot images with your full-frame SLR and upload them as-is, think again. Instead, I imagined a system that uploads pictures to BLOB storage using the Valet Key pattern. An Azure Function resizes each image to fit the needs of the Computer Vision service and stores the result as a BLOB in a container called ‘scaled-images’. The function we're going to create is triggered as soon as an image is stored in the ‘scaled-images’ container.
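The resize step above is only described, not shown. As a minimal sketch of the checks such a function might perform, the helpers below test a byte length against the size limit and compute aspect-ratio-preserving target dimensions. The class name, `MaxImageBytes`, and the `maxSide` parameter are hypothetical. Reading 4 MB as 4 × 1024 × 1024 bytes is an assumption. The actual pixel resampling would need an imaging library and is not shown.

```csharp
using System;

// Hypothetical helpers for the "resize before analyzing" step described above.
public static class ImageLimits
{
    // Assumption: 4 MB is read as 4 * 1024 * 1024 bytes; check the
    // Computer Vision documentation for the exact limit of your API version.
    public const long MaxImageBytes = 4L * 1024 * 1024;

    public static bool IsWithinSizeLimit(long byteLength) =>
        byteLength > 0 && byteLength <= MaxImageBytes;

    // Computes new dimensions so the longest side is at most maxSide pixels,
    // preserving the aspect ratio. Images that already fit are left unchanged.
    public static (int Width, int Height) FitWithin(int width, int height, int maxSide)
    {
        if (width <= 0 || height <= 0 || maxSide <= 0)
        {
            throw new ArgumentException("Width, height and maxSide must be positive.");
        }

        int longest = Math.Max(width, height);
        if (longest <= maxSide)
        {
            return (width, height);
        }

        double scale = (double)maxSide / longest;
        int newWidth = Math.Max(1, (int)Math.Round(width * scale));
        int newHeight = Math.Max(1, (int)Math.Round(height * scale));
        return (newWidth, newHeight);
    }
}
```

For example, `FitWithin(6000, 4000, 1024)` yields a 1024 × 683 target. Capping the longest side rather than the file size keeps the arithmetic simple. The byte length still has to be checked after encoding.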

```csharp
[FunctionName("CognitiveServicesFunction")]
public static async Task Run(
    [BlobTrigger("scaled-images/{name}", Connection = "")] CloudBlockBlob blob,
    string name,
    ILogger log)
{
}
```

That's it: the function now triggers as soon as a BLOB is written to our container. Computer Vision can easily be called with an HTTP request. I'll create an HTTP client later on, but first we need the endpoint and our key so we can make valid HTTP requests. Inside your function class, add three static strings like so:

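```csharp
private static readonly string subscriptionKey =
    Environment.GetEnvironmentVariable("COMPUTER_VISION_SUBSCRIPTION_KEY");

private static readonly string endpoint =
    Environment.GetEnvironmentVariable("COMPUTER_VISION_ENDPOINT");

// Static fields initialize in declaration order, so endpoint is already set here.
// TrimEnd('/') prevents a missing or doubled slash between endpoint and path.
private static readonly string uriBase = endpoint.TrimEnd('/') + "/vision/v2.1/analyze";
```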

The first two lines read your key and endpoint from the settings, and the third appends the path of the Computer Vision analysis service to the endpoint. As you can see, we'll be calling version 2.1 of the analysis service. Now move back inside your function and create the HTTP client.

```csharp
var httpClient = new HttpClient();
httpClient.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
```
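Creating a new `HttpClient` on every invocation works for a demo. Under load, a shared instance is the commonly recommended pattern. With a shared client, adding the key to `DefaultRequestHeaders` on every run would accumulate duplicate headers, so the key belongs on each request instead. Here is a minimal sketch of that variant; `VisionRequests` and `CreateAnalyzeRequest` are hypothetical names.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Sketch: share one HttpClient across invocations and attach the key per request.
public static class VisionRequests
{
    // One shared client instead of a new HttpClient per function run.
    public static readonly HttpClient SharedClient = new HttpClient();

    // Builds (but does not send) a POST request carrying the subscription key
    // in the request headers and the raw image bytes as the body.
    public static HttpRequestMessage CreateAnalyzeRequest(string uri, string subscriptionKey, byte[] image)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, uri)
        {
            Content = new ByteArrayContent(image)
        };
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }
}
```

Inside the function, you would then send the request with `await VisionRequests.SharedClient.SendAsync(request)` rather than `PostAsync` on a per-invocation client.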

As you can see, we add your personal key to the request headers. Now it's time to define what we want the service to analyze. You can set this using the VisualFeature enum, which is available in the Cognitive Services NuGet library. In the end, using the enum only adds a query string parameter with string values, so for our plain HTTP request, we just add that query string parameter ourselves. Available options are:

- Categories - categorizes image content according to a taxonomy defined in the documentation
- Tags - tags the image with a detailed list of words related to the image content
- Description - describes the image content with a complete English sentence
- Faces - detects whether faces are present; if so, generates coordinates, gender, and age
- ImageType - detects whether the image is clip art or a line drawing
- Color - determines the accent color, the dominant color, and whether the image is black and white
- Adult - detects whether the image is pornographic in nature (depicts nudity or a sex act); sexually suggestive content is also detected
- Celebrities - identifies celebrities if detected in the image
- Landmarks - identifies landmarks if detected in the image

I'm going for Categories, Description, and Color, so in the end, the endpoint I call is:

https://instance-name.cognitiveservices.azure.com/vision/v2.1/analyze?visualFeatures=Categories,Description,Color
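Building that query string by hand invites typos. Here is a minimal sketch that assembles it from a small local enum. `VisualFeature` and `AnalyzeUri` are hypothetical types written for this example. They are not the enum from the Cognitive Services NuGet package. Celebrities and Landmarks are left out because, as far as I know, v2.1 requests them through a separate `details` query parameter.

```csharp
using System;
using System.Linq;

// Hypothetical local enum mirroring the visualFeatures values listed above.
// It is NOT the enum shipped in the Cognitive Services NuGet package.
public enum VisualFeature
{
    Categories, Tags, Description, Faces, ImageType, Color, Adult
}

public static class AnalyzeUri
{
    // Joins the endpoint and the v2.1 analyze path, then appends the
    // visualFeatures query parameter as a comma-separated list.
    public static string Build(string endpoint, params VisualFeature[] features)
    {
        if (string.IsNullOrWhiteSpace(endpoint))
        {
            throw new ArgumentException("Endpoint is required.", nameof(endpoint));
        }

        string baseUri = endpoint.TrimEnd('/') + "/vision/v2.1/analyze";
        if (features == null || features.Length == 0)
        {
            return baseUri;
        }

        // Enum.ToString() yields the member name, e.g. "Categories".
        string list = string.Join(",", features.Distinct().Select(f => f.ToString()));
        return baseUri + "?visualFeatures=" + list;
    }
}
```

Calling `AnalyzeUri.Build("https://instance-name.cognitiveservices.azure.com/", VisualFeature.Categories, VisualFeature.Description, VisualFeature.Color)` produces the same URL as above.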

Posting the Request

Almost everything is set now. We just need to add one more header and, of course, the image, so let's go! First, I download the BLOB content to a byte array:

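```csharp
// Properties.Length is only populated after the attributes have been fetched.
await blob.FetchAttributesAsync();
long fileByteLength = blob.Properties.Length;

// Allocate a buffer of the BLOB's size and download the content into it.
byte[] fileContent = new byte[fileByteLength];
var downloaded = await blob.DownloadToByteArrayAsync(fileContent, 0);
```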

Because I'm going to send this byte array in an HTTP request, I wrap it in a ByteArrayContent object so the client can post it as HttpContent. To complete the request:

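```csharp
// Build the full request URI (uriBase was defined earlier) and declare the response.
string uri = uriBase + "?visualFeatures=Categories,Description,Color";
HttpResponseMessage response;

// MediaTypeHeaderValue lives in System.Net.Http.Headers.
using (ByteArrayContent content = new ByteArrayContent(fileContent))
{
    content.Headers.ContentType =
        new MediaTypeHeaderValue("application/octet-stream");
    response = await httpClient.PostAsync(uri, content);
}
```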

I set the MediaTypeHeaderValue so that ‘application/octet-stream’ is sent in the Content-Type header. Then I post the request and wait for the response.
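The code above stores the response but does not check it. Here is a minimal sketch of a helper that returns the JSON body on success and otherwise throws with the status code and the service's error body included. That is easier to debug than a bare status check. `AnalyzeResponse.ReadJsonAsync` is a hypothetical name.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public static class AnalyzeResponse
{
    // Returns the JSON body for a successful response. For a failed response,
    // throws an HttpRequestException that includes the status code and body.
    public static async Task<string> ReadJsonAsync(HttpResponseMessage response)
    {
        if (response == null)
        {
            throw new ArgumentNullException(nameof(response));
        }

        // Content can be null on manually constructed responses in .NET Core 3.x.
        string body = response.Content == null
            ? string.Empty
            : await response.Content.ReadAsStringAsync();

        if (!response.IsSuccessStatusCode)
        {
            throw new HttpRequestException(
                $"Computer Vision returned {(int)response.StatusCode} {response.StatusCode}: {body}");
        }

        return body;
    }
}
```

In the function, `string json = await AnalyzeResponse.ReadJsonAsync(response);` followed by `log.LogInformation(json);` would write the result to the Functions console.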

The Response

Cognitive Services responds with a JSON message that contains the information for each requested visual feature, as it applies to the uploaded image. Below is an example of the JSON returned:

```json
{
  "categories": [
    {
      "name": "abstract_",
      "score": 0.00390625
    },
    {
      "name": "others_",
      "score": 0.0703125
    },
    {
      "name": "outdoor_",
      "score": 0.00390625,
      "detail": {
        "landmarks": []
      }
    }
  ],
  "color": {
    "dominantColorForeground": "White",
    "dominantColorBackground": "White",
    "dominantColors": [
      "White"
    ],
    "accentColor": "B98412",
    "isBwImg": false,
    "isBWImg": false
  },
  "description": {
    "tags": [
      "person",
      "riding",
      "outdoor",
      "feet",
      "board",
      "road",
      "standing",
      "skateboard",
      "little",
      "trick",
      "...",
      "this was a long list, I removed some values"
    ],
    "captions": [
      {
        "text": "a person riding a skate board",
        "confidence": 0.691531186126425
      }
    ]
  },
  "requestId": "56320ec6-a008-48fe-a69a-e2ad3b374804",
  "metadata": {
    "width": 1024,
    "height": 682,
    "format": "Jpeg"
  }
}
```
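To work with this response in code rather than reading it in the console, you could pull out the caption, the accent color, and the best-scoring category, the same fields discussed below. Here is a minimal sketch using `System.Text.Json` (in-box since .NET Core 3.0). `AnalysisSummary` and `AnalysisParser` are hypothetical types, and missing sections are simply left unset.

```csharp
using System;
using System.Linq;
using System.Text.Json;

// Hypothetical summary of the fields discussed in the text.
public sealed class AnalysisSummary
{
    public string Caption { get; set; }
    public double CaptionConfidence { get; set; }
    public string AccentColor { get; set; }
    public string TopCategory { get; set; }
    public double TopCategoryScore { get; set; }
}

public static class AnalysisParser
{
    public static AnalysisSummary Parse(string json)
    {
        var summary = new AnalysisSummary();

        // All values are copied out before the JsonDocument is disposed.
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            JsonElement root = doc.RootElement;

            // description.captions[0] holds the generated sentence, if any.
            if (root.TryGetProperty("description", out JsonElement description) &&
                description.TryGetProperty("captions", out JsonElement captions) &&
                captions.GetArrayLength() > 0)
            {
                JsonElement first = captions[0];
                summary.Caption = first.GetProperty("text").GetString();
                summary.CaptionConfidence = first.GetProperty("confidence").GetDouble();
            }

            // color.accentColor is a hex string without the leading '#'.
            if (root.TryGetProperty("color", out JsonElement color) &&
                color.TryGetProperty("accentColor", out JsonElement accent))
            {
                summary.AccentColor = "#" + accent.GetString();
            }

            // Pick the category with the highest score.
            if (root.TryGetProperty("categories", out JsonElement categories) &&
                categories.GetArrayLength() > 0)
            {
                JsonElement best = categories.EnumerateArray()
                    .OrderByDescending(c => c.GetProperty("score").GetDouble())
                    .First();
                summary.TopCategory = best.GetProperty("name").GetString();
                summary.TopCategoryScore = best.GetProperty("score").GetDouble();
            }
        }

        return summary;
    }
}
```

Applied to the response above, this yields the caption "a person riding a skate board", the accent color "#B98412", and "others_" as the top category with a score of 0.0703125.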


The picture I sent was a larger version of the image shown here, and as you can see, the description Computer Vision came up with is pretty spot-on: ‘a person riding a skate board’ is exactly what's in the picture. Some of the tags are pretty neat as well. The accent color #B98412 returned by the service also fits: it's a darker yellowish color that matches the picture. Only the categories are less impressive. The service doesn't come up with a solid idea, and all three categories have very low confidence scores (about 0.07 at best). To make it easier for you, I shared my Azure Function in a GitHub Gist. Make sure there's a container called ‘scaled-images’ in your storage account. Now you can run the function and start uploading images to the ‘scaled-images’ container. The function will pick them up and analyze them, and the resulting JSON message will be shown in the Azure Functions console window.

So there you go, you now know how to use Azure Cognitive Services as well… And a Merry Christmas!

History

- 31st December, 2019: Initial version