Introduction

I had an application in mind that needed to capture audio, save it to disk, and play it back later, all in Silverlight. I also needed the saved audio file to be as small as possible.

Silverlight has offered access to raw audio samples for a while now, but preparing the raw bytes and writing them to disk is a task you have to take care of yourself. You use AudioCaptureDevice to connect to an available audio source (e.g., a microphone) and the AudioSink class in the System.Windows.Media namespace to access the raw samples.

Capturing the raw data was only part of what I wanted: I also needed to write it to a file, compressed. I had no clue what to use, given that the feature had to work in Silverlight.

NSpeex (http://nspeex.codeplex.com/) came to the rescue. I found it after a quick search on Code Project (see this article's discussion section: http://www.codeproject.com/Articles/20093/Speex-in-C?msg=3660549#xx3660549xx).

Unfortunately there was not much documentation on the website, so I had a tough time figuring out the whole encoding/decoding process.

This is how I did it, and I hope it helps others who need to work on a similar application.

Preparations

- Access to CaptureDevices requires elevated trust …
- Writing to disk requires you to enable running the application out of browser
- Encoding raw samples requires you to write an audio capture class that derives from AudioSink
- Decoding to raw samples requires you to write a custom stream source class that derives from MediaStreamSource

Our audio capture class must override the following methods of the AudioSink abstract class: OnCaptureStarted(), OnCaptureStopped(), OnFormatChange(), and OnSamples().

As for MediaStreamSource, you may wish to refer to my previous article, which summarizes the methods to override: http://www.codeproject.com/Articles/251874/Play-AVI-files-in-Silverlight-5-using-MediaElement

Using the code

- Create a new Silverlight 5 Project (C#) and give it any name you wish

- Set the project properties as explained in my previous article: http://www.codeproject.com/Articles/251874/Play-AVI-files-in-Silverlight-5-using-MediaElement

- Open the default created UserControl named MainPage.xaml

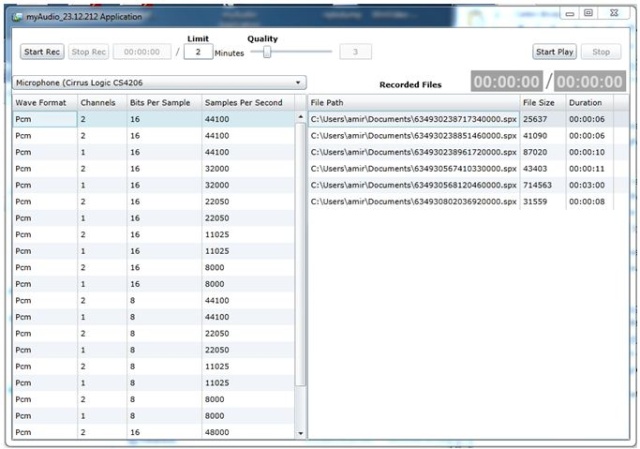

- Change the XAML code to resemble the one below, which includes the following controls:
  - MediaElement: requesting audio samples
  - Four buttons: start/stop recording, start/stop playing audio
  - Slider: adjusting the audio quality during encoding
  - TextBoxes: displaying the record/play audio duration and the recording limit in minutes
  - ComboBox: listing the capture devices
  - Two DataGrids: listing the audio formats and displaying the saved audio file details

<Grid x:Name="LayoutRoot" Background="White">
    <Grid.RowDefinitions>
        <RowDefinition Height="56"/>
        <RowDefinition Height="344*" />
    </Grid.RowDefinitions>
    <MediaElement AutoPlay="True"
        Margin="539,8,0,0" x:Name="MediaPlayer"
        Stretch="Uniform" Height="20" Width="47"
        VerticalAlignment="Top" HorizontalAlignment="Left"
        MediaOpened="MediaPlayer_MediaOpened" MediaEnded="MediaPlayer_MediaEnded" />
    <Button Content="Start Rec" Height="23" HorizontalAlignment="Left" Margin="12,23,0,0"
        Name="StartRecording" VerticalAlignment="Top" Width="64" Click="StartRecording_Click" />
    <Button Content="Stop Rec" Height="23" HorizontalAlignment="Left" Margin="82,23,0,0"
        Name="StopRecording" VerticalAlignment="Top" Width="59" Click="StopRecording_Click" IsEnabled="False" />
    <Button Content="Start Play" Height="23" HorizontalAlignment="Right" Margin="0,23,77,0"
        Name="StartPlaying" VerticalAlignment="Top" Width="64" Click="StartPlaying_Click" />
    <Button Content="Stop" Height="23" HorizontalAlignment="Right" Margin="0,23,12,0"
        Name="StopPlaying" VerticalAlignment="Top" Width="59" Click="StopPlaying_Click" IsEnabled="False" />
    <Slider Name="Quality" Value="{Binding QualityValue, Mode=TwoWay}" Height="23" Width="129"
        HorizontalAlignment="Left" VerticalAlignment="Top"
        Margin="342,23,0,0"
        Minimum="2" Maximum="7" SmallChange="1" DataContext="{Binding}" />
    <TextBox Text="{Binding timeSpan, Mode=OneWay}" Margin="147,23,0,0" TextAlignment="Center"
        HorizontalAlignment="Left" Width="85" IsEnabled="False" Height="23" VerticalAlignment="Top" />
    <TextBlock Text="/" Margin="0,3,104,0" HorizontalAlignment="Right" Width="25" Height="38"
        VerticalAlignment="Top" FontSize="24" TextAlignment="Center"
        FontWeight="Normal" Foreground="#FF8D8787" Grid.Row="1" />
    <TextBox Text="{Binding RecLimit, Mode=TwoWay}" Margin="250,23,0,0"
        TextAlignment="Center" HorizontalAlignment="Left" Width="43" Height="23" VerticalAlignment="Top" />
    <TextBox Text="{Binding QualityValue, Mode=TwoWay}" Margin="477,23,0,0"
        TextAlignment="Center" HorizontalAlignment="Left" Width="47"
        IsEnabled="False" Height="23" VerticalAlignment="Top" />
    <TextBox Text="{Binding timeSpan, Mode=OneWay}" Margin="0,5,125,0" TextAlignment="Center"
        IsEnabled="False" FontSize="24" FontWeight="Bold" FontFamily="Arial" BorderBrush="{x:Null}"
        Height="33" VerticalAlignment="Top" HorizontalAlignment="Right" Width="107"
        Grid.Row="1" Background="Black" Foreground="#FFADABAB" />
    <TextBlock Text="/" Margin="229,25,0,0" HorizontalAlignment="Left" Width="25" Height="21"
        VerticalAlignment="Top" FontSize="13" TextAlignment="Center" FontWeight="Normal" Foreground="#FF8D8787" />
    <TextBox Text="{Binding PlayTime, Mode=OneWay}" Margin="0,5,4,0" TextAlignment="Center"
        IsEnabled="False" FontSize="24" FontWeight="Bold" FontFamily="Arial" BorderBrush="{x:Null}"
        Height="33" VerticalAlignment="Top" Grid.Row="1" HorizontalAlignment="Right"
        Width="107" Foreground="#FFADABAB" Background="#FF101010" />
    <TextBlock Text="Minutes" Margin="295,29,0,0"
        HorizontalAlignment="Left" Width="47" Height="15" VerticalAlignment="Top" />
    <TextBlock Text="Quality" Margin="340,8,0,0" TextAlignment="Center" FontWeight="Bold"
        HorizontalAlignment="Left" Width="50" Height="15" VerticalAlignment="Top" />
    <TextBlock Text="Limit" Margin="253,8,0,0" TextAlignment="Center"
        FontWeight="Bold" HorizontalAlignment="Left" Width="36" Height="15" VerticalAlignment="Top" />
    <TextBlock Text="Recorded Files" Margin="0,19,238,0" TextAlignment="Left"
        FontWeight="Bold" HorizontalAlignment="Right" Width="126"
        Height="15" VerticalAlignment="Top" Grid.Row="1" />
    <ComboBox
        x:Name="comboDeviceType" ItemsSource="{Binding AudioDevices, Mode=OneWay}"
        DisplayMemberPath="FriendlyName" SelectedIndex="0" Height="21" VerticalAlignment="Top"
        HorizontalAlignment="Left" Width="429" Margin="0,13,0,0" Grid.Row="1" />
    <dg:DataGrid x:Name="RecordedFiles" SelectedIndex="0"
        ItemsSource="{Binding FileItems, Mode=OneWay}" Grid.Row="1"
        AutoGenerateColumns="False" Margin="430,40,0,0"
        SelectionChanged="RecordedFiles_SelectionChanged">
        <dg:DataGrid.Columns>
            <dg:DataGridTextColumn Width="209" Header="File Path" Binding="{Binding FilePath}" />
            <dg:DataGridTextColumn Header="File Size" Binding="{Binding FileSize}" />
            <dg:DataGridTextColumn Header="Duration" Binding="{Binding FileDuration}" />
        </dg:DataGrid.Columns>
    </dg:DataGrid>
    <dg:DataGrid x:Name="SourceDetailsFormats" SelectedIndex="0" Grid.Row="1"
        ItemsSource="{Binding ElementName=comboDeviceType,Path=SelectedValue.SupportedFormats}"
        AutoGenerateColumns="False" HorizontalAlignment="Left" Width="429" Margin="0,40,0,0">
        <dg:DataGrid.Columns>
            <dg:DataGridTextColumn Header="Wave Format" Binding="{Binding WaveFormat}" />
            <dg:DataGridTextColumn Header="Channels" Binding="{Binding Channels}" />
            <dg:DataGridTextColumn Header="Bits Per Sample" Binding="{Binding BitsPerSample}" />
            <dg:DataGridTextColumn Header="Samples Per Second" Binding="{Binding SamplesPerSecond}" />
        </dg:DataGrid.Columns>
    </dg:DataGrid>
</Grid>

With the UI created, we need to write the code-behind that the UI controls respond to. But first, let us write the two classes we need: one for capturing audio from a capture device and another for responding to the MediaElement’s requests for audio samples during playback:

- Make sure to download the NSpeex library from http://nspeex.codeplex.com and add a reference to the NSpeex.Silverlight DLL
- Create a custom class derived from AudioSink (I named it MemoryAudioSink.cs)
- Create a custom class derived from MediaStreamSource (I named it CustomSourcePlayer.cs)

MemoryAudioSink.cs:

using System;
using System.Windows.Media;
using System.IO;
using NSpeex;

namespace myAudio_23._12._212.Audio
{
    public class MemoryAudioSink : AudioSink
    {
        // Field declarations and the timeSpan, SetWriter, and Quality members
        // used by MainPage are omitted from this listing.

        protected override void OnCaptureStarted()
        {
            encoder.Quality = _quality;
            // Reserve 4 bytes at the start of the file for the duration in seconds;
            // OnCaptureStopped() overwrites them with the final value.
            writer.Write(BitConverter.GetBytes(timeSpan));
            recTime = DateTime.Now;
            _timeSpan = 0;
        }

        protected override void OnCaptureStopped()
        {
            // Go back to the start of the file and store the recorded duration.
            writer.Seek(0, SeekOrigin.Begin);
            writer.Write(BitConverter.GetBytes(timeSpan));
            writer.Close();
        }

        protected override void OnFormatChange(AudioFormat audioFormat)
        {
            if (audioFormat.WaveFormat != WaveFormatType.Pcm)
                throw new InvalidOperationException("MemoryAudioSink supports only PCM audio format.");
        }

        protected override void OnSamples(long sampleTimeInHundredNanoseconds,
            long sampleDurationInHundredNanoseconds, byte[] sampleData)
        {
            numSamples++;
            var encodedAudio = EncodeAudio(sampleData);
            _timeSpan = (Int32)(DateTime.Now.Subtract(recTime).TotalSeconds);

            // EncodeAudio() returns null when the encoder produced no bytes.
            if (encodedAudio == null)
                return;

            try
            {
                // Prefix every encoded sample with its size (2 bytes) so the
                // player knows how many bytes to read back.
                encodedByteSize = (Int16)encodedAudio.Length;
                writer.Write(BitConverter.GetBytes(encodedByteSize));
                writer.Write(encodedAudio);
            }
            catch (Exception)
            {
                // A failed write (for example, the writer was already closed)
                // drops this sample instead of crashing the capture thread.
            }
        }

        public byte[] EncodeAudio(byte[] rawData)
        {
            // 16-bit PCM: two bytes per sample.
            var inDataSize = rawData.Length / 2;
            var inData = new short[inDataSize];
            for (int index = 0; index < inDataSize; index++)
            {
                inData[index] = BitConverter.ToInt16(rawData, index * 2);
            }

            // The encoder works on whole frames, so drop the trailing partial frame.
            inDataSize = inDataSize - inDataSize % encoder.FrameSize;

            var encodedData = new byte[rawData.Length];
            var encodedBytes = encoder.Encode(inData, 0, inDataSize, encodedData, 0, encodedData.Length);

            byte[] encodedAudioData = null;
            if (encodedBytes != 0)
            {
                encodedAudioData = new byte[encodedBytes];
                Array.Copy(encodedData, 0, encodedAudioData, 0, encodedBytes);
            }
            return encodedAudioData;
        }
    }
}
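The conversion step inside EncodeAudio() can be tried on its own, without NSpeex or a capture device. The sketch below uses a hypothetical helper, PcmFrames.ToWholeFrames (not part of the attached code), that turns 16-bit little-endian PCM bytes into samples and drops the trailing samples that do not fill a whole frame. The frame size is passed in by the caller; 320 samples is the Speex wideband frame size, but which band mode the encoder runs in is an assumption here, so check encoder.FrameSize in your own setup.

```csharp
using System;

public static class PcmFrames
{
    // Converts 16-bit little-endian PCM bytes to samples and keeps only
    // as many samples as fill whole frames of frameSize samples.
    public static short[] ToWholeFrames(byte[] rawData, int frameSize)
    {
        if (rawData == null) throw new ArgumentNullException("rawData");
        if (frameSize <= 0) throw new ArgumentOutOfRangeException("frameSize");

        int sampleCount = rawData.Length / 2;               // two bytes per sample
        int usable = sampleCount - sampleCount % frameSize; // drop the partial frame

        var samples = new short[usable];
        for (int i = 0; i < usable; i++)
        {
            // Low byte first, then high byte.
            samples[i] = unchecked((short)(rawData[2 * i] | (rawData[2 * i + 1] << 8)));
        }
        return samples;
    }
}
```

For example, a 700-byte buffer holds 350 samples; with a frame size of 320, ToWholeFrames returns 320 samples and the remaining 30 are discarded, which is the same trimming EncodeAudio() performs before calling the encoder.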

CustomSourcePlayer.cs:

using System;
using System.Windows.Media;
using System.Collections.Generic;
using System.IO;
using System.Globalization;
using NSpeex;

namespace myAudio_23._12._212
{
    public class CustomSourcePlayer : MediaStreamSource
    {
        // Field declarations, the SetReader and timeSpan members used by MainPage,
        // and the remaining MediaStreamSource overrides are omitted from this listing.

        protected override void OpenMediaAsync()
        {
            playTime = DateTime.Now;
            _timeSpan = "00:00:00";

            Dictionary<MediaSourceAttributesKeys, string> sourceAttributes =
                new Dictionary<MediaSourceAttributesKeys, string>();
            List<MediaStreamDescription> availableStreams = new List<MediaStreamDescription>();

            _audioDesc = PrepareAudio();
            availableStreams.Add(_audioDesc);

            sourceAttributes[MediaSourceAttributesKeys.Duration] =
                TimeSpan.FromSeconds(0).Ticks.ToString(CultureInfo.InvariantCulture);
            sourceAttributes[MediaSourceAttributesKeys.CanSeek] = false.ToString();

            ReportOpenMediaCompleted(sourceAttributes, availableStreams);
        }

        private MediaStreamDescription PrepareAudio()
        {
            // Describe the decoded stream as plain PCM.
            int byteRate = _audioSampleRate * _audioChannels * (_audioBitsPerSample / 8);

            _waveFormat = new WaveFormatEx();
            _waveFormat.BitsPerSample = _audioBitsPerSample;
            _waveFormat.AvgBytesPerSec = byteRate;
            _waveFormat.Channels = _audioChannels;
            _waveFormat.BlockAlign = (short)(_audioChannels * (_audioBitsPerSample / 8));
            _waveFormat.ext = null;
            _waveFormat.FormatTag = WaveFormatEx.FormatPCM;
            _waveFormat.SamplesPerSec = _audioSampleRate;
            _waveFormat.Size = 0;

            Dictionary<MediaStreamAttributeKeys, string> streamAttributes =
                new Dictionary<MediaStreamAttributeKeys, string>();
            streamAttributes[MediaStreamAttributeKeys.CodecPrivateData] = _waveFormat.ToHexString();

            return new MediaStreamDescription(MediaStreamType.Audio, streamAttributes);
        }

        protected override void CloseMedia()
        {
            cleanup();
        }

        public void cleanup()
        {
            reader.Close();
        }

        protected override void GetSampleAsync(
            MediaStreamType mediaStreamType)
        {
            GetAudioSample();
        }

        private void GetAudioSample()
        {
            MediaStreamSample msSamp = null;

            if (reader.BaseStream.Position < reader.BaseStream.Length)
            {
                // Each encoded sample is prefixed with its size in bytes (2 bytes).
                encodedSamples = BitConverter.ToInt16(reader.ReadBytes(2), 0);
                byte[] decodedAudio = Decode(reader.ReadBytes(encodedSamples));

                // Decode() returns null on failure; treat that as the end of the stream.
                if (decodedAudio != null)
                {
                    memStream = new MemoryStream(decodedAudio);
                    msSamp = new MediaStreamSample(_audioDesc, memStream, _audioStreamOffset, memStream.Length,
                        _currentAudioTimeStamp, _emptySampleDict);
                    _currentAudioTimeStamp += _waveFormat.AudioDurationFromBufferSize((uint)memStream.Length);
                }
            }

            if (msSamp == null)
            {
                // A sample with a null stream tells the MediaElement that playback has ended.
                msSamp = new MediaStreamSample(_audioDesc, null, 0, 0, 0, _emptySampleDict);
            }

            ReportGetSampleCompleted(msSamp);
            _timeSpan = TimeSpan.FromSeconds((Int32)(DateTime.Now.Subtract(playTime).TotalSeconds)).ToString();
        }

        public byte[] Decode(byte[] encodedData)
        {
            try
            {
                // Output buffer with room for 44100 decoded samples.
                short[] decodedFrame = new short[44100];

                // The return value is used as the number of decoded samples (shorts).
                int decodedSamples = decoder.Decode(encodedData, 0, encodedData.Length, decodedFrame, 0, false);

                // Convert the 16-bit samples back to raw PCM bytes.
                byte[] decodedAudioData = new byte[decodedSamples * 2];
                for (int shortIndex = 0, byteIndex = 0; shortIndex < decodedSamples; shortIndex++, byteIndex += 2)
                {
                    byte[] temp = BitConverter.GetBytes(decodedFrame[shortIndex]);
                    decodedAudioData[byteIndex] = temp[0];
                    decodedAudioData[byteIndex + 1] = temp[1];
                }
                return decodedAudioData;
            }
            catch (Exception)
            {
                return null;
            }
        }
    }
}
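PrepareAudio() relies on a WaveFormatEx helper class whose source is not shown above. As a rough illustration of what its ToHexString() presumably produces, the sketch below builds the 18-byte WAVEFORMATEX structure for PCM audio as a little-endian hex string, which is the form CodecPrivateData takes for audio streams. WaveFormatHex and ForPcm are hypothetical names introduced for this example, and the 16 kHz mono 16-bit values in the usage note are assumptions rather than the settings of the attached code.

```csharp
using System;
using System.Text;

public static class WaveFormatHex
{
    // Builds the WAVEFORMATEX fields for PCM audio as a hex string,
    // each field written least significant byte first.
    public static string ForPcm(int sampleRate, short channels, short bitsPerSample)
    {
        short blockAlign = (short)(channels * (bitsPerSample / 8));
        int avgBytesPerSec = sampleRate * blockAlign;

        var sb = new StringBuilder(36);
        AppendLittleEndian(sb, 1, 2);              // wFormatTag: 1 = PCM
        AppendLittleEndian(sb, channels, 2);       // nChannels
        AppendLittleEndian(sb, sampleRate, 4);     // nSamplesPerSec
        AppendLittleEndian(sb, avgBytesPerSec, 4); // nAvgBytesPerSec
        AppendLittleEndian(sb, blockAlign, 2);     // nBlockAlign
        AppendLittleEndian(sb, bitsPerSample, 2);  // wBitsPerSample
        AppendLittleEndian(sb, 0, 2);              // cbSize: no extra data for PCM
        return sb.ToString();
    }

    private static void AppendLittleEndian(StringBuilder sb, int value, int byteCount)
    {
        for (int i = 0; i < byteCount; i++)
        {
            sb.Append(((value >> (8 * i)) & 0xFF).ToString("X2"));
        }
    }
}
```

Calling WaveFormatHex.ForPcm(16000, 1, 16) yields 01000100803E0000007D0000020010000000. The byte rate and block alignment are derived the same way as in PrepareAudio(): sample rate times channels times bytes per sample.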

Once our custom classes have been created, we need to hook them up to the UI in the code-behind:

using System;
using System.Windows;
using System.Windows.Controls;
using System.Windows.Media;
using System.IO;
using System.Collections;
using System.ComponentModel;
using System.Windows.Threading;
using System.Collections.ObjectModel;
using myAudio_23._12._212.Audio;
using System.Runtime.Serialization;

namespace myAudio_23._12._212
{
    public partial class MainPage : UserControl, INotifyPropertyChanged
    {
        // Field declarations, the bound properties (timeSpan, PlayTime, RecLimit, QualityValue,
        // AudioDevices, FileItems), and the PropertyChanged event are omitted from this listing.

        public MainPage()
        {
            InitializeComponent();

            this.Loaded += (s, e) =>
            {
                this.DataContext = this;
                this.timer = new DispatcherTimer()
                {
                    Interval = TimeSpan.FromMilliseconds(200)
                };
                this.timer.Tick += new EventHandler(timer_Tick);

                // recordedAudio.s2x holds the serialized list of recordings,
                // prefixed with its size in bytes (4 bytes).
                path = System.IO.Path.Combine(Environment.GetFolderPath(
                    Environment.SpecialFolder.MyDocuments), "recordedAudio.s2x");
                if (reader != null)
                    reader.Close();
                if (File.Exists(path))
                {
                    reader = new BinaryReader(File.Open(path, FileMode.Open));
                    Int32 fSize = BitConverter.ToInt32(reader.ReadBytes(4), 0);
                    byte[] b = reader.ReadBytes(fSize);
                    FileList fL = (FileList)WriteFromByte(b);
                    FileItems = fL._files;
                    reader.Close();
                }
                RecordedFiles.SelectedIndex = 0;
            };
        }

        void timer_Tick(object sender, EventArgs e)
        {
            if (this._sink != null)
            {
                // Recording: update the elapsed time and stop once the limit (in minutes) is reached.
                if (this._sink.timeSpan <= _recLimit * 60)
                {
                    timeSpan = TimeSpan.FromSeconds(this._sink.timeSpan).ToString();
                    PlayTime = timeSpan;
                }
                else
                    StopRec();
            }
            else if (this._mediaSource != null)
            {
                // Playing: show the elapsed playback time.
                timeSpan = this._mediaSource.timeSpan;
            }
        }

        private bool EnsureAudioAccess()
        {
            return CaptureDeviceConfiguration.AllowedDeviceAccess ||
                CaptureDeviceConfiguration.RequestDeviceAccess();
        }

        private void StartRecording_Click(object sender, RoutedEventArgs e)
        {
            if (!EnsureAudioAccess())
                return;

            // Name the recording after the current time stamp.
            long fileName = DateTime.Now.Ticks;
            path = System.IO.Path.Combine(Environment.GetFolderPath(
                Environment.SpecialFolder.MyDocuments), fileName.ToString() + ".spx");
            if (File.Exists(path))
            {
                File.Delete(path);
            }
            writer = new BinaryWriter(File.Open(path, FileMode.OpenOrCreate));

            var audioDevice = CaptureDeviceConfiguration.GetDefaultAudioCaptureDevice();
            _captureSource = new CaptureSource() { AudioCaptureDevice = audioDevice };

            _sink = new MemoryAudioSink();
            _sink.CaptureSource = _captureSource;
            _sink.SetWriter = writer;
            _sink.Quality = (Int16)Quality.Value;

            _captureSource.Start();
            _timeSpan = "00:00:00";
            this.timer.Start();
            StartRec();
        }

        private void StartRec()
        {
            StartRecording.IsEnabled = false;
            StopRecording.IsEnabled = true;
            StartPlaying.IsEnabled = false;
        }

        private void StopRecording_Click(object sender, RoutedEventArgs e)
        {
            StopRec();
        }

        private void StopRec()
        {
            // Add the new recording to the list shown in the grid.
            FileItem fI = new FileItem();
            fI.FilePath = path;
            fI.FileSize = writer.BaseStream.Length;
            fI.FileDuration = timeSpan;
            FileItems.Add(fI);

            // Rewrite the index file: 4-byte size followed by the serialized list.
            FileList fL = new FileList(FileItems);
            byte[] b = WriteFromObject(fL);
            path = System.IO.Path.Combine(Environment.GetFolderPath(
                Environment.SpecialFolder.MyDocuments), "recordedAudio.s2x");
            if (File.Exists(path))
            {
                File.Delete(path);
            }
            BinaryWriter fileListwriter = new BinaryWriter(File.Open(path, FileMode.OpenOrCreate));
            Int32 fSize = b.Length;
            fileListwriter.Write(fSize);
            fileListwriter.Write(b);

            _captureSource.Stop();
            this.timer.Stop();
            writer.Close();
            fileListwriter.Close();
            _sink = null;

            StartRecording.IsEnabled = true;
            StopRecording.IsEnabled = false;
            StartPlaying.IsEnabled = true;
        }

        private void StartPlaying_Click(object sender, RoutedEventArgs e)
        {
            if (reader != null)
                reader.Close();

            if (!string.IsNullOrEmpty(fileToPlay))
            {
                reader = new BinaryReader(File.Open(fileToPlay, FileMode.Open));
                _mediaSource = new CustomSourcePlayer();

                // The first 4 bytes of the file hold the recorded duration in seconds.
                PlayTime = TimeSpan.FromSeconds(
                    BitConverter.ToInt32(reader.ReadBytes(4), 0) - 1).ToString();

                _mediaSource.SetReader = reader;
                MediaPlayer.SetSource(_mediaSource);

                StartPlaying.IsEnabled = false;
                StopPlaying.IsEnabled = true;
                StartRecording.IsEnabled = false;
            }
        }

        private void StopPlaying_Click(object sender, RoutedEventArgs e)
        {
            MediaPlayer.Stop();
            StopPlay();
        }

        private void MediaPlayer_MediaOpened(object sender, RoutedEventArgs e)
        {
            timer.Start();
        }

        private void MediaPlayer_MediaEnded(object sender, RoutedEventArgs e)
        {
            StopPlay();
        }

        private void StopPlay()
        {
            timer.Stop();
            if (_mediaSource != null)
            {
                _mediaSource.cleanup();
                _mediaSource = null;
            }

            StartPlaying.IsEnabled = true;
            StopPlaying.IsEnabled = false;
            StartRecording.IsEnabled = true;
        }

        // Serializes the file list to a byte array.
        public static byte[] WriteFromObject(FileList files)
        {
            using (MemoryStream ms = new MemoryStream())
            {
                DataContractSerializer ser = new DataContractSerializer(typeof(FileList));
                ser.WriteObject(ms, files);
                return ms.ToArray();
            }
        }

        // Deserializes a file list from a byte array.
        public static object WriteFromByte(byte[] array)
        {
            using (MemoryStream memoryStream = new MemoryStream(array))
            {
                DataContractSerializer ser = new DataContractSerializer(typeof(FileList));
                return ser.ReadObject(memoryStream);
            }
        }

        private void RecordedFiles_SelectionChanged(object sender, SelectionChangedEventArgs e)
        {
            // AddedItems is empty when the selection is cleared.
            IList list = e.AddedItems;
            if (list.Count > 0)
            {
                fileToPlay = ((FileItem)list[0]).FilePath;
            }
        }
    }
}
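The FileItem and FileList types used by the code-behind are not shown in the listing. As a minimal sketch of the same idea, the example below serializes a list of recordings with DataContractSerializer and stores it with a 4-byte size prefix, mirroring the layout written to recordedAudio.s2x. The FileItem class here is a hypothetical stand-in with the three properties the DataGrid binds to, FileIndex is an invented helper name, and a plain List<FileItem> replaces the article's FileList wrapper.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;

// Hypothetical stand-in for the FileItem class of the attached code.
[DataContract]
public class FileItem
{
    [DataMember] public string FilePath { get; set; }
    [DataMember] public long FileSize { get; set; }
    [DataMember] public string FileDuration { get; set; }
}

public static class FileIndex
{
    // Writes [4-byte size][serialized list] to the stream.
    public static void Save(Stream output, List<FileItem> items)
    {
        var ser = new DataContractSerializer(typeof(List<FileItem>));
        using (var ms = new MemoryStream())
        {
            ser.WriteObject(ms, items);
            byte[] payload = ms.ToArray();

            // The writer is not disposed so that the caller's stream stays open.
            var writer = new BinaryWriter(output);
            writer.Write(payload.Length);
            writer.Write(payload);
            writer.Flush();
        }
    }

    // Reads the size prefix, then deserializes exactly that many bytes.
    public static List<FileItem> Load(Stream input)
    {
        var reader = new BinaryReader(input);
        int size = reader.ReadInt32();
        byte[] payload = reader.ReadBytes(size);

        var ser = new DataContractSerializer(typeof(List<FileItem>));
        using (var ms = new MemoryStream(payload))
        {
            return (List<FileItem>)ser.ReadObject(ms);
        }
    }
}
```

Saving a list to a MemoryStream, rewinding it with Position = 0, and calling Load should return items with the same FilePath, FileSize, and FileDuration values. The size prefix is what lets the reader know how many bytes belong to the serialized list.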

Points of Interest

You may wish to enable logging in the attached code to get a feel for the size of the encoded bytes the NSpeex encoder returns for each sample. In my case the very first sample differed in size from the rest of the encoded samples, and sometimes the very last sample turned out to be different as well.

To be sure of decoding the correct number of bytes for every sample, I decided to insert 2 bytes before each sample containing the size of that particular encoded sample:

encodedByteSize = (Int16)encodedAudio.Length;
writer.Write(BitConverter.GetBytes(encodedByteSize));
writer.Write(encodedAudio);
logWriter.WriteLine(numSamples.ToString() + "\t" + encodedAudio.Length);
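The resulting file layout (a 4-byte duration, then size-prefixed samples) can be exercised without any audio hardware. The sketch below is a simplified stand-in, not the code of the attached project: FramedAudioFile is a hypothetical helper that writes a placeholder duration, appends each frame with its 2-byte size, and then seeks back to patch the duration, as OnCaptureStarted() and OnCaptureStopped() do. It assumes a seekable stream positioned at its start.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class FramedAudioFile
{
    // Layout: [4-byte duration in seconds] then, per frame, [2-byte size][frame bytes].
    public static void Write(Stream output, IEnumerable<byte[]> frames, int durationSeconds)
    {
        var writer = new BinaryWriter(output);
        writer.Write(0); // placeholder, patched once all frames are written

        foreach (byte[] frame in frames)
        {
            if (frame.Length > short.MaxValue)
                throw new ArgumentException("A frame must fit in a 2-byte size prefix.");
            writer.Write((short)frame.Length);
            writer.Write(frame);
        }

        // Assumes the stream started at position 0.
        writer.Seek(0, SeekOrigin.Begin);
        writer.Write(durationSeconds);
        writer.Flush();
    }

    public static List<byte[]> Read(Stream input, out int durationSeconds)
    {
        var reader = new BinaryReader(input);
        durationSeconds = reader.ReadInt32();

        var frames = new List<byte[]>();
        while (input.Position < input.Length)
        {
            short size = reader.ReadInt16();
            frames.Add(reader.ReadBytes(size));
        }
        return frames;
    }
}
```

Because every frame carries its own size, the reader never has to guess where one encoded sample ends and the next begins, even when the first or last sample differs in size. One limitation of a 2-byte signed prefix is that a single encoded sample cannot exceed 32767 bytes.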

If anyone can figure out how to save the file so that it plays in any player with a Speex codec, that would be great to share.

I hope you find this application useful.

History

No changes yet.