
I was having a conversation with a coworker about using a third-party library vs. rolling your own, specifically for parsing comma-separated value (CSV) data or, more generally, any text data separated by a known delimiter.

This subject has been pretty much beaten to death, and there are many libraries in every language under the sun for parsing CSV files. My coworker brought this particular article to my attention: CSV Parsing in .NET Core, which is a useful example of some edge cases (which I ignore here) -- certainly not all of them. The article references a couple of projects, CsvHelper and TinyCsvParser, and one can easily find several CSV parsers on Code Project:

That last link is quite comprehensive in the parsers that Tomas Takac evaluated and has a great graph of the finite state machine used to parse CSV data. Even more interesting (to me at least), he references RFC 4180, which documents the Common Format and MIME Type for Comma-Separated Values (CSV) Files. Pretty cool.

That said, having looked at a few myself and having some very basic requirements (really no edge cases), the question again came up as to whether to roll my own or use a library. Some of the discussion points were:

- What if there's a bug in the roll-your-own code?

- What if it needs to be extended?

- What if the file specification changes?

My answers here are biased of course, but they still might be of some value:

What if there's a bug in the roll-your-own code?

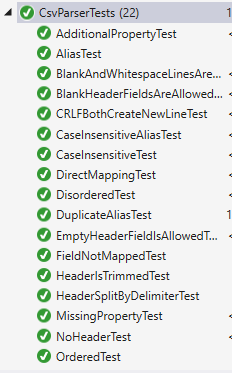

Well, given that the actual parser is 80 lines long (not including extension methods and helper functions), how many places are there for bugs to hide? And there are unit tests, which are actually 3x more lines of code! My first reaction to rolling my own is always a question of complexity: how complex is the problem to be solved, and how complex are the third-party libraries out there that solve that problem? The fewer lines of code, the fewer bugs and the fewer unit tests one has to write. There is no guarantee that a third-party library is bug-free or has decent unit tests. Granted, this is all rather moot for non-edge cases anyway. Still, looking at the code for some of these libraries, in which the implementation runs to thousands of lines of code, I really can't justify adding that to my code base. And npm install, nuget install, and so forth are so easy that they preclude any thinking about what you're about to do. This is a dangerous situation, especially when packages have hidden dependencies and you're suddenly installing tens or hundreds of additional, breakable dependencies.

What if it needs to be extended?

Extensibility is easily implemented with virtual methods, assuming the implementation breaks the process apart into small steps. There are of course other mechanisms. I find this question, however, to be a specious argument, as it poses a future "what-if" scenario that is unrealistic given the scope of the requirements. Certainly if the requirements say that all sorts of edge cases have to be handled, then yes, let's go for a third-party library. But I disagree with the argument that edge cases will suddenly crop up in data files that are produced by other programs, and in such a way as to break the parser.
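As a rough illustration of the virtual-method point, here is a minimal sketch, assuming a parser whose steps are small overridable methods. `MiniParser` and `CommentSkippingParser` are hypothetical names made up for this example; they are not the parser presented later in the article.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a parser that is broken into small, overridable steps.
public class MiniParser
{
    public List<string[]> Parse(string data, string delimiter)
    {
        return GetLines(data).Select(l => SplitLine(l, delimiter)).ToList();
    }

    // Step 1: break the text into non-blank lines.
    protected virtual IEnumerable<string> GetLines(string data)
    {
        return data.Split(new[] { '\r', '\n' })
                   .Where(l => !string.IsNullOrWhiteSpace(l));
    }

    // Step 2: break a line into fields.
    protected virtual string[] SplitLine(string line, string delimiter)
    {
        return line.Split(new[] { delimiter }, StringSplitOptions.None);
    }
}

// A new requirement (here: ignore lines starting with '#') touches only one step.
public class CommentSkippingParser : MiniParser
{
    protected override IEnumerable<string> GetLines(string data)
    {
        return base.GetLines(data)
                   .Where(l => !l.TrimStart().StartsWith("#", StringComparison.Ordinal));
    }
}
```

The derived class changes one step and inherits the rest, which is the kind of extension the small-steps design is meant to allow.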

What if the file specification changes?

This is definitely plausible, but it is not really an issue with the parser. It concerns the class into which the data is parsed and the mapping of fields to properties. So this is not a valid argument in my opinion.

So having just said all that, I put together a proof of concept. It's actually a good example of how far programming languages have evolved, as this code is basically just a set of map, reduce, and filter operations. It took me about 30 minutes to write this code (compared to the F# example, which took over 5 hours, as F# is not something I'm particularly well-skilled at!).

I decided to use a simplified version of the data in that article on CSV Parsing in .NET Core referenced earlier:

string data =

@"Make,Model,Type,Year,Cost,Comment

Toyota,Corolla,Car,1990,2000.99,A Comment";

You'll note here that I'm assuming there's a header.

At a minimum, I wanted the ability to rename properties that are mapped to fields in the CSV:

public enum AutomobileType

{

None,

Car,

Truck,

Motorbike

}

public class Automobile : IPopulatedFromCsv

{

public string Make { get; set; }

public string Model { get; set; }

public AutomobileType Type { get; set; }

public int Year { get; set; }

[ColumnMap(Header = "Cost")]

public decimal Price { get; set; }

public string Comment { get; set; }

}

Note the ColumnMap attribute:

public class ColumnMapAttribute : Attribute

{

public string Header { get; set; }

}

And for giggles, I require that any class that is going to be populated by the CSV parser implements IPopulatedFromCsv, as a useful hint to the user.
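The interface itself is not listed here. A minimal sketch, assuming it is an empty marker interface (that is a guess, not something the listings confirm), shows how a generic constraint turns it into a compile-time hint. `Car` and `ParserSketch.CreateRecord` are hypothetical names used only for illustration.

```csharp
using System;

// Assumption: an empty "marker" interface; the real definition is not shown.
public interface IPopulatedFromCsv { }

// Hypothetical record type that opts in by implementing the marker.
public class Car : IPopulatedFromCsv
{
    public string Make { get; set; }
}

public static class ParserSketch
{
    // The constraint means only types that implement the marker
    // (and have a public parameterless constructor) can be used here.
    public static T CreateRecord<T>() where T : IPopulatedFromCsv, new()
    {
        return new T();
    }
}

// ParserSketch.CreateRecord<Car>();     // compiles
// ParserSketch.CreateRecord<object>();  // compile-time error: object does not implement the marker
```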

Here's the core parser:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Reflection;

using Clifton.Core.Utils;

namespace SimpleCsvParser

{

public class CsvParser

{

public virtual List<T> Parse<T>(string data, string delimiter)

where T : IPopulatedFromCsv, new()

{

List<T> items = new List<T>();

var lines = GetLines(data);

var headerFields = GetFieldsFromHeader(lines.First(), delimiter);

var propertyList = MapHeaderToProperties<T>(headerFields);

lines.Skip(1).ForEach(l => items.Add(Populate<T>(l, delimiter, propertyList)));

return items;

}

protected virtual IEnumerable<string> GetLines(string data)

{

var lines = data.Split(new char[] { '\r', '\n' }).Where

(l => !String.IsNullOrWhiteSpace(l));

return lines;

}

protected virtual T Create<T>() where T : IPopulatedFromCsv, new()

{

return new T();

}

protected virtual string[] GetFieldsFromHeader(string headerLine, string delimiter)

{

var fields = headerLine.Split(delimiter)

.Select(f => f.Trim())

.Where(f =>

!string.IsNullOrWhiteSpace(f) ||

!String.IsNullOrEmpty(headerLine)).ToArray();

return fields;

}

protected virtual List<PropertyInfo> MapHeaderToProperties<T>(string[] headerFields)

{

var map = new List<PropertyInfo>();

Type t = typeof(T);

headerFields

.Select(f =>

(

f,

t.GetProperty(f,

BindingFlags.Instance |

BindingFlags.Public |

BindingFlags.IgnoreCase |

BindingFlags.FlattenHierarchy) ?? AliasedProperty(t, f)

)

)

.ForEach(fp => map.Add(fp.Item2));

return map;

}

protected virtual PropertyInfo AliasedProperty(Type t, string fieldName)

{

var pi = t.GetProperties()

.Where(p => p.GetCustomAttribute<ColumnMapAttribute>()

?.Header

?.CaseInsensitiveEquals(fieldName)

?? false);

Assert.That<CsvParserDuplicateAliasException>(pi.Count() <= 1,

$"{fieldName} is aliased more than once.");

return pi.FirstOrDefault();

}

protected virtual T Populate<T>(

string line,

string delimiter,

List<PropertyInfo> props) where T : IPopulatedFromCsv, new()

{

T t = Create<T>();

var fieldValues = line.Split(delimiter);

props.ForEach(fieldValues,

(p, v) => p != null && !String.IsNullOrWhiteSpace(v),

(p, v) => p.SetValue(t, Converter.Convert(v.Trim(), p.PropertyType)));

return t;

}

}

}

There's copious use of LINQ and of the null-conditional (?.) and null-coalescing (??) operators to map fields to properties, including properties whose names are overridden with the ColumnMap attribute, and to handle cases where no property maps to a specific field.

Also note that all methods, even Create, are virtual so that they can be overridden for custom behaviors.

The AliasedProperty method is not entirely obvious. What it does is:

- Get all the public instance properties of the class we're populating.

- Try and get the custom attribute.

- Using the null-conditional operator (?.), get the aliased header.

- Again using the null-conditional operator, compare the aliased header with the CSV header.

- Using the null-coalescing operator (??), return either the result of the comparison or return false to the where condition.

- Assert that 0 or 1 properties have that alias.

- Finally, FirstOrDefault is used because we now know we have only 0 or 1 items in the resulting enumeration, and we want to return null as a placeholder if, after all this, there is no property that is aliased to the CSV header field value.
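The steps above can also be written out longhand. This is a sketch, not the article's implementation: `AliasLookup.FindAliased` and `AliasSample` are hypothetical names, a plain `InvalidOperationException` stands in for the custom exception, and the attribute is redeclared only so the block stands alone.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

public class ColumnMapAttribute : Attribute
{
    public string Header { get; set; }
}

// Hypothetical sample type: Price is aliased to the "Cost" column.
public class AliasSample
{
    public string Make { get; set; }

    [ColumnMap(Header = "Cost")]
    public decimal Price { get; set; }
}

public static class AliasLookup
{
    public static PropertyInfo FindAliased(Type t, string fieldName)
    {
        var matches = new List<PropertyInfo>();

        foreach (PropertyInfo p in t.GetProperties())               // public properties
        {
            var attr = p.GetCustomAttribute<ColumnMapAttribute>();  // null when not decorated

            if (attr == null || attr.Header == null)
            {
                continue;   // the case that "?." plus "?? false" covers in the LINQ version
            }

            if (string.Equals(attr.Header, fieldName, StringComparison.OrdinalIgnoreCase))
            {
                matches.Add(p);
            }
        }

        if (matches.Count > 1)
        {
            throw new InvalidOperationException($"{fieldName} is aliased more than once.");
        }

        // null is the placeholder for "no property is aliased to this header".
        return matches.Count == 1 ? matches[0] : null;
    }
}
```

Here `AliasLookup.FindAliased(typeof(AliasSample), "cost")` finds the `Price` property, while asking for `"Make"` returns null because `Make` carries no alias; in the parser, that case is handled earlier by the direct property-name lookup.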

The Populate method uses an extension method ForEach that:

- iterates both collections in lock step, and

- executes the action only when the condition is met.

Thus the code:

props.ForEach(fieldValues,

(p, v) => p != null && !String.IsNullOrWhiteSpace(v),

(p, v) => p.SetValue(t, Converter.Convert(v.Trim(), p.PropertyType)));

does the following:

- The two collections are props and fieldValues.

- The property must exist (remember, we use null as a placeholder).

- The value is not null or whitespace.

- When that condition is met, we use reflection to set the property value, using the Converter helper.
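One thing worth noting: the lock-step ForEach indexes the second collection directly, so a data row with fewer values than there are header fields would throw. A possible alternative, sketched here under the assumption that silently ignoring missing trailing fields is acceptable, is LINQ's `Zip`, which stops at the end of the shorter sequence. `LockStep.Apply` is a hypothetical helper name, not part of the article's code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LockStep
{
    // Pairs items by position, stops at the end of the shorter sequence,
    // and runs the action only for pairs that satisfy the predicate.
    public static void Apply<T, U>(
        IEnumerable<T> first,
        IEnumerable<U> second,
        Func<T, U, bool> where,
        Action<T, U> action)
    {
        foreach (var pair in first.Zip(second, (a, b) => new { a, b }))
        {
            if (where(pair.a, pair.b))
            {
                action(pair.a, pair.b);
            }
        }
    }
}

// Example: three header names but a short row with only two values.
// var names = new[] { "A", "B", "C" };
// var values = new[] { "1", " " };
// LockStep.Apply(names, values,
//     (n, v) => !string.IsNullOrWhiteSpace(v),
//     (n, v) => Console.WriteLine($"{n}={v}"));   // prints only "A=1"
```

Whether a short row should be tolerated or reported as an error is a requirements question; the sketch only shows the tolerant option.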

class Program

{

static void Main(string[] args)

{

string data =

@"Make,Model,Type,Year,Cost,Comment

Toyota,Corolla,Car,1990,2000.99,A Comment";

CsvParser parser = new CsvParser();

var recs = parser.Parse<Automobile>(data, ",");

var result = JsonConvert.SerializeObject(recs);

Console.WriteLine(result);

}

}

And the output:

[{"Make":"Toyota","Model":"Corolla","Type":1,"Year":1990,"Price":2000.99,"Comment":"A Comment"}]

The JsonConvert call is my anti-example of using a huge third-party library simply to write out an array of instance values! Because I was lazy! See? I validate my own argument on the dangers of reaching for a third-party library when something much simpler will do!
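For what it's worth, "something much simpler" could look like the following. This is only a sketch: `SimpleDump.Describe` is a hypothetical helper, not part of the article's code, and its output is a plain `Name=Value` list rather than JSON.

```csharp
using System;
using System.Globalization;
using System.Linq;
using System.Reflection;

public static class SimpleDump
{
    // Lists the readable public instance properties of an object as "Name=Value" pairs.
    public static string Describe(object item)
    {
        var pairs = item.GetType()
            .GetProperties(BindingFlags.Instance | BindingFlags.Public)
            .Where(p => p.CanRead && p.GetIndexParameters().Length == 0)
            .Select(p => p.Name + "=" +
                Convert.ToString(p.GetValue(item), CultureInfo.InvariantCulture));

        return string.Join(", ", pairs);
    }
}

// Possible usage with the parsed records:
// foreach (var r in recs) Console.WriteLine(SimpleDump.Describe(r));
```

The invariant culture is used so that a value such as 2000.99 is written the same way regardless of the machine's regional settings.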

So there's a bunch of supporting code. We have extension methods:

public static class ExtensionMethods

{

public static void ForEach<T>(this IEnumerable<T> collection, Action<T> action)

{

foreach (var item in collection)

{

action(item);

}

}

public static void ForEach<T, U>(

this IEnumerable<T> collection1,

IList<U> collection2,

Func<T, U, bool> where,

Action<T, U> action)

{

int n = 0;

foreach (var item in collection1)

{

U v2 = collection2[n++];

if (where(item, v2))

{

action(item, v2);

}

}

}

public static string[] Split(this string str, string splitter)

{

return str.Split(new[] { splitter }, StringSplitOptions.None);

}

public static bool CaseInsensitiveEquals(this string a, string b)

{

return String.Equals(a, b, StringComparison.OrdinalIgnoreCase);

}

}

And we have a simple Assert static class:

public static class Assert

{

public static void That<T>(bool condition, string msg) where T : Exception, new()

{

if (!condition)

{

var ex = Activator.CreateInstance(typeof(T), new object[] { msg }) as T;

throw ex;

}

}

}
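The `CsvParserDuplicateAliasException` type is not listed in the article. Because `Assert.That<T>` combines a `new()` constraint with `Activator.CreateInstance` and a message argument, whatever exception type is passed needs both a parameterless constructor and one taking a `string`. Here is a sketch of what such a class might look like (an assumption on my part), shown with a copy of the assert helper renamed `AssertSketch` so the block stands alone:

```csharp
using System;

// Assumed shape; the article does not show this class.
public class CsvParserDuplicateAliasException : Exception
{
    // Satisfies the new() constraint.
    public CsvParserDuplicateAliasException() { }

    // The constructor that Activator.CreateInstance resolves when given a message.
    public CsvParserDuplicateAliasException(string msg) : base(msg) { }
}

public static class AssertSketch
{
    public static void That<T>(bool condition, string msg) where T : Exception, new()
    {
        if (!condition)
        {
            throw (T)Activator.CreateInstance(typeof(T), new object[] { msg });
        }
    }
}
```

If the `string` constructor were missing, the code would still compile, and the failure would only show up at run time as a `MissingMethodException` from `Activator.CreateInstance`.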

And we have this Converter class I wrote ages ago:

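// Requires "using System;" and "using System.ComponentModel;" (TypeConverter, TypeDescriptor).
// ConverterException is a custom exception type that is not listed here.

public class Converter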

{

public static object Convert(object src, Type destType)

{

object ret = src;

if ((src != null) && (src != DBNull.Value))

{

Type srcType = src.GetType();

if ((srcType.FullName == "System.Object") ||

(destType.FullName == "System.Object"))

{

ret = src;

}

else

{

if (srcType != destType)

{

TypeConverter tcSrc = TypeDescriptor.GetConverter(srcType);

TypeConverter tcDest = TypeDescriptor.GetConverter(destType);

if (tcSrc.CanConvertTo(destType))

{

ret = tcSrc.ConvertTo(src, destType);

}

else if (tcDest.CanConvertFrom(srcType))

{

if (srcType.FullName == "System.String")

{

ret = tcDest.ConvertFromInvariantString((string)src);

}

else

{

ret = tcDest.ConvertFrom(src);

}

}

else

{

if (destType.IsAssignableFrom(srcType))

{

ret = src;

}

else

{

throw new ConverterException("Can't convert from " +

src.GetType().FullName +

" to " +

destType.FullName);

}

}

}

}

}

else if (src == DBNull.Value)

{

if (destType.FullName == "System.String")

{

ret = null;

}

}

return ret;

}

}

Really, the last commit was Nov 26, 2015! It's so old that string interpolation ($"{}") didn't exist yet (or I wasn't aware of it, haha).

So then I thought, well, let's write some unit tests, since this code is actually unit-testable:

public class NoAliases : IPopulatedFromCsv

{

public string A { get; set; }

public string B { get; set; }

public string C { get; set; }

}

public class WithAlias : IPopulatedFromCsv

{

public string A { get; set; }

public string B { get; set; }

[ColumnMap(Header = "C")]

public string TheCField { get; set; }

}

public class WithDuplicateAlias : IPopulatedFromCsv

{

public string A { get; set; }

public string B { get; set; }

[ColumnMap(Header = "C")]

public string TheCField { get; set; }

[ColumnMap(Header = "C")]

public string Oops { get; set; }

}

public class NoMatchingField : IPopulatedFromCsv

{

public string A { get; set; }

public string B { get; set; }

public string C { get; set; }

public string D { get; set; }

}

public class NoMatchingProperty : IPopulatedFromCsv

{

public string A { get; set; }

public string C { get; set; }

}

public class Disordered : IPopulatedFromCsv

{

public string C { get; set; }

public string B { get; set; }

public string A { get; set; }

}

[TestClass]

public class CsvParserTests : CsvParser

{

[TestMethod]

public void BlankAndWhitespaceLinesAreSkippedTest()

{

GetLines("").Count().Should().Be(0);

GetLines(" ").Count().Should().Be(0);

GetLines("\r").Count().Should().Be(0);

GetLines("\n").Count().Should().Be(0);

GetLines("\r\n").Count().Should().Be(0);

}

[TestMethod]

public void CRLFBothCreateNewLineTest()

{

GetLines("a\rb").Count().Should().Be(2);

GetLines("a\nb").Count().Should().Be(2);

GetLines("a\r\nb").Count().Should().Be(2);

}

[TestMethod]

public void HeaderSplitByDelimiterTest()

{

GetFieldsFromHeader("a,b,c", ",").Count().Should().Be(3);

}

[TestMethod]

public void SingleHeaderTest()

{

GetFieldsFromHeader("a", ",").Count().Should().Be(1);

}

[TestMethod]

public void NoHeaderTest()

{

GetFieldsFromHeader("", ",").Count().Should().Be(0);

}

[TestMethod]

public void EmptyHeaderFieldIsAllowedTest()

{

GetFieldsFromHeader("a,,c", ",").Count().Should().Be(3);

}

[TestMethod]

public void BlankHeaderFieldsAreAllowedTest()

{

GetFieldsFromHeader(",,", ",").Count().Should().Be(3);

}

[TestMethod]

public void HeaderIsTrimmedTest()

{

GetFieldsFromHeader("a ", ",")[0].Should().Be("a");

GetFieldsFromHeader(" a", ",")[0].Should().Be("a");

}

[TestMethod]

public void DirectMappingTest()

{

MapHeaderToProperties<NoAliases>

(new string[] { "A", "B", "C" }).Count().Should().Be(3);

}

[TestMethod]

public void AliasTest()

{

MapHeaderToProperties<WithAlias>

(new string[] { "A", "B", "C" }).Count().Should().Be(3);

}

[TestMethod]

public void CaseInsensitiveTest()

{

MapHeaderToProperties<NoAliases>

(new string[] { "a", "b", "c" }).Count().Should().Be(3);

}

[TestMethod]

public void AdditionalPropertyTest()

{

MapHeaderToProperties<NoMatchingField>

(new string[] { "A", "B", "C" }).Count().Should().Be(3);

}

[TestMethod]

public void MissingPropertyTest()

{

MapHeaderToProperties<NoMatchingProperty>

(new string[] { "A", "B", "C" }).Count().Should().Be(3);

}

[TestMethod]

public void FieldNotMappedTest()

{

var props = MapHeaderToProperties<NoMatchingProperty>(new string[] { "A", "B", "C" });

props.Count().Should().Be(3);

props[0].Should().NotBeNull();

props[1].Should().BeNull();

props[2].Should().NotBeNull();

}

[TestMethod]

public void CaseInsensitiveAliasTest()

{

AliasedProperty(typeof(WithAlias), "c").Should().NotBeNull();

}

[TestMethod]

public void PropertyNotFoundTest()

{

AliasedProperty(typeof(NoAliases), "D").Should().BeNull();

}

[TestMethod]

public void DuplicateAliasTest()

{

this.Invoking((_) => AliasedProperty(typeof(WithDuplicateAlias), "C")).Should()

.Throw<CsvParserDuplicateAliasException>()

.WithMessage("C is aliased more than once.");

}

[TestMethod]

public void PopulateFieldsTest()

{

var props = MapHeaderToProperties<NoAliases>(new string[] { "a", "b", "c" });

var t = Populate<NoAliases>("1,2,3", ",", props);

t.A.Should().Be("1");

t.B.Should().Be("2");

t.C.Should().Be("3");

}

[TestMethod]

public void PopulatedFieldsAreTrimmedTest()

{

var props = MapHeaderToProperties<NoAliases>(new string[] { "a", "b", "c" });

var t = Populate<NoAliases>("1 , 2, 3 ", ",", props);

t.A.Should().Be("1");

t.B.Should().Be("2");

t.C.Should().Be("3");

}

[TestMethod]

public void PopulateFieldNotMappedTest()

{

var props = MapHeaderToProperties<NoMatchingProperty>(new string[] { "a", "b", "c" });

var t = Populate<NoMatchingProperty>("1,2,3", ",", props);

t.A.Should().Be("1");

t.C.Should().Be("3");

}

[TestMethod]

public void OrderedTest()

{

var map = MapHeaderToProperties<NoAliases>(new string[] { "A", "B", "C" });

map[0].Name.Should().Be("A");

map[1].Name.Should().Be("B");

map[2].Name.Should().Be("C");

}

[TestMethod]

public void DisorderedTest()

{

var map = MapHeaderToProperties<Disordered>(new string[] { "A", "B", "C" });

map[0].Name.Should().Be("A");

map[1].Name.Should().Be("B");

map[2].Name.Should().Be("C");

}

}

Woohoo!

And then I decided to flog myself by rewriting this in F# and learning some things along the way. I wanted to try this because I really like the forward pipe |> operator in F#, as it lets you chain functions together using the output of one function as the input of another function. I also "made the mistake" of not implementing a CsvParser class -- this led to discovering that generics apply only to members, not functions, but I discovered a really cool workaround. So here's my crazy F# implementation:

open System
open System.Reflection
open System.ComponentModel

type AutomobileType =
    | None = 0
    | Car = 1
    | Truck = 2
    | Motorbike = 3

[<AllowNullLiteral>]
type ColumnMapAttribute(headerName) =
    inherit System.Attribute()
    let mutable header : string = headerName
    member x.Header with get() = header

type Automobile() =
    let mutable make : string = null
    let mutable model : string = null
    let mutable year : int = 0
    let mutable price : decimal = 0M
    let mutable comment : string = null
    let mutable atype : AutomobileType = AutomobileType.None
    member x.Make with get() = make and set(v) = make <- v
    member x.Model with get() = model and set(v) = model <- v
    member x.Type with get() = atype and set(v) = atype <- v
    member x.Year with get() = year and set(v) = year <- v
    member x.Comment with get() = comment and set(v) = comment <- v
    [<ColumnMap("Cost")>]
    member x.Price with get() = price and set(v) = price <- v

module String =
    let notNullOrWhiteSpace = not << System.String.IsNullOrWhiteSpace

type TypeParameter<'a> = TP

[<EntryPoint>]
let main _ =
    let mapHeaderToProperties(_ : TypeParameter<'a>) fields =
        let otype = typeof<'a>
        fields
        |> Array.map (fun f ->
            match otype.GetProperty(f,
                                    BindingFlags.Instance |||
                                    BindingFlags.Public |||
                                    BindingFlags.IgnoreCase |||
                                    BindingFlags.FlattenHierarchy) with
            | null ->
                // No property with that name: look for one aliased with ColumnMap.
                let aliased =
                    otype.GetProperties()
                    |> Array.filter (fun p ->
                        match p.GetCustomAttribute<ColumnMapAttribute>() with
                        | null -> false
                        | a -> a.Header = f)
                    |> Array.toList
                match aliased with
                | head::_ -> head
                | [] -> null
            | a -> a)

    // The parameters are annotated so that v.GetType() resolves.
    let convert(v : string, destType : Type) =
        let srcType = v.GetType()
        let tcSrc = TypeDescriptor.GetConverter(srcType)
        let tcDest = TypeDescriptor.GetConverter(destType)
        if tcSrc.CanConvertTo(destType) then
            tcSrc.ConvertTo(v, destType)
        else
            if tcDest.CanConvertFrom(srcType) then
                if srcType.FullName = "System.String" then
                    tcDest.ConvertFromInvariantString(v)
                else
                    tcDest.ConvertFrom(v)
            else
                if destType.IsAssignableFrom(srcType) then
                    v :> obj
                else
                    null

    let populate(_ : TypeParameter<'a>) (line:string) (delimiter:char) (props: PropertyInfo[]) =
        let t = Activator.CreateInstance<'a>()
        let vals = line.Split(delimiter)
        for i in 0..vals.Length-1 do
            match (props.[i], vals.[i]) with
            | (p, _) when p = null -> ()
            | (_, v) when System.String.IsNullOrWhiteSpace v -> ()
            | (p, v) -> p.SetValue(t, convert(v.Trim(), p.PropertyType))
        t

    let data = "Make,Model,Type,Year,Cost,Comment\r\nToyota,Corolla,Car,1990,2000.99,A Comment"
    let delimiter = ','
    let lines = data.Split [|'\r'; '\n'|] |> Array.filter (fun l -> String.notNullOrWhiteSpace l)
    let headerLine = lines.[0]
    let fields =
        headerLine.Split(delimiter)
        |> Array.map (fun l -> l.Trim())
        |> Array.filter (fun l -> String.notNullOrWhiteSpace l || String.notNullOrWhiteSpace headerLine)
    let props = mapHeaderToProperties(TP:TypeParameter<Automobile>) fields
    let recs =
        lines
        |> Seq.skip 1
        |> Seq.map (fun l -> populate(TP:TypeParameter<Automobile>) l delimiter props)
        |> Seq.toList
    printf "fields: %A\r\n" fields
    printfn "lines: %A\r\n" lines
    printfn "props: %A\r\n" props
    recs |> Seq.iter (fun r -> printfn "%A %A %A %A %A %A\r\n" r.Make r.Model r.Year r.Type r.Price r.Comment)
    0

And the output is:

fields: [|"Make"; "Model"; "Type"; "Year"; "Cost"; "Comment"|]

lines: [|"Make,Model,Type,Year,Cost,Comment";

"Toyota,Corolla,Car,1990,2000.99,A Comment"|]

props: [|System.String Make; System.String Model; AutomobileType Type; Int32 Year;

System.Decimal Price; System.String Comment|]

"Toyota" "Corolla" 1990 Car 2000.99M "A Comment"

It was a struggle not to use FirstOrDefault:

fields
|> Array.map (fun f ->
    match otype.GetProperty(f,
                            BindingFlags.Instance |||
                            BindingFlags.Public |||
                            BindingFlags.IgnoreCase |||
                            BindingFlags.FlattenHierarchy) with
    | null ->
        otype.GetProperties()
        |> Array.filter (fun p ->
            match p.GetCustomAttribute<ColumnMapAttribute>() with
            | null -> false
            | a -> a.Header = f)
        |> Enumerable.FirstOrDefault
    | a -> a)

But I persevered! A few things I learned:

- match is touchy; I spent a while figuring out why an array [] wouldn't work. My F# ignorance rears its ugly head.

- F# doesn't like nullable types, and C# is full of them, so I learned about the [<AllowNullLiteral>] attribute and its use!

- This type TypeParameter<'a> = TP was one gnarly piece of code to spoof functions into being "generic!" I don't take the credit for that -- see the code comments.

So I decided to rework the implementation into a true generic class -- very easily done:

type SimpleCsvParser<'a>() =
    member __.mapHeaderToProperties fields =
        let otype = typeof<'a>
        fields
        |> Array.map (fun f ->
            match otype.GetProperty(f,
                                    BindingFlags.Instance |||
                                    BindingFlags.Public |||
                                    BindingFlags.IgnoreCase |||
                                    BindingFlags.FlattenHierarchy) with
            | null ->
                // No property with that name: look for one aliased with ColumnMap.
                let aliased =
                    otype.GetProperties()
                    |> Array.filter (fun p ->
                        match p.GetCustomAttribute<ColumnMapAttribute>() with
                        | null -> false
                        | a -> a.Header = f)
                    |> Array.toList
                match aliased with
                | head::_ -> head
                | [] -> null
            | a -> a)

    member __.populate (line:string) (delimiter:char) (props: PropertyInfo[]) =
        let t = Activator.CreateInstance<'a>()
        let vals = line.Split(delimiter)
        for i in 0..vals.Length-1 do
            match (props.[i], vals.[i]) with
            | (p, _) when p = null -> ()
            | (_, v) when System.String.IsNullOrWhiteSpace v -> ()
            | (p, v) -> p.SetValue(t, convert(v.Trim(), p.PropertyType))
        t

    member this.parse (data:string) (delimiter:char) =
        let lines = data.Split [|'\r'; '\n'|] |> Array.filter (fun l -> String.notNullOrWhiteSpace l)
        let headerLine = lines.[0]
        let fields =
            headerLine.Split(delimiter)
            |> Array.map (fun l -> l.Trim())
            |> Array.filter (fun l -> String.notNullOrWhiteSpace l || String.notNullOrWhiteSpace headerLine)
        let props = this.mapHeaderToProperties fields
        lines
        |> Seq.skip 1
        |> Seq.map (fun l -> this.populate l delimiter props)
        |> Seq.toList

Note that convert, being a general purpose method, is not implemented in the class. Also note that the bizarre type TypeParameter<'a> = TP type definition isn't needed, as we use the class like this:

let parser = new SimpleCsvParser<Automobile>();

let recs2 = parser.parse data delimiter

recs2 |> Seq.iter (fun r -> printfn "%A %A %A %A %A %A\r\n" r.Make r.Model r.Year r.Type r.Price r.Comment)

As I mentioned, the C# code (excluding unit tests) took 30 minutes to write. The F# code, about 5 hours. A great learning experience!

But the real point is the still-unanswered question of whether to roll your own or use a third-party library. My view is that if the requirement is simple and unlikely to change, rolling your own should at least be considered before reaching for a third-party library, especially if the library has one or more of the following qualities:

- Has a significantly larger code base because it's trying to handle all sorts of scenarios

- Has many dependencies on other libraries

- Does not have good test coverage

- Does not have good documentation

- Is not extensible

- It takes more time to evaluate libraries than to roll the code!

- 13th January, 2020: Initial version