Analyzing GPU-bound scenarios with a high-level system overview and a breakdown of software and hardware queues is quick and accurate. It doesn’t require graphics expertise or depend on the type of graphics API used for rendering. It also works on any platform where we can capture the corresponding performance events from system tracing.

Computer graphics is an amazing, essential part of our everyday lives, whether we work on computers, watch movies, use smartphones, or even drive cars. The performance of graphics processors has dramatically increased over the last 10 years, and the influence of the video gaming industry on this process is hard to overestimate. At the same time, the continuous growth of GPU capabilities is opening new opportunities for game developers, encouraging them to invent breakthrough rendering techniques and effects to gain every possible hardware advantage. But this race between GPU hardware developers and game developers has a drawback: innovative rendering techniques often bump into hardware limits.

In this article, we’ll walk through a quick and easy way to see whether your game is GPU-bound using a high-level system overview.

Game Performance Fundamentals

Video game production is expensive, and investing in performance optimization is an important factor contributing to a game project’s profitability. Normally, different game genres (action, adventure, strategy, and others) have different performance requirements. If a game seems visually slow, with noticeable lags or delays in drawing artifacts, it almost certainly has performance issues that must be addressed.

A formal metric to measure game performance is frame rate, the number of frames rendered per second (FPS). FPS is used for benchmarking and ranking different applications: the higher the FPS, the better. In most cases, this approach is feasible. For example, an action game with a lot of motion doesn’t look good if FPS is low.
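Because FPS and frame time are reciprocals of each other, it is convenient to convert between them when reading a trace. The helper names below are our own, chosen for illustration; this is a minimal sketch rather than part of any tool.

```python
def fps_from_frame_time_ms(frame_time_ms: float) -> float:
    """Convert an average frame time in milliseconds to frames per second."""
    if frame_time_ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / frame_time_ms


def frame_time_ms_from_fps(fps: float) -> float:
    """Convert frames per second to the time budget of one frame in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps
```

For example, a 60 FPS target leaves a budget of roughly 16.7 milliseconds per frame.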

A modern game is a complex product consisting of multiple components:

- Rendering graphics

- Calculating physics

- Playing sounds

- Executing scripts

- Hosting network

- And more

Each component, separately or in combination, can affect game performance. That’s why it can be tricky to identify whether an application is GPU- or CPU-bound. Though it’s only one aspect of a game, it’s reasonable to start analysis from rendering, since graphics can be crucial to creating the game’s unique style, spirit, and atmosphere.

Classic Rendering Pipeline

There’s no way to determine precisely whether the GPU is a performance bottleneck without understanding the graphics rendering pipeline, the graphics programming model, and the role of a graphics driver in this procedure. A thorough analysis of GPU activity through the whole stack, from the application code to the hardware, requires significant expertise. Fortunately, a basic performance analysis is enough to see overall GPU utilization without going into great detail.

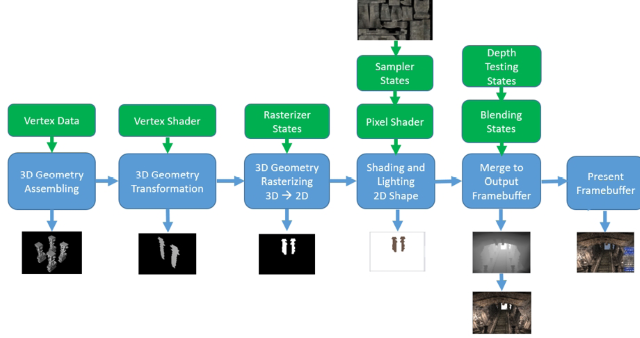

A rendering pipeline operates with resources and states. Resources bound to the pipeline specify what should be rendered and where. They can consist of geometries, textures, and render targets written in a proper format. Rasterization parameters, testing depth conditions, blending attributes, and other states specify how those resources should be interpreted and processed to generate an image on a screen. Render resources and states are tightly connected with GPU programs, also called shaders, which are executed in different stages of the rendering pipeline (Figure 1).

Figure 1. Rendering pipeline

The pipeline’s classic rendering process takes source data and sequentially modifies it, passing it through the same stages until it reaches the destination. Any rendered object is first transformed in a virtual space, and then projected onto a screen surface. After that, a visible part of that projection is colorized and merged with other rendered objects in a framebuffer.

The graphics programming model is simple. A draw context is configured, opened, and used to submit rendering commands in the correct order: the required resources, states, and programs must be bound to the pipeline before invoking any draw command that tells the pipeline to do its job. This procedure is repeated for as many objects as need rendering, until the final scene forms in a framebuffer. We can then push it to the screen with a buffer swap command. Regardless of whether an application uses OpenGL*, DirectX*, Vulkan*, or any other graphics API, this concept stays the same.

It’s now obvious how many complicated operations constitute a single draw. And each operation contributes to the draw duration. Individual draw durations vary, affecting the total frame rendering time. A long frame time may indicate a GPU-bound scenario, which we can confirm or reject after estimating the GPU load based on graphics driver performance markers.

Graphics Driver Activity

A common graphics program works with a graphics driver, but never directly with the GPU. Any time we open a draw context in our application, we implicitly create a corresponding interface to a graphics driver, known as a driver device context. To make rendering possible, the driver must perform a lot of work:

- Release and allocate memory blocks on the GPU

- Upload the resources necessary for rendering from the CPU to the GPU

- Set registers of the GPU execution units

- Upload GPU programs

- Transfer results back to the CPU

- And more

Any time we invoke a sequence of graphics API calls within the application code, the driver translates them into a sequence of commands eligible for the GPU. Commands are not executed on the GPU immediately. Instead, they accumulate in a command buffer. The driver constantly batches a series of commands into packets, and then pushes these packets into a device context queue, scheduling them for execution (Figure 2).

Figure 2. Command buffer

A device context queue can contain different types of packets with different types of commands. The prevailing command type in a packet defines the packet’s type. Each packet stays in the queue, waiting until the last command written in the previous packet has been executed on the GPU. (For example, see the selected render packet in Figure 2.)
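The batching idea can be illustrated with a toy model. Everything in it is hypothetical: the class names, the command kinds ("render", "copy"), and especially the fixed batch size, since real drivers decide when to flush in their own ways. It only shows commands accumulating in a buffer, being grouped into packets, and each packet taking the type of its prevailing command.

```python
from collections import Counter, deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Packet:
    commands: List[str]  # hypothetical command kinds, e.g. "render" or "copy"

    @property
    def kind(self) -> str:
        # The prevailing command kind defines the packet type.
        return Counter(self.commands).most_common(1)[0][0]


class ToyDriver:
    """Toy stand-in for a driver; flushing on a fixed batch size is an assumption."""

    def __init__(self, batch_size: int = 4) -> None:
        self.batch_size = batch_size
        self.command_buffer: List[str] = []
        self.context_queue: Deque[Packet] = deque()

    def record(self, command_kind: str) -> None:
        # Commands are not executed immediately; they accumulate first.
        self.command_buffer.append(command_kind)
        if len(self.command_buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Batch the accumulated commands into one packet and queue it.
        if self.command_buffer:
            self.context_queue.append(Packet(self.command_buffer))
            self.command_buffer = []
```

With a batch size of 3, recording two render commands followed by two copy commands yields one queued render packet, with one copy command still waiting in the buffer until the next flush.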

Exploring the device context queue can give us useful performance insights. For example, a large queue usually corresponds to a large amount of graphics work submitted to the GPU. Long packet execution times may be due to computationally intensive draw procedures. Long packet waits can be caused by inefficient rendering algorithms or synchronization.

Once we’ve identified all the packets relating to a single frame, we can roughly estimate the frame duration. It is the time range from the submission of the first command packet to the queue until the execution of the last command from the last packet submitted within that frame.
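As a minimal sketch of this estimate, suppose each packet has already been tagged with a frame number and two timestamps. The `PacketTiming` record and its field names are assumptions made for illustration, not the format of any particular trace.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class PacketTiming:
    frame: int           # frame the packet belongs to (assumed already known)
    queued_ms: float     # when the packet was pushed to the device context queue
    completed_ms: float  # when its last command finished executing on the GPU


def frame_duration_ms(packets: Iterable[PacketTiming], frame: int) -> float:
    """Time from the first packet submission to the last completion in a frame."""
    in_frame = [p for p in packets if p.frame == frame]
    if not in_frame:
        raise ValueError(f"no packets recorded for frame {frame}")
    first_queued = min(p.queued_ms for p in in_frame)
    last_completed = max(p.completed_ms for p in in_frame)
    return last_completed - first_queued
```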

However, even if a frame time is long, we can’t determine whether our application is GPU-bound until we explore the GPU hardware queue associated with the graphics processor that performs the rendering. The GPU is a shared resource that can serve multiple applications rendering graphics simultaneously. A long rendering time may be the result of concurrent execution with another application that acquired the GPU context at the same time.

The hardware GPU queue (Figure 3) provides a clear picture of the overall GPU utilization. We can use this queue to identify how busy the GPU is, and which application is rendering at any given time.

Figure 3. Hardware GPU queue

The GPU queue snapshot in Figure 3 shows at least two simultaneously rendered applications, differentiated by the colors of the command packets. Neither application is GPU-bound. The frame time of the application highlighted in blue is not much longer than 11 milliseconds, which corresponds to approximately 80 FPS, and 80 FPS is usually high enough. The green one seems to be a background process with very short frames (about 5 milliseconds each). Moreover, the GPU isn’t even as busy as it could be: we can see multiple gaps between executing command packets, corresponding to periods when the GPU is idle.
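The idle gaps and the overall load can also be computed rather than read off a timeline. The sketch below assumes the hardware queue has been reduced to a list of (start, end) execution intervals in milliseconds, possibly overlapping when packets from several applications are in flight; the function names are ours.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start_ms, end_ms) of one packet executing on the GPU


def merge_busy(intervals: List[Interval]) -> List[Interval]:
    """Merge overlapping or touching execution intervals into busy spans."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def gpu_utilization(intervals: List[Interval], window_start: float, window_end: float) -> float:
    """Fraction of the window during which at least one packet was executing."""
    if window_end <= window_start:
        raise ValueError("window must have positive length")
    busy = 0.0
    for start, end in merge_busy(intervals):
        clipped_start = max(start, window_start)
        clipped_end = min(end, window_end)
        if clipped_end > clipped_start:
            busy += clipped_end - clipped_start
    return busy / (window_end - window_start)


def idle_gaps(intervals: List[Interval]) -> List[Interval]:
    """Periods between consecutive busy spans, when the GPU had nothing to execute."""
    merged = merge_busy(intervals)
    return [(prev_end, next_start)
            for (_, prev_end), (next_start, _) in zip(merged, merged[1:])]
```

A utilization close to 1.0 with no gaps matches a fully busy queue, while visible gaps like those in Figure 3 show up as entries in `idle_gaps`.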

The concept of analyzing software and hardware queues is quite promising from a reliability perspective. Plus, these queues are easy to build, since we know how to acquire the required performance data.

System Event Tracing

Regardless of whether we work on Windows*, Linux*, macOS*, or any other operating system, we can connect to a system event trace layer, which logs different types of events associated with key execution points within different system modules. Some of these events are suitable for performance analysis, and the graphics driver is no exception. Any time the driver pushes a command packet into a device context queue, uploads a command packet to the GPU, or executes the last command written in a command packet, it submits corresponding events to the system tracing layer so that we can easily acquire them. For example, if we want to build the device context and GPU command packet queues on Windows, we need to capture several events from the Microsoft-Windows-DxgKrnl provider of the Event Tracing for Windows* (ETW*) system.

Attributes encoded in the event data let us bind related events together and determine the current status of each packet in a queue at any time.
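To make the binding step concrete, here is a minimal sketch that groups events by a shared packet identifier. The `TraceEvent` record, its fields, and the three event kinds are hypothetical placeholders; they are not the actual event names or payloads of the DxgKrnl provider or any other tracing system.

```python
from typing import Dict, Iterable, NamedTuple


class TraceEvent(NamedTuple):
    timestamp_ms: float
    packet_id: int  # hypothetical attribute shared by all events of one packet
    kind: str       # hypothetical kinds: "queued", "uploaded", "completed"


# Status a packet is in after each kind of event has been seen.
STATUS_AFTER = {
    "queued": "in device context queue",
    "uploaded": "in GPU hardware queue",
    "completed": "completed",
}


def packet_status_at(events: Iterable[TraceEvent], time_ms: float) -> Dict[int, str]:
    """Replay events in time order and report each packet's status at time_ms."""
    status: Dict[int, str] = {}
    for event in sorted(events):  # NamedTuples sort by timestamp first
        if event.timestamp_ms > time_ms:
            break
        status[event.packet_id] = STATUS_AFTER[event.kind]
    return status
```

Sampling `packet_status_at` at successive timestamps gives the contents of both queues over time, which is the information a timeline view draws.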

Graphics Application Analysis

System event tracing is well documented and can be used on any platform. There are many tools that can capture or visualize system tracing data. However, the number of tools capable of proper simultaneous analysis of device context and GPU hardware queues is limited. Intel® GPA Graphics Trace Analyzer is one of these tools, designed to analyze the performance of graphics applications with different levels of detail, from a high-level system analysis to a single frame per-draw analysis.

Now let’s apply what we’ve learned to a real-life, graphics-intensive game. We can try it on a workstation with a graphics processor in the middle performance range to make our experiment predictable. We’ll use the just-released Borderlands 3*, a well-known game from the first-person shooter genre. We’ll run it on the Intel® NUC Mini PC NUC8i7HVK, which has two graphics processors: integrated Intel® HD Graphics 630 and discrete AMD Radeon RX Vega M GL*. If we run this game at 2560x1440 resolution adapted for widescreen monitors and switch all graphics options in the game to a high profile, the game engine selects the most capable graphics processor for rendering, which seems to be Radeon Vega on this device.

The first thing that catches our eye after five minutes of play is a lag between changing the state of an input device, such as a mouse or a gamepad, and the scene changing on the screen. The animation of some moving objects also looks a bit ragged. If we capture a trace and open it with Intel GPA Graphics Trace Analyzer, we can see all the signs of a GPU-bound scenario at first glance in the timeline tracks for the device context queue and the GPU hardware queue (Figure 4).

Figure 4. Device context queue and GPU hardware queue

The GPU queue has no gaps. It’s fully busy, continuously executing commands submitted from the game. The device context queue is large, which means a lot of graphics work is prepared and waiting for rendering. We can also measure the frame duration by selecting all command packets executed on the GPU within a single frame. The frame duration of about 48.8 milliseconds corresponds to roughly 20.5 FPS (1000 / 48.8), which is clearly insufficient for this action game. Games like this one usually need 60+ FPS to deliver the best experience.
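The two observations above, a saturated hardware queue and a frame rate below target, can be combined into a rough check. The function name and both default thresholds are assumptions chosen for illustration; a sensible target frame rate depends on the game, and this heuristic is no substitute for inspecting the queues themselves.

```python
def looks_gpu_bound(gpu_utilization: float,
                    frame_time_ms: float,
                    target_fps: float = 60.0,
                    busy_threshold: float = 0.95) -> bool:
    """Rough heuristic: the GPU is (almost) never idle and the frame rate misses the target.

    gpu_utilization is the busy fraction of the GPU hardware queue in [0, 1].
    Both default thresholds are illustrative assumptions.
    """
    if not 0.0 <= gpu_utilization <= 1.0:
        raise ValueError("gpu_utilization must be between 0 and 1")
    if frame_time_ms <= 0:
        raise ValueError("frame_time_ms must be positive")
    measured_fps = 1000.0 / frame_time_ms
    return gpu_utilization >= busy_threshold and measured_fps < target_fps
```

A gap-free queue with a 48.8 millisecond frame time is flagged by this check, while a queue with visible idle gaps and short frames is not.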

Analyzing GPU-bound scenarios using a high-level system overview, and exploring software and hardware queue breakdowns, gives us several benefits. This analysis is quick and accurate. It doesn’t require graphics expertise or depend on the type of graphics API used for rendering. It also works on any platform where we can capture corresponding performance events from system tracing.
