One of our key considerations is reducing the programmer's burden. Julia provides a range of modern primitives designed to compose effectively. In this article, we’ll discuss some of the tradeoffs we make to try to simplify the mental model for the programmer. One important design principle of Julia is to make common tasks easy and difficult tasks possible.

Taking advantage of multicore processors is a crucial capability for any modern programming language. Programmers need the best possible throughput for their applications, so we’re going to show how the new multithreading runtime in Julia* v1.3 unleashes the full power of a modern CPU with minimal hassle.

One of our key considerations is reducing the programmer's burden. Julia provides a range of modern primitives designed to compose effectively. We’ll discuss some of the tradeoffs we make to try to simplify the mental model for the programmer. One important design principle of Julia is to make common tasks easy and difficult tasks possible. This is demonstrated in multiple aspects of the language, e.g.:

- Automatic memory management

- Combining functions, objects, and templates into a single dispatch mechanism

- Optional type inference for performance

We now extend this to parallelism. By building on the language's existing concurrency mechanism, we’ve added parallel capabilities that preserve the (relative) simplicity of single-threaded execution for existing code, while allowing new code to benefit from multithreaded execution. This work has been inspired by parallel programming systems such as Threading Building Blocks.

In this paradigm, any piece of a program can be marked for parallel execution, and a task will be started to automatically run that piece of code on an available thread. A dynamic scheduler decides when and where to launch tasks. This model of parallelism has many helpful properties. We see it as somewhat analogous to garbage collection. With garbage collection, you freely allocate objects without worrying about when and how they’re freed. With task parallelism, you freely spawn tasks―potentially millions of them― without worrying about when and where they eventually run.

The model is portable and free from low-level details. The programmer doesn’t need to manage threads, or even know how many processors or threads are available. The model is nestable and composable. Parallel tasks can be started that call library functions that themselves start parallel tasks—and everything works correctly. This property is crucial for a high-level language where a lot of work is done by library functions. The programmer can write serial or parallel code without worrying about how the underlying libraries are implemented. This model isn't limited to Julia libraries, either. We've shown that it can be extended to native libraries such as FFTW*, and we are working on extending it to OpenBLAS*.

Running Julia with Threads

Let’s look at some examples using Julia v1.3 launched with multiple threads. To follow along on your own machine, you’ll need to download the latest Julia release (currently v1.3.0) from https://julialang. org/downloads. Run ./julia with the environment variable JULIA_NUM_THREADS set to the number of threads to use. Alternatively, after installing Julia, follow the steps at https://julialang.org/downloads/platform/ to install the Juno IDE*. It will automatically set the number of threads based on available processor cores. It also provides a graphical interface for changing the number of threads.

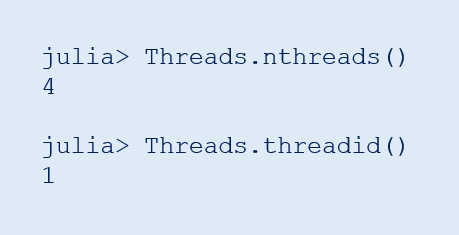

We can verify that threading is working by querying the number of threads and the ID of the current thread:

Tasks and Threads

A visual way to demonstrate that threads are working is to watch the scheduler picking up work in semirandom, interleaving orders. Previous versions of Julia already had a ‘@threads for’ macro which would split a range and run a portion on each thread with a static schedule. So in the range below, thread 1 would run items 1 and 2; thread 2 would run items 3 and 4; and so on.

It's now possible to run the same program with a completely dynamic schedule. We use the new ‘@spawn’ macro with the existing ‘@sync’ macro to delineate the work items.

Now, let’s look at some more practical examples.

Parallel Merge Sort

The classic merge sort algorithm shows a nice performance benefit from using multiple threads. This function will create O(n) subtasks, which will sort independent portions of the array before merging them into a final sorted copy of the input. We use here the ability of each task to return a value via fetch.

Figure 1 shows how adding more threads affects scaling. Since we’re using in-process threads, we could further optimize by mutating the input in place and reusing the work buffers for additional performance.

1 - Scaling ratios of psort on a server with 40 hyperthreads (two Intel® Xeon® Silver 4114 processors @ 2.20GHz)

While not demonstrated here, fetch would also automatically propagate exceptions from the child task.

Parallel Prefix

Prefix sum (also known as “scan sum”) is another classic problem that can benefit nicely from multiple threads. The parallel version of the algorithm computes partial sums by arranging the work into two trees. The short implementation below can take advantage of all cores and SIMD units available on the machine:

Figure 2 shows how adding more threads affects scaling.

Several features of Julia combine to make it particularly easy to express this simple yet performant implementation. Under the hood, the system automatically compiles versions of the function optimized for different types of arguments. The compiler can also automatically specialize the function for a specific CPU model, both ahead-of-time and just-in-time. Julia ships with a “system image” of code precompiled for a reasonable range of CPUs, but if the processor used at runtime supports a larger feature set, the compiler will automatically generate better-tailored code. Meanwhile, the threading system adapts to the available cores by dynamically scheduling work.

2 - Scaling ratios of prefix_threads on a server with 40 hyperthreads (two Intel® Xeon® Silver 4114 processors @ 2.20GHz)

Parallel-Aware APIs

Several operations in application code must be made thread-aware to be used safely in parallel. These user-facing APIs include:

- Concurrency basics:

Task, and associated functions including schedule, yield, and wait - Mutexes:

ReentrantLock and Condition variables, including lock, unlock, and wait - Synchronization primitives:

Channel, Event, AsyncEvent, and Semaphore - I/O and other blocking operations: Including

read, write, open, close, and sleep - Experimental Threads module: Various building blocks and atomic operations

Scheduler Design

A prototype implementation of the partr scheduler was first written for us in C by Kiran Pamnany while at Intel in late 20161, following research on cache-efficient scheduling2. The goal of this work was composition of threaded libraries with a globally depth-first work ordering. partr implements this using an approximate priority queue, where the priorities are set equal to the thread ID of the thread launching a task.

Foreign Libraries

An important motivation for this work was our desire to better support multithreaded libraries without CPU oversubscription killing performance due to cache-thrashing and frequent context switching. Previously, the only options were for the user to decide up-front to limit Julia to N threads, and to tell the threaded library (such as libfftw or libblas) to use M ÷ N cores. The most common choices are probably 1 and M, so only part of the application can benefit from multiple cores. However, given our ability to quickly create and run work items in our thread pool, we're looking at how to let external libraries integrate with our thread pool. This is an ongoing area of exploration as we get feedback on the performance and API needs of various libraries.

We've successfully adapted FFTW to run on top of our threading runtime instead of its own (a Pthreads-based workpool). This took us only a few hours. (We were fortunate to be able to enlist the help of that library's author.) Without any performance tuning (yet), it got competitive performance results. We learned important lessons to tightly optimize our scheduler latency, which is now ongoing work to achieve exact performance parity. Even with some overhead imposed by generality, however, we expect that the ability to compose thread-aware users and achieve resource sharing from the partr scheduler will make this an overall improvement in program operation.

Looking to the Future

Julia's approach to multithreading combines many previously known ideas in a novel framework. While each in isolation is useful, we believe that―as is so often the case―the sum is greater than the parts. From this point, we hope to see a rich set of composable parallel libraries develop within the Julia ecosystem.

References

- https://github.com/kpamnany/partr

- Shimin Chen, Phillip B. Gibbons, Michael Kozuch, Vasileios Liaskovitis, Anastassia Ailamaki, Guy E. Blelloch, Babak Falsafi, Limor Fix, Nikos Hardavellas, Todd C. Mowry, and Chris Wilkerson. Scheduling Threads for Constructive Cache Sharing on cmps. In Proceedings of the Nineteenth Annual ACM Symposium on Parallel Algorithms and Architectures, SPAA ’07, pages 105–115, New York, NY, USA, 2007. ACM.