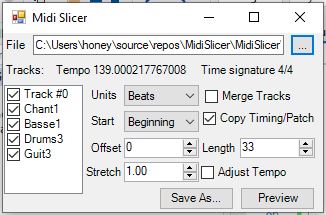

This tool lets you make basic edits to a MIDI file, preview them, and save the result. It is built on a MIDI file library with many features, which you can use in your own MIDI projects.

Introduction

Consider visiting my followup article here. It has improved code and a more thorough explanation of MIDI and the Midi library API.

Quite some time ago, I wrote a VST and FL Studio plugin which used looped MIDI streams to play audio. I wrote it in C++, but I prototyped many of the MIDI operations in C# first. Later, I expanded this C# prototype code into a MIDI file editing library, which I've provided here, along with an example utility that allows for simple editing of a MIDI file.

Update: Added time signature support. Small bugfix in playback.

Update 2: Added MIDI message classes for each type of MIDI operation, and several features to the MidiSlicer app

Update 3: Added Normalize and Level scaling. Made the Offset and Length floating point

Update 4: Improvements to correctness of API created MIDI sequences and files, better preview and save.

Update 5: Added Transpose() option to API and to GUI

Update 6: API enhancements and improvement to overall GUI behavior

Conceptualizing this Mess

MIDI stands for Musical Instrument Digital Interface. It lets you automate digital instruments, similar to how an old player piano works. MIDI carries all the note information, basically the "sheet music" for a song, which it can then broadcast to up to 16 different digital audio devices such as drum machines, synthesizers, or MIDI capable pianos.

Your Windows machine contains a default device that can play numerous synthesized sounds emulating instruments like pianos and guitars. Your phone does too. Your system can use this to play MIDI files through your default audio device, usually your primary sound card or audio hardware. MIDI is often used by phones to store ring tones. That said, MIDI was originally designed for musicians, and its primary purpose is to let them record or "sequence" a performance and save it to a file for replay or editing.

The MIDI Protocol

MIDI is first and foremost a wire protocol, and secondly a file format. The protocol consists of realtime MIDI "messages". In essence, a MIDI file is just the protocol stream stored as a file, along with a delta time for each "message": the number of ticks to wait after the previous message before this one is played. A delta time plus a message is called a MIDI event. MIDI events are stored as a stream in the file for replay later. Therefore, understanding the protocol is fundamental to understanding the file format.

Having been designed in the 1980s, MIDI defines an 8-bit wire protocol. Any strings are ASCII, and most values are 0-127 (7 bits plus a leading 0 bit), with some values being 0-255 (8 bits). There are occasionally multibyte values (larger than 8 bits) in the stream. These are always big-endian, so on little-endian platforms such as Intel, you'll have to swap the byte order.

A MIDI message contains, at the very least, an 8-bit "status byte." A message may contain additional fields or payload depending on the value of the status byte. The status byte tells us both the "type" of the message (in the high 4 bits) and the "channel" (MIDI "device") the message is intended for (in the low 4 bits). Remember that the MIDI protocol allows control of up to 16 devices, from here on referred to as channels. Most MIDI messages have additional fields. For example, a "note on" message contains the note number and the velocity of the note to be played. The following MIDI message is composed of 3 bytes. In the first byte, the 9 half is the code for note on, and the 0 half is the code for channel 0. Next, the note number for note C, octave 5 (48 hex below) is to be played at maximum velocity (127/7F), as indicated by the final byte. Data bytes must have the high bit set to zero, which leaves a numeric range of 0-127:

90 48 7F

This will cause the device to strike the note and hold it until a note off message is found for that same note:

80 48 7F

The only difference between a note off and a note on is that the first nibble is 8 hex instead of 9 hex. Most devices ignore the velocity byte of a note off message, but you must send it anyway. I typically use the same value I specified in the note on, or zero in cases where I don't know the corresponding note on velocity. Either way should work with the MIDI devices out there, but it's always possible that a device is weird. With this protocol, you have to be somewhat forgiving of dodgy devices, and that requires good, old fashioned testing.

Again, different messages are different lengths. The patch/program change message indicates which "sound" a channel will use. Often MIDI devices such as synthesizers can produce many different kinds of sounds. This message allows you to send a 7-bit (0-127/7F) code (encoded as a full byte with the high bit 0) that indicates the patch to use. Selecting patch 2 gives us:

C0 02
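The three example messages above can be picked apart with a few bit operations. The following is a minimal sketch, not code from the library: the class and method names are hypothetical, and it only recognizes the three message types discussed so far.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Midi library.
static class ChannelMessageSketch
{
    // Splits a status byte into its message type (high nibble)
    // and its channel (low nibble).
    public static (int Type, int Channel) SplitStatus(byte status)
    {
        return (status >> 4, status & 0x0F);
    }

    // Produces a readable description of a complete message.
    public static string Describe(byte[] msg)
    {
        var (type, channel) = SplitStatus(msg[0]);
        switch (type)
        {
            case 0x9:
                return $"note on, channel {channel}, note {msg[1]}, velocity {msg[2]}";
            case 0x8:
                return $"note off, channel {channel}, note {msg[1]}, velocity {msg[2]}";
            case 0xC:
                return $"patch change, channel {channel}, patch {msg[1]}";
            default:
                return $"type {type:X}, channel {channel}";
        }
    }
}
```

For example, `ChannelMessageSketch.Describe(new byte[] { 0x90, 0x48, 0x7F })` describes a note on for note 72 (48 hex) on channel 0 at velocity 127.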

You may have noticed that aside from the status byte, all of our values are 7-bit values encoded as 8-bit bytes with a high bit of zero, leaving an effective range of 0-127/7F. Status bytes, by contrast, always have the high bit set, so a reader can always tell the two apart. This matters because of an optimization called a running status byte, which I'll cover briefly and which is also covered at the links in the Further Reading section.

A MIDI message may be a complete message with a status byte, or the status byte may be omitted in which case the previous status will be used. This allows for "runs" of multiple messages of the same type and sent to the same channel but with different parameters. Most of the time, this just causes extra complication for an optimization that often doesn't matter, so you don't really have to emit it but you have to be able to read it. That being said, MIDI is technically bandwidth limited so if you have a lot of events it might make sense to emit it as well.
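To make running status concrete, here is a rough sketch of a reader that expands a stream back into complete messages. It is an illustration under stated assumptions rather than the library's reader: the names are hypothetical, the input holds only channel messages (status 80-EF hex) with no delta times, and system messages are rejected.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; not part of the Midi library.
static class RunningStatusSketch
{
    // Number of data bytes that follow a channel message status byte.
    // Patch change (C) and channel pressure (D) carry one; the rest carry two.
    static int DataLength(byte status)
    {
        int type = status >> 4;
        return (type == 0xC || type == 0xD) ? 1 : 2;
    }

    // Expands a stream that may use running status into complete
    // messages, each with its own status byte.
    public static List<byte[]> Expand(byte[] stream)
    {
        var result = new List<byte[]>();
        byte status = 0;
        int i = 0;
        while (i < stream.Length)
        {
            if ((stream[i] & 0x80) != 0)
            {
                // High bit set: this is a new status byte.
                status = stream[i++];
                if (status >= 0xF0)
                    throw new NotSupportedException("System messages are not handled in this sketch.");
            }
            else if (status == 0)
            {
                throw new FormatException("Data byte with no status in effect.");
            }
            // Otherwise the high bit is clear and the previous status is reused.
            int len = DataLength(status);
            if (i + len > stream.Length)
                throw new FormatException("Truncated message.");
            var msg = new byte[1 + len];
            msg[0] = status;
            Array.Copy(stream, i, msg, 1, len);
            result.Add(msg);
            i += len;
        }
        return result;
    }
}
```

Feeding it `90 48 7F 4C 7F 4F 7F` yields three note on messages, each beginning with 90, even though the status byte appears only once in the input.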

Occasionally in MIDI messages, such as the pitch bend (status nibble E), you'll find that 14-bit values are used. These are encoded as the least significant 7 bits (in an 8-bit field with high bit 0) followed by the most significant 7 bits (in an 8-bit field with high bit 0).
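The 14-bit packing comes down to two shifts and two masks. This is a small sketch with hypothetical names, not the library's own conversion code:

```csharp
using System;

// Hypothetical helper for illustration; not part of the Midi library.
static class FourteenBitSketch
{
    // Combines the two 7-bit data bytes (least significant first on the wire)
    // into a single value in the range 0-16383.
    public static int Decode(byte lsb, byte msb)
    {
        return (lsb & 0x7F) | ((msb & 0x7F) << 7);
    }

    // Splits a 14-bit value into its two 7-bit data bytes.
    public static (byte Lsb, byte Msb) Encode(int value)
    {
        if (value < 0 || value > 0x3FFF)
            throw new ArgumentOutOfRangeException(nameof(value));
        return ((byte)(value & 0x7F), (byte)((value >> 7) & 0x7F));
    }
}
```

A centered pitch wheel is the midpoint value 8192, which encodes as the data bytes 00 40, so the full message on channel 0 is E0 00 40.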

You can find a complete list of MIDI messages at the links in the Further Reading section.

The MIDI File Format

A MIDI file is laid out in "chunks." Each chunk begins with a FourCC, a 4-character ASCII code that indicates the "type" of the chunk. This is followed by a big-endian 4-byte integer that gives the length of the chunk data, and then the data itself. The first chunk must be of type "MThd", and the only other common chunk type is "MTrk". Any chunk types not understood should be skipped.

The "MThd" chunk contains the MIDI file type (usually 1), the track count, and the timebase (commonly 480), each encoded as big-endian 16-bit words.
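As an illustration of the chunk layout and the big-endian fields, the sketch below reads the header chunk from a byte array. The names are hypothetical and this is not how MidiFile.ReadFrom() is implemented; it also assumes a ticks-per-quarter-note timebase rather than SMPTE timing.

```csharp
using System;
using System.Text;

// Hypothetical helper for illustration; not part of the Midi library.
static class HeaderChunkSketch
{
    // Big-endian reads: the most significant byte comes first.
    static int ReadUInt16BE(byte[] b, int i)
    {
        return (b[i] << 8) | b[i + 1];
    }

    static uint ReadUInt32BE(byte[] b, int i)
    {
        return ((uint)b[i] << 24) | ((uint)b[i + 1] << 16)
             | ((uint)b[i + 2] << 8) | b[i + 3];
    }

    // Parses the "MThd" chunk at the start of a MIDI file.
    public static (int Type, int Tracks, int TimeBase) Parse(byte[] file)
    {
        if (file.Length < 14)
            throw new FormatException("Too short to contain a header chunk.");
        string id = Encoding.ASCII.GetString(file, 0, 4);
        if (id != "MThd")
            throw new FormatException("Not a MIDI file.");
        uint length = ReadUInt32BE(file, 4);
        if (length < 6)
            throw new FormatException("Header chunk is too short.");
        // Three 16-bit words: file type, track count, timebase.
        return (ReadUInt16BE(file, 8), ReadUInt16BE(file, 10), ReadUInt16BE(file, 12));
    }
}
```

The first track chunk begins at offset 8 plus the header length, which is why the length field is read even though the three words occupy a fixed 6 bytes.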

The "MTrk" chunk contains a track which is a sequence of note on/off message events and other MIDI events. Each event is a delta time followed by a partial or complete MIDI message. The first MIDI track in a MIDI file is usually "special" in that it contains meta information about the MIDI file, including critical data like the tempo information, but also things like lyrics.

The delta time is encoded as a "variable length integer" which I won't cover here, but is covered at the Standard MIDI File Format link in the Further Reading section. It indicates the number of MIDI "ticks" since the last event (hence delta.) I'll get into timing below.
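For readers who want a quick picture of the encoding anyway: each byte carries 7 bits of the value, most significant group first, and the high bit is set on every byte except the last. The sketch below decodes one such value. The names are hypothetical and it is not the library's reader.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Midi library.
static class VarLenSketch
{
    // Reads a variable length integer starting at index and
    // advances index past it.
    public static int Read(byte[] data, ref int index)
    {
        int value = 0;
        // The Standard MIDI File format limits these to 4 bytes.
        for (int n = 0; n < 4; n++)
        {
            byte b = data[index++];
            value = (value << 7) | (b & 0x7F);
            // A clear high bit marks the final byte.
            if ((b & 0x80) == 0)
                return value;
        }
        throw new FormatException("Variable length integer longer than 4 bytes.");
    }
}
```

With this scheme, a delta of 480 ticks is stored as the two bytes 83 60, while any delta below 128 fits in a single byte.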

The message that follows can be a full MIDI message, or partial MIDI message with the status byte omitted as described previously.

Timing in MIDI Files

MIDI timing is measured in ticks. The duration of a tick depends on the tempo and on the timebase of the MIDI file, which is measured in ticks-per-quarter-note, also known as pulses-per-quarter-note or PPQ. The timebase gives you the number of ticks in one beat at the default 4/4 time. The default tempo is 120 beats per minute. The tempo and time signature are set using MIDI events with special MIDI "meta" messages (status byte of FF) and can change throughout playback. These are typically in the first track, and are global to all tracks.
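The relationship between ticks, timebase, and tempo is plain arithmetic. Here is a minimal sketch with hypothetical names, assuming the tempo stays constant over the span being converted:

```csharp
using System;

// Hypothetical helper for illustration; not part of the Midi library.
static class TickTimingSketch
{
    // MIDI stores tempo as microseconds per quarter note.
    // 120 beats per minute works out to 500,000.
    public static double MicrosecondsPerQuarterNote(double bpm)
    {
        return 60000000.0 / bpm;
    }

    // Converts a tick count to milliseconds at a fixed tempo.
    public static double TicksToMilliseconds(long ticks, int timeBase, double bpm)
    {
        return ticks * MicrosecondsPerQuarterNote(bpm) / timeBase / 1000.0;
    }
}
```

At the default 120 beats per minute with a timebase of 480, one beat (480 ticks) lasts 500 milliseconds, so a single tick lasts a little over a millisecond. A file with tempo changes needs this applied piecewise, one span per tempo.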

I've included a couple of MIDI files I downloaded in the project directory for testing. Any copyright information is available in the MIDI file itself. A-Warm-Place.mid uses features not fully supported by this library but it "mostly" plays. The reason I've included it is it's a useful test for extending the library in the future to support SMPTE timing and proper sysex transmission.

Coding this Mess

The main class is MidiFile, suitably named because this class represents MIDI data in the MIDI file format. It's not necessarily backed by a physical file. It can be created and operated on entirely in memory. It provides access to common features that apply to all tracks, plus timing information, and access to the meta information in track 0. Read a MIDI file using MidiFile.ReadFrom() and write a MIDI file using WriteTo().

The other really important class here is MidiSequence which represents a single sequence or track in a MidiFile. This class allows you to access all of its MidiEvents either as relative delta based or absolute time, and provides access to any meta information stored in the sequence. Sequences can be merged with Merge() or concatenated with Concat(). They can be stretched or compressed with Stretch(). You can retrieve a range of events using GetRange(). Note that some of these operations appear on MidiFile as well and those will operate on each track/sequence in the file.

In the demo code, we process each track depending on the settings in the UI:

    // velocity normalization and scaling
    if (NormalizeCheckBox.Checked)
        trk.NormalizeVelocities();
    if (1m != LevelsUpDown.Value)
        trk.ScaleVelocities((double)LevelsUpDown.Value);
    // offset and length of the selection
    var ofs = OffsetUpDown.Value;
    var len = LengthUpDown.Value;
    if (0 == UnitsCombo.SelectedIndex)
    {
        // units are beats: convert to ticks and clamp to the file length
        len = Math.Min(len * _file.TimeBase, _file.Length);
        ofs = Math.Min(ofs * _file.TimeBase, _file.Length);
    }
    ...
    if (1m != StretchUpDown.Value)
        trk = trk.Stretch((double)StretchUpDown.Value, AdjustTempoCheckBox.Checked);

First, we handle velocity normalization and scaling. Next, we grab the offset and length of the selection. If they are specified in beats, we multiply by the TimeBase to convert them to ticks. The Math.Min() calls just clamp the values to the maximum allowable length.

Next, if the offset is not 0 or the length is not the full length of the sequence, we get the range of events within that span.

I've omitted a bunch of code in the middle from the listing above, but it handles the rest of the features in the UI by calling the appropriate MidiSequence API methods.

Finally, if we've specified a stretch value other than 1, we call Stretch() to stretch the track.

MidiEvent simply contains a Position in ticks, and a MidiMessage. Depending on whether this event was retrieved through Events or AbsoluteEvents, the Position will be a delta time or an absolute time, respectively.

MidiMessage and its derivatives represent MIDI messages of various lengths such as MidiMessageByte and MidiMessageWord. There are also the special MidiMessageMeta and MidiMessageSysex classes which represent a MIDI meta message and a MIDI system exclusive message respectively. See the Standard MIDI File Format link in the Further Reading section for more about these messages. In addition, there are MIDI messages for each type of MIDI operation, such as MidiMessageNoteOn, MidiMessageCC, and MidiMessageChannelPitch.

MidiContext is a class that represents the current "state" of a MIDI sequence. When using GetContext(), one of these instances will be returned from the function and it will give you all of the current note velocities, control positions, pitch wheel position, aftertouch information and the rest. This allows you to know what is playing and how at any given moment within the sequence.

MidiUtility provides low level MIDI features and you shouldn't typically need to use it directly. It provides some low level IO methods, byte swapping, and conversion of tempo/microtempo.

Note that to set the tempo or the time signature, you must add a MidiMessageMeta message for each tempo or time signature change. In most files, these should be added to track #0.
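For reference, this is what a tempo change looks like at the byte level. The sketch builds the raw "set tempo" meta message (FF 51 03 followed by the tempo as a 3-byte big-endian count of microseconds per quarter note). It deliberately does not use MidiMessageMeta, whose constructor is not shown in this article, and the names here are hypothetical.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Midi library.
static class TempoMetaSketch
{
    // Builds the raw bytes of a "set tempo" meta message for the given
    // tempo in beats per minute.
    public static byte[] Build(double bpm)
    {
        if (!(bpm > 0))
            throw new ArgumentOutOfRangeException(nameof(bpm));
        double micros = Math.Round(60000000.0 / bpm);
        // The payload is only 3 bytes wide.
        if (micros < 1 || micros > 0xFFFFFF)
            throw new ArgumentOutOfRangeException(nameof(bpm));
        int m = (int)micros;
        return new byte[]
        {
            0xFF, 0x51, 0x03,                         // meta, type "set tempo", 3 data bytes
            (byte)(m >> 16), (byte)(m >> 8), (byte)m  // big-endian microtempo
        };
    }
}
```

At 120 beats per minute, the microtempo is 500,000 (07 A1 20 hex), so the message is FF 51 03 07 A1 20. In a file, it is preceded by a delta time like any other event.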

Limitations

- This library currently only works with Windows even though most of it is portable.

- While it can read sysex messages from a file, it won't transmit them correctly.

- It does not support SMPTE timing.

- The loading and saving could be more robust in terms of handling corrupt files.

- Track #0 doesn't seem to be fully recognized when a file is imported into FL Studio. I have yet to track down why. WMP seems to handle it fine, and FL does respect the timing and patch info, so it doesn't seem to be a show stopper.

Points of Interest

The Preview() methods do not use Win32 MCI "play" to play the file or sequence. Instead, the sequence is played on the calling thread using C# and direct calls to the Win32 MIDI output device API. Getting the timing right in that method was a total bear. Because playback runs in a tight, CPU intensive loop, you may want to dispatch it on a separate thread. It may be possible to do this in a more CPU efficient manner by replacing the hard loop with a timer callback, but that's not trivial to implement. See the demo code for the Preview thread handling.

History

- 18th March, 2020 - Initial submission

- 18th March, 2020 - Update 1: Added time signature support, bugfix in playback

- 18th March, 2020 - Update 2: Added several features to MidiSlicer and to the MIDI API

- 18th March, 2020 - Preview now loops.

- 20th March, 2020 - Added Normalize and Level scaling. Made the Offset and Length floating point

- 20th March, 2020 - Bugfix and improvement to Preview/SaveAs implementations

- 21st March, 2020 - Improved timing in UI and of rendered tracks

- 22nd March, 2020 - Improved API, save, and preview

- 22nd March, 2020 - Added Transpose() and Transpose GUI option

- 22nd March, 2020 - Added copyTimingAndPatchInfo option to GetRange() API methods

- 23rd March, 2020 - Improvements to API, and overall GUI functionality