The goal of this article is to show how easy it is to get comprehensive, objective, and reproducible graph analytics performance data without obfuscation or resorting to benchmarking tricks.

What Is Graph Analytics And Why Does It Matter?

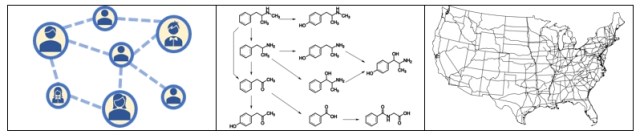

A graph is a good way to represent a set of objects and the relations between them (Figure 1). Graph analytics is the set of techniques to extract information from connections between entities.

Figure 1. Graphs are everywhere

For example, graphs analytics can be used to:

- Get recommendations among friends in a social network

- Find cut points in a communication network or electrical grid

- Determine drug effects on a biochemical pathway

- Detect robocalls in telecommunications network

- Find optimal routes between locations on a map

Graph analytics has been getting a lot of attention lately, possibly because Gartner listed it among the top 10 data and analytics technology trends for 2019:

“The application of graph processing and graph DBMSs will grow at 100 percent annually through 2022 to continuously accelerate data preparation and enable more complex adaptive data science.”

(Source: Gartner Identifies Top 10 Data and Analytics Technology Trends for 2019)

Intel has a long history of leadership in graph analysis. For example, Intel coauthored the GraphBLAS* specification to formulate graph problems as sparse linear algebra. Though the GraphBLAS API was just published in 2017, the initial proposal and manifesto were published more than 10 years ago. Today, the same industry and academic partnership is coauthoring the forthcoming LAGraph* specification for a library of graph algorithms. Intel was also selected by DARPA to develop a new processor to handle large graph datasets (see “DARPA Taps Intel for Graph Analytics Chip Project”). We will continue to innovate and push the graph analytics envelope.

Benchmarking Graph Analytics Performance

Graph analytics is a large and varied landscape. Even the simple examples in Figure 1 show differing characteristics. For example, some networks are highly connected; others are sparser. A network of webpages exhibits different connectivity than a network of Twitter users, where some users have millions of followers while most have only a few. Consequently, no single combination of graph algorithm, graph topology, or graph size can adequately represent the entire landscape.

Therefore, we use the GAP Benchmark Suite* from the University of California, Berkeley, to measure graph analytics performance. GAP specifies six widely-used algorithms (Table 1) and five small to medium-sized graphs (Table 2). Each graph has different characteristics, which is important because optimizations that work well for one graph topology may not work well for others. For example, the Road graph is relatively small, but its high diameter can cause problems for some algorithms. Consequently, the 30 GAP data points provide good coverage of the graph analytics landscape.

Table 1. GAP measures the performance of six common graph analytics algorithms.

Table 2. GAP uses five graphs of varying size and topology to give a more complete picture of graph analytics performance.

GAP also has the advantages of being a clearly-defined, objective, off-the-shelf benchmark. It does not require special hardware or software configurations, so it is easy to run.

Here are the steps that I took to run the benchmark:

- Download the GAP package.

- Run ‘make’ in the gapbs-master subdirectory to build the reference implementations for the six graph analytics kernels. For now, I’m just using the default GAP build parameters (the GNU C++ compiler with the ‑std=c++11 ‑O3 ‑Wall ‑fopenmp options). My system had the GNU* v7.4.0 compiler installed.

- In the same directory, run ‘make ‑f benchmark/bench.mk bench‑graphs’ to download or generate the five benchmark graphs and convert them to more efficient input formats.

- The GAP reference implementations are parallelized with OpenMP*, so it is important to set the number of threads. Otherwise, GAP will use all available cores, even if this doesn’t give the best parallel efficiency. The ‘export OMP_NUM_THREADS=32’ command sets the number of OpenMP threads to 32, for example.

- Finally, run ‘make ‑f benchmark/bench.mk bench‑run’ to launch the benchmarks and generate results (Table 3).

Table 3. Compute times (in seconds; lower is better) for GAP running on a two-socket Intel® Xeon® processor-based system.1 GAP was run with 1, 8, 16, 24, 32, 48, 64, and 96 threads. Best performance is shown for each test.

I tried to generate NVIDIA V100* comparative data, but ran into several technical barriers:

- Only one of the GAP graphs (Road) fits in the memory of a single V100.

- Only one of the GAP algorithms (PR) in RAPIDS cuGraph* can use the aggregate memory of multiple V100s.

- The current version of cuGraph does not provide a BC implementation.

- The cuGraph APIs and documentation do not expose certain implementation details or algorithm parameters. For example, the multi-V100 PR implementation in cuGraph does not provide a convergence tolerance parameter, which makes an apples-to-apples comparison to the GAP results difficult.

Consequently, it’s only possible to run nine of the 30 GAP tests (Table 4). As you can see, where GAP can run on both architectures, the Intel® Xeon® processors outperformed the V100 on most tests, even when the graph is small enough to fit in GPU memory (i.e., Road) or when the algorithm can use multiple GPUs (i.e., PageRank). Also, TCO clearly favors Intel Xeon processors for graph analytics (Table 5).

Table 4. Compute times (in seconds; lower is better) for cuGraph running on an AWS EC2* p3.16xlarge instance (eight V100* GPUs connected via NVLink*).2 All Road tests used a single V100. Twitter and Web tests used eight V100s. Note that the multi-V100 PR in cuGraph does not provide a convergence tolerance parameter, so the default parameters were used for these tests. Kron* and Urand* tests failed with a “thrust::system::system_error.” DNR = Does not run because of insufficient memory. NA = Not available in cuGraph v0.9.0.

These results highlight the advantages of a benchmark suite that tests multiple algorithms on different graph topologies. For example, SSSP implementations typically allow users to specify a delta stepping parameter to improve performance for high-diameter graphs. The cuGraph implementation provides no such option, so its internal defaults may be suboptimal for a high-diameter graph like Road. The same could also be true for the cuGraph CC implementation.

Table 5. Relative TCO of the Intel Xeon processor and V100 benchmark systems. Note that the AWS price comparison is only an approximation because no EC2 instances exactly match the Intel Xeon processor-based benchmarking system, but the m4.16xlarge instance is similar.

Conclusions

I can probably improve the GAP results on the Intel Xeon processor platform (Table 3) with a few simple changes like using the Intel compiler and aggressive optimization and vectorization, tweaking the OpenMP OMP_PROC_BIND and OMP_PLACES environment variables, experimenting with the numactl utility, adjusting page sizes, etc., but that’s not the purpose of this blog.5 My goal is to show how easy it is to get comprehensive, objective, and reproducible graph analytics performance data without obfuscation or resorting to benchmarking tricks.

[1] Processor: Intel® Xeon® Gold 6252 (2.1 GHz, 24 cores), HyperThreading enabled (48 virtual cores per socket); Memory: 384 GB Micron* DDR4-2666; Operating system: Ubuntu Linux* release 4.15.0-29, kernel 31.x86_64; Software: GAP Benchmark Suite* (downloaded and run September 2019).

[2] Processor: NVIDIA Tesla V100*; Memory: 16 GB; Operating system: Ubuntu Linux release 4.15.0-1047-aws, kernel 49.x86_64; Software: RAPIDS* v0.9.0 (downloaded and run September 2019).

[3] Source: https://www.microway.com/hpc-tech-tips/nvidia-tesla-v100-price-analysis/ (accessed September 2019) and https://ark.intel.com/content/www/us/en/ark/products/192447/intel-xeon-gold-6252-processor-35-75m-cache-2-10-ghz.html (accessed September 2019)

[4] Source: https://aws.amazon.com/ec2/pricing/on-demand/ (accessed September 2019)

[5] See Boosting the Performance of Graph Analytics Workloads if you’re interested in tuning GAP.

Notices and Disclaimers

Performance results are based on testing as of September 2019 by Intel Corporation and may not reflect all publicly available security updates. See configuration disclosure for details. No product or component can be absolutely secure. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks.

Cost reduction scenarios described are intended as examples of how a given Intel-based product, in the specified circumstances and configurations, may affect future costs and provide cost savings. Circumstances will vary. Intel does not guarantee any costs or cost reduction.

Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries.

*Other names and brands may be claimed as the property of others.