This article examines a published comparison of PageRank performance between RAPIDS cuGraph and HiBench. It then reports my own performance tests on a graph with 50M vertices and 2B edges, generated by my colleagues on the HiBench team.

With all the attention graph analytics is getting lately, it’s increasingly important to measure its performance in a comprehensive, objective, and reproducible way. I covered this in another blog, in which I recommended using an off-the-shelf benchmark like the GAP Benchmark Suite* from the University of California, Berkeley. There are other graph benchmarks, of course, like LDBC Graphalytics*, but they can’t beat GAP for ease of use. There’s significant overlap between GAP and Graphalytics, but the latter is an industrial-strength benchmark that requires a special software configuration.

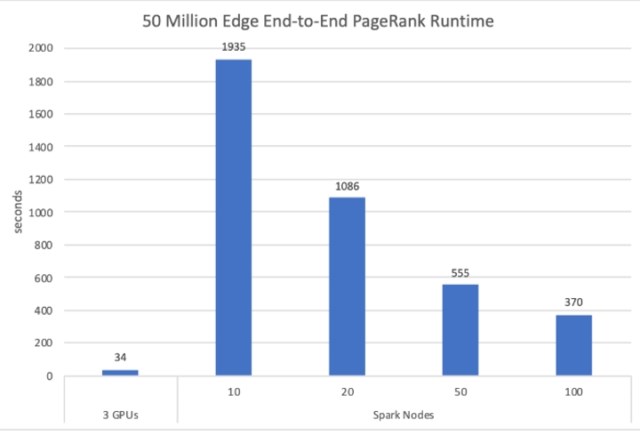

Personally, I find benchmarking boring. But it’s unavoidable when I need performance data to make a decision. Obviously, the data has to be accurate. But it also has to be relevant to the decision at hand. It’s important that my use of a particular benchmark is aligned with its intended purpose. That’s why the performance comparison shown in Figure 1 had me scratching my head. It compares the PageRank performance of RAPIDS cuGraph* and HiBench*. The latter is a big data benchmark developed by some of my Intel colleagues to measure a wide range of analytics functions—not just PageRank. Both tests in Figure 1 use the same synthetic graph with only 50M vertices and 2B edges. The HiBench measurements used Apache Spark*, but in my world, a Spark cluster is for big data (i.e., terabytes). A 2B-edge graph (about 8GB in compressed-sparse format) hardly qualifies as big data if I can load it into the memory of my laptop. Why use a Spark cluster when the dataset easily fits in the memory of a single system?
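The "about 8GB" figure is easy to sanity-check with a back-of-the-envelope estimate. The sketch below assumes an unweighted graph stored in compressed sparse row (CSR) form with 32-bit vertex IDs and 64-bit row offsets. Those widths are my assumptions for illustration, not something reported for either test, and the helper name `csr_bytes` is hypothetical.

```python
def csr_bytes(num_vertices, num_edges, index_bytes=4, offset_bytes=8):
    """Estimate the size of an unweighted CSR graph in bytes.

    Assumes one neighbor ID per edge plus one row offset per vertex
    (and one trailing offset). The byte widths are illustrative defaults.
    """
    neighbor_array = num_edges * index_bytes
    offset_array = (num_vertices + 1) * offset_bytes
    return neighbor_array + offset_array


total = csr_bytes(50_000_000, 2_000_000_000)
print(f"{total / 1e9:.1f} GB")  # prints "8.4 GB"
```

Under these assumptions the neighbor array dominates (8GB for 2B edges), and the per-vertex offsets add only about 0.4GB.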

I asked my colleagues on the HiBench team to generate a graph with 50M vertices and 2B edges so that I could run my own performance tests. Using the PageRank reference implementation in the GAP benchmark package, the end-to-end computation on this graph took only 12 seconds with 48 threads running on a single, two-socket Intel® Xeon® processor-based system [1]. This is much better than the performance reported in Figure 1, and I didn’t need three expensive NVIDIA V100* GPUs or a Spark cluster to get it.

It’s hard to say if this is an apples-to-apples comparison with the cuGraph performance in Figure 1. I used the default GAP convergence tolerance and capped iterations at three because that’s the HiBench default. However, there’s no way of knowing if the same parameters were used for the cuGraph test because benchmarking parameters weren’t reported.

Transparency is crucial for generating believable and reproducible benchmarks. In PageRank, for example, the convergence tolerance and the maximum number of iterations significantly affect run-time, so these parameters should be clearly stated. This is one of the biggest advantages of using an off-the-shelf benchmark like GAP to measure graph analytics performance. If someone reports results for the PageRank test in GAP, I know by definition that the damping factor is 0.85, the tolerance is 0.0001, and the maximum number of iterations is 1,000. I would expect any deviation from the default parameters to be duly noted.
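To see why these parameters matter, here is a minimal power-iteration PageRank in plain Python. It is a sketch for illustration only, not the GAP, cuGraph, or HiBench implementation. The function name, the tiny four-vertex graph, and the L1-norm stopping test are my assumptions.

```python
def pagerank(out_edges, damping=0.85, tolerance=1e-4, max_iters=1000):
    """Power-iteration PageRank on a dict {vertex: [out-neighbors]}.

    Returns (scores, iterations_run). Stops when the L1 change between
    successive score vectors drops below `tolerance`, or after `max_iters`.
    Every neighbor must also appear as a key in `out_edges`.
    """
    vertices = list(out_edges)
    n = len(vertices)
    scores = {v: 1.0 / n for v in vertices}
    base = (1.0 - damping) / n
    iterations = 0
    for _ in range(max_iters):
        iterations += 1
        # Rank held by vertices without out-edges is spread evenly.
        dangling = sum(scores[v] for v in vertices if not out_edges[v])
        new_scores = {v: base + damping * dangling / n for v in vertices}
        for v in vertices:
            if out_edges[v]:
                share = damping * scores[v] / len(out_edges[v])
                for w in out_edges[v]:
                    new_scores[w] += share
        error = sum(abs(new_scores[v] - scores[v]) for v in vertices)
        scores = new_scores
        if error < tolerance:
            break
    return scores, iterations


# Hypothetical toy graph, used only to show the effect of the parameters.
toy_graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}

_, iters_default = pagerank(toy_graph)             # runs until converged
_, iters_capped = pagerank(toy_graph, max_iters=3)  # stops after 3 passes
print(iters_default, iters_capped)
```

Even on this toy graph, the capped run stops well before the tolerance-driven run does. Two timings of "PageRank" are therefore only comparable if both parameters are reported.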

The HiBench team was also intrigued by Figure 1 because the original caption for this figure stated, “cuGraph PageRank vs Spark GraphX for [2B] edges across varying n1-standard-8 [Google Cloud Platform] nodes (lower is better).” However, the PageRank in HiBench is implemented using MLlib, not GraphX. So, as an experiment, the HiBench team implemented PageRank using GraphX to compare its performance to the original MLlib implementation.

Unfortunately, it’s hard to know what processors were used in the n1-standard-8 nodes, but we do know that each node has 8 vCPUs (a vCPU is a single hardware Hyper-Thread) and 30GB of memory. (That means the 100-node test in Figure 1 used a whopping 3TB of memory for a graph that only requires a few GB!) This, plus numerous other omissions, makes an exact comparison impossible. But the HiBench team forged ahead with an approximate comparison on one of their available Spark clusters (one master plus four workers with a total of 440 vCPUs) [2]. For the same size graph (50M vertices, 2B edges), the MLlib PageRank took 461 seconds while the GraphX PageRank took only 157 seconds. The closest comparison is to the 50-node (400 vCPUs) Spark cluster in Figure 1. While I don’t condone using Spark for such a small problem, the results from the HiBench team are significantly better than those reported in Figure 1.
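The cluster arithmetic above is simple enough to check in a few lines. This sketch uses only the figures quoted in this article (8 vCPUs and 30GB per n1-standard-8 node, and the 461-second and 157-second timings). The helper name `cluster_totals` is hypothetical.

```python
# Per-node figures for n1-standard-8, as quoted in the text.
VCPUS_PER_NODE = 8
MEMORY_GB_PER_NODE = 30


def cluster_totals(nodes):
    """Return (total vCPUs, total memory in GB) for a cluster of `nodes`."""
    return nodes * VCPUS_PER_NODE, nodes * MEMORY_GB_PER_NODE


print(cluster_totals(100))  # (800, 3000): 3000GB is the 3TB mentioned above
print(cluster_totals(50))   # (400, 1500): closest match to the 440-vCPU cluster

# Timings reported by the HiBench team on their own cluster.
mllib_seconds, graphx_seconds = 461, 157
print(round(mllib_seconds / graphx_seconds, 1))  # 2.9
```

So on the same cluster, the GraphX version ran roughly 2.9 times faster than the MLlib version.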

We’ve now come full circle, which leads back to the opening statement of this blog. It’s important to measure performance in a comprehensive, objective, and reproducible way. That’s why we recommend using an off-the-shelf graph analytics benchmark like the GAP Benchmark Suite. As mentioned in my previous blog on this subject, the graph analytics landscape is large and varied. No benchmark can cover every aspect of a computational domain, not even GAP. For example, GAP doesn’t cover community detection as well as I’d like. (One could argue that the connected components benchmark in GAP is close enough because its computational patterns overlap with many community detection algorithms.) If this is important for my decision-making requirements, I can either switch to the Graphalytics benchmark, which does cover community detection, or I can propose an update to GAP. Either option is better than creating an entirely new benchmark that has no community support or historical data.

[1] Processor: Intel® Xeon® Gold 6252 (2.1 GHz, 24 cores), HyperThreading enabled (48 virtual cores); Memory: 384 GB Micron DDR4-2666; Operating system: Ubuntu Linux release 4.15.0-29, kernel 31.x86_64; Software: GAP Benchmark Suite (downloaded and run September 2019).

[2] Processor: Intel® Xeon® Platinum 8180 (2.5 GHz, 28 cores), HyperThreading enabled (56 virtual cores); Memory: 384 GB Micron DDR4-2666; Network: Intel® 82599ES 10-Gigabit SFI/SFP+; Operating system: CentOS Linux release 7.6.1810, kernel v3.10.0-862.el7.x86_64; Software: Oracle Java JDK (build 1.8.0_65), Hadoop 2.7.0, Spark 2.4.3, and HiBench 7.0.

Notices and Disclaimers

Performance results are based on testing as of September 2019 by Intel Corporation and may not reflect all publicly available security updates. See configuration disclosure for details. No product or component can be absolutely secure. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks.
