This article discusses the AWS CLI, the SAM CLI, and a few miscellaneous subjects. It is not a comprehensive post, but it should have some reference value for serverless development.

Background

You can certainly use the web console to work with AWS services, but it is often more convenient to use the AWS CLI, the SAM CLI, the CDK, and CloudFormation to manage AWS services and deploy your serverless applications. If you are a developer, the SAM CLI is especially helpful because it lets you run and debug your API Gateway endpoints and Lambda functions locally.

The Environment

To follow this note, you need an AWS account. A free tier account is not difficult to get; just make sure to stay within the free tier limits so you do not get charged. Besides the AWS account, we need to install the following software packages. Some of the packages are optional, but you will find them extremely helpful when you need them.

- AWS Account

- Node/NPM

- DOTNET CORE

- Visual Studio Code (VSC)

- GIT

- Docker

- AWS CLI V2

- SAM CLI

- AWS CDK

- NGROK

- https://ngrok.com/

- You can simply download it and unzip it. You can then add the executable file to the PATH environment variable.

- When you develop a web application that is hosted on your local computer, NGROK can create a public web address for it. People can then access your local web site from the internet.

- DBeaver

- https://dbeaver.io/

- DBeaver is optional, but if you work with databases, it is a great help because it is a universal database tool;

- DBeaver relies on Java, but since version 7.3.1, all distributions bundle OpenJDK 11;

- You can follow the instructions to install it - https://dbeaver.io/download/.

The SAM CLI is installed with Homebrew. If you are not familiar with Homebrew, the following commands may be of some help.

brew --version

brew update

brew doctor

brew tap

brew search ...

brew list

brew info <package-name>

brew install <package-name>

brew upgrade <package-name>

brew tap beeftornado/rmtree

brew rmtree <package-name>

brew remove <package-name>

sh -c "$(curl -fsSL https://raw.githubusercontent.com/Linuxbrew/install/master/install.sh)"

In case you want to uninstall Homebrew, you can run the following script.

ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/uninstall)"

If for some reason you are unable to install packages through Homebrew, you can try the following command.

sudo apt-get install build-essential patch ruby-dev zlib1g-dev liblzma-dev

The AWS Permissions & S3 Buckets

In order to use the AWS CLI and the SAM CLI, you need to provide credentials to prove that you are a user authorized to access AWS. There are two types of users in AWS:

- A root user - It is identified by the email address you used to register the AWS account. It has full access to the account, including the accounting and payment information.

- An IAM user - It is a regular user that you can grant different levels of permissions.

We will use an IAM user to interact with AWS through the AWS CLI and the SAM CLI. When you create an IAM user, AWS will ask you for the "AWS access type".

- AWS Management Console access - The user is allowed to access AWS through the web console.

- Programmatic access - The user is allowed to access AWS through the AWS CLI & SAM CLI.

With programmatic access enabled, you can create an aws_access_key_id and aws_secret_access_key pair. You will use the key pair to access the AWS services. For simplicity, I just created a user with AdministratorAccess policy for the exercises. My experimental user has all the access to my AWS account except the payment information.

[default]

aws_access_key_id = ..PUT THE KEY ID HERE..

aws_secret_access_key = ..PUT THE SECRET KEY HERE..

[profile-1]

aws_access_key_id = ..PUT THE KEY ID HERE..

aws_secret_access_key = ..PUT THE SECRET KEY HERE..

The AWS CLI and the SAM CLI read the credentials from the ~/.aws/credentials file. You can add multiple profiles to the credentials file. When you issue a command, you can use the --profile option to choose which profile to use. If no --profile is provided, the default profile is used.
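The profile lookup described above can be illustrated with a minimal sketch. It only mimics the selection rule with Python's standard configparser; it is not how the AWS CLI itself is implemented, and the function name select_profile and the sample values are made up for the illustration.

```python
import configparser

# Sample text in the same layout as ~/.aws/credentials (placeholder values only).
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id = DEFAULT_KEY_ID
aws_secret_access_key = DEFAULT_SECRET

[profile-1]
aws_access_key_id = PROFILE_1_KEY_ID
aws_secret_access_key = PROFILE_1_SECRET
"""


def select_profile(credentials_text, profile=None):
    """Return the key pair of `profile`, or of [default] when no profile is given."""
    # interpolation=None keeps any '%' characters in values untouched
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(credentials_text)
    name = profile or "default"
    if not parser.has_section(name):
        raise KeyError(f"profile not found: {name}")
    return dict(parser[name])


print(select_profile(SAMPLE_CREDENTIALS)["aws_access_key_id"])
print(select_profile(SAMPLE_CREDENTIALS, "profile-1")["aws_access_key_id"])
```

Calling select_profile without a profile name corresponds to running a command without --profile.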

The "config" & "credentials" Files

You may notice that there are two files in the .aws folder. According to Stack Overflow and the documentation, the two files serve similar and related purposes.

- The two files are distinct in order to enable the separation of credentials from less sensitive configuration information.

- The credentials file is intended for storing just credential information for the configured profiles (currently limited to: aws_access_key_id, aws_secret_access_key and aws_session_token).

- The config file is intended for storing non-sensitive configuration options for the configured profiles.

- The config file can also be configured to contain any information which could also be stored in the credentials file.

- In the case of conflicting credential information being specified for a profile in the config and credentials files, those in the credentials file will take precedence.
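The precedence rule in the last bullet can be sketched as a simple merge in which values from the credentials file overwrite values from the config file. This is an illustration under assumptions, not the AWS CLI's own code: resolve_profile is a hypothetical helper, and the sketch relies on the config-file convention that named profiles are written as [profile name] while the default profile is written as [default].

```python
import configparser


def _parse(text):
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return parser


def resolve_profile(config_text, credentials_text, profile="default"):
    """Merge one profile from both files; the credentials file wins on conflicts."""
    config = _parse(config_text)
    credentials = _parse(credentials_text)
    # In the config file, named profiles carry a "profile " prefix; [default] does not.
    config_section = profile if profile == "default" else f"profile {profile}"
    merged = {}
    if config.has_section(config_section):
        merged.update(dict(config[config_section]))
    if credentials.has_section(profile):
        # Applied last, so conflicting keys take the credentials-file value.
        merged.update(dict(credentials[profile]))
    return merged


config_text = "[default]\nregion = us-east-1\naws_access_key_id = FROM_CONFIG\n"
credentials_text = "[default]\naws_access_key_id = FROM_CREDENTIALS\n"
print(resolve_profile(config_text, credentials_text))
```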

The Simple AWS S3 Commands

With the credentials ready, we can try some AWS commands.

aws s3api create-bucket --bucket example.huge.head.li --region us-east-1

The above command creates an S3 bucket named example.huge.head.li. S3 bucket names are globally unique, so if you want to try it yourself, you need to pick a name that nobody else has taken. If you want to block public access to this bucket, you can issue the following command:

aws s3api put-public-access-block \
  --bucket example.huge.head.li \
  --public-access-block-configuration \
  "BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true"
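The configuration value must reach the CLI as a single argument without line breaks. If you script this step, one way to guarantee that is to build the argument list in Python. The sketch below only constructs and prints the command; it does not run it, and the helper name public_access_block_command is made up for the illustration.

```python
import shlex


def public_access_block_command(bucket):
    """Build the argument list for `aws s3api put-public-access-block`."""
    settings = {
        "BlockPublicAcls": True,
        "IgnorePublicAcls": True,
        "BlockPublicPolicy": True,
        "RestrictPublicBuckets": True,
    }
    # Shorthand syntax: comma-separated Key=value pairs in one argument
    shorthand = ",".join(f"{key}={str(value).lower()}" for key, value in settings.items())
    return [
        "aws", "s3api", "put-public-access-block",
        "--bucket", bucket,
        "--public-access-block-configuration", shorthand,
    ]


command = public_access_block_command("example.huge.head.li")
print(shlex.join(command))
```

The resulting list could be passed to subprocess.run if you want to execute it from a script.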

Now you can upload some files to your S3 bucket.

aws s3 cp testfile s3:

You can delete all the files and the folders in the S3 bucket with the following command:

aws s3 rm s3:

If you want, you can delete your S3 bucket.

aws s3 rb s3:

If everything went well, you should be able to run the AWS commands successfully. You can use the AWS console to see the S3 bucket created and the files sent to it.

The Serverless Application Model & SAM CLI

Among the many AWS tools and terms (AWS CLI, AWS SDK, CDK, CloudFormation), SAM is the one designed to help with developing serverless applications. If you are a developer, it can be a great help because it allows you to run your lambdas and APIs on your local computer. In this note, I will keep an example of how to use SAM.

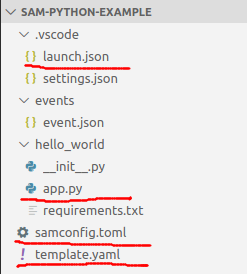

The attached example is a simple serverless application in Python. You can create a serverless application with the SAM CLI using the sam init command.

sam init

You can simply follow the prompts to create the serverless application. In the project created by the SAM CLI, the template.yaml file is the CloudFormation template for the project.

AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Description: >
  sam-python-example

  Sample SAM Template for sam-python-example

Globals:
  Function:
    Timeout: 3

Resources:
  HelloWorldFunction:
    Type: AWS::Serverless::Function
    Properties:
      CodeUri: hello_world/
      FunctionName: HelloWorldFunction
      Handler: app.lambda_handler
      Runtime: python3.8
      Events:
        HelloWorld:
          Type: Api
          Properties:
            Path: /hello
            Method: get

In the template.yaml, we can find the following:

- It defines a lambda that is implemented in the hello_world folder. The entry point of the lambda is the lambda_handler function in the app.py file.

- It defines an API Gateway that exposes a GET end-point with the path /hello.
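The Handler value follows a "file name, dot, function name" convention. As a rough illustration of that convention only (not of how the Lambda runtime is actually implemented), the sketch below splits such a string and looks the function up with importlib. The helper name resolve_handler is hypothetical, and the demonstration uses a standard-library function so that it runs anywhere.

```python
import importlib
import json


def resolve_handler(handler):
    """Split 'module.function' and return the function object it names."""
    module_name, function_name = handler.rsplit(".", 1)
    module = importlib.import_module(module_name)
    return getattr(module, function_name)


# Demonstration with a standard-library target; inside the hello_world folder,
# resolve_handler("app.lambda_handler") would return the lambda's entry point.
dumps = resolve_handler("json.dumps")
print(dumps({"resolved": True}))
```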

To keep the example simple, I made the lambda respond with a simple JSON object.

import json

def lambda_handler(event, context):
    return {
        'statusCode': 200,
        'body': json.dumps({
            'event-path': event.get('httpMethod', 'N/A'),
            'message': 'hello world'
        }),
    }

Invoke Lambda & Start API

One of the best features of SAM is that it lets you simulate the AWS environment on your local computer through a Docker container. To run the lambda locally, you can issue the following command:

sam local invoke HelloWorldFunction -e ./events/event.json

- If the template.yaml declares only one lambda, you do not need to specify the name of the lambda in the command.

- The -e option provides a file. The file contains a JSON string to provide the input parameter event to the function. If skipped, the event parameter points to an empty object.
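If you do not have an event file yet, a small one can be generated with a few lines of Python. This is a minimal sketch under assumptions: the two fields below are a made-up minimum that is enough for this example's handler, whereas a real API Gateway event carries many more fields, and write_event_file is a hypothetical helper name.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical minimal event; only httpMethod is read by the example handler.
minimal_event = {"httpMethod": "GET", "path": "/hello"}


def write_event_file(directory, event):
    """Write `event` as JSON to <directory>/event.json and return the path."""
    path = Path(directory) / "event.json"
    path.write_text(json.dumps(event, indent=2))
    return path


# Demonstration in a temporary folder; in the project you would pass "events".
with tempfile.TemporaryDirectory() as folder:
    event_path = write_event_file(folder, minimal_event)
    print(event_path.read_text())
```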

You can also host the API locally on your computer with the following command:

sam local start-api

The default port number for the running API is 3000. You can then access the API in your browser by the URL "http://127.0.0.1:3000/hello" and you should be able to receive the following response:

{"event-path": "GET", "message": "hello world"}

The Python version used to run the lambda when you invoke it locally is the Python that comes with the Docker image. It is not the Python installed on your computer.
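For a quick check that does not involve Docker at all, the handler can also be called as a plain Python function. Keep in mind that this runs on your locally installed Python rather than the one in the Docker image, so it is only a rough sanity check. The block repeats the handler from this note so that it is self-contained; the event dictionary is a made-up minimum.

```python
import json


def lambda_handler(event, context):
    # Same logic as the handler in app.py
    return {
        "statusCode": 200,
        "body": json.dumps({
            "event-path": event.get("httpMethod", "N/A"),
            "message": "hello world",
        }),
    }


# The context argument is unused by this handler, so None is enough here.
response = lambda_handler({"httpMethod": "GET"}, None)
body = json.loads(response["body"])
print(response["statusCode"], body)
```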

Local Debug & Break Points

With SAM, you can set break points in your source code and debug your code locally in Visual Studio Code. To debug a Python lambda, we need to add three things to the project. First, we need to add the ptvsd package and use it in the app.py file.

import ptvsd

ptvsd.enable_attach(address=('0.0.0.0', 5000), redirect_output=True)

ptvsd.wait_for_attach()

Then, we need to install ptvsd in the hello_world directory.

cd hello_world/

python3 -m pip install ptvsd==4.3.2 -t .

Finally, we need to add the debug configuration to the launch.json file in Visual Studio Code.

{
    "version": "0.2.0",
    "configurations": [
        {
            "name": "SAM CLI Python Hello World",
            "type": "python",
            "request": "attach",
            "port": 5000,
            "host": "localhost",
            "pathMappings": [
                {
                    "localRoot": "${workspaceFolder}/hello_world",
                    "remoteRoot": "/var/task"
                }
            ]
        }
    ]
}

We can then invoke the lambda with the -d option.

sam local invoke -d 5000

With the -d option, the invocation of the lambda will wait for a debugger to attach. We can then set a break point and start the debugging.

We can also debug the lambda when it is called through the API with the following command:

sam local start-api -d 5000
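Because ptvsd.wait_for_attach() blocks until a debugger connects, you may not want those lines to run on every invocation. One possible refinement, sketched here under assumptions, is to guard them with an environment variable. DEBUG_ATTACH is a hypothetical variable name chosen for this illustration (it is not something SAM defines), and how it gets set for the container is left out; the ptvsd calls are the same ones used in app.py.

```python
import os


def maybe_wait_for_debugger(port=5000):
    """Attach ptvsd only when DEBUG_ATTACH=1 (hypothetical variable name)."""
    if os.environ.get("DEBUG_ATTACH") != "1":
        return False
    # Imported lazily so that normal runs do not need the ptvsd package
    import ptvsd
    ptvsd.enable_attach(address=("0.0.0.0", port), redirect_output=True)
    ptvsd.wait_for_attach()  # blocks until the debugger connects
    return True


# Without the variable set, the call returns immediately.
os.environ.pop("DEBUG_ATTACH", None)
print(maybe_wait_for_debugger())
```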

If you create your lambda with Node.js, debugging is much simpler. All you need to do is create the debug configuration in the launch.json file.

{
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Node lambda Attach",
            "type": "node",
            "request": "attach",
            "address": "localhost",
            "port": 5000,
            "localRoot": "${workspaceRoot}/...folder of the lambda implementation...",
            "remoteRoot": "/var/task",
            "protocol": "inspector",
            "stopOnEntry": false
        }
    ]
}

SAM Deploy

You can use the SAM CLI to deploy your serverless application to AWS. You can simply issue the following command to initiate the deployment:

sam deploy --guided

You will need to follow the prompts and provide additional information to deploy the application. When the deployment succeeds, SAM generates a samconfig.toml file.

version = 0.1

[default]

[default.deploy]

[default.deploy.parameters]

stack_name = "sam-python-example"

s3_bucket = "the.s3.bucket.name.used.for.deployment"

s3_prefix = "sam-python-example"

region = "us-east-1"

capabilities = "CAPABILITY_IAM"

The file has all the information needed for the deployment. If you make any changes to the lambda, you can simply issue sam deploy without any additional information.

sam deploy
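The parameter names in samconfig.toml correspond to the long-form options of sam deploy, with underscores in place of hyphens. To make that relationship concrete, the sketch below turns the parameters shown above into the equivalent explicit command line. It only builds and prints the command; deploy_command is a hypothetical helper name, and the values are copied from the sample file.

```python
def deploy_command(parameters):
    """Translate samconfig-style parameters into explicit `sam deploy` options."""
    command = ["sam", "deploy"]
    for key, value in parameters.items():
        # stack_name -> --stack-name, s3_bucket -> --s3-bucket, and so on
        command += ["--" + key.replace("_", "-"), str(value)]
    return command


parameters = {
    "stack_name": "sam-python-example",
    "s3_bucket": "the.s3.bucket.name.used.for.deployment",
    "s3_prefix": "sam-python-example",
    "region": "us-east-1",
    "capabilities": "CAPABILITY_IAM",
}
print(" ".join(deploy_command(parameters)))
```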

AWS CDK & SAM Local

When it comes to CloudFormation, AWS CDK is a much more powerful tool than the SAM CLI. If you want to develop your applications with AWS CDK, you can still take advantage of the local invocation and debugging capabilities provided by the SAM CLI. With properly created CDK resources, you can issue the following command to create the template.yaml file:

cdk synth --no-staging > template.yaml

You can then use the SAM CLI commands to invoke and debug the lambdas.

Points of Interest

- This is a note on AWS & SAM CLI & miscellaneous subjects.

- AWS is a large topic and this note is not well organized for any particular subject, but it should have some reference value for serverless development.

- I hope you like my postings and I hope this note can help you in one way or the other.

History

- 30th March, 2020: First revision