This article outlines how to copy the tables from one Azure Database to another Azure Database using AZURE DATA FACTORY. Along with this, there is an example of how to work with FOR-Each and customized Stored Procedure to retrieve only the data that is required in the destination table. You can apply the same pattern in other copy scenario as well, for example, copying data from SQL to BLOB, SQL to CSV, SQL Server / Oracle to Azure SQL.

High Level

At a high level, we are following the below steps:

- Creating a source and destination database with tables to be copied

- Mechanism to create a pipeline using wizard to copy the data between the tables

- Creating source dataset and destination dataset

- Mapping data flow and table schemas

- Creating activity to copy the table data

- Manual trigger action

- Schedule trigger actions

Prerequisites

- Azure SQL Database Accounts with Tables of same schema on both source and destination

- Azure Account / Subscriptions

Let's Start !!!!!

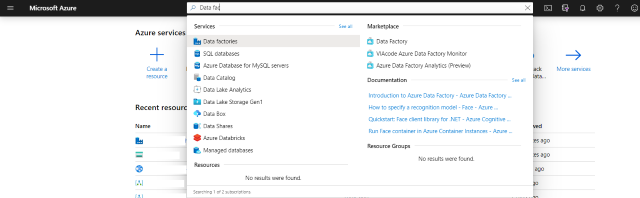

Click on Resource --> Search for Data Factories as shown in the below screen:

Select Data Factories from the Menu and then click on Create Data Factory from the below screen:

Fill the mandatory fields and click Create:

After creating data factory, the below screen would be presented. Then, click on Author and Monitor.

On click of the Author & Monitor --> the below screen would be shown in a new Tab to select the appropriate actions. Select on COPY DATA.

On click of the Copy Data --> From below Wizard, fill in all the Mandatory details and click on NEXT button.

Click on Next button --> Select the SOURCE Data Store from where the data needs to be picked.

Step 1: Click on Create New Connection

Step 2: Since we are working on Azure SQL table, we are going to select as below:

Fill the mandatory fields on the next screen. Fill in the below details on the screen:

- Server Name: Azure SQL Server

- Database Name: SourceSQLDB

- Username and Password: SQL Authentication Credential used while setting up Azure SQL server

Click on Test Connection. Once it’s successful, click on Finish.

The following screen would be presented after click on the screen to select the Tables from the source table where the data needs to be transferred.

On further configuration, as a requirement if any of the filter data or custom query needs to be added on retrieval of the data from the source table.

Add Filter by Custom query.

Now add another Data Set for the Destination Database. On the below screen, select AZURE SQL Database and Click on Finish.

On the next screen, enter the Destination data source mandatory information.

On click of the CREATE button, both source and destination data stores are shown as in the below screen:

As a next step, for each table you have selected to copy in the source data store, select a corresponding table in the destination data store or specify the Stored procedure to run at the destination.

On click of Next --> Need to choose how source and destination column are mapped.

On final Next Button Click --> A complete Deployments confirmation messages are shown with Pipeline, dataset, Creating & starting triggers.

Click on the FINISH button to deploy the Data factory with all the configuration from the Wizard. Then the below screen would be shown when clicking on the Pipeline configuration.

Click on the FINISH button to deploy the Data factory with all the configuration from the Wizard. Then the below screen would be shown when clicking on the Pipeline configuration.

After publishing the changes, let’s select the Pipeline –> Trigger –> Trigger Now to copy the data from source view to the destination table.

So, now the data has been copied to the destination database successfully.

Now, adding another activity to the flow, i.e., if there is an operation to perform after successful data transformation from source to destination table. As part of this exercise, I would be adding a Stored Procedure Activity to delete all the data with a conditional clause where Date is > 180 days from current date.

Click on the Stored Procedure Activity and change the Name and other required fields. Then, click on the SQL Account tab to configure the SQL Data source.

Next, select Stored Procedure tab and select the desired Stored procedure from the dropdown selection as shown below.

Again, on changes, let’s validate the changes. If no errors found, then we can Publish the changes.

Schedule Triggers

We can also schedule the trigger to run after a certain interval of time. Below are the steps to do it.

Click on the Author icon on the left–> Triggers –> +New

Fill in the mandatory fields. We are scheduling the trigger to run once a day at 12 AM EST. Select the options as shown below and do not check Activated button if you do not want to trigger as it can be activated once the trigger is associated with any pipeline. Click on Finish.

After publishing the trigger, Click on Monitor icon on the left –> Trigger Runs tab. You should be able to see the status of each trigger that runs.

The End !!!!!!

So, in this series of the blog post, we learned how to copy data form one database to another using Azure Data Factory by manually running the pipeline and scheduling the pipeline by creating trigger.

History

- 3rd April, 2020: Initial version