In this article, we'll outline a Tesseract and Arm NN text-to-speech solution running on Raspberry Pi. We'll use mostly off-the-shelf components and models, but will focus on understanding the process of converting a model from TensorFlow to Arm NN, such as choosing a model that works well with Arm NN. We'll also present some best practices for creating Arm NN-powered solutions.

A text-to-speech (TTS) engine is the crucial element of systems looking to create a natural interaction between humans and machines based on embedded devices. An embedded device can, for example, help visually impaired people read signs, letters, and documents. More specifically, a device can use optical character recognition to let a user know what it sees in an image.

TTS applications have been available on desktop computers for years, and are common on most modern smartphones and mobile devices. You'll find these applications in the accessibility tools for your system, but they have broad applications for screen readers, custom alerts, and more.

Typically these systems start with some machine-readable text, though. What if you don't have an existing document, browser, or application source of text? Optical character recognition (OCR) software can translate scanned images into text, but in the context of a TTS application, these are glyphs — individual characters. OCR software on its own is only concerned with accurately returning numbers and letters.

For accurate real-time text detection — recognizing collections of glyphs as words that can be spoken — we can turn to deep learning AI techniques. In this case we could use a recurrent neural network (RNN) to recognize the words within OCR-captured text. And what if we could do this on an embedded device, something lighter and more pocketable than even a smartphone?

Such a lightweight, powerful TTS device could help visually impaired people, could be embedded in child-proof devices for literacy or storytime applications, and more.

In this article, I’ll show you how to do so with TensorFlow, OpenCV, Festival, and a Raspberry Pi. I will use the TensorFlow machine learning platform along with a pre-trained Keras-OCR model to perform OCR. OpenCV will be used to capture images from the web camera. Finally, the Festival speech synthesis system will serve as the TTS module. Everything will then be combined to build the Python app for Raspberry Pi.

Along the way, I will tell you how the typical OCR models work, and how you could further optimize the solution with TensorFlow Lite, a set of tools to run optimized TensorFlow models on constrained environments like embedded and IoT devices. The full source code presented here is available at my GitHub page: https://github.com/dawidborycki/TalkingIoT.

Getting Started

First, though, to create the device and the application for this tutorial, you’ll need a Raspberry Pi. Versions 2, 3, or 4 will work for this example. You can also use your development PC (we tested the code with Python 3.7).

You will need to install two packages: tensorflow (2.1.0) and keras_ocr (0.7.1). Here are some useful links:

OCR with Recurrent Neural Networks

Here, I am using the keras_ocr package to recognize text within images. This package is based on the TensorFlow and convolutional neural network that was originally published as an OCR example on the Keras website.

The network’s architecture can be divided into three significant steps. The first one takes the input image and then extracts features using several convolutional layers. These layers partition the input image horizontally. For each partition, these layers determine the set of image column features. The sequence of column features is used in the second step by the recurrent layers.

The recurrent neural networks (RNNs) are typically composed of long short-term memory (LTSM) layers. LTSM revolutionized many AI applications, including speech recognition, image captioning, and time-series analysis. OCR models use RNNs to create the so-called character-probability matrix. This matrix specifies the confidence that the given character is found in the specific partition of the input image.

Thus, the last step uses this matrix to decode the text from the image. Usually, people use the connectionist temporal classification (CTC) algorithm. The CTC aims at converting the matrix into the word or sequence of words that makes sense. Such conversion is not a trivial task as the same characters can be found in the neighboring image partitions. Also, some of the input partitions might not have any characters.

Though RNN-based OCR systems are efficient, you can find multiple issues when trying to adopt it in your projects. Ideally, you would want to perform the transform learning to adjust the model to your data. Then you convert the model to the TensorFlow Lite format so it is optimized for inference on the edge device. Such an approach was found successful in mobile computer vision applications. For example, many MobileNet pre-trained networks perform efficient image classification on the mobile and IoT devices.

However, TensorFlow Lite is a subset of TensorFlow, and thus not every operation is currently supported. This incompatibility becomes a problem when you want to perform OCR like the one included in the keras-ocr package on the IoT device. The list of possible solutions is provided on the official TensorFlow website.

In this article, I will show how to use the TensorFlow model since the bidirectional LSTM layers (employed in keras-ocr) are not yet supported by TensorFlow Lite.

Pre-trained OCR model

I started by writing a test script, ocr.py that shows how to use the neural network model from keras-ocr:

# Imports

import keras_ocr

import helpers

# Prepare OCR recognizer

recognizer = keras_ocr.recognition.Recognizer()

# Load images and their labels

dataset_folder = 'Dataset'

image_file_filter = '*.jpg'

images_with_labels = helpers.load_images_from_folder(

dataset_folder, image_file_filter)

# Perform OCR recognition on the input images

predicted_labels = []

for image_with_label in images_with_labels:

predicted_labels.append(recognizer.recognize(image_with_label[0]))

# Display results

rows = 4

cols = 2

font_size = 14

helpers.plot_results(images_with_labels, predicted_labels, rows, cols, font_size)

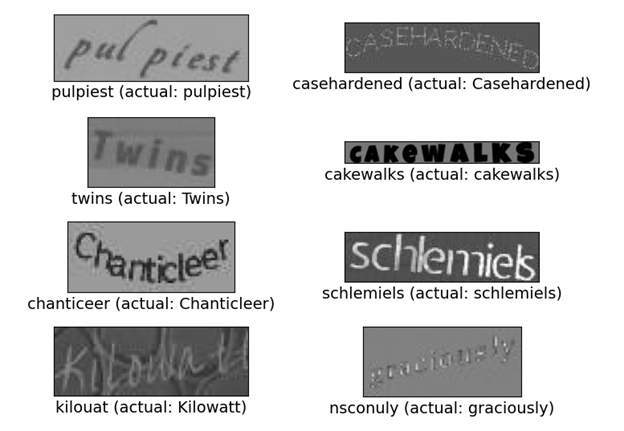

This script instantiates the Recognizer object from the keras_ocr.recognition module. Then the script loads images and their labels from the attached testing dataset (Dataset folder). This dataset contains eight randomly chosen images from the Synthetic Word Dataset (Synth90k). Subsequently, the script runs OCR recognition on every image in the dataset, and then displays prediction results.

To load images and their labels, I use the load_images_from_folder function that I implemented within the helpers module. This method expects two parameters: the path to the folder with the images, and the filter. Here, I assume that images are under the Dataset subfolder, and I read all images in the jpeg format (.jpg extension).

In the Synth90k dataset, each image file name contains its label between underscores. For example: 199_pulpiest_61190.jpg. Thus, to get the image label, the load_images_from_folder function splits the file name by the underscore and then takes the first element of the resulting collection of strings. Also, note that load_images_from_folder function returns an array of tuples. Each element of the array contains the image and the corresponding label. For this reason, I only pass the first element of this tuple to the OCR engine.

To perform recognition, I use the recognize method of the Recognizer object. This method returns the predicted label. I store the latter in the predicted_labels collection.

Finally, I pass the collection of predicted labels, the images, and the original labels to another helper function, plot_results, which displays images in the rectangular grid of the size rows x columns. You can change the grid appearance by modifying the corresponding variables.

Camera

After testing the OCR model, I implemented the camera class. The class uses OpenCV, which was installed along with the keras-ocr module. OpenCV provides a convenient programming interface to access the camera. Explicitly, you first initialize the VideoCapture object, then invoke its read method to get the image from the camera.

import cv2 as opencv

class camera(object):

def __init__(self):

# Initialize the camera capture

self.camera_capture = opencv.VideoCapture(0)

def capture_frame(self, ignore_first_frame):

# Get frame, ignore the first one if needed

if(ignore_first_frame):

self.camera_capture.read()

(capture_status, current_camera_frame) = self.camera_capture.read()

# Verify capture status

if(capture_status):

return current_camera_frame

else:

# Print error to the console

print('Capture error')

In this code I created the VideoCapture within the initializer of the camera class. I pass a value of 0 to VideoCapture to point to the default system’s camera. Then I store the resulting object within the camera_capture field of the camera class.

To get images from the camera, I implemented the capture_frame method. It has an additional parameter, ignore_first_frame. When this parameter is True, I invoke camera_capture.read twice, but I ignore the result of the first invocation. The rationale behind this operation is that the first frame returned by my camera is typically blank.

The second call to the read method gives you the capture status and the frame. If the acquisition was successful (capture_status is True), I return the camera frame. Otherwise, I print the "Capture error" string.

Text to Speech

The last element of the application is the TTS module. I decided to use Festival here because it can work offline. Other possible approaches for TTS are well described in the Adafruit article Speech Synthesis on the Raspberry Pi.

To install the Festival on your Raspberry Pi invoke the following command:

sudo apt-get install festival -y

You can test whether everything is working correctly by typing:

echo "Hello, Arm" | Festival –tts

Your Raspberry Pi should then speak "Hello, Arm."

Festival provides an API. However, to keep things simple, I decided to interface Festival through the command line. To that end I extended the helpers module with another method:

def say_text(text):

os.system('echo ' + text + ' | festival --tts')

Putting Things Together

Finally, we can put everything together. I did so within main.py:

import keras_ocr

import camera as cam

import helpers

if __name__ == "__main__":

# Prepare recognizer

recognizer = keras_ocr.recognition.Recognizer()

# Get image from the camera

camera = cam.camera()

# Ignore the first frame, which is typically blank on my machine

image = camera.capture_frame(True)

# Perform recognition

label = recognizer.recognize(image)

# Perform TTS (speak label)

helpers.say_text('The recognition result is: ' + label)

First, I create the OCR recognizer. Then, I create the Camera object and read the frame from the default webcam. The image is passed to the recognize method of recognizer, and the resulting label is spoken through the TTS helper.

Wrapping Up

In summary, we created a reliable system that can perform OCR with deep learning and then communicate results to the users through a text-to-speech engine. We used a pre-trained keras-OCR package.

In a more advanced scenario, text recognition can be preceded by text detection. You would first detect lines of text in the image, and then perform recognition on each of them. To do so, you would only need to employ text detection from keras-ocr as shown in this version of the Keras CRNN implementation and the published CRAFT text detection model by Fausto Morales.

By extending the above app with text detection, you could achieve the RNN-supported IoT system that performs OCR to help visually impaired people read menus in restaurants or documents in the government offices. Moreover, such an app, if supported by the translation service, could serve as the automatic translator.