
Materials modeling and design is a very important area of science and engineering. Synthetic materials are a part of modern life. Materials for our computers, cars, planes, roads, and even houses and food are the result of advances in materials science. High-performance computing (HPC) makes modern materials simulation and design possible. And Intel® Software Development Tools can help both developers and end users achieve maximum performance in their simulations.

VASP* is one of the top applications for modeling quantum materials from first principles. It’s an HPC application designed with computing performance in mind. It uses OpenMP* to take advantage of all cores within a system, and MPI* to distribute computations across large HPC clusters.

Let’s see how Intel Software Development Tools can help with performance profiling and tuning of a VASP workload.

Benchmarking VASP

To get initial information about VASP performance, we tested it on systems with Intel® Xeon® Scalable processors. (Note that VASP is copyright-protected software owned by the University of Vienna, Austria, represented by Professor Dr. Georg Kresse at the Faculty of Physics. You must have an appropriate license to use VASP.)

Our performance experiments use the VASP developer version that implements MPI/OpenMP hybrid parallelism (see Porting VASP from MPI to MPI+OpenMP [SIMD]) to simulate a vacancy in bulk silicon using HSE hybrid density functional theory.

We use this command to launch VASP:

$ mpiexec.hydra -genv OMP_NUM_THREADS ${NUM_OMP} -ppn ${PPN} -n ${NUM_MPI} ./vasp_std

In the command:

- ${NUM_OMP} is the number of OpenMP threads.

- ${PPN} is the number of MPI ranks on each node.

- ${NUM_MPI} is the total number of MPI ranks in the current simulation.

For benchmarking, we use the LOOP+ time from the VASP output, which measures the execution time of the main computation, excluding pre- and post-processing.

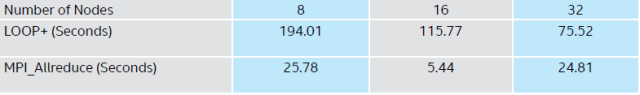

The initial results are for a dual-socket Intel® Xeon® Gold 6148 processor (2.4 GHz, 20 cores/socket, 40 cores/node), shown in Table 1.

Table 1. Initial LOOP+ and MPI_Allreduce times for a cluster with Intel Xeon Gold processors

Configuration: Hardware: Intel® Xeon® Gold 6148 processor @ 2.40GHz; 192 GB RAM. Interconnect: Intel® Omni-Path Host Fabric Interface (Intel® OP HFI) Adapter 100 Series [discrete]. Software: Red Hat Enterprise Linux* 7.3; IFS 10.2.0.0.158; Libfabric 1.3.0; Intel® MPI Library 2018 (I_MPI_FABRICS=shm:ofi); Intel® MPI Benchmarks 2018 (built with Intel® C++ Compiler XE 18.0.0 for Linux*). Benchmark Source: Intel Corporation.

We use this data as our performance baseline. This article follows the MPI_Allreduce code path in VASP; the same approach applies to the default code path, which is based on MPI_Reduce.

Now let’s take a look at how to use Intel Software Development Tools to optimize application performance.

Performance Profiling with Application Performance Snapshot

A typical HPC application is a complex system composed of different programming and execution models. To achieve optimal performance on a modern cluster, the developer should consider many aspects of the system such as:

- MPI and OpenMP parallelism

- Memory access

- FPU utilization

- I/O

Poor performance in any of these aspects will degrade overall application performance. The Application Performance Snapshot (APS) in Intel® VTune™ Amplifier is a great feature to quickly summarize performance in these areas. It’s also freely available as a standalone utility.

As described in Getting Started with APS, the recommended way to collect data about an MPI application is to launch it with this command:

$ <mpi launcher> <mpi parameters> aps <my app> <app parameters>

where:

- <mpi launcher> is an MPI job launcher such as mpirun, srun, or aprun.

- <mpi parameters> are the MPI launcher parameters. Note that aps must be the last <mpi launcher> parameter.

- <my app> is the location of your application.

- <app parameters> are your application parameters.

APS launches the application and runs the data collection. When the analysis completes, an aps_result_<date> directory is created.

Intel® MPI Library also has two very convenient options for integrating external tools into the startup process:

- aps

- gtool

Let’s take a look at both.

-aps Option

When you use this option, a new folder with statistics data is generated: aps_result_<date>-<time>. You can analyze the collected data with the aps utility. For example:

$ mpirun -aps -n 2 ./myApp

$ aps aps_result_20171231_235959

This option explicitly targets aps and suits our goals well.

-gtool Option

Use this option to launch tools such as Intel VTune Amplifier, Intel® Advisor, Valgrind*, and GNU* Debugger (GDB*) for the specified processes through the mpiexec.hydra and mpirun commands. An alternative to this option is the I_MPI_GTOOL environment variable.

The -gtool option is meant to simplify analysis of particular processes, relieving you of specifying the analysis tool’s command line in each argument set (separated by colons ‘:’). Even though -gtool is allowed within an argument set, don’t use it in several sets at once, and don’t mix the two analysis methods (-gtool and argument sets).

Syntax:

-gtool "<command line for tool 1>:<ranks set 1>[=launch mode 1][@arch 1];<command line for tool 2>:<ranks set 2>[=exclusive][@arch 2]; … ;<command line for a tool n>:<ranks set n>[=exclusive][@arch n]" <executable>

or:

$ mpirun -n <# of processes> \
-gtool "<command line for tool 1>:<ranks set 1>[=launch mode 1][@arch 1]" \
-gtool "<command line for a tool 2>:<ranks set 2>[=launch mode 2][@arch 2]" \
… \
-gtool "<command line for a tool n>:<ranks set n>[=launch mode n][@arch n]" \
<executable>

Table 2 shows the arguments.

Table 2. Arguments

Note that rank sets cannot overlap for the same @arch parameter. A missing @arch parameter is also considered a different architecture. Thus, the following syntax is considered valid:

-gtool "gdb:0-3=attach;gdb:0-3=attach@hsw;/usr/bin/gdb:0-3=attach@knl"

Also, note that some tools cannot work together, or their simultaneous use may lead to incorrect results. Table 3 lists the parameter values for [=launch mode].

Table 3. Parameter values for [=launch mode]

This is a very powerful and complex control option; the Intel MPI Library Developer Reference has a whole chapter dedicated to gtool options. We’ll focus on the ones we need. Besides the command-line options, gtool can be controlled with an environment variable (I_MPI_GTOOL), so we can leave the launch command untouched. This is extremely useful when you have complex launch scripts that set up everything before the run.

Our desired analysis is simple, so we only need to set:

$ export I_MPI_GTOOL="aps:all"

and then collect the profile for VASP. The profile is saved under a result name (<name> below), which can be processed with:

$ aps --report=<name>

This generates an HTML report with the results shown in Figure 1.

Figure 1 – APS of VASP

APS provides actionable information for finding performance bottlenecks. VASP is shown to be MPI bound (19.12% of the runtime was spent in MPI). We can look at the MPI Time section for more details. It shows that MPI Imbalance consumes 10.66% of the elapsed time. MPI Imbalance is defined as time spent in MPI that is unproductive, such as time spent waiting for other ranks to reach an MPI_Allreduce call. The Top 5 MPI functions section shows the most time-consuming functions. In this run, MPI_Allreduce was the top consumer, which suggests that it should be our first target for performance optimizations.

We’ll try using the latest Intel MPI Library 2019 with Intel® Performance Scaled Messaging 2 (PSM2) Multi-Endpoint (Multi-EP) technology to speed up MPI_Allreduce. Let’s begin.

Intel® MPI Library with Intel® Omni-Path Architecture

As you can see from the system specifications provided earlier, we’re using Intel® Omni-Path Architecture (Intel® OPA) hardware. With Intel MPI Library, the best way to take advantage of all Intel OPA capabilities is to use the OpenFabrics Interfaces (OFI) support in Intel MPI Library 2019. You can find the Intel MPI Library 2019 Technical Preview bundled with Intel MPI Library 2018 Update 1 at:

<install_dir>/compilers_and_libraries_2018.1.163/linux/mpi_2019/

Both the TMI and OFI fabrics support PSM2, the best provider option for Intel OPA. To select OFI with the PSM2 provider, set:

$ export I_MPI_FABRICS=shm:ofi

$ export I_MPI_OFI_PROVIDER=psm2

Intel Performance Scaled Messaging 2 (PSM2) is the successor to PSM and provides an API and library that target very large clusters with huge numbers of MPI ranks. See New Features and Capabilities of PSM2 for a good overview.

Intel PSM2 Multi-Endpoint (Multi-EP) and Intel MPI Library 2019

A key concept for Intel PSM2 (and for PSM) is the endpoint. Intel PSM2 follows an endpoint communication model, where an endpoint is defined as an object (or handle) instantiated to support sending and receiving messages to other endpoints. By default, every process can use only one endpoint. Recent versions of Intel PSM2 change this: the Intel PSM2 Multi-Endpoint (Multi-EP) functionality lets multithreaded applications use multiple endpoints per process. The Intel MPI Library 2019 Technical Preview gives developers the ability to use Intel PSM2 Multi-Endpoint effectively. To support Multi-Endpoint, the MPI standard threading model was extended with the MPI_THREAD_SPLIT programming model, which lets a program combine multithreading with MPI while removing most synchronization, increasing performance.

As explained in the Intel® MPI Library Developer Reference for Linux* OS, an MPI_THREAD_SPLIT-compliant program must be at least a thread-compliant MPI program (supporting the MPI_THREAD_MULTIPLE threading level). In addition to that, the following rules apply:

- Different threads of a process must not use the same communicator concurrently.

- Any request created in a thread must not be accessed by other threads. That is, any non-blocking operation must be completed, checked for completion, or probed in the same thread.

- Communication completion calls that imply operation progress, such as MPI_Wait() or MPI_Test(), don’t guarantee progress in other threads when called from one thread.

Also, each thread should have an identifier, thread_id, and communication between MPI ranks can take place only between threads with the same thread_id.

Multi-EP in Collectives: MPI_Allreduce

Since VASP already has a hybrid MPI/OpenMP version, it can readily be adapted for MPI Multi-Endpoint. For each MPI rank, we use the MPI_Comm_dup routine to create as many additional MPI communicators as there are OpenMP threads. Each MPI buffer is then split among the OpenMP threads, as shown in Figure 2.

Figure 2 – Splitting the MPI buffers among threads

To avoid modifying every MPI_Allreduce call in the VASP source, we use the MPI profiling interface (the PMPI_ entry points) and write our own MPI_Allreduce with the needed modifications:

/*
 * Copyright 2018 Intel Corporation.
 * This software and the related documents are Intel copyrighted materials, and your use of
 * them is governed by the express license under which they were provided to you (License).
 * Unless the License provides otherwise, you may not use, modify, copy, publish, distribute,
 * disclose or transmit this software or the related documents without Intel’s prior written
 * permission.
 * This software and the related documents are provided as is, with no express or implied
 * warranties, other than those that are expressly stated in the License.
 */

#include <stdlib.h>   /* getenv, atoi */
#include <mpi.h>
#include <omp.h>

/* comm_duplicate() and the comms table are defined elsewhere (not shown).
 * These declarations are assumed so the file compiles on its own (the
 * sizes are placeholders): comm_duplicate() is expected to fill
 * comms.dup_comm[k_comm][0..n-1] with n duplicates of comm. */
#define MEP_MAX_COMMS   16
#define MEP_MAX_THREADS 64
struct mep_comms { MPI_Comm dup_comm[MEP_MAX_COMMS][MEP_MAX_THREADS]; };
extern struct mep_comms comms;
void comm_duplicate(MPI_Comm comm, int num_threads);

int comm_flag = 0;
int new_comm = 0;
int first_comm = 0;
int init_flag = 0;
int mep_enable = 0;
int mep_num_threads = 1;
int mep_allreduce_threshold = 1000;
int mep_bcast_threshold = 1000;
int k_comm = 0;

/* Read an integer setting; an unset variable keeps the default
 * (calling atoi(NULL) would crash). */
static int env_int(const char *name, int dflt) {
    const char *value = getenv(name);
    return value != NULL ? atoi(value) : dflt;
}

void mep_init(void) {
    if (init_flag == 0) {
        mep_enable = env_int("MEP_ENABLE", 0);
        mep_num_threads = env_int("MEP_NUM_THREADS", 1);
        if (mep_num_threads < 1) mep_num_threads = 1;
        mep_allreduce_threshold = env_int("MEP_ALLREDUCE_THRESHOLD", 1000);
        mep_bcast_threshold = env_int("MEP_BCAST_THRESHOLD", 1000);
        init_flag = 1;
    }
}

int MPI_Allreduce(const void *sendbuf, void *recvbuf, int count,
                  MPI_Datatype datatype, MPI_Op op, MPI_Comm comm) {
    int err = MPI_SUCCESS;
    MPI_Aint lb, extent;

    if (init_flag == 0) mep_init();

    /* Multi-EP disabled or message too small: call the library directly. */
    if (mep_enable != 1 || count < mep_allreduce_threshold)
        return PMPI_Allreduce(sendbuf, recvbuf, count, datatype, op, comm);

    MPI_Type_get_extent(datatype, &lb, &extent);
    comm_duplicate(comm, mep_num_threads);

    /* Each OpenMP thread reduces its own slice of the buffer on its own
     * duplicated communicator; the last thread also takes the remainder.
     * reduction(max:err) returns a nonzero code if any thread failed. */
#pragma omp parallel num_threads(mep_num_threads) reduction(max:err)
    {
        int num_threads = omp_get_num_threads();
        int thread_num = omp_get_thread_num();
        int count_thread = count / num_threads;
        int rest = count % num_threads;
        int my_count = (thread_num == num_threads - 1) ? count_thread + rest
                                                       : count_thread;
        /* Byte offset of this thread's slice (arithmetic on void * is not C). */
        MPI_Aint offset = (MPI_Aint)thread_num * count_thread * extent;
        /* MPI_IN_PLACE is a marker, not a buffer: pass it through unchanged. */
        const void *sbuf = (sendbuf == MPI_IN_PLACE)
                               ? MPI_IN_PLACE
                               : (const char *)sendbuf + offset;

        err = PMPI_Allreduce(sbuf, (char *)recvbuf + offset, my_count,
                             datatype, op, comms.dup_comm[k_comm][thread_num]);
    }
    return err;
}

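Note that comm_duplicate(comm, mep_num_threads) is a helper function (not shown) that duplicates the communicator, creating one duplicate per thread; the wrapper takes the duplicates from comms.dup_comm[k_comm]. We build the wrapper into a shared library (lib_mep.so) and load it with LD_PRELOAD so that it replaces the default MPI_Allreduce.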

Run with Multi-EP

The modified command line is:

$ export I_MPI_THREAD_SPLIT=1

$ export I_MPI_THREAD_RUNTIME=openmp

$ export MEP_ALLREDUCE_THRESHOLD=1000

$ export MEP_ENABLE=1

$ export OMP_NUM_THREADS=2

$ export MEP_NUM_THREADS=2

$ export LD_PRELOAD=lib_mep.so

$ mpiexec.hydra -ppn ${PPN} -n ${NUM_MPI} ./vasp_std

Performance results are shown in Table 4.

Table 4. Final LOOP+ and MPI_Allreduce times for a cluster with Intel Xeon Gold processors, compared with the initial data (in both cases, the best OpenMP x MPI combination is reported)

Improve Performance

Using APS, we found that MPI_Allreduce is a performance "hotspot" in the application. With the new Intel MPI Library 2019 Technical Preview and Intel OPA, we were able to utilize the system hardware more efficiently to speed up MPI_Allreduce and improve overall application performance. The maximum observed speedup, with only software modifications, was 2.34x for MPI_Allreduce alone and 1.16x for LOOP+.