This article covers training a TensorFlow model with MNIST, converting the model to TensorFlow Lite, creating the embedded app, generating sample MNIST data for embedding, and testing the MNIST images.

Handwritten digit recognition using TensorFlow and MNIST has become a common introduction to artificial intelligence (AI) and machine learning (ML). "MNIST" is the Modified National Institute of Standards and Technology database, which contains 70,000 examples of handwritten digits. It is a commonly used source of images for training image processing systems and ML software.

While ML tutorials using TensorFlow and MNIST are a familiar sight, until recently they've typically been demonstrated on full-fledged x86 processing environments with workstation-class GPUs. More recently, there have been examples using some of the more powerful smartphones, but even these pocket-size computing environments commonly provide multi-core CPUs and powerful, dedicated GPUs.

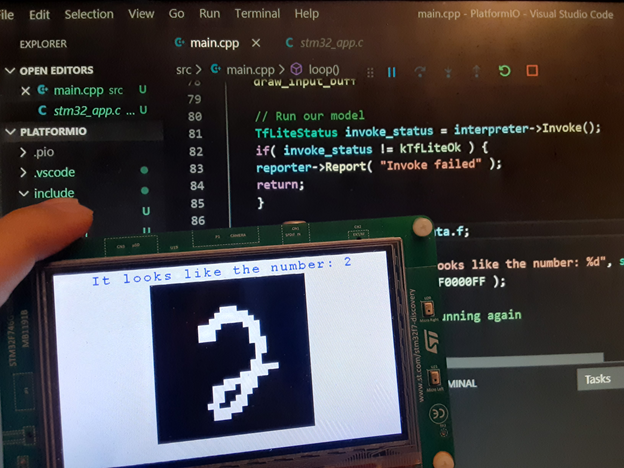

Today, however, you can create a fully functional MNIST handwriting recognition app on even an 8-bit microcontroller. To demonstrate, we’re going to build one, like the app in the image above, using TensorFlow Lite to run our AI inference on a low-power STMicroelectronics microcontroller based on an Arm Cortex-M7 processor. An implementation like this demonstrates that you can create robust ML applications even on battery-powered, self-contained devices in all sorts of edge IoT or handheld scenarios.

You’ll need a few things to build this project:

You’ll find the code for this project on GitHub.

This article assumes you’re familiar with C/C++ and ML, but don’t worry if you aren’t. You can still follow along and try deploying the project to your own device at home!

Quick Overview

Before we get started, let’s look at the steps required to run a deep-learning AI project on a microcontroller via TensorFlow:

- Train a predictive model with a dataset (MNIST handwritten digits)

- Convert the model to TensorFlow Lite

- Create the embedded app

- Generate sample data

- Deploy and test the app

To make this process faster and easier, I created a Jupyter notebook on Google Colab to take care of the first two steps for you from within your browser, without needing to install and configure Python on your machine. This can also serve as a reference for other projects as it includes all of the code needed to train and evaluate the MNIST model using TensorFlow, as well as to convert the model for offline use in TensorFlow Lite for Microcontrollers, and to generate a C array code version of the model to easily compile into any C++ program.

If you wish to skip ahead to the embedded app in step 3, be sure to first click Runtime > Run All in the notebook menu to generate the model.h file. Download it from the Files list on the left side, or you can download the prebuilt model from the GitHub repository to include in your project.

And if you’d like to run these steps locally on your own machine, make sure you’re using TensorFlow 2.0 or later, and using Anaconda to install and use Python. If you use the Jupyter notebook mentioned earlier, you don’t need to worry about installing TensorFlow 2.0 because it’s included in the notebook.

Training a TensorFlow Model with MNIST

Keras is a high-level neural network Python library often used for prototyping AI solutions. It's integrated with TensorFlow and includes a built-in copy of the MNIST dataset, split into 60,000 training images and 10,000 test images, accessible right within TensorFlow.

To predict handwritten digits, I used this dataset to train a relatively simple model that takes a 28x28 image as the input shape and outputs to 10 categories using a Softmax activation function, with one hidden layer in between. This was plenty to achieve an accuracy of 96.6%, but you can add more hidden layers or units if you’d like.
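To make the shape of that network concrete, here is a minimal pure-Python sketch of a forward pass through a model of this kind. It is not the notebook's Keras code: the hidden-layer width of 32, the ReLU activation on the hidden layer, and the random stand-in weights are all assumptions made for illustration, since the article does not state them.

```python
import math
import random

INPUT_PIXELS = 28 * 28   # flattened 28x28 image
HIDDEN_UNITS = 32        # hypothetical width; the article does not state it
NUM_CLASSES = 10         # digits 0-9


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Assumed hidden-layer activation: clamp negatives to zero."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_out, n_in, rng):
    """Untrained stand-in weights; a real model learns these during training."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def predict(pixels, hidden, output):
    """Forward pass: flattened image -> hidden layer -> Softmax output."""
    h = relu(dense(pixels, *hidden))
    return softmax(dense(h, *output))


def parameter_count(hidden_units=HIDDEN_UNITS):
    """Weights plus biases for both layers."""
    return (INPUT_PIXELS * hidden_units + hidden_units
            + hidden_units * NUM_CLASSES + NUM_CLASSES)


rng = random.Random(0)
hidden = random_layer(HIDDEN_UNITS, INPUT_PIXELS, rng)
output = random_layer(NUM_CLASSES, HIDDEN_UNITS, rng)

pixels = [rng.random() for _ in range(INPUT_PIXELS)]  # stand-in for a real image
probabilities = predict(pixels, hidden, output)
digit = probabilities.index(max(probabilities))       # index of the largest probability
print(f"predicted digit: {digit}, parameters: {parameter_count()}")
```

With untrained weights the predicted digit is meaningless; the point is only the data flow. The parameter count shows how quickly a wider hidden layer grows the model, which matters once the model has to fit in flash.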

For a more in-depth discussion on working with the MNIST dataset in TensorFlow, I recommend checking out some of the many great tutorials for TensorFlow on the web, like Not another MNIST tutorial with TensorFlow from O'Reilly. You can also refer to the TensorFlow sine wave model example in this notebook to familiarize yourself with training and evaluating TensorFlow models and converting the model to TensorFlow Lite for Microcontrollers.

Converting Your Model to TensorFlow Lite

The model I created in step 1 is useful and highly accurate, but the file size and memory usage make it prohibitive to port or use on an embedded device. This is where TensorFlow Lite comes in, because the runtime is optimized for mobile, embedded, and IoT devices, and allows for low latency with a very small (just kilobytes!) size requirement. It allows you to make trade-offs between accuracy, speed, and size to choose a model that makes sense for what you need.

In this case, I need TensorFlow Lite to take as little flash space and memory as possible while still being quick, so we can knock a bit of precision away without sacrificing too much.

To help make the size even smaller, the TensorFlow Lite Converter supports quantization of the model to switch from using 32-bit floating-point values for calculations to using 8-bit integers, as often the high precision of floating-point values isn’t necessary. This also significantly reduces the size of the model and increases performance.
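As a rough illustration of what switching from 32-bit floats to 8-bit integers means, the sketch below applies simple affine quantization (a scale and a zero point) to a handful of made-up weights. It is a simplified picture under stated assumptions, not the converter's actual algorithm, and the function names and sample values are hypothetical.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Choose a scale and zero point that map the value range onto int8."""
    lo = min(min(values), 0.0)  # keep 0.0 inside the range so it maps exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers, clamping to the int8 range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the stored integers."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-0.42, -0.1, 0.0, 0.07, 0.31, 0.58]  # made-up sample weights
scale, zero_point = quantization_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)

worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("worst round-trip error:", worst_error)
print("bytes as float32:", 4 * len(weights), "bytes as int8:", len(weights))
```

Each weight shrinks from four bytes to one, and the round-trip error stays within about half of one quantization step, which is why the loss of precision is often acceptable.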

I couldn’t get a quantized model to work properly and consistently with the Softmax function on my STM32F7 Discovery device; inference stopped with a “failed to invoke” error. The TensorFlow Lite Converter is under continual development and some model constructs are not yet supported. For example, it converts some weights into int8 values instead of uint8, and int8 is not supported. At least not yet.

That said, if the converter supports all of the elements used in your model, it can tremendously shrink the size of your trained model and it only takes a few lines of code to enable, so I recommend giving it a try. The lines of code needed are just commented out in my notebook and ready for you to uncomment and generate the final model to see if it works correctly on your device.

Microcontroller embedded devices in the field often have limited storage. On the bench I can always use a larger memory card for external storage. However, to simulate an environment that can't access external storage for the .tflite file, I can export the model as code so it lives in the application itself.

I’ve added a Python script to the end of my notebook to handle this part and turn it into a model.h file. You can also use the xxd -i shell command in Linux to convert the generated tflite file into a C array if you’d like. Download this file from the left-side menu and get ready to add it to your embedded app project in the next step.

    import binascii

    def convert_to_c_array(data: bytes) -> str:
        """Format raw bytes as comma-separated hex literals, ten per line."""
        hexstr = binascii.hexlify(data).decode("UTF-8")
        hexstr = hexstr.upper()
        array = ["0x" + hexstr[i:i + 2] for i in range(0, len(hexstr), 2)]
        array = [array[i:i + 10] for i in range(0, len(array), 10)]
        return ",\n ".join([", ".join(e) for e in array])

    with open("model.tflite", "rb") as f:
        tflite_binary = f.read()

    ascii_bytes = convert_to_c_array(tflite_binary)

    # The parentheses let the expression continue onto a second line
    c_file = ("const unsigned char tf_model[] = {\n " + ascii_bytes +
              "\n};\nunsigned int tf_model_len = " + str(len(tflite_binary)) + ";")
    # print(c_file)

    with open("model.h", "w") as f:
        f.write(c_file)

Create the Embedded App

Now we’re ready to take our trained MNIST model and put it to work on an actual low-power microcontroller. Your specific steps may vary depending on your toolchain, but here are the steps I took with the PlatformIO IDE and my STM32F746G Discovery device:

First, create a new app project with the settings configured to your corresponding Arm Cortex-M powered device and get your main setup and loop functions ready. I selected the Stm32Cube framework so that I can output the results to the screen. If you are using Stm32Cube, you can download the stm32_app.h and stm32_app.c files from the repository and create a main.cpp with the setup and loop functions like this:

    #include "stm32_app.h"

    void setup() {
    }

    void loop() {
    }

Add or download the TensorFlow Lite Micro Library. For the PlatformIO IDE, I’ve pre-configured the library for you so you can download the tfmicro folder from here into your project’s lib folder and add it as a library dependency into your platformio.ini file:

    [env:disco_f746ng]
    platform = ststm32
    board = disco_f746ng
    framework = stm32cube
    lib_deps = tfmicro

Include the TensorFlow Lite library headers at the top of your code, like this:

    #include <algorithm> // std::max_element
    #include <cstdio>    // sprintf
    #include <cstdlib>   // rand
    #include <iterator>  // std::distance

    #include "stm32_app.h"
    #include "tensorflow/lite/experimental/micro/kernels/all_ops_resolver.h"
    #include "tensorflow/lite/experimental/micro/micro_error_reporter.h"
    #include "tensorflow/lite/experimental/micro/micro_interpreter.h"
    #include "tensorflow/lite/schema/schema_generated.h"
    #include "tensorflow/lite/version.h"

    void setup() {
    }

    void loop() {
    }

Copy the model.h file generated earlier into this project’s include folder and include it below the TensorFlow headers. Then save and build to make sure everything compiles without errors.

#include "model.h"

Define the following global variables for TensorFlow that you’ll be using in your code:

    // Globals
    const tflite::Model* model = nullptr;
    tflite::MicroInterpreter* interpreter = nullptr;
    tflite::ErrorReporter* reporter = nullptr;
    TfLiteTensor* input = nullptr;
    TfLiteTensor* output = nullptr;
    constexpr int kTensorArenaSize = 5000; // Just pick a big enough number
    uint8_t tensor_arena[ kTensorArenaSize ] = { 0 };
    float* input_buffer = nullptr;

In your setup function, load the model, set up the TensorFlow runner, allocate tensors, and save the input and output vectors along with a pointer to the input buffer, which we’ll interface with as a floating-point array. Your function should now look like this:

    void setup() {
        // Load Model
        static tflite::MicroErrorReporter error_reporter;
        reporter = &error_reporter;
        reporter->Report( "Let's use AI to recognize some numbers!" );

        model = tflite::GetModel( tf_model );
        if( model->version() != TFLITE_SCHEMA_VERSION ) {
            reporter->Report(
                "Model is schema version: %d\nSupported schema version is: %d",
                model->version(), TFLITE_SCHEMA_VERSION );
            return;
        }

        // Set up our TF runner
        static tflite::ops::micro::AllOpsResolver resolver;
        static tflite::MicroInterpreter static_interpreter(
            model, resolver, tensor_arena, kTensorArenaSize, reporter );
        interpreter = &static_interpreter;

        // Allocate memory from the tensor_arena for the model's tensors.
        TfLiteStatus allocate_status = interpreter->AllocateTensors();
        if( allocate_status != kTfLiteOk ) {
            reporter->Report( "AllocateTensors() failed" );
            return;
        }

        // Obtain pointers to the model's input and output tensors.
        input = interpreter->input(0);
        output = interpreter->output(0);

        // Save the input buffer to put our MNIST images into
        input_buffer = input->data.f;
    }

Prepare TensorFlow to run on the Arm Cortex-M device on each loop call with a short one-second delay in between each update, like this:

    void loop() {
        // Run our model
        TfLiteStatus invoke_status = interpreter->Invoke();
        if( invoke_status != kTfLiteOk ) {
            reporter->Report( "Invoke failed" );
            return;
        }

        // The index of the largest of the 10 output scores is the predicted digit.
        // std::distance returns a ptrdiff_t, so cast it to match the %d format.
        float* result = output->data.f;
        int digit = static_cast<int>(
            std::distance( result, std::max_element( result, result + 10 ) ) );

        char resultText[ 256 ];
        snprintf( resultText, sizeof( resultText ), "It looks like the number: %d", digit );
        draw_text( resultText, 0xFF0000FF );

        // Wait one second before running again
        delay( 1000 );
    }

Your app is now ready to run. It’s just waiting for us to feed it some fun MNIST test images to process!

Generate Sample MNIST Data for Embedding

Next, let’s get some images of handwritten digits for our device to read.

To add these images to the program without depending on external storage, we can convert the 100 MNIST images ahead of time from JPEG into 1-bit-per-pixel monochrome bitmaps stored as C arrays, just like our TensorFlow model. For this, I used an open-source web tool called image2cpp that does most of this work for us in a single batch. If you would like to generate them yourself, parse the pixels, encode eight of them at a time into each byte (so each 28-pixel row is padded to 4 bytes), and write them out in C-array format like below.

NOTE: The web tool generates code for Arduino IDE, so find and remove all instances of PROGMEM in the code and then it will compile with PlatformIO.
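If you prefer to generate the arrays yourself, the packing step could look something like the sketch below. It is a sketch under stated assumptions, not the image2cpp implementation: it assumes 0-255 grayscale pixels in row-major order, a brightness threshold of 128, and most-significant-bit-first packing. The helper names and the vertical-stroke test image are hypothetical.

```python
WIDTH = HEIGHT = 28
BYTES_PER_ROW = (WIDTH + 7) // 8  # 28 pixels need 4 bytes; the last 4 bits are padding


def pack_1bpp(pixels, threshold=128):
    """Pack row-major 0-255 grayscale pixels into 1-bit-per-pixel bytes, MSB first."""
    packed = bytearray(BYTES_PER_ROW * HEIGHT)
    for y in range(HEIGHT):
        for x in range(WIDTH):
            if pixels[y * WIDTH + x] >= threshold:
                packed[y * BYTES_PER_ROW + x // 8] |= 0x80 >> (x % 8)
    return bytes(packed)


def to_c_array(name, packed, per_line=16):
    """Format packed bytes as a C array definition."""
    hex_bytes = [f"0x{b:02x}" for b in packed]
    lines = [", ".join(hex_bytes[i:i + per_line])
             for i in range(0, len(hex_bytes), per_line)]
    body = ",\n    ".join(lines)
    return f"const unsigned char {name}[] = {{\n    {body}\n}};"


# Hypothetical test image: a vertical stroke three pixels wide.
image = [0] * (WIDTH * HEIGHT)
for y in range(4, 24):
    for x in range(13, 16):
        image[y * WIDTH + x] = 255

packed = pack_1bpp(image)
print(to_c_array("mnist_example", packed))
```

Packing the most significant bit first means the leftmost pixel of each group of eight lands in the highest bit of its byte, which is the order the device-side code has to read them back in.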

As an example, a test image of a handwritten zero should be converted to the following array:

    // 'mnist_0_1', 28x28px
    const unsigned char mnist_1 [] PROGMEM = {
        0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00,
        0x00, 0x07, 0x00, 0x00, 0x00, 0x07, 0x00, 0x00, 0x00, 0x0f, 0x00, 0x00, 0x00, 0x1f, 0x80, 0x00,
        0x00, 0x3f, 0xe0, 0x00, 0x00, 0x7f, 0xf0, 0x00, 0x00, 0x7e, 0x30, 0x00, 0x00, 0xfc, 0x38, 0x00,
        0x00, 0xf0, 0x1c, 0x00, 0x00, 0xe0, 0x1c, 0x00, 0x00, 0xc0, 0x1e, 0x00, 0x00, 0xc0, 0x1c, 0x00,
        0x01, 0xc0, 0x3c, 0x00, 0x01, 0xc0, 0xf8, 0x00, 0x01, 0xc1, 0xf8, 0x00, 0x01, 0xcf, 0xf0, 0x00,
        0x00, 0xff, 0xf0, 0x00, 0x00, 0xff, 0xc0, 0x00, 0x00, 0x7f, 0x00, 0x00, 0x00, 0x1c, 0x00, 0x00,
        0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00
    };
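One way to sanity-check an array like the one above before flashing it is to unpack it on your desktop and print it as text. The sketch below does that with the bytes from the example array, assuming 4 bytes per row and most-significant-bit-first pixels; the function name is hypothetical.

```python
WIDTH = HEIGHT = 28
BYTES_PER_ROW = 4  # 28 bits rounded up to whole bytes

# Bytes copied from the example array above.
SAMPLE = bytes([
    0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00,
    0x00, 0x07, 0x00, 0x00, 0x00, 0x07, 0x00, 0x00, 0x00, 0x0f, 0x00, 0x00, 0x00, 0x1f, 0x80, 0x00,
    0x00, 0x3f, 0xe0, 0x00, 0x00, 0x7f, 0xf0, 0x00, 0x00, 0x7e, 0x30, 0x00, 0x00, 0xfc, 0x38, 0x00,
    0x00, 0xf0, 0x1c, 0x00, 0x00, 0xe0, 0x1c, 0x00, 0x00, 0xc0, 0x1e, 0x00, 0x00, 0xc0, 0x1c, 0x00,
    0x01, 0xc0, 0x3c, 0x00, 0x01, 0xc0, 0xf8, 0x00, 0x01, 0xc1, 0xf8, 0x00, 0x01, 0xcf, 0xf0, 0x00,
    0x00, 0xff, 0xf0, 0x00, 0x00, 0xff, 0xc0, 0x00, 0x00, 0x7f, 0x00, 0x00, 0x00, 0x1c, 0x00, 0x00,
    0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00,
])


def unpack_1bpp(bitmap):
    """Expand MSB-first 1bpp rows into a flat list of 0.0/1.0 floats."""
    pixels = []
    for y in range(HEIGHT):
        for x in range(WIDTH):
            byte = bitmap[y * BYTES_PER_ROW + x // 8]
            bit = (byte >> (7 - x % 8)) & 1
            pixels.append(float(bit))
    return pixels


pixels = unpack_1bpp(SAMPLE)
for y in range(HEIGHT):
    row = pixels[y * WIDTH:(y + 1) * WIDTH]
    print("".join("#" if p else "." for p in row))
```

If the printed shape looks scrambled or mirrored, the bit order or row padding in the generator probably does not match what the unpacking code expects.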

Save your generated images into a new file called mnist.h in your project, or if you would like to save some time and skip this step, you can simply download my version from GitHub.

At the bottom of the file, I combined all the arrays into a single collection so we can choose a random image to process each second:

    const unsigned char* test_images[] = {
        mnist_1, mnist_2, mnist_3, mnist_4, mnist_5,
        mnist_6, mnist_7, mnist_8, mnist_9, mnist_10,
        mnist_11, mnist_12, mnist_13, mnist_14, mnist_15,
        mnist_16, mnist_17, mnist_18, mnist_19, mnist_20,
        mnist_21, mnist_22, mnist_23, mnist_24, mnist_25,
        mnist_26, mnist_27, mnist_28, mnist_29, mnist_30,
        mnist_31, mnist_32, mnist_33, mnist_34, mnist_35,
        mnist_36, mnist_37, mnist_38, mnist_39, mnist_40,
        mnist_41, mnist_42, mnist_43, mnist_44, mnist_45,
        mnist_46, mnist_47, mnist_48, mnist_49, mnist_50,
        mnist_51, mnist_52, mnist_53, mnist_54, mnist_55,
        mnist_56, mnist_57, mnist_58, mnist_59, mnist_60,
        mnist_61, mnist_62, mnist_63, mnist_64, mnist_65,
        mnist_66, mnist_67, mnist_68, mnist_69, mnist_70,
        mnist_71, mnist_72, mnist_73, mnist_74, mnist_75,
        mnist_76, mnist_77, mnist_78, mnist_79, mnist_80,
        mnist_81, mnist_82, mnist_83, mnist_84, mnist_85,
        mnist_86, mnist_87, mnist_88, mnist_89, mnist_90,
        mnist_91, mnist_92, mnist_93, mnist_94, mnist_95,
        mnist_96, mnist_97, mnist_98, mnist_99, mnist_100,
    };

Don’t forget to include your new image header at the top of your code:

#include "mnist.h"

Test the MNIST Images

After these sample images are added to your code, you can add two helper functions, one to read the monochrome image into the input vector and another to render to the built-in display. Here are the functions I placed right above the setup function:

    // Populate dest with one float per pixel from the monochrome 1bpp bitmap
    void bitmap_to_float_array( float* dest, const unsigned char* bitmap ) {
        int pixel = 0;
        for( int y = 0; y < 28; y++ ) {
            for( int x = 0; x < 28; x++ ) {
                int B = x / 8; // the Byte # of the row
                int b = x % 8; // the Bit # of the Byte
                // Each row is 4 bytes wide, with the leftmost pixel in the highest bit
                dest[ pixel ] = ( ( bitmap[ y * 4 + B ] >> ( 7 - b ) ) & 0x1 ) ? 1.0f : 0.0f;
                pixel++;
            }
        }
    }

    // Render the 28x28 input buffer to the built-in display
    void draw_input_buffer() {
        clear_display();
        for( int y = 0; y < 28; y++ ) {
            for( int x = 0; x < 28; x++ ) {
                draw_pixel( x + 16, y + 3, input_buffer[ y * 28 + x ] > 0 ? 0xFFFFFFFF : 0xFF000000 );
            }
        }
    }

And finally, in our loop, we can select a random test image to read into the input buffer and draw to the display like this:

    void loop() {
        // Pick a random test image for input
        const int num_test_images = ( sizeof( test_images ) /
                                      sizeof( *test_images ) );
        bitmap_to_float_array( input_buffer,
                               test_images[ rand() % num_test_images ] );
        draw_input_buffer();

        // Run our model
        ...
    }

If all’s well, your project will build and deploy, and you’ll see your microcontroller recognizing the handwritten digits and displaying the results. Can you believe it?

What’s Next?

Now that you’ve seen what’s possible with low-power Arm Cortex-M microcontrollers set up to harness the power of deep learning with TensorFlow, you’re ready to do so much more! From detecting different types of animals and objects, to training a device to understand speech or answer questions, you and your device can unlock new possibilities that were previously thought to be possible only by using high-power computers and devices.

The TensorFlow team has developed some terrific examples of TensorFlow Lite for Microcontrollers, available on their GitHub. Also read up on these Best Practices to make sure you get the most out of your AI project running on an Arm Cortex-M device.