On Windows systems, the amount of data a process can manage in its address space is limited not only by the available working set and the size of the pagefile. To keep the system stable, the frequency and size of allocations also need to be considered. This article introduces an observed std::allocator for all varieties of STL containers that allows huge amounts of data to be managed while respecting these system requirements.

Introduction

When it comes to handling large volumes of data in your application's address space, the size of the pagefile sets a natural limit. Once this limit is reached, the operating system starts to terminate running processes unconditionally, and finally your working process. Moreover, if memory is acquired too hastily, the system struggles to move data from the working set to the pagefile. As a result, the system starts to thrash, becomes sluggish and finally stops responding to input altogether.

These effects have been verified on independent installations of Windows 8 and Windows 10.

The described behaviour can be reproduced easily with the following tiny program. Take care before running it: it will make your system unresponsive and you might need to reboot.

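#include <cstddef>
#include <cstdlib>

int main()
{
    // Leaks 1 KB per iteration on purpose; nothing is ever freed.
    for (std::size_t iter = 0; iter < 1000000000; ++iter)
    {
        void* ptr = std::malloc(1024);
        (void)ptr; // silence the unused-variable warning
    }
}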

Running this code while watching Process Explorer, one can observe the allocated process memory increasing rapidly until the maximum working set level is reached. At this point, the system stops operating. The excessive number of allocations seems to overload the Windows virtual memory management, and the system no longer accepts user input.

The literature recommends either the SetProcessWorkingSetSize API call or Windows job objects.

The first seems to have no real effect on limiting the process working set. The second requires additional privileges to be granted to the user, which makes it difficult to use in general installations.

To overcome the issue, direct memory mapping was also evaluated. In that case, Process Explorer shows that the working set of the executing process stays low. Nevertheless, the system-wide memory consumption grows constantly, so that we finally end up in the same scenario.

In the following, we discuss how to overcome memory limitations and control the allocation frequency.

Controlling the Allocation Frequency

To control the rate at which a process allocates memory, some form of monitoring has to be established. Since calls to malloc go directly to the runtime library, they need to be replaced by versions that can be observed. At the same time, the replacement function should affect the performance of the executing process as little as possible.

In our approach, we achieve this by introducing a second thread, called the "observer thread". Its objective is to measure and control the rate of allocations taking place within a given period of time. This thread then slows down the main thread if required.
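The measuring part of such an observer could look roughly like the following sketch. It uses portable std::thread and std::atomic instead of the Windows threading API, and the class name AllocationObserver, its members and the threshold parameters are assumptions made for illustration only; the observer shipped with the project may be organised differently.

```cpp
#include <atomic>
#include <chrono>
#include <cstddef>
#include <thread>

// Hypothetical observer: counts allocations and, once per period, decides
// whether the allocating threads should be slowed down.
class AllocationObserver {
public:
    AllocationObserver(std::size_t max_per_period, std::chrono::milliseconds period)
        : max_per_period_(max_per_period), period_(period) {}

    ~AllocationObserver() { stop(); }

    // Called by the allocating threads on every allocation.
    void note_allocation() { count_.fetch_add(1, std::memory_order_relaxed); }

    // One measurement step: read and reset the counter, then update the verdict.
    void sample() {
        const std::size_t seen = count_.exchange(0, std::memory_order_relaxed);
        throttle_.store(seen > max_per_period_, std::memory_order_release);
    }

    bool should_throttle() const { return throttle_.load(std::memory_order_acquire); }

    // Starts the background thread that calls sample() once per period.
    void start() {
        if (worker_.joinable()) return;
        running_.store(true);
        worker_ = std::thread([this] {
            while (running_.load()) {
                std::this_thread::sleep_for(period_);
                sample();
            }
        });
    }

    void stop() {
        running_.store(false);
        if (worker_.joinable()) worker_.join();
    }

private:
    const std::size_t max_per_period_;
    const std::chrono::milliseconds period_;
    std::atomic<std::size_t> count_{0};
    std::atomic<bool> throttle_{false};
    std::atomic<bool> running_{false};
    std::thread worker_;
};
```

The allocating side only pays for one relaxed atomic increment per allocation, which keeps the overhead on the main thread small.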

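To gain access to the malloc function, we simply route it through a global function pointer variable by means of a macro of the form:

#define malloc(a) (*mallocfct)(a)

Notice that mallocfct is a global non-const variable that can be modified at runtime. Functions that need the original malloc, such as the implementations shown next, have to be compiled before this macro is defined; otherwise they would call themselves through the pointer.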
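The same indirection can be sketched without the preprocessor. In the example below, the names malloc_fn, mallocfct_sketch, observed_malloc and counting_malloc are hypothetical, and the pointer is wrapped in std::atomic on the assumption that a second thread swaps it while other threads are allocating.

```cpp
#include <atomic>
#include <cstddef>
#include <cstdlib>

using malloc_fn = void* (*)(std::size_t);

// Hypothetical counter, used only to make the swap observable.
static std::atomic<std::size_t> counted_calls{0};

static void* plain_malloc(std::size_t n) { return std::malloc(n); }

static void* counting_malloc(std::size_t n)
{
    ++counted_calls;
    return std::malloc(n);
}

// Atomic so that another thread can replace the target at any time.
static std::atomic<malloc_fn> mallocfct_sketch{&plain_malloc};

// Every allocation goes through whatever function is currently installed.
inline void* observed_malloc(std::size_t n)
{
    return mallocfct_sketch.load(std::memory_order_acquire)(n);
}
```

Installing a different behaviour is then a single store, for example mallocfct_sketch.store(&counting_malloc).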

In our approach, we use three different implementations for mallocfct. Which one is active depends on the amount of memory the process currently consumes.

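#include <atomic>
#include <cstdlib>
#include <windows.h>

DWORD sleepCount = 100; // delay per allocation in milliseconds

// Throttled variant: delays every allocation by sleepCount milliseconds.
void* sloppymalloc(size_t size_p)
{
    Sleep(sleepCount);
    return malloc(size_p);
}

// Written by the observer thread and polled by the allocating threads, hence atomic.
std::atomic<bool> stopMallocs(false);

// Blocking variant: yields the time slice until the observer clears stopMallocs.
void* stopmalloc(size_t size_p)
{
    while (stopMallocs)
        Sleep(0);
    return malloc(size_p);
}

// Default variant: plain malloc without any delay.
void* speedymalloc(size_t size_p)
{
    return malloc(size_p);
}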

To measure memory consumption, the observer thread periodically calls GlobalMemoryStatusEx and evaluates the ullAvailPhys member of the returned MEMORYSTATUSEX structure, which reports the amount of physical memory currently available.
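A minimal sketch of that query is shown below. The wrapper name query_available_physical is an assumption; only GlobalMemoryStatusEx, MEMORYSTATUSEX, dwLength and ullAvailPhys come from the Windows API. The non-Windows branch exists solely so that the sketch compiles elsewhere and always reports failure.

```cpp
#include <cstdint>
#ifdef _WIN32
#include <windows.h>
#endif

// Stores the available physical memory in bytes in 'out'.
// Returns false if the value could not be obtained.
bool query_available_physical(std::uint64_t& out)
{
#ifdef _WIN32
    MEMORYSTATUSEX status;
    status.dwLength = sizeof(status); // must be set before the call
    if (!GlobalMemoryStatusEx(&status))
        return false;
    out = status.ullAvailPhys;
    return true;
#else
    (void)out; // not implemented outside Windows in this sketch
    return false;
#endif
}
```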

By default, the allocation function is set to speedymalloc, which behaves like regular malloc without any delay. As soon as the working set limit is reached, sloppymalloc is installed instead. While the system is flushing, stopmalloc is active, which prevents the main thread from performing further allocations.

With this extension, the process is no longer bound by the working set limit and can fully utilize the pagefile space. It is important to understand that this handling does not affect the implementation of the main process and also allows multiple threads to be controlled at a time. On the downside, an additional core is consumed for running the observer code.

Relying on a sufficiently large pagefile still has its limits with regard to available space. In addition, the pagefile is not exclusively available to the worker process; it is shared with all other processes running on the system at the same time. When swap space runs out, the system starts to terminate running processes at random and finally ends the user process. Increasing the pagefile size is not possible during uptime and requires a reboot of the system.
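The switching rule described above can be written as a small pure function, which makes it easy to reason about independently of the threading. This is one possible reading of the text: the enum AllocMode, the function select_mode and the parameter reserve are hypothetical names, and treating the limit as "available physical memory has dropped to the reserve" is an assumption.

```cpp
#include <cstdint>

// Hypothetical names; the article only names the three malloc variants.
enum class AllocMode { Speedy, Sloppy, Stopped };

// avail_phys: physical memory currently available, in bytes.
// reserve:    headroom that should stay free, in bytes.
// flushing:   true while the observer is flushing the working set.
inline AllocMode select_mode(std::uint64_t avail_phys, std::uint64_t reserve, bool flushing)
{
    if (flushing)
        return AllocMode::Stopped;  // would install stopmalloc
    if (avail_phys <= reserve)
        return AllocMode::Sloppy;   // would install sloppymalloc
    return AllocMode::Speedy;       // would install speedymalloc
}
```

The observer would evaluate this once per measurement period and store the matching function in mallocfct.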

The following section will therefore discuss how to eliminate the system swapfile limitation.

Dynamically Extending the Process Address Space

To extend the virtual address space available to a process, memory-mapped files are the technique of choice. They allow swap space to be created and added to the process dynamically at runtime, without any system reconfiguration. Each memory-mapped file provides a new section of virtual address space that can be accessed directly. Be aware that memory-mapped files are most useful in x64 applications, which have a nearly unlimited virtual address space.

We therefore implemented a simple memory manager that hooks into the aforementioned mallocfct routine and can span multiple memory-mapped regions. New swap files are created and added to the process's virtual address space as needed. The implementation is kept very simple and uses a first-fit strategy to reuse freed memory.
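Creating one such region could look like the sketch below. The names MappedRegion, map_swap_file and unmap_swap_file are hypothetical and not taken from the project's mmap.cpp. The Windows branch uses CreateFileA, CreateFileMappingA and MapViewOfFile; the POSIX branch is included only so the sketch builds on other platforms.

```cpp
#include <cstddef>
#ifdef _WIN32
#include <windows.h>
#else
#include <fcntl.h>
#include <sys/mman.h>
#include <unistd.h>
#endif

struct MappedRegion { void* base = nullptr; std::size_t size = 0; };

// Creates (or truncates) the file at 'path' and maps 'size' bytes of it.
// Returns an empty region on failure.
MappedRegion map_swap_file(const char* path, std::size_t size)
{
    MappedRegion region;
#ifdef _WIN32
    HANDLE file = CreateFileA(path, GENERIC_READ | GENERIC_WRITE, 0, nullptr,
                              CREATE_ALWAYS, FILE_ATTRIBUTE_NORMAL, nullptr);
    if (file == INVALID_HANDLE_VALUE) return region;
    const unsigned long long total = size;
    HANDLE mapping = CreateFileMappingA(file, nullptr, PAGE_READWRITE,
                                        static_cast<DWORD>(total >> 32),
                                        static_cast<DWORD>(total & 0xFFFFFFFFull),
                                        nullptr);
    if (mapping != nullptr) {
        region.base = MapViewOfFile(mapping, FILE_MAP_ALL_ACCESS, 0, 0, size);
        CloseHandle(mapping); // the view keeps the mapping object alive
    }
    CloseHandle(file);
#else
    int fd = open(path, O_RDWR | O_CREAT | O_TRUNC, 0600);
    if (fd < 0) return region;
    if (ftruncate(fd, static_cast<off_t>(size)) == 0) {
        void* p = mmap(nullptr, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        if (p != MAP_FAILED) region.base = p;
    }
    close(fd); // the mapping stays valid after the descriptor is closed
#endif
    if (region.base) region.size = size;
    return region;
}

// Releases the view; the backing file itself is left on disk.
void unmap_swap_file(MappedRegion& region)
{
    if (!region.base) return;
#ifdef _WIN32
    UnmapViewOfFile(region.base);
#else
    munmap(region.base, region.size);
#endif
    region = MappedRegion{};
}
```

Each successful call yields a fresh range of addresses backed by its own file, which is what allows the heap to grow beyond the system pagefile.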
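To illustrate the first-fit idea, here is a deliberately small sketch. It is not the project's vheap.cpp: the class FirstFitArena and the Block record are hypothetical, a std::vector<char> stands in for a memory-mapped region, and neighbouring free blocks are not merged. As in the article's implementation, no alignment is performed.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical bookkeeping record for one block of the arena.
struct Block { std::size_t offset; std::size_t size; bool free; };

class FirstFitArena {
public:
    explicit FirstFitArena(std::size_t bytes) : storage_(bytes)
    {
        blocks_.push_back(Block{0, bytes, true}); // one large free block
    }

    // Returns the first free block that is large enough, splitting off the rest.
    void* allocate(std::size_t n)
    {
        if (n == 0) return nullptr;
        for (std::size_t i = 0; i < blocks_.size(); ++i) {
            if (blocks_[i].free && blocks_[i].size >= n) {
                const std::size_t off = blocks_[i].offset;
                const std::size_t rest = blocks_[i].size - n;
                blocks_[i].size = n;
                blocks_[i].free = false;
                if (rest > 0)
                    blocks_.insert(blocks_.begin() + i + 1, Block{off + n, rest, true});
                return storage_.data() + off;
            }
        }
        return nullptr; // no block fits; a real heap would map another file here
    }

    // Marks the block starting at p as free again; returns false if p is unknown.
    bool release(void* p)
    {
        for (Block& b : blocks_) {
            if (!b.free && storage_.data() + b.offset == p) {
                b.free = true;
                return true;
            }
        }
        return false;
    }

private:
    std::vector<char> storage_;  // stand-in for a memory-mapped region
    std::vector<Block> blocks_;  // ordered by offset
};
```

First fit is cheap to implement but tends to fragment; the sketch keeps that trade-off visible by never coalescing free neighbours.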

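#include <atomic>

// Throttled variant, now served from the memory-mapped heap.
void* sloppymalloc(size_t size_p)
{
    Sleep(sleepCount);
    return Heap_g.allocateNextMemBlock(size_p);
}

// Written by the observer thread and polled by the allocating threads, hence atomic.
std::atomic<bool> stopMallocs(false);

// Blocking variant: yields until the observer clears stopMallocs.
void* stopmalloc(size_t size_p)
{
    while (stopMallocs)
        Sleep(0);
    return Heap_g.allocateNextMemBlock(size_p);
}

// Default variant without any delay.
void* speedymalloc(size_t size_p)
{
    return Heap_g.allocateNextMemBlock(size_p);
}

// Returns a block to the heap; the block header precedes the user pointer.
void movetofree(void* p)
{
    if (p == nullptr)
        return; // releasing a null pointer is a no-op
    char* p_ = (char*)p;
    Heap_g.freeMemBlock((__s_block*)(p_ - BLOCK_SIZE));
}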

In theory, this approach removes any memory limitation. In practice, however, consuming the working set still resulted in system instability. Monitoring the processes showed that the working set of the executing process stays low while the overall system memory is consumed. Again, as soon as the maximum working set level is reached, the system starts to slow down and finally stops responding.

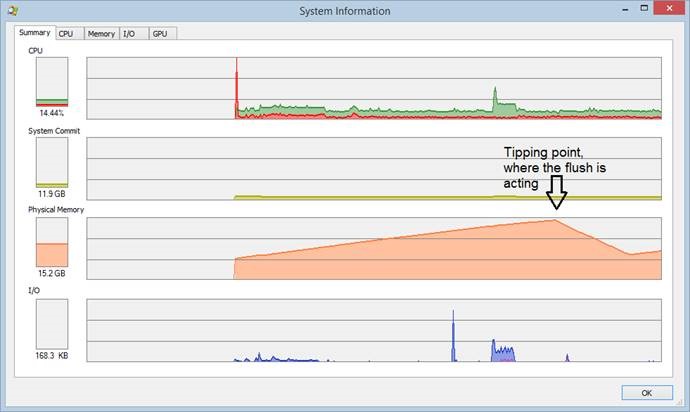

The solution to this issue was, that the observer thread explicitly inquires system flushing by calling the Windows API function SetProcessWorkingSetSize(HANDLE, -1, -1) at the time the allowed working space limit is reached. In our tests this was set to 1 Gbyte below the available physical memory.

It was also observed, that different Versions of Windows behave differently. While under Windows XP/7 and Wine calls to VirtualUnlock allow to release the mappings from workspace, Windows 8 and 10 requires full EmptyWorkingSet to release mapped pages.

Figure 1: Consumed system workspace over time
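The flush request itself is a single API call. The sketch below wraps it in a hypothetical helper named trim_working_set; only SetProcessWorkingSetSize and GetCurrentProcess are actual Windows functions. Outside Windows the helper does nothing and reports failure, so the sketch still compiles there.

```cpp
#ifdef _WIN32
#include <windows.h>
#endif

// Asks the system to trim the working set of the calling process.
// Returns true if the request was accepted; always false outside Windows.
bool trim_working_set()
{
#ifdef _WIN32
    // Passing (SIZE_T)-1 for both limits requests removal of as many pages
    // as possible from the working set.
    return SetProcessWorkingSetSize(GetCurrentProcess(),
                                    static_cast<SIZE_T>(-1),
                                    static_cast<SIZE_T>(-1)) != 0;
#else
    return false;
#endif
}
```

In the scheme described here, the observer would install stopmalloc, call the helper, and switch back to one of the other variants once the flush has completed.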

In the final step, the outlined techniques were integrated into a std::allocator-style template so that they can be used conveniently.

Integration in the std Template Library Model

Providing the observed malloc and the memory-mapped file management through a std::allocator makes it possible to limit the outlined concept to specific, memory-intensive instances of standard containers. Other data structures and containers are not affected.

To make the allocator use the memory-mapped heap, allocate and deallocate have been implemented as follows:

pointer allocate(size_type count, const_pointer = 0)
{
    if (count > max_size()) { throw std::bad_alloc(); }
    return static_cast<pointer>(oheap::get()->malloc(count * sizeof(type)));
}

void deallocate(pointer ptr, size_type)
{
    oheap::get()->free(ptr);
}
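For readers who want to see how these two functions fit into a complete allocator, the following is a minimal C++11-style sketch. It is not the project's allocator.h: heap_allocator and SketchHeap are hypothetical names, and SketchHeap simply forwards to std::malloc as a stand-in for the observed heap while counting live blocks.

```cpp
#include <cstddef>
#include <cstdlib>
#include <limits>
#include <new>
#include <vector>

// Stand-in for the observed heap; counts live blocks for demonstration.
struct SketchHeap {
    static std::size_t& live() { static std::size_t n = 0; return n; }
    static void* malloc(std::size_t n) { ++live(); return std::malloc(n); }
    static void free(void* p) { --live(); std::free(p); }
};

template <class T, class Heap = SketchHeap>
struct heap_allocator {
    using value_type = T;

    heap_allocator() noexcept = default;
    template <class U>
    heap_allocator(const heap_allocator<U, Heap>&) noexcept {}

    T* allocate(std::size_t count)
    {
        // Guard against overflow of count * sizeof(T).
        if (count > std::numeric_limits<std::size_t>::max() / sizeof(T))
            throw std::bad_alloc();
        const std::size_t bytes = count * sizeof(T);
        void* p = Heap::malloc(bytes != 0 ? bytes : 1);
        if (p == nullptr)
            throw std::bad_alloc();
        return static_cast<T*>(p);
    }

    void deallocate(T* p, std::size_t) noexcept { Heap::free(p); }
};

// Stateless allocators of the same heap are interchangeable.
template <class T, class U, class H>
bool operator==(const heap_allocator<T, H>&, const heap_allocator<U, H>&) noexcept { return true; }
template <class T, class U, class H>
bool operator!=(const heap_allocator<T, H>&, const heap_allocator<U, H>&) noexcept { return false; }
```

A container then opts in per instance, for example std::vector<int, heap_allocator<int>>, while all other containers keep using the default allocator.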

The project provides the required components of the implementation, consisting of:

- the observer thread (oheap.cpp)

- the memory manager (vheap.cpp)

- the file memory mapping (mmap.cpp)

- the stl allocator interface (allocator.h)

To make general use of the observed malloc routines in an application, the global new and delete operators need to be replaced:

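#include <new>

void* operator new(std::size_t n)
{
    // A zero-size request still needs a unique, non-null pointer.
    void* p = mallocfct(n != 0 ? n : 1);
    if (p == nullptr)
        throw std::bad_alloc(); // operator new must not return a null pointer
    return p;
}

void operator delete(void* p) noexcept
{
    if (p != nullptr) // deleting a null pointer must be a no-op
        movetofree(p);
}

void* operator new[](std::size_t s)
{
    return operator new(s);
}

void operator delete[](void* p) noexcept
{
    operator delete(p);
}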

Background

Readers of this article should have a basic knowledge of C++11 and the STL containers, and should understand the principles of threading.

Using the Code

The allocator is simply passed as an additional template argument to a standard container. The heap template argument refers to the observed memory manager.

#include <allocator.h>
#include <set>

int main()
{
    std::multiset<Example, std::less<Example>, allocator<Example, heap<Example> > > foo;

    for (int iter = 0; iter < 1000000000; ++iter)
    {
        foo.insert(Example(iter + 3));
        foo.insert(Example(iter + 1));
        foo.insert(Example(iter + 4));
        foo.insert(Example(iter + 2));
    }

    for (std::multiset<Example, std::less<Example>,
             allocator<Example, heap<Example> > >::const_iterator iter(foo.begin());
         iter != foo.end(); ++iter)
    {
        ;
    }

    return 0;
}

For your local installation, make sure that the VFILE_NAME #define refers to a writable folder with sufficient free space on your system. The maximum size of a single allocatable memory block is defined by the VFILE_SIZE #define. Note that the current implementation does not perform any memory alignment.
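Should alignment be needed, one common approach is to round every request up to a multiple of the strictest fundamental alignment. The helpers below are a sketch of that idea only; align_up and padded_request are hypothetical names and are not part of the project. Padding the size helps only if the start of the region and the block header size are multiples of the same alignment.

```cpp
#include <cstddef>

// Rounds size up to the next multiple of alignment.
// alignment must be a power of two.
constexpr std::size_t align_up(std::size_t size, std::size_t alignment)
{
    return (size + alignment - 1) & ~(alignment - 1);
}

// Hypothetical use: pad each request before handing it to the heap.
constexpr std::size_t padded_request(std::size_t size)
{
    return align_up(size, alignof(std::max_align_t));
}
```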

The attached project provides the required mmapallocator.dll library.

Special Credits

- Dr. Thomas Chust - File memory mapping layer and analysis of basic memory management behaviour

- Pritam Zope - Providing the basic outline for the sbrk memory manager implementation

- Joe Ruether - Sophisticated template implementation of the std::allocator

Points of Interest

There are major limitations in the virtual memory management of recent versions of Windows with regard to handling processes with large memory consumption.

History

- 18th May, 2020: Initial version

- 21st May, 2020: Fixes in the observer thread

- 24th May, 2020: Dynamically enlarge vmmap file sizes