Here we'll look at the stages and tools involved in recognizing a face, walk through the Python class that implements our face detector, and see the code in action on a sample image.

Introduction

If you’ve seen the Minority Report movie, you probably remember the scene where Tom Cruise walks into a Gap store. A retinal scanner reads his eyes and plays a customized ad for him. Well, this is 2020. We don’t need retinal scanners, because we have Artificial Intelligence (AI) and Machine Learning (ML)!

In this series, we’ll show you how to use Deep Learning to perform facial recognition, and then – based on the face that was recognized – use a Neural Network Text-to-Speech (TTS) engine to play a customized ad. You are welcome to browse the code here on CodeProject or download the .zip file to browse the code on your own machine.

We assume that you are familiar with the basic concepts of AI/ML, and that you can find your way around Python.

Stages and Tools

The first four articles in this series correspond to the four stages in the process of recognizing a face, which are:

- Face detection – detection of all human faces in an image or video and extraction (cropping) of these faces

- Dataset processing – a stage included in most ML processes: fetching and parsing the data, as well as normalizing and categorizing the dataset variables

- Design, implementation, and training of a Convolutional Neural Network (CNN)

- The actual face recognition with the use of the CNN’s prediction ability

As we cover face recognition and TTS, we’ll use the following tools:

- Python – the programming language commonly used in AI/ML

- TensorFlow (TF) – the core open source library that helps you develop and train ML models

- Keras – a high-level API for building and training neural networks

- NumPy – a package for scientific computations in Python

- scikit-image (skimage) – a collection of algorithms for image processing

Detect, Extract, Resize, Plot...

So, face detection – time to dive into some code. Here is a Python class that implements our face detector:

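```python
from PIL import Image
from matplotlib import pyplot
from mtcnn import MTCNN
from numpy import asarray
from skimage import io

from util import constant  # project module holding all paths and constants


class MTCnnDetector:

    def __init__(self, image_path):
        # Pretrained MTCNN face detector from the mtcnn package
        self.detector = MTCNN()
        # Load the image as a NumPy array (H, W, 3 for a typical RGB JPEG)
        self.image = io.imread(image_path)
```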

The name of our class is MTCnnDetector because the pretrained detector we’ll use is MTCNN (Multi-Task Cascaded Convolutional Networks). As the name suggests, MTCNN follows the principle of multi-task learning: it is trained to solve several related tasks at the same time, namely face/non-face classification, bounding-box regression, and facial landmark localization. (Its ability to find several faces in one image comes from scanning the image at multiple scales, not from the multi-task design itself.) Using MTCNN, we detect the bounding box of each face in an image, along with five facial landmarks per face: the centers of the two eyes, the nose, and the two corners of the mouth. Detection runs as a cascade of three CNNs: the first proposes candidate bounding boxes with probability scores, and each subsequent network rejects false candidates and refines the remaining boxes, with overlapping candidates merged by non-maximum suppression.
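MTCNN’s stages produce many overlapping candidate boxes for the same face, and the standard way to collapse them into one box per face is non-maximum suppression (NMS). The following is a minimal, generic NumPy sketch of greedy IoU-based NMS, not the mtcnn package’s own code; the function name and the 0.5 threshold are illustrative assumptions. Boxes use the same [x, y, width, height] layout as the 'box' entries returned by detect_faces().

```python
import numpy as np


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS (illustrative sketch, not the mtcnn package's code).

    boxes: (N, 4) array-like of [x, y, width, height]; scores: (N,).
    Returns the indices of the boxes to keep, highest score first.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # Convert [x, y, w, h] to corner coordinates.
    x1, y1 = boxes[:, 0], boxes[:, 1]
    x2, y2 = x1 + boxes[:, 2], y1 + boxes[:, 3]
    areas = boxes[:, 2] * boxes[:, 3]

    order = scores.argsort()[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Intersection of the best box with every remaining box.
        ix1 = np.maximum(x1[best], x1[rest])
        iy1 = np.maximum(y1[best], y1[rest])
        ix2 = np.minimum(x2[best], x2[rest])
        iy2 = np.minimum(y2[best], y2[rest])
        inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
        iou = inter / (areas[best] + areas[rest] - inter)
        # Drop every remaining box that overlaps the best one too much.
        order = rest[iou <= iou_threshold]
    return keep


boxes = [[10, 10, 50, 50], [12, 12, 50, 50], [100, 100, 40, 40]]
scores = [0.9, 0.8, 0.95]
print(non_max_suppression(boxes, scores))  # → [2, 0]
```

The two near-identical boxes around (10, 10) collapse to the higher-scoring one, while the separate box at (100, 100) survives.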

This is the main method of the class:

```python
    def process_image(self, plot=False):
        faces = self.__detect_face()
        resized_face_list = []
        for f in faces:
            # Crop the bounding box, then scale it to a fixed square size
            extracted_face = self.__extract_face(f)
            resized_face = self.__resize_img_to_face(extracted_face)
            resized_face_list.append(resized_face)
            if plot:
                self.__plot_face(resized_face)
        return resized_face_list
```

The method is simple: it calls __detect_face() to get all faces in the image whose path was passed to the constructor, crops and resizes each face, and returns the list of resized images. If plot is True, it also plots each face as it goes. It relies on the following private helper methods:
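process_image() keeps every detection, however uncertain. Each dict returned by the mtcnn package’s detect_faces() also carries a 'confidence' score (and a 'keypoints' dict), so one option is to drop weak detections before cropping. The helper below is a hypothetical addition, not part of the article’s class; the 0.9 cut-off is an arbitrary assumption you would tune, and the sample detections are made-up input that only mimics the output format.

```python
def filter_faces(faces, min_confidence=0.9):
    """Keep only detections whose 'confidence' meets the threshold.

    `faces` is assumed to be a list of dicts shaped like the output of
    mtcnn's MTCNN.detect_faces(), e.g. {'box': [x, y, w, h], 'confidence': 0.99, ...}.
    """
    return [f for f in faces if f['confidence'] >= min_confidence]


# Made-up detections, for illustration only.
detections = [
    {'box': [40, 30, 80, 100], 'confidence': 0.998},
    {'box': [200, 15, 12, 14], 'confidence': 0.71},
]

kept = filter_faces(detections)
print([f['box'] for f in kept])  # → [[40, 30, 80, 100]]
```

Inside the class, this would amount to writing `faces = filter_faces(self.__detect_face())` at the top of process_image().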

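```python
    def __detect_face(self):
        # List of dicts, one per face, with 'box', 'confidence' and 'keypoints'
        return self.detector.detect_faces(self.image)

    def __extract_face(self, face):
        x1, y1, width, height = face['box']
        x2, y2 = x1 + width, y1 + height
        # MTCNN may return slightly negative x/y for faces touching the border;
        # a negative slice start would wrap around, so clamp it to 0.
        x1, y1 = max(0, x1), max(0, y1)
        # NumPy images are indexed [row, column], i.e. [y, x]
        return self.image[y1:y2, x1:x2]

    def __resize_img_to_face(self, face):
        image = Image.fromarray(face)
        image = image.resize((constant.DETECTOR_FACE_DIM, constant.DETECTOR_FACE_DIM))
        return asarray(image)

    def __plot_face(self, face):
        pyplot.imshow(face)
        pyplot.show()
```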

The __detect_face() method simply delegates to the detector’s detect_faces() method. The __extract_face() method crops the region of the image that corresponds to a returned bounding box. The __resize_img_to_face() method takes that crop and resizes it to a predefined square size of constant.DETECTOR_FACE_DIM pixels per side. Finally, __plot_face() displays the resulting face with matplotlib.
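The slice in __extract_face() puts y first because NumPy image arrays are indexed as [row, column] (height, width, channels). The small sketch below uses a synthetic array rather than a real photo. It checks that a box of width w and height h yields a crop of shape (h, w, 3), and it shows why clamping the box at 0 is worthwhile: a negative start index in a Python slice counts from the end of the array instead of raising an error. The crop_box name is hypothetical.

```python
import numpy as np


def crop_box(image, box):
    """Crop an (H, W, C) array to an [x, y, width, height] box (illustrative helper)."""
    x1, y1, width, height = box
    x2, y2 = x1 + width, y1 + height
    # Clamp the top-left corner; slicing already truncates x2/y2 past the edge.
    x1, y1 = max(0, x1), max(0, y1)
    # Rows (y) come first, then columns (x).
    return image[y1:y2, x1:x2]


image = np.zeros((240, 320, 3), dtype=np.uint8)  # synthetic 320x240 RGB image
print(crop_box(image, [50, 20, 100, 60]).shape)  # → (60, 100, 3)
print(crop_box(image, [-5, 0, 100, 60]).shape)   # → (60, 95, 3)

# Without clamping, x = -5 becomes index 315 and the crop is silently empty:
print(image[0:60, -5:95].shape)                  # → (60, 0, 3)
```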

… and See What Happens

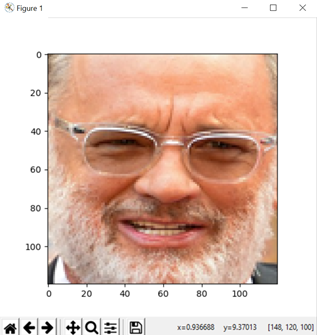

Let’s see this code in action using a sample image from Wikipedia.

```python
face_detector = MTCnnDetector(constant.CELEBRITY_VGG_PATH)
resized_faces = face_detector.process_image(plot=True)
```

In the above code, constant is the project’s constants module: a single file that serves as a container for all paths and constants in the project. CELEBRITY_VGG_PATH holds the path of the input image. Let’s run the code and check out the plot of the detected face. Here is what we see, plotted using matplotlib.
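Plotting is handy for a visual check, but if the crops are meant to feed a training set, they have to be written to disk. The sketch below saves each array returned by process_image() as a PNG using Pillow’s Image.fromarray() and Image.save(). The save_faces name, the output directory, and the file-naming scheme are hypothetical choices, not part of the original project.

```python
import os

import numpy as np
from PIL import Image


def save_faces(faces, out_dir, prefix='face'):
    """Write each face array (H, W, 3, uint8) to out_dir as a PNG.

    Returns the list of written file paths. The directory layout and
    file names are illustrative, not part of the original project.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for i, face in enumerate(faces):
        path = os.path.join(out_dir, f'{prefix}_{i:03d}.png')
        Image.fromarray(np.asarray(face, dtype=np.uint8)).save(path)
        paths.append(path)
    return paths
```

With the detector above, a call such as `save_faces(resized_faces, 'faces_out')` would write face_000.png, face_001.png, and so on.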

Next Step?

In this article, we’ve gone over the steps to detect faces in an image. This stage is necessary if the images for training the CNN are not cropped to subject faces in advance. In the next article, we’ll talk about preparing a dataset for feeding the correct data to a CNN. Stay tuned!