Here we’ll investigate an alternative approach: using a pre-trained model. We’ll take a CNN that was previously trained on a dataset of over a million images and adapt it to solve our problem.

Introduction

If you’ve seen the Minority Report movie, you probably remember the scene where Tom Cruise walks into a Gap store. A retinal scanner reads his eyes, and plays a customized ad for him. Well, this is 2020. We don’t need retinal scanners, because we have Artificial Intelligence (AI) and Machine Learning (ML)!

In this series, we’ll show you how to use Deep Learning to perform facial recognition, and then – based on the face that was recognized – use a Neural Network Text-to-Speech (TTS) engine to play a customized ad. You are welcome to browse the code here on CodeProject or download the .zip file to browse the code on your own machine.

We assume that you are familiar with the basic concepts of AI/ML, and that you can find your way around Python.

Why Use Somebody Else’s CNN?

Up to this point, we’ve done everything required to design, implement, and train our own CNN for face recognition. In this article, we’ll examine an alternative approach: using one of the pre-trained VGG (Visual Geometry Group at Oxford) models. These CNNs have been designed and trained over large datasets, with excellent results.

Why should we reuse a CNN that someone else has designed and trained for their dataset, obviously different from ours? Well, the main reason is that someone spent a lot of CPU/GPU time training those models on huge datasets. We can make good use of this training. The idea of reusing an already trained CNN in another model is known as "transfer learning."

Some well-known pre-trained models are VGG16, VGG19, ResNet50, InceptionV3, and Xception. Of these, only VGG16 and VGG19 come from the Visual Geometry Group. They have different architectures, and all of them are available in Keras. Each of these models was trained on an ImageNet subset of about 1.2 million images.

In this article, we’ll adapt the VGG16 model.

The VGG16 architecture diagram shows that the input for this CNN is defined as (224, 224, 3). Therefore, if we want to adapt this CNN to our problem, we have two options. We can crop and resize our images to 224 x 224. Alternatively, we can change the input layer of VGG16 to (our_img_width, our_img_height, 3). Note that the pre-trained ImageNet weights expect three input channels, so grayscale images have to be replicated across three channels before they are fed to the model.
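
To see what a non-default input size means further down the network, the sketch below computes the shape of VGG16’s last pooling layer (block5_pool) for a given input. It relies on two properties of the architecture: every convolution uses 'same' padding, and each of the five blocks ends with a 2 x 2 max pooling with stride 2. The function name is our own and is not part of Keras.

```python
def vgg16_block5_pool_shape(height, width):
    """Return the (height, width, channels) of VGG16's block5_pool output.

    Convolutions in VGG16 use 'same' padding, so only the five 2x2
    max-pooling layers (stride 2) shrink the feature map.
    """
    for _ in range(5):          # one pooling layer per block
        height //= 2            # pooling floors odd sizes
        width //= 2
    return height, width, 512   # block 5 has 512 filters


if __name__ == "__main__":
    for size in (224, 128, 100):
        print(size, "->", vgg16_block5_pool_shape(size, size))
```

For the default 224 x 224 input this gives a 7 x 7 x 512 feature map. Smaller inputs shrink it quickly, which matters for any layers appended after block5_pool.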

Note that VGG16’s output layer consists of 1,000 classes. Since our problem does not have that many possible classes, we must change the shape of the output layer.

Implement VGG16

We’ll use VGG16 as the base model and derive from it a new CNN – VGGNet. This new CNN will have the layers and weights of VGG16 plus some modifications in the input layer (to adapt it to our image width, height, and color scheme), as well as in the output layer (to adapt it to our number of classes).

To implement our custom VGGNet model, let’s create a class that inherits from MLModel, same as we did in the previous article of this series. In this class, named VggModel, all methods except for init_model() will have the same implementation they had in our ConvolutionalModel class. This is what the code looks like:

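    def init_model(self):
        # Load the VGG16 convolutional base with ImageNet weights.
        # include_top=False drops the original 1,000-class classifier.
        # pooling and classes do not affect the final model here,
        # because we branch off at block5_pool below.
        base_model = VGG16(weights=constant.IMAGENET, include_top=False,
                           input_tensor=Input(shape=(constant.IMG_WIDTH,
                                                     constant.IMG_HEIGHT, 3)),
                           pooling='max', classes=15)
        base_model.summary()

        # Freeze the pre-trained layers so their weights are not updated
        for layer in base_model.layers:
            layer.trainable = False

        # Append a small trainable head to the last pooling layer of VGG16
        x = base_model.get_layer('block5_pool').output
        x = Convolution2D(64, 3)(x)
        x = MaxPooling2D(pool_size=(2, 2))(x)
        x = Flatten()(x)
        x = Dense(constant.NUMBER_FULLY_CONNECTED, activation=constant.RELU_ACTIVATION_FUNCTION)(x)
        # One output per class
        x = Dense(self.n_classes, activation=constant.SOFTMAX_ACTIVATION_FUNCTION)(x)

        self.vgg = Model(inputs=base_model.input, outputs=x)
        self.vgg.summary()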

Note that we’ve appended the following layers to the end of the CNN: Convolution2D, MaxPooling2D, Flatten, and two Dense layers. The purpose of this "mini-CNN" is to connect to VGG16’s block5_pool output and fit the model to our problem with the correct number of classes.
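
The appended layers shrink the feature map further, so it can help to trace the sizes by hand. The sketch below follows one spatial dimension of a square feature map through the Conv2D, MaxPooling2D, and Flatten layers. It assumes the Keras defaults for these layers ('valid' padding, stride 1 for the convolution, and a pooling stride equal to the pool size). The helper name is hypothetical.

```python
def head_output_sizes(feature_size, kernel=3, pool=2, filters=64):
    """Trace one spatial dimension through Conv2D -> MaxPooling2D -> Flatten.

    Assumes a square feature map, a 'valid' convolution with stride 1,
    and non-overlapping pooling (stride equal to the pool size).
    """
    after_conv = feature_size - kernel + 1      # 'valid' convolution
    after_pool = after_conv // pool             # non-overlapping pooling
    if after_conv < 1 or after_pool < 1:
        raise ValueError("feature map too small for the appended layers")
    flattened = after_pool * after_pool * filters
    return after_conv, after_pool, flattened


if __name__ == "__main__":
    # block5_pool is 7 x 7 for a 224 x 224 input
    print(head_output_sizes(7))
```

With a 7 x 7 block5_pool output, the convolution yields 5 x 5, the pooling 2 x 2, and Flatten produces 256 values. Under these assumptions, a block5_pool output smaller than 4 x 4 (an input below 128 pixels per side) leaves nothing for the pooling layer to work on.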

We’ve also set the layer.trainable attribute to False for every layer of the base model, which freezes the pre-trained weights. Only the newly added layers are updated during the additional training, which we have to carry out to fit them. You can obtain a complete description of the modified model by calling self.vgg.summary().
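
To get a feel for how much of the model is frozen, the sketch below counts parameters by hand. The list of VGG16 convolutions follows the published architecture. The flattened size, the width of the fully connected layer, and the class count are hypothetical stand-ins, since the values of the constants are not shown here.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of a square 2D convolution."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


# (in_channels, out_channels) of the 13 convolutions in VGG16's five blocks
VGG16_CONVS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]

# All of these stay fixed when layer.trainable is False
frozen = sum(conv_params(3, i, o) for i, o in VGG16_CONVS)

# Hypothetical values, chosen only for illustration
flattened = 256          # 2 x 2 x 64, as for a 224 x 224 input
fully_connected = 128    # stand-in for constant.NUMBER_FULLY_CONNECTED
n_classes = 15           # number of subjects in the Yale Face dataset

# Only the appended layers are trained
trainable = (conv_params(3, 512, 64)
             + dense_params(flattened, fully_connected)
             + dense_params(fully_connected, n_classes))

print("frozen:   ", frozen)
print("trainable:", trainable)
```

Under these assumptions, roughly 14.7 million parameters are frozen and only a few hundred thousand are trained, which is one reason the additional training is comparatively cheap.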

We use the following loss and optimizer functions in the constructor of the class:

    def __init__(self, dataSet=None):
        super().__init__(dataSet)
        opt = keras.optimizers.Adam(learning_rate=0.001)
        self.vgg.compile(loss=keras.losses.binary_crossentropy,
                         optimizer=opt,
                         metrics=[constant.METRIC_ACCURACY])
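
One thing worth checking with this configuration is how the accuracy figure is computed. With a softmax output over several classes, categorical cross-entropy is the more common choice of loss, and some Keras versions resolve the generic accuracy metric to an element-wise binary accuracy when the loss is binary cross-entropy. The plain-Python sketch below (our own helper functions, not Keras code) shows how far the two definitions can diverge for a one-hot target with 15 classes.

```python
def categorical_accuracy(y_true, y_pred):
    """Fraction of samples whose highest-scoring class is the true class."""
    hits = sum(t.index(max(t)) == p.index(max(p))
               for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of individual outputs on the correct side of the threshold."""
    total = correct = 0
    for t_row, p_row in zip(y_true, y_pred):
        for t, p in zip(t_row, p_row):
            correct += int((p > threshold) == (t == 1))
            total += 1
    return correct / total


n_classes = 15
# One sample whose true class is 0 ...
y_true = [[1] + [0] * (n_classes - 1)]
# ... and a prediction that favors class 1, which is wrong
y_pred = [[0.05, 0.30] + [0.05] * (n_classes - 2)]

print("categorical:", categorical_accuracy(y_true, y_pred))
print("binary:     ", binary_accuracy(y_true, y_pred))
```

Here the predicted class is wrong, yet 14 of the 15 outputs fall on the correct side of the threshold, so the element-wise score is 14/15 while the categorical accuracy is 0. If constant.METRIC_ACCURACY maps to the generic accuracy string (an assumption), comparing both metrics on a validation set would confirm which one is being reported.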

Apply to the Yale Dataset

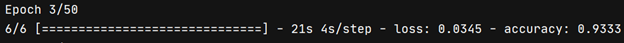

Now let’s apply the VGGNet model to our Yale Face dataset. Wow: we achieved an accuracy of over 93% in only three epochs!

Just to remind you: the CNN we developed from scratch gave us about 85% accuracy after 50 epochs. So using a pre-trained model let the training converge in far fewer epochs.

Next Step?

Well, that’s it: we are done with the face recognition part. The next article, the last in this series, will focus on Text-to-Speech using Deep Learning. We’ll apply TTS to select a message to play to the person whose face we just recognized. Stay tuned!