Here we’ll use TTS to play a personalized message to the person whose face we just recognized.

Introduction

If you’ve seen the Minority Report movie, you probably remember the scene where Tom Cruise walks into a Gap store. A retinal scanner reads his eyes, and a customized ad plays just for him. Well, this is 2020. We don’t need retinal scanners, because we have Artificial Intelligence (AI) and Machine Learning (ML)!

In this series, we’ll show you how to use Deep Learning to perform facial recognition, and then – based on the face that was recognized – use a Neural Network Text-to-Speech (TTS) engine to play a customized ad. You are welcome to browse the code here on CodeProject or download the .zip file to browse the code on your own machine.

We assume that you are familiar with the basic concepts of AI/ML, and that you can find your way around Python.

TTS in a Nutshell

In the previous articles of this series, we’ve shown you how to recognize a person using the face recognition capabilities of a Convolutional Neural Network (CNN). So there you go: a person walks into your store, a camera catches their face, and the smart CNN behind the scenes tells you who the person is. Now what? Let’s assume that the act of face recognition triggers some business logic, which fetches a group of ads from a database and feeds the selected one to a Text-to-Speech (TTS) system. This business logic, probably fed by a data science or Big Data process, links the person’s identity to their buying habits… and bingo! The system selects and plays a relevant ad.

For example, if our visitor is identified as Bob, and our customer database says that Bob bought a pair of shoes last time he stopped by the store, we could play this ad: "Hi Bob! How are those dock shoes working out for you? If you need some socks to go with them, our alpaca wool socks are on sale for $5.99 today!"
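The business logic itself is outside the scope of this series, but a minimal sketch makes the idea concrete. The Python below assumes a hypothetical in-memory `Customer` record and a hypothetical `PROMOTIONS` table that maps a past purchase to a follow-up offer; a real system would query a customer database instead. It turns the name produced by face recognition into the ad text from the Bob example above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:
    """Hypothetical customer record; a real system would load this from a database."""
    name: str
    last_purchase: Optional[str] = None


# Hypothetical mapping: past purchase -> (category, product, sale price).
PROMOTIONS = {
    "dock shoes": ("socks", "alpaca wool socks", "$5.99"),
}


def select_ad(customer: Customer) -> str:
    """Build a personalized ad text for a recognized customer."""
    promo = PROMOTIONS.get(customer.last_purchase)
    if promo is None:
        # No purchase history (or no matching promotion): fall back to a generic greeting.
        return f"Hi {customer.name}! Welcome back. Check out today's deals!"
    category, product, price = promo
    return (
        f"Hi {customer.name}! How are those {customer.last_purchase} working out for you? "
        f"If you need some {category} to go with them, our {product} are on sale for {price} today!"
    )


customers = {"Bob": Customer("Bob", last_purchase="dock shoes")}
recognized_name = "Bob"  # stands in for the output of the face recognition step
ad_text = select_ad(customers[recognized_name])
print(ad_text)
```

The resulting string is what would be handed to the TTS engine.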

Where could we apply TTS besides an ad generator? In many areas, such as voice assistants, accessibility tools for sight-impaired people, communication aids for people who cannot speak, screen readers, automatic communication systems, robotics, audio books, and so on.

The goal of TTS is not only to generate speech from text, but also to produce speech that sounds human, with the intonation, volume, and cadence of a human voice. One of the most popular neural TTS models today is Google’s Tacotron2.

Tacotron2 – Some Nuts and Bolts

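Tacotron2 relies on CNNs as well as on Recurrent Neural Networks (RNNs), a family of artificial neural networks designed for sequential data such as text, speech, and time series. An RNN processes a sequence one step at a time and carries a hidden state from each step to the next, so its output at any point can depend on earlier inputs. This makes RNNs useful for tasks such as speech recognition and time series prediction. Long Short-Term Memory (LSTM) is a widely used RNN variant whose gated cells help it retain information over long sequences; Tacotron2 uses LSTM layers.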

How do RNNs remember previous inputs? A traditional feed-forward ANN treats each input independently. An RNN instead makes each step depend on the previous one: at every time step, it combines the current input with the hidden state produced at the previous step. The same weights and biases are reused at every time step, so the number of parameters does not grow with the length of the sequence. In general, RNNs mimic the way we humans process sequences. We don’t decide that a sentence is "sad," "happy," or "offensive" until we have read it completely. In a similar way, an RNN used for classification does not label a sequence as X or Y until it has processed all of it, from beginning to end.
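To make the weight sharing concrete, here is a minimal vanilla (Elman) RNN forward pass in plain Python. It is an illustration, not Tacotron2 code: the sizes and random weights are arbitrary, and real models are built with a framework such as PyTorch or TensorFlow. Note that `W_xh`, `W_hh`, and `b_h` are reused at every time step; only the hidden state `h` changes.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state at every step.

    h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h), with the same weights at every step.
    """
    h = [0.0] * len(b_h)  # initial hidden state: no history yet
    states = []
    for x_t in inputs:  # one iteration per time step
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h), b_h)]
        h = [math.tanh(v) for v in pre]  # new state mixes current input and previous state
        states.append(h)
    return states


random.seed(0)
input_size, hidden_size = 3, 4
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size

# A toy sequence of three one-hot "tokens".
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h)
final_summary = states[-1]  # depends on every input, and on their order
```

This model has 4×3 + 4×4 + 4 = 32 parameters whether the sequence has 3 steps or 3,000, which is the practical payoff of reusing the same weights at every step.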

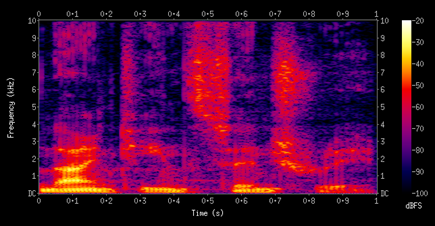

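The Tacotron2 architecture is divided into two main components: a Seq2Seq (sequence-to-sequence) network with attention and a WaveNet vocoder, both deep neural networks. Seq2Seq receives a chunk of text as input and outputs a Mel Spectrogram: a representation of how the signal’s energy is distributed across frequencies over time, with the frequency axis warped to the mel scale, which approximates how humans perceive pitch.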
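A mel spectrogram is obtained by passing each frame of an ordinary (linear-frequency) power spectrum through a bank of triangular filters spaced evenly on the mel scale, a perceptual pitch scale. The sketch below uses the common HTK-style formula mel = 2595 · log10(1 + f / 700). The 80 bands, 1024-point FFT, and 22,050 Hz sample rate are assumed values similar to publicly available Tacotron2 setups, not settings taken from the repository used later in this article.

```python
import math


def hz_to_mel(f_hz):
    """HTK-style mel scale: close to linear at low frequencies, logarithmic higher up."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sample_rate, f_min=0.0, f_max=None):
    """Return an n_mels x (n_fft // 2 + 1) matrix of triangular filter weights."""
    if f_max is None:
        f_max = sample_rate / 2  # Nyquist frequency
    # n_mels + 2 edge frequencies, evenly spaced in mels, converted back to Hz.
    m_lo, m_hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_hi - m_lo) / (n_mels + 1)
    edges = [mel_to_hz(m_lo + i * step) for i in range(n_mels + 2)]
    # Frequency (Hz) of every FFT bin.
    bin_freqs = [k * sample_rate / n_fft for k in range(n_fft // 2 + 1)]

    def triangle(f, left, center, right):
        # Rises linearly from 0 at `left` to 1 at `center`, then falls back to 0 at `right`.
        if f <= left or f >= right:
            return 0.0
        if f <= center:
            return (f - left) / (center - left)
        return (right - f) / (right - center)

    return [
        [triangle(f, edges[i], edges[i + 1], edges[i + 2]) for f in bin_freqs]
        for i in range(n_mels)
    ]


def apply_filterbank(power_frame, filterbank):
    """Collapse one linear-frequency power spectrum frame into mel band energies."""
    return [sum(w * p for w, p in zip(row, power_frame)) for row in filterbank]


fb = mel_filterbank(n_mels=80, n_fft=1024, sample_rate=22050)
mel_frame = apply_filterbank([1.0] * (1024 // 2 + 1), fb)  # a flat test spectrum
```

Stacking one such mel frame per short time window gives the spectrogram; Tacotron2 works with a log-compressed version of these band energies. Libraries such as librosa provide ready-made mel filterbanks, so in practice you would not hand-roll this.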

Seq2Seq follows an Encoder/Attention/Decoder sequence of execution. The Encoder converts the input characters into a sequence of encoded feature vectors, which the Attention network and the Decoder then use to predict the spectrogram frame by frame. In more detail, Seq2Seq operates as follows:

- The Encoder maps each input character to a 512-dimensional embedding and passes the sequence through three convolutional layers, each containing 512 filters of 5 x 1 shape, followed by batch normalization and ReLU activations

- The output of the final convolutional layer is passed to a single bidirectional LSTM layer containing 512 units (256 in each direction) to generate the encoded features

- The Attention network takes the Encoder output and summarizes the full encoded sequence as a fixed-length context vector for each of the Decoder output steps, which the Decoder uses to predict the next spectrogram frame
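The attention step can be illustrated with a simplified sketch. Tacotron2 itself uses location-sensitive attention, which also takes the previous step’s attention weights into account; the plain dot-product attention below is a swapped-in simplification that shows only the core idea: score every encoder output against the current decoder state, turn the scores into weights with a softmax, and take the weighted sum. The toy 3-dimensional vectors and the decoder query are made up for illustration (Tacotron2’s encoder outputs are 512-dimensional).

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(decoder_query, encoder_outputs):
    """Dot-product attention: weight each encoder output by its relevance to the
    current decoder state and return (fixed-length context vector, weights)."""
    scores = [sum(q * k for q, k in zip(decoder_query, enc)) for enc in encoder_outputs]
    weights = softmax(scores)
    dim = len(encoder_outputs[0])
    context = [sum(w * enc[d] for w, enc in zip(weights, encoder_outputs)) for d in range(dim)]
    return context, weights


# Toy encoder outputs: one vector per input character.
encoder_outputs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.5, 0.0]]
# Hypothetical decoder state that is "looking for" the second character.
decoder_query = [0.0, 4.0, 0.0]
context, weights = attention_context(decoder_query, encoder_outputs)
```

Whatever the input length, `context` has the size of a single encoder output, which is what lets the Decoder consume a fixed-length summary at every output step.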

The second Tacotron2 component, a modified WaveNet, acts as a vocoder: it receives the output of the first component (the Mel Spectrogram) as input and generates an audio waveform, that is, the amplitude of the audio signal sampled over time.
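Each mel spectrogram frame summarizes a short hop of audio, and the vocoder has to generate every individual sample inside that hop. The sketch below shows that arithmetic and saves a waveform as a 16-bit mono WAV file with Python’s standard `wave` module. The 22,050 Hz sample rate and 256-sample hop are assumed values, typical of publicly available Tacotron2 setups, and the sine tone is only a placeholder standing in for real vocoder output.

```python
import math
import os
import struct
import tempfile
import wave

SAMPLE_RATE = 22050  # assumed output sample rate (samples per second)
HOP_LENGTH = 256     # assumed number of new audio samples per mel frame


def frames_to_samples(n_mel_frames, hop_length=HOP_LENGTH):
    """The vocoder must upsample the spectrogram by hop_length samples per frame."""
    return n_mel_frames * hop_length


def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Save float samples in [-1, 1] as 16-bit mono PCM."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(sample_rate)
        pcm = b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
        )
        wav.writeframes(pcm)


# A 200-frame spectrogram corresponds to 200 * 256 = 51,200 samples (about 2.3 s).
n_samples = frames_to_samples(200)
# Placeholder waveform: a 440 Hz tone instead of real synthesized speech.
tone = [0.3 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(n_samples)]
out_path = os.path.join(tempfile.gettempdir(), "tone.wav")
write_wav(out_path, tone)
```

The ratio between sample count and frame count is why vocoding is the expensive half of the pipeline: WaveNet-style vocoders produce tens of thousands of samples for every second of speech.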

Let’s TTS It!

To demonstrate how TTS would work in our "Minority Report" scenario, let’s use a Tacotron2 model available in this repository. Start by installing the Tacotron implementation, following the instructions in the Readme file.

What is nice about this code is that it comes bundled with a demo server, which can be tied to an existing pre-trained model. This allows us to input a text string and receive a speech (audio) segment for that string. Server installation is a separate procedure; see the Readme again.
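Because the demo server accepts a text string and returns audio, the ad selected by the business logic could be sent to it from code instead of being typed into a text box. The client below is a hypothetical sketch: the address `http://localhost:5002`, the `/api/tts` endpoint, and the `text` query parameter are assumptions, not the documented API of this repository’s server, so check its Readme and adjust them.

```python
import urllib.parse
import urllib.request

# Hypothetical server address and endpoint; replace with the values from the Readme.
TTS_SERVER = "http://localhost:5002"
TTS_ENDPOINT = "/api/tts"


def build_tts_url(text, base=TTS_SERVER, endpoint=TTS_ENDPOINT):
    """URL-encode the text as a query parameter (assumed to be named 'text')."""
    return f"{base}{endpoint}?{urllib.parse.urlencode({'text': text})}"


def fetch_speech(text, out_path="ad.wav", timeout=30):
    """Request synthesized speech and save the returned audio bytes to a file."""
    with urllib.request.urlopen(build_tts_url(text), timeout=timeout) as response:
        audio = response.read()
    with open(out_path, "wb") as f:
        f.write(audio)
    return out_path
```

With the server running, `fetch_speech("Hi Bob! How are those dock shoes working out for you?")` would save the response to `ad.wav`, assuming the server replies with WAV audio; any audio player can then play the file in the store.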

When we run this Tacotron implementation, a text box appears and prompts us to input text. Once we submit the text, the application reads it out loud. Besides using an already trained model, we also have the option of training Tacotron2 on our own dataset.
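If you go the training route, the first chore is usually pairing audio clips with their transcripts. Many open-source Tacotron implementations can read data laid out like the public LJ Speech dataset: a `wavs/` folder plus a `metadata.csv` file whose lines look like `clip_id|raw transcript|normalized transcript`. Whether the implementation used here expects exactly this layout is an assumption; its Readme is the authority. The hypothetical helper below writes such a file and rejects transcripts that contain the `|` separator; the clip IDs and sentences are made up.

```python
import os
import tempfile


def write_metadata(rows, path):
    """Write (clip_id, transcript) pairs as LJ Speech-style lines.

    The normalized-transcript column simply repeats the raw text here; a real
    pipeline would expand numbers, currency, and abbreviations first.
    """
    with open(path, "w", encoding="utf-8") as f:
        for clip_id, text in rows:
            if "|" in text:
                raise ValueError(f"transcript for {clip_id!r} contains '|'")
            f.write(f"{clip_id}|{text}|{text}\n")


# Hypothetical clips recorded for a custom store voice.
rows = [
    ("store_0001", "Welcome back to our store."),
    ("store_0002", "Our alpaca wool socks are on sale today."),
]
metadata_path = os.path.join(tempfile.mkdtemp(), "metadata.csv")
write_metadata(rows, metadata_path)
```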

Next Step?

Actually… none. We’ve identified a person using CNN-based face recognition, and then we’ve played an ad relevant to that person using TTS. This ends our series on the "Minority Report" case. Science fiction is not such fiction anymore, eh?