Understanding extreme asset price changes involves combining price history, news, events and social media data, much of which is only available in the form of unstructured text. By applying machine learning technologies to a real-time data pipeline, Refinitiv Labs has developed a prototype to help traders identify and respond to extreme price moves at pace.

- Financial institutions have a significant amount of structured and unstructured data at their disposal. However, this data is rarely integrated to enable the discovery of new insights.

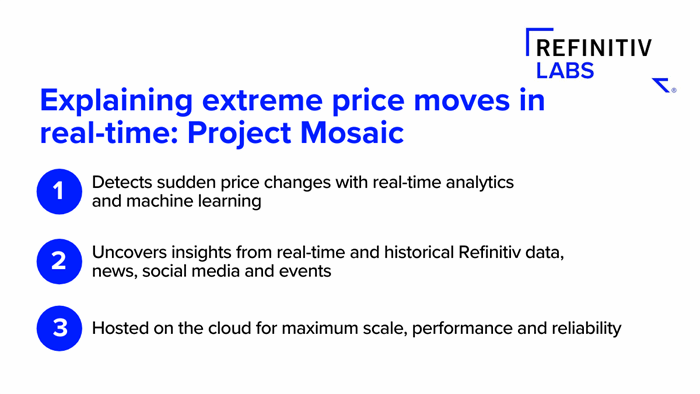

- Project Mosaic, a prototype from Refinitiv Labs, helps equity traders to detect and explain extreme price moves at speed.

- The process of creating Project Mosaic illustrates best practices for building real-time, cloud-hosted solutions collaboratively with design thinkers, engineers, data scientists, and, most importantly, customers.


Data is abundant, not only in volume, but also in the number of sources it is derived from, the frequency at which it is updated, and the variety of formats it may take. Time spent sorting through that data, however, can keep businesses from generating actionable information at pace.

While financial institutions have become adept at finding insights hidden within homogeneous and structured data streams, reaping the full potential of data requires combing through many sources and formats. The process of data-focused analysis often starts with exploring, cleaning, filtering, and formatting available datasets before analysis and modelling can begin.

Refinitiv Labs set out to solve this problem, and to apply the solution to a real-life challenge faced by equity traders: detecting and responding to unexpected asset price changes.

Project Mosaic: Explaining extreme price moves with machine learning

Extreme price moves occur suddenly and without warning, and traders typically go through a slow and painstaking process of sourcing and analyzing disparate information to identify the cause of a price anomaly.

Explaining extreme price moves requires information about the asset’s market segment, industry, country, region, and so on. To fully understand a sudden price surge, Refinitiv Labs needed to work with a number of data sources, many of them unstructured, including price history, news and social media posts.

Using machine learning with unstructured data

Refinitiv Labs developed Project Mosaic, a prototype that provides traders with a real-time understanding of why an asset price has changed, by combining data from the following sources:

- Real-time and historical trading data from the Refinitiv Data Platform

- News

- Social media

- Events such as quarterly earnings and annual financial reports

Project Mosaic identifies extreme price movements and explains why they occurred, by using advanced machine learning models that process a continuous stream of structured and unstructured data.

Real-time analytics are used to detect price movement, while a machine learning model, trained using historical data, confirms whether the price moves are anomalous.
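To make the detection step more concrete, here is a minimal sketch of one way to flag unusually large price moves in a stream. It is not Project Mosaic's actual model: it assumes a simple rolling z-score on returns, and the function name `detect_extreme_moves`, the `window` size and the `threshold` are all illustrative choices. In a setup like the one described above, a trained model would then confirm or reject each candidate.

```python
from collections import deque
from statistics import mean, stdev


def detect_extreme_moves(prices, window=20, threshold=4.0):
    """Yield (index, return, z_score) for returns far outside the recent range.

    Assumption: a rolling z-score stands in for the real-time analytics;
    the names and default values here are hypothetical.
    """
    recent = deque(maxlen=window)  # rolling window of past returns
    previous = None
    for i, price in enumerate(prices):
        if previous is not None:
            ret = price / previous - 1.0
            # Only score once the window is full, and compare the new
            # return against the window *before* adding it.
            if len(recent) == window:
                sigma = stdev(recent)
                if sigma > 0:
                    z = (ret - mean(recent)) / sigma
                    if abs(z) >= threshold:
                        yield i, ret, z
            recent.append(ret)
        previous = price
```

Because the function is a generator, it can consume a live price feed one tick at a time instead of waiting for a complete series.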

It is common, for example, for two companies to see a significant increase in their share price if an unexpected M&A rumor is circulated during market hours. Mosaic can alert users about this spike, and provide relevant news articles, social media posts, and related events to explain the price surge.
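As a rough illustration of the "explain" step, the sketch below pairs an alert with news items that mention the same ticker shortly before the move. The `Alert` and `NewsItem` types, the `related_news` helper and the two-hour lookback are assumptions made for this example; the article does not describe how Mosaic ranks or matches content.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    ticker: str
    time: datetime


@dataclass(frozen=True)
class NewsItem:
    tickers: frozenset  # tickers mentioned in the story
    time: datetime
    headline: str


def related_news(alert, news, lookback=timedelta(hours=2)):
    """Return news mentioning the alert's ticker within the lookback window,
    most recent first. The window length is a hypothetical default."""
    start = alert.time - lookback
    matches = [
        item for item in news
        if alert.ticker in item.tickers and start <= item.time <= alert.time
    ]
    return sorted(matches, key=lambda item: item.time, reverse=True)
```

The same pattern would extend to social media posts and scheduled events, as long as each item carries a timestamp and the tickers it refers to.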

Building a real-time data pipeline

How did the team of data scientists, engineers and design thinkers in Refinitiv Labs create this new machine learning prototype?

Machine learning models benefit from a data pipeline, as this enables the model to produce reliable results. Data pipelines read a constant, high-volume stream of data from different sources, and process and feed that data to the machine learning model, which then makes predictions from the data.
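The read, process and feed stages can be sketched with plain Python generators. This is a toy, single-process stand-in for what a streaming framework would do at scale; the `(timestamp, source, payload)` message shape and the function names are assumptions made for illustration.

```python
import heapq


def merge_sources(*sources):
    """Read: merge time-ordered streams of (timestamp, source, payload)
    tuples into one stream ordered by timestamp."""
    return heapq.merge(*sources, key=lambda msg: msg[0])


def normalise(messages):
    """Process: convert each raw message into a uniform record."""
    for ts, source, payload in messages:
        yield {"ts": ts, "source": source, "text": str(payload).strip().lower()}


def score(records, model):
    """Feed: pass each record to the model and yield (record, prediction)."""
    for record in records:
        yield record, model(record)
```

Chaining the stages as `score(normalise(merge_sources(prices, news)), model)` keeps each step small and testable, and nothing is computed until the final stage is consumed.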

It is also helpful to use similar, or even identical, pipelines for training, running inference, and systematically retraining the model, to mitigate negative effects such as drift over time.
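One way to keep training and inference aligned is to route both through the same feature code. The sketch below assumes a deliberately simple "model" (a learned threshold on a z-score) so that the shared path is easy to see; `extract_features`, `train` and `predict` are hypothetical names, not part of Project Mosaic.

```python
from statistics import mean, stdev


def extract_features(window):
    """Shared by training and inference: z-score of the latest return
    relative to the earlier returns in the window."""
    *past, last = window
    sigma = stdev(past)
    return (last - mean(past)) / sigma if sigma > 0 else 0.0


def train(windows):
    """Toy 'training': learn a threshold as the largest |z| seen in
    windows of normal history."""
    return max(abs(extract_features(w)) for w in windows)


def predict(window, threshold):
    """Inference reuses extract_features, so it cannot diverge from training."""
    return abs(extract_features(window)) > threshold
```

Retraining then amounts to calling `train` again on more recent windows, which is one simple way to respond when market behavior drifts away from the original training data.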

In the case of Project Mosaic, the pipeline had to handle a large volume of data from various sources and in multiple formats — up to terabytes per day.

All processing needed to happen in real time. This limited the time available for handling each message, creating a need for an even more sophisticated solution.

To satisfy these requirements, Project Mosaic uses a modern technology stack that includes the Refinitiv Data Platform, Apache Beam, Apache Kafka, Elasticsearch, Google BigQuery, and Google Cloud Dataflow.

A backtester defines and runs simulations on historical data, and an alerts store accumulates alerts generated by the Project Mosaic engine for users.
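The relationship between these two components can be sketched as follows. This is an in-memory toy under stated assumptions: the `AlertStore` class, the `backtest` function and the `(timestamp, ticker, price)` tick format are invented for illustration, and the detector is passed in as a plain function.

```python
from collections import defaultdict


class AlertStore:
    """Accumulates alerts per ticker (hypothetical in-memory version)."""

    def __init__(self):
        self._by_ticker = defaultdict(list)

    def add(self, ticker, timestamp, reason):
        self._by_ticker[ticker].append((timestamp, reason))

    def for_ticker(self, ticker):
        # .get avoids creating empty entries for unknown tickers
        return list(self._by_ticker.get(ticker, []))


def backtest(history, detector, store):
    """Replay historical (timestamp, ticker, price) ticks through a detector
    and record every move it flags in the store."""
    last_price = {}
    for timestamp, ticker, price in history:
        prev = last_price.get(ticker)
        if prev is not None and detector(prev, price):
            store.add(ticker, timestamp, f"move of {price / prev - 1:+.1%}")
        last_price[ticker] = price
    return store
```

Running the same detector over historical ticks before exposing it to live data makes it possible to check how many alerts it would have raised, and on which days.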

Cloud infrastructure

To ensure maximum scale, performance and reliability, Project Mosaic was built as a cloud solution, and its key components, including Google BigQuery and Google Cloud Dataflow, are cloud-native products.

Other components could run on-premises, but employing cloud infrastructure for deployment and management significantly increased the speed of development and updates.

Hosting the prototype in the cloud made it easier to scale data storage and processing capacity, and to perform complex data analysis as needed. It also means that Project Mosaic requires minimal effort to maintain servers, networks, operating systems, and software packages.


A collaborative and customer-centric project

The critical success factors behind Project Mosaic are the collaboration between Refinitiv Labs’ design thinkers, engineers, research scientists and data scientists, and, most importantly, ongoing guidance from Refinitiv customers.

In many organizations, specialist teams operate in silos. We find that this detachment prevents companies from achieving the best possible outcomes for end users.

Refinitiv Labs ensures that our multi-disciplinary teams work together from the outset of a project. With Project Mosaic, continuous feedback from customers ensured that the prototype provided traders with the insights they needed.

What did we learn?

Refinitiv Labs is currently rolling out a global pilot program to customers, and continues to refine Project Mosaic based on their feedback.

The principles and processes behind this project offer data science and innovation teams some useful takeaways:

- Lay the groundwork that will enable data processing at scale, and set up a data pipeline.

- Make the most of the cloud to increase speed and flexibility, while reducing costs.

- Most importantly, stay focused on your end user and their problem, and not the technology itself.
