Here, we’ll convert a trained ONNX image classification model to the Core ML format.

Introduction

Deep neural networks are awesome at tasks like image classification. Results that would have taken millions of dollars and an entire research team a decade ago are now easily available to anyone with a half-decent GPU. However, deep neural networks have a downside. They can be very heavy and slow, so they don’t always run well on mobile devices. Fortunately, Core ML offers a solution: it enables you to create slim models that run well on iOS devices.

In this article series, we’ll show you how to use Core ML in two ways. First, you’ll learn how to convert a pre-trained image classifier model to the Core ML format and use it in an iOS app. Then, you’ll train your own Machine Learning (ML) model and use it to make a Not Hotdog app, just like the one you might have seen in HBO’s Silicon Valley.

In the previous article, we prepared our development environment. In this one, we’ll convert a trained ONNX image classification model to the Core ML format.

Core ML and ONNX

Core ML is Apple’s framework that allows you to integrate ML models into your applications (not only on mobile devices and desktops but also on watches and Apple TV). We recommend always starting with Core ML when thinking about ML on iOS devices. The framework is very easy to use, and it can make full use of the CPU, GPU, and Neural Engine available on Apple devices. Besides, you can convert almost any neural network model to Core ML’s native format.

Quite a few ML frameworks are seeing heavy use these days, such as TensorFlow, Keras, and PyTorch. Each of these frameworks comes with its own format for saving models. There are tools to convert most of these formats directly to Core ML. We’ll focus on the Open Neural Network Exchange (ONNX) format. ONNX defines a common file format and operations to make it easier to switch between frameworks.

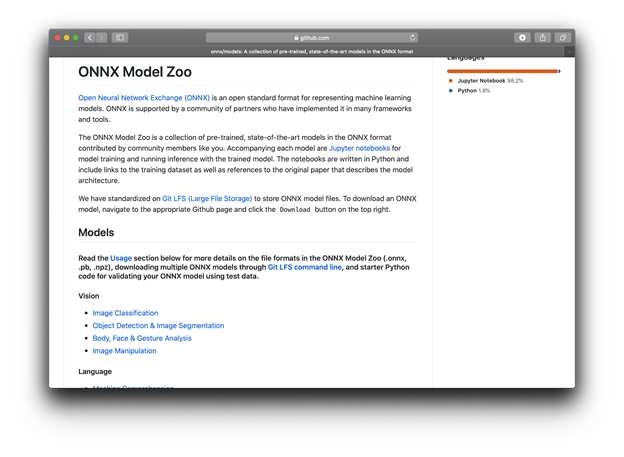

Let’s take a look at the ONNX models available in the so-called model zoo.

Click the first link in the Vision section, Image Classification. This opens a page listing the image classification models.

As you can see, there are quite a few models to choose from. These models were trained using the well-known ImageNet classification dataset, which contains 1,000 object categories (such as a "keyboard," "ball-pen," "candle," "tarantula," "great white shark" and… well, exactly 995 others).

While these models differ in architecture and in the framework used to train them, the model zoo provides all of them in the ONNX format.

One of the best models available here (with a top-5 error rate as low as 3.6%) is ResNet. This is the one we’ll convert to Core ML.

To download the model, click the ResNet link in the above table, and then scroll down to the required version of the model.

To show you that, with Core ML, iOS devices can handle "real" models, we’ll select the largest (and the best) one available – ResNet V2 with 152 layers. For older iOS devices, such as iPhone 6 or 7, you may want to try one of the smaller models, such as ResNet18.

Convert the Model From ONNX to Core ML

It is possible to convert almost any model to Core ML using the coremltools and onnx packages installed in our Conda environment, as long as the model uses operations and layers that Core ML supports. In ONNX terms, the model’s opset version must be one the converter supports (currently opset version 10 and lower).
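If you are not sure which opset a model uses, you can read it from the model file before converting. Here is a minimal sketch, assuming the onnx package from our Conda environment; max_default_opset and check_onnx_file are hypothetical helper names, and the limit of 10 is the one quoted above:

```python
# Opset limit quoted in the text above (an assumption tied to the converter version)
SUPPORTED_MAX_OPSET = 10


def max_default_opset(opset_imports):
    """Return the highest opset version declared for the default ONNX domain.

    Each entry must expose .domain and .version, like the entries of
    onnx.ModelProto.opset_import. Returns None if no default-domain entry exists.
    """
    versions = [op.version for op in opset_imports if op.domain in ("", "ai.onnx")]
    return max(versions) if versions else None


def check_onnx_file(path, max_opset=SUPPORTED_MAX_OPSET):
    """Load an ONNX file and report whether its opset is within max_opset."""
    import onnx  # imported lazily so max_default_opset works without onnx

    opset = max_default_opset(onnx.load(path).opset_import)
    return opset is not None and opset <= max_opset, opset


# Usage (requires the model file downloaded from the model zoo):
#   ok, opset = check_onnx_file("./resnet152-v2-7.onnx")
#   print(ok, opset)
```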

Two types of models enjoy dedicated support: classification and regression. A classification model assigns a label to an input (such as an image). A regression model calculates a numeric value for the given input.

We’ll focus on image classification models.

What does the selected ResNet model expect as input? A detailed description is available at the corresponding model zoo page.

As you can see, the ResNet model expects a picture as an array with the following dimensions: batch size, channels (always 3, for the red, green, and blue channels), height, and width. Pixel values should first be scaled to the range [0, 1] and then normalized using mean and standard deviation values defined separately for each color channel.
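For reference, the preprocessing described above can be written directly in NumPy. This is a minimal sketch, assuming an RGB uint8 image that has already been resized to the model’s input size; preprocess_resnet is a hypothetical name. It is the computation the Core ML preprocessing parameters try to reproduce:

```python
import numpy as np

# Per-channel ImageNet statistics used by the ResNet preprocessing
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess_resnet(img_hwc):
    """Turn an H x W x 3 uint8 RGB image into a 1 x 3 x H x W float32 array."""
    x = img_hwc.astype(np.float32) / 255.0  # scale pixel values to [0, 1]
    x = (x - MEAN) / STD                     # normalize each color channel
    x = np.transpose(x, (2, 0, 1))           # HWC -> CHW
    return x[np.newaxis, ...]                # add the batch dimension
```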

While the coremltools library is pretty flexible, its built-in image classification options won’t allow us to fully reproduce the original preprocessing steps. Let’s try to get close enough:

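```python
import coremltools as ct
import numpy as np

def resnet_norm_to_scale_bias(mean_rgb, stddev_rgb):
    # Core ML uses one scale for all channels, so average the three stddevs
    image_scale = 1 / 255. / (sum(stddev_rgb) / 3)
    # Per-channel bias (-mean / stddev), added after the pixels are scaled
    bias_rgb = []
    for i in range(3):
        bias = -mean_rgb[i] / stddev_rgb[i]
        bias_rgb.append(bias)
    return image_scale, bias_rgb

# ImageNet mean and standard deviation used by the ResNet preprocessing
mean_vec = np.array([0.485, 0.456, 0.406])
stddev_vec = np.array([0.229, 0.224, 0.225])

image_scale, (bias_r, bias_g, bias_b) = resnet_norm_to_scale_bias(mean_vec, stddev_vec)
```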

The above conversion is required because the standard ResNet procedure calculates a normalized value for each pixel in the image using the following formula:

norm_img_data = (img_data/255 - mean) / stddev = (img_data/255/stddev) - mean/stddev

Core ML expects something like this:

norm_img_data = (img_data * image_scale) + bias

ResNet preprocessing uses a different stddev (value scaling) for each channel, but Core ML, by default, supports only a single value for the corresponding image_scale parameter.

Because a well-generalized model should not be noticeably affected by small changes in image color tone, it should be safe to use a single image_scale value calculated from the mean of the stddev_vec values:

image_scale = 1 / 255. / (sum(stddev_rgb) / 3)

The function then calculates the bias for each color channel as -mean / stddev. We end up with a set of preprocessing parameters (image_scale, bias_r, bias_g, and bias_b) that we can use in the Core ML conversion.
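To see how much the shared image_scale changes the result, we can compare both formulas over every possible 8-bit pixel value. This is a minimal NumPy sketch that recomputes the same parameters as above; exact_norm and coreml_norm are hypothetical names used only for this comparison:

```python
import numpy as np

mean_vec = np.array([0.485, 0.456, 0.406])
stddev_vec = np.array([0.229, 0.224, 0.225])


def exact_norm(pixels):
    """Exact ResNet normalization with a per-channel stddev."""
    return (pixels / 255.0 - mean_vec) / stddev_vec


# Core ML style: one shared image_scale plus a per-channel bias
image_scale = 1 / 255. / (sum(stddev_vec) / 3)
bias_rgb = -mean_vec / stddev_vec


def coreml_norm(pixels):
    """Approximation computed by the Core ML preprocessing parameters."""
    return pixels * image_scale + bias_rgb


# Rows are the 256 possible 8-bit values, columns are the R, G, B channels
pixels = np.repeat(np.arange(256, dtype=np.float64)[:, None], 3, axis=1)
max_err_per_channel = np.abs(exact_norm(pixels) - coreml_norm(pixels)).max(axis=0)
print(max_err_per_channel)
```

The largest difference is about 0.06, in the red channel at full intensity (its stddev is the furthest from the average), which is small compared with the roughly [-2.1, 2.6] range of normalized values.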

Equipped with the calculated preprocessing parameters, you can run the conversion:

```python
model = ct.converters.onnx.convert(
    model='./resnet152-v2-7.onnx',
    mode='classifier',
    class_labels='./labels.txt',
    image_input_names=['data'],
    preprocessing_args={
        'image_scale': image_scale,
        'red_bias': bias_r,
        'green_bias': bias_g,
        'blue_bias': bias_b
    },
    minimum_ios_deployment_target='13'
)
```

Let’s have a brief look at some of the parameters:

- mode='classifier' with class_labels='./labels.txt' tells the converter to build a classifier that uses the provided labels. This ensures that the model outputs not only numerical values but also the label of the most likely detected object.
- image_input_names=['data'] indicates that the data input is an image. This lets you use the image directly, without first converting it to an MLMultiArray in Swift or a NumPy array in Python.
- preprocessing_args specifies the previously calculated pixel value normalization parameters.
- minimum_ios_deployment_target='13' makes the model’s input and output structures a little less confusing than those required by older iOS versions.
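The class_labels file is expected to hold one label per line, in the same order as the model’s 1,000 outputs. A small sanity check before conversion could look like this; load_labels is a hypothetical helper, and the default count of 1,000 assumes the ImageNet classes used by this ResNet model:

```python
def load_labels(path, expected_count=1000):
    """Read one class label per line (blank lines skipped) and check the count."""
    with open(path, encoding="utf-8") as f:
        labels = [line.strip() for line in f if line.strip()]
    if len(labels) != expected_count:
        raise ValueError(f"expected {expected_count} labels, found {len(labels)}")
    return labels


# Usage:
#   labels = load_labels('./labels.txt')
#   print(labels[:3])
```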

After running the conversion, you can print the model summary with print(model).

In our case, the model accepts as input an RGB image, sized 224 x 224 pixels, and generates two outputs:

- classLabel: the label of the object with the highest model confidence.
- resnetv27_dense0_fwd: the layer output dictionary (with 1,000 "label":confidence pairs). The confidence returned here is a raw neural network output, not a probability. It can easily be converted to a probability, as shown in the sample notebook included in the code download.
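One common way to do this conversion is a softmax over all the raw scores. A minimal sketch, assuming the output dictionary maps each label to its raw score as described above; softmax_dict is a hypothetical name, and the notebook in the code download may do it differently:

```python
import math


def softmax_dict(scores):
    """Convert a {label: raw_score} dict into {label: probability}."""
    # Subtract the largest score before exponentiating to avoid overflow
    max_score = max(scores.values())
    exps = {label: math.exp(s - max_score) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Usage with a prediction result:
#   probs = softmax_dict(pred['resnetv27_dense0_fwd'])
```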

Run a Prediction

With the converted model, running a prediction is a straightforward task. Let’s use the Pillow (PIL) library to handle images, along with the ballpen.jpg image included in the code download.

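```python
from PIL import Image

# Load the test image as RGB and resize it to the 224 x 224 model input
image = Image.open('ballpen.jpg').convert('RGB')
image = image.resize((224, 224))

# Core ML predictions from Python work only on macOS
pred = model.predict(data={"data": image})
print(pred['classLabel'])
```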

The expected result is:

ballpoint, ballpoint pen, ballpen, Biro
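Resizing straight to 224 x 224, as in the code above, stretches non-square photos. If that becomes a problem with your own images, one option is to crop the central square first. A minimal sketch, assuming Pillow; both helper names are hypothetical, and this is one common option rather than necessarily the preprocessing used to evaluate the model:

```python
def center_square_box(width, height):
    """Return a (left, upper, right, lower) box for the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def load_center_cropped(path, size=224):
    """Open an image, crop its central square, and resize it for the model."""
    from PIL import Image  # imported here so center_square_box needs no dependencies

    image = Image.open(path).convert("RGB")
    return image.crop(center_square_box(*image.size)).resize((size, size))


# Usage:
#   pred = model.predict(data={"data": load_center_cropped('ballpen.jpg')})
```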

Feel free to experiment with additional images. To avoid repeating the conversion process, save the model:

```python
model.save('ResNet.mlmodel')
```

You can later load it with:

```python
model = ct.models.MLModel('ResNet.mlmodel')
```

Check the notebook provided in the code download to see how to obtain additional details from the model output, such as probabilities and labels for "top 5" prediction candidates.
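Because softmax preserves the order of scores, ranking the raw values in resnetv27_dense0_fwd gives the same top 5 candidates as ranking probabilities. A minimal sketch; top_k is a hypothetical helper, not part of coremltools:

```python
import heapq


def top_k(scores, k=5):
    """Return the k (label, score) pairs with the highest scores, best first."""
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])


# Usage with a prediction result:
#   for label, score in top_k(pred['resnetv27_dense0_fwd']):
#       print(f"{score:8.3f}  {label}")
```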

Summary

You’ve converted and saved the ResNet model in the Core ML format.

While different models will require different preprocessing and conversion parameters, the overall approach will remain the same.

There is a lot more that can be done with the model, such as adding metadata (model description, authors, etc.) or adding layers (for example, a softmax layer at the end so that the model returns probabilities instead of raw network outputs). We covered only the basics, which should be enough to start experimenting with your own models.
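For example, coremltools exposes metadata as properties of the MLModel object (such as author, short_description, and license), which are written out when the model is saved. A minimal sketch, assuming a model object like the one converted above; set_model_metadata is a hypothetical helper and the strings in the usage comment are placeholders:

```python
def set_model_metadata(mlmodel, author, short_description, license_text=None):
    """Attach human-readable metadata to a Core ML model object."""
    mlmodel.author = author
    mlmodel.short_description = short_description
    if license_text is not None:
        mlmodel.license = license_text
    return mlmodel


# Usage (placeholder strings):
#   set_model_metadata(model, "Your Name", "ResNet-152 v2 ImageNet classifier")
#   model.save('ResNet.mlmodel')
```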

Now we are ready to use the converted model in an iOS application – see the next article.