Here we create your ML project, configure training data, augment training images, run predictions, and then use our custom ML model in our iOS application.

Introduction

Deep neural networks are awesome at tasks like image classification. Results that would have taken millions of dollars and an entire research team a decade ago are now easily available to anyone with a half-decent GPU. However, deep neural networks have a downside. They can be very heavy and slow, so they don’t always run well on mobile devices. Fortunately, Core ML offers a solution: it enables you to create slim models that run well on iOS devices.

In this article series, we’ll show you how to use Core ML in two ways. First, you’ll learn how to convert a pre-trained image classifier model to a Core ML and use it in an iOS app. Then, you’ll train your own Machine Learning (ML) model and use it to make a Not Hotdog app – just like the one you might have seen in HBO’s Silicon Valley.

With images downloaded in the previous article, we can proceed to train our custom hot dog detection model using Apple’s Create ML.

Create Your ML Project

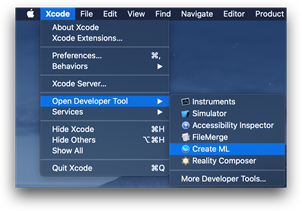

To start a new Create ML project, select Xcode > Open Developer Tool > Create ML.

Select a folder for the project and click New Document.:

Select Image Classifier and click Next.

Enter your model’s project description and click Next.

Confirm your project location and click Create.

Your project is ready.

Configure Training Data

Now it’s time to configure the training data. For an image classification model, Create ML requires images to be grouped into folders that correspond to their labels:

● dataset_root:

● class_1_label:

● image_1

● image_2

● (…)

● image_n

● class_2_label:

● image_n + 1

● image_n + 2

● (…)

● image_n + m

● (…)

Because this is exactly how we downloaded images in the previous article, no additional preparation is required.

In the Training Data section, select Choose > Select Files.

Locate the dataset that contains your images.

Make sure that the dataset’s root folder is selected and click Open.

Create ML should correctly detect 157 images split into 2 classes.

Augment Training Images

The last parameters to configure before training are the maximum number of iterations (25 by default) and data augmentation.

The augmentation process introduces random changes to the images, which increases the size and variability of the training dataset. When training a model to classify images, we want the model to work regardless of the image quality, orientation, exact object position, and so on. While augmentation often helps, we should not overuse it. Note that each selected option exponentially increases the size of the training dataset, with only a limited set of new information that can improve the model.

Even with our very small dataset, selecting multiple augmentation options can drastically increase the training time (from under two minutes to many hours), with questionable impact on model performance. Let’s use augmentation cautiously.

Train Your Model

With all the options set, you may click the Train button at the top of the window... and take a break. Depending on the number of images, selected augmentation options, and the hardware you are using, the process may take a couple of minutes to several hours.

The first step in the process is Extracting features. Technically, this is a prediction session where each image is processed by an existing, pre-trained VisionFeaturePrint model, to generate a 2048-element output vector. This vector represents abstract features that describe both basic and complex elements.

Only after the features are generated for all the images, the actual training starts.

With the selected configuration, the process lasted just over a minute on our machine.

The results look pretty good, eh? Both precision and recall are well over 90% for the training dataset and 80% for the validation dataset. Nothing to complain about, especially when we consider a very small number of pictures and short training time. Does the small number of pictures mean that the validation dataset is not representative enough?

Instead of guessing and worrying, let’s see how our model works in real life.

Run Predictions

Click the Output cell in the upper right corner. Use the + button in the bottom left corner or drag and drop images into the left column.

The model generates predictions. Here are a few predictions on images from Google Open Images Dataset that had not been included in the training dataset.

So far so good. Now let’s try something a bit tricky.

Well, our model is not perfect after all – we’ve managed to fool it with a burger.

The most obvious way to address this issue would be by adding some burger images to the "other" category and repeating the training. It may be not enough though, especially with an unbalanced dataset – we have many more "other" objects then "hotdog" ones. Anyway, if the model performance is not acceptable, additional work with the data will be required. (Do remember that 100% prediction accuracy is virtually never a realistic goal!)

What’s Inside the Create ML Model?

One of the interesting things about our classification model trained with Create ML is its size. It is very, very small (17 KB compared to 20-200 MB for models we’ve discussed previously).

Let’s peek under the model’s hood to find out how it manages to stay so compact while producing reasonably good results. A good tool for this peek would be iNetron.

At a first glance, it looks nothing like a CNN or any other neural network.

The secret lies in the visionFeaturePrint block. This is not a simple "layer." This block represents an entire CNN model provided by Apple. The same model that was used for feature extraction during the first step of our Create ML training.

The following block is glmClassifier (a generalized linear model), which is trained by Create ML to assign a label to a fixed vector. Technically, this means that the part of the model we’ve trained isn’t a neural network at all!

Hot Dog or Not 1.0

So now we have a custom ML model that we can use in our iOS application. To do that, simply drag the model’s output from Create ML to Xcode, very similar to what we did previously with the ResNet model.

With this model and some cosmetic changes to the sample iOS app used earlier in this series, we can finally deliver the Hot Dog or Not iOS app. Look at how it handles the images from Google Open Image Dataset.

You are welcome to have a look at the app code in the download attachment.

Summary

Is this it? Not even close – the first iteration is never the last. With the freshly gained knowledge, you can go back to the training dataset selection and preparation. Then you would likely want to obtain new images that reflect the "real-life" scenarios of using your app… Then you might fiddle with the training parameters... and so on.

But this is a topic for a new story.

For now, let’s give ourselves a pat on the back for building a fully functional Hot Dog or Not iOS application with a custom image classification model. The tools we’ve used helped us build an app like Jian Yang’s one in HBO’s Silicon Valley series.

It is incredible how far the ML has progressed over the last decade. You can now fit in your pocket models that "only yesterday" required powerful workstations. You can not only use these models but also train them, sometimes with nothing more than a simple laptop. And with easy access to cloud (including free Google Colab resources), you can go much, much further.

We hope you enjoyed this series. If you have any questions, please drop them in the comments – we’ll do our best to answer.