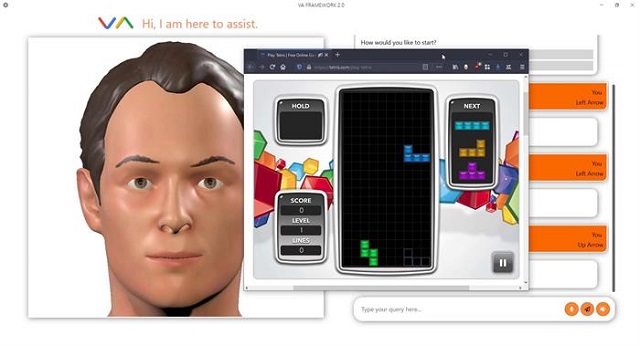

In this article, we'll use a .NET AI Assistant development platform called VA Framework to build a plugin that lets us use voice commands in a game. We'll do all of this using only freely available tools.

Introduction

So the world is full of the AI buzzword (don't know how long that's going to last) and I thought let's surf the waves here and try something new. I've been a Bot enthusiast for a while here on CodeProject, but in this article, we'll try something different.

We'll write a custom plugin in C# that lets us give voice commands which send keystrokes to the open, active game window. Long story short: controlling a game via voice commands.

My inspiration for this article arises from the fact that the AI Assistant application being used is powered by a Bot development framework that I am well-versed in.

Prerequisites

Syn VA Framework and an online Tetris game browser window.

Before we begin, I am going to assume that you are proficient in C# .NET programming and comfortable with Visual Studio 2019 (the current version as of writing this article).

You'll need to ensure that you have a couple of things pre-installed before diving into this article.

- Visual Studio 2019 (or above) - I am on Community Edition just so you know.

- Syn VA Framework (probably it's a good idea to install the latest version) - Community Edition should do just fine.

- Oryzer Studio - A freely available flow based programming platform that I'll use later on in the article.

A portion of this article uses certain semantics from my other article: Creating an On-Premise Offline Bot in C# using Oscova

Getting Started

We are going to follow concrete steps to achieve our goal of creating the plugin.

- We'll begin by creating a C# Plugin Library.

- Place the library in the right folder within the Assistant's Plugins directory.

- Add some knowledge to our Virtual Assistant by using Oryzer Studio.

- Specify the voice commands and key bindings.

- Add voice command examples to the AI Assistant.

- Finally, we'll just run the application and see if our Voice Commands work on the game.

Creating the Plugin Project

So let's heat it up and start Visual Studio and create a suitable project to create our plugin.

- Open Visual Studio.

- Select WPF User Control Library (.NET Framework).

- Hit Next and specify the name of the project as Syn.VA.Plugins.GameCommander.

- Ensure that the target Framework is set to .NET Framework 4.6.1 or above.

The reason I have added the prefix Syn.VA.Plugins.* is that the AI Assistant application only scans the Plugins directory for plugin files starting with that prefix.
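To make the naming rule concrete, here is a minimal sketch of a prefix-based file filter. It is not VA Framework's actual scanning code: the class `PluginScanSketch`, its methods, the `.dll` check and the case-insensitive comparison are all assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical illustration only; the real scanner inside VA Framework may differ.
public static class PluginScanSketch
{
    // The prefix is the one named in the article.
    public const string Prefix = "Syn.VA.Plugins.";

    // True when a file name looks like a plugin assembly by name alone.
    public static bool LooksLikePlugin(string fileName)
    {
        return fileName.StartsWith(Prefix, StringComparison.OrdinalIgnoreCase)
            && fileName.EndsWith(".dll", StringComparison.OrdinalIgnoreCase);
    }

    // Lists candidate plugin files in a directory (assumed helper).
    public static IEnumerable<string> FindCandidates(string pluginsDirectory)
    {
        return Directory.EnumerateFiles(pluginsDirectory, "*.dll")
            .Where(path => LooksLikePlugin(Path.GetFileName(path)));
    }
}
```

With a filter like this, a file named GameCommander.dll would be skipped, while Syn.VA.Plugins.GameCommander.dll would be picked up.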

Implementing an Assistant Plugin Class

Now that the project is ready, we'll first create a class that inherits the AssistantPlugin class. To do so:

- Right-click on the project name.

- Select Add and choose Class...

Name the class GameCommanderPlugin and add the following code to it:

    namespace Syn.VA.Plugins.GameCommander
    {
        // The class VA Framework looks for when it loads the library.
        public class GameCommanderPlugin : AssistantPlugin
        {
            public GameCommanderPlugin(VirtualAssistant assistant)
                : base(assistant) { }

            // No panel is provided by this plugin.
            public override T GetPanel<T>(params object[] parameters)
            {
                return null;
            }
        }
    }

This is the first class that VA Framework scans to determine whether a class library is indeed a VA Plugin. We don't have to do much here as we are not manipulating any core Assistant functions. Instead, we are going to extend it.

Creating a Functional Node

Next, we are going to create something called a Node. A node in a flow-based programming (FBP) environment is a functional piece of code that can be interacted with visually in a graph called a Workspace. We create Workspace projects in Oryzer Studio.

Now that's cleared up, we will go ahead and add a new folder to our project and call it Nodes. Inside that, we'll create a new class file and name it GameCommanderNode. We are calling it that because our Node will eventually take certain keyboard key values and send them to the active window.

Let's create the GameCommanderNode class step by step. Paste the following code into the file:

    using Syn.Workspace;
    using Syn.Workspace.Attributes;
    using Syn.Workspace.Events;
    using Syn.Workspace.Nodes;

    namespace Syn.VA.Plugins.GameCommander.Nodes
    {
        // The attribute sets the category and display name shown in Oryzer Studio.
        [Node(Category = CategoryTypes.VaFramework, DisplayName = "Game Commander")]
        public class GameCommanderNode : FunctionNode
        {
            public GameCommanderNode(WorkspaceGraph workspace) : base(workspace) { }

            // Runs whenever this node is triggered; filled in later.
            public override void OnTriggered(object sender, TriggerEventArgs eventArgs)
            {
            }
        }
    }

So here, we inherit a FunctionNode and we add the constructor that takes WorkspaceGraph as the argument.

To implement a FunctionNode, we'll also have to create a special OnTriggered() method that takes a set of arguments. Inside this method will lie the code that will be executed when this node is triggered.

It will all make sense a bit later in the article, so don't worry.

Next, we'll add a port to the Node. This port is going to be responsible for storing the key combinations for a particular voice command.

    // Backing field for the port value.
    private string _keyCombinations;

    [InputPort]
    [PortEditor]
    public string KeyCombinations
    {
        get => _keyCombinations;
        set { _keyCombinations = value; OnPropertyChanged(); }
    }

In the code above, we decorate our KeyCombinations property with the InputPort and PortEditor attributes. These special attributes make our class property visible as a port in the visual editor of Oryzer Studio.

Side note, the PortEditor attribute specifies that if the application has an in-built editor for this property type, then the application is free to display or render it.
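The setter above calls OnPropertyChanged() without arguments. I haven't looked inside the base class, so the following is only a sketch of the standard .NET INotifyPropertyChanged pattern that such a call usually relies on. `NotifyingBase` and `KeyHolder` are hypothetical stand-ins, not Syn.Workspace types.

```csharp
using System.ComponentModel;
using System.Runtime.CompilerServices;

// Hypothetical stand-in for a node base class; not the Syn.Workspace implementation.
public class NotifyingBase : INotifyPropertyChanged
{
    public event PropertyChangedEventHandler PropertyChanged;

    // CallerMemberName makes the compiler pass the name of the calling property.
    protected void OnPropertyChanged([CallerMemberName] string propertyName = null)
    {
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
    }
}

// Mirrors the shape of the KeyCombinations property from the article.
public class KeyHolder : NotifyingBase
{
    private string _keyCombinations;

    public string KeyCombinations
    {
        get => _keyCombinations;
        set { _keyCombinations = value; OnPropertyChanged(); }
    }
}
```

Under this assumption, an editor that subscribes to PropertyChanged gets notified with the property name every time the port value is edited.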

Let's now expand the OnTriggered() function to something that we want the Node to do when it's triggered.

But before that, we'll have to use the System.Windows.Forms namespace, which you'll have to reference manually.

To do so, tick System.Windows.Forms in Reference Manager as shown below:

Now that we've added the right reference, change OnTriggered() method to the following:

    public override void OnTriggered(object sender, TriggerEventArgs eventArgs)
    {
        try
        {
            // Send the configured keys to whichever window is currently active.
            SendKeys.SendWait(KeyCombinations);
            VirtualAssistant.Instance
                .Logger.Info<GameCommanderNode>($"Sending Keys: \"{KeyCombinations}\"");

            // Pass the trigger on to any connected child nodes.
            RaiseTriggerFlow(sender, eventArgs);
        }
        catch (Exception e)
        {
            Workspace.Logger.Error<GameCommanderNode>(e);
        }
    }

All the above code does is call the SendKeys.SendWait() method with the KeyCombinations value, which sends those keystrokes to the active window and waits for them to be processed.
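One thing worth knowing is that SendKeys does not treat its argument as plain text: characters such as +, ^, % and ~ act as modifiers or special keys, and braces wrap key names like {UP}. If you ever want to send such a character literally, it has to be wrapped in braces. Here is a small sketch of that; the helper `SendKeysEscaper` is my own illustration and is not part of the plugin.

```csharp
using System.Text;

// Hypothetical helper; not part of the article's plugin or of VA Framework.
public static class SendKeysEscaper
{
    // Characters that SendKeys requires to be enclosed in braces when meant literally.
    private const string Special = "+^%~(){}[]";

    // Wraps every special character in braces so it is sent as typed.
    public static string EscapeLiteral(string text)
    {
        var builder = new StringBuilder();
        foreach (char c in text)
        {
            if (Special.IndexOf(c) >= 0)
                builder.Append('{').Append(c).Append('}');
            else
                builder.Append(c);
        }
        return builder.ToString();
    }
}
```

For our arrow keys no escaping is needed, because {UP} is meant to be interpreted as a key name.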

On a side note, the RaiseTriggerFlow() method is called to continue what is called a sequential node trigger, i.e., once this node has executed its own code block, it triggers its child nodes.
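If the idea of a sequential trigger feels abstract, the following toy model may help. It is a deliberately tiny sketch and not the real FunctionNode API: `SketchNode`, its `Children` list and its parameterless `RaiseTriggerFlow()` are hypothetical names used only to show the ordering.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical miniature of a trigger chain; the real Syn.Workspace classes may work differently.
public class SketchNode
{
    private readonly Action _work;

    public List<SketchNode> Children { get; } = new List<SketchNode>();

    public SketchNode(Action work)
    {
        _work = work;
    }

    public void Trigger()
    {
        _work();            // run this node's own code first
        RaiseTriggerFlow(); // then hand the trigger to the children
    }

    private void RaiseTriggerFlow()
    {
        foreach (var child in Children)
        {
            child.Trigger();
        }
    }
}
```

The point to take away is the order: a node that forgets to raise the trigger flow would silently stop the chain at itself.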

Overall Code

    using System;
    using System.Windows.Forms;
    using Syn.Workspace;
    using Syn.Workspace.Attributes;
    using Syn.Workspace.Events;
    using Syn.Workspace.Nodes;

    namespace Syn.VA.Plugins.GameCommander.Nodes
    {
        [Node(Category = CategoryTypes.VaFramework, DisplayName = "Game Commander")]
        public class GameCommanderNode : FunctionNode
        {
            private string _keyCombinations;

            public GameCommanderNode(WorkspaceGraph workspace) : base(workspace) { }

            // Port that holds the keys to send, e.g. {UP}.
            [InputPort]
            [PortEditor]
            public string KeyCombinations
            {
                get => _keyCombinations;
                set { _keyCombinations = value; OnPropertyChanged(); }
            }

            public override void OnTriggered(object sender, TriggerEventArgs eventArgs)
            {
                try
                {
                    // Send the configured keys to the active window.
                    SendKeys.SendWait(KeyCombinations);
                    VirtualAssistant.Instance
                        .Logger.Info<GameCommanderNode>($"Sending Keys: \"{KeyCombinations}\"");

                    // Continue with any connected child nodes.
                    RaiseTriggerFlow(sender, eventArgs);
                }
                catch (Exception e)
                {
                    Workspace.Logger.Error<GameCommanderNode>(e);
                }
            }
        }
    }

Separate Plugin for the Node?

This might seem a bit odd but it makes a lot more sense if we try to comprehend how a Node gets imported into Oryzer Studio for interaction. We've created a GameCommanderNode to be available in Oryzer Studio. This node is only going to be visible (for some reason as of the date of writing this article) if a separate WorkspacePlugin implementation exposes it.

We don't need to get in-depth into that for now, so let's just create a Workspace Plugin.

Create a new class file called GameCommanderWorkspacePlugin and add the following code to it:

    using Syn.Workspace;

    namespace Syn.VA.Plugins.GameCommander.Nodes
    {
        public class GameCommanderWorkspacePlugin : WorkspacePlugin
        {
            public GameCommanderWorkspacePlugin(WorkspaceGraph workspace) : base(workspace)
            {
                // Register the node type so that it becomes available in Oryzer Studio.
                workspace.Nodes.RegisterType<GameCommanderNode>();
            }
        }
    }

Placing the Plugin File

Now that the C# coding part is done, we'll compile the project so that a Dynamic Link Library (DLL) file is created and can be placed in the Assistant's Plugins directory. For this:

- Build the Project by hitting F6 (or Ctrl+Shift+B, depending on your keyboard scheme).

- Open the Bin/Release directory and copy Syn.VA.Plugins.GameCommander.dll (the DLL is named after the project).

- Run VA Framework Assistant.

- Open Settings Panel by clicking on the gear symbol on the top left corner.

- Select System and click on Open Working Directory as shown below:

- Browse to the Plugins directory and paste the copied file there:

We are now done with the Plugin creation part. Let's move on to some flow-based programming to create commands that will execute the node we've created.

Flow Based Programming

The next major part of our development is to create a visual graph in which we'll specify a set of commands and bind some keyboard keys to them. When a command is used, our node will be triggered and, in turn, the specified keyboard keys will be sent to the active window.

If you've followed one of my previous articles on creating a Bot's knowledge (mentioned above), you'll find it a lot easier to follow what's next.

I'll show you how to create the first intent, which will trigger the Node we've created when the user says Up Arrow.

For this, let's:

- Press Ctrl+F and search for the Oscova Bot node.

- Drag it on to the Workspace.

- Drag a Dialog and an Intent node.

- Connect them as shown below:

So what we've done so far is that we've created an Intent with the name up_arrow_intent and this intent will be invoked/called when a specified expression matches user input.

Next, let's set up a Response node and connect the Game Commander node to it.

- Again, search for a Response node by pressing Ctrl+F.

- Connect it to the Intent node.

- Add an Expression node and set the value as {up arrow}.

- Connect an Input Text node and set the text value as Up Arrow Pressed.

- Finally, add the Game Commander node and set the key combination value to {UP}.

I've now shown how you can add a command (intent) for the user command Up Arrow.

Although you might have figured it out already, I'll still add the screenshots for the Down, Left and Right Arrow commands.

Down Arrow Key Command

Left Arrow Key Command

Right Arrow Key Command
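To summarise the four bindings in one place, here is a small lookup sketch. Only the Up Arrow binding ({UP}) is spelled out in the text above; I'm assuming the other three follow the same pattern with the standard SendKeys codes {DOWN}, {LEFT} and {RIGHT}. The class `ArrowBindings` is hypothetical and only mirrors what the Workspace graph does visually.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical summary of the four intents; the actual binding lives in the Workspace graph.
public static class ArrowBindings
{
    // Spoken command -> SendKeys code. Only {UP} is confirmed by the article text.
    private static readonly Dictionary<string, string> Map =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            ["up arrow"] = "{UP}",
            ["down arrow"] = "{DOWN}",
            ["left arrow"] = "{LEFT}",
            ["right arrow"] = "{RIGHT}",
        };

    // Looks up the key code for a spoken command, ignoring case and outer whitespace.
    public static bool TryGetKeys(string command, out string keys)
    {
        return Map.TryGetValue(command.Trim(), out keys);
    }
}
```

The value returned for a recognised command is exactly what you would type into the Game Commander node's key combination port.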

Now that the knowledge-base for the bot is ready, we'll go ahead and save this Workspace project and then copy and paste the file in the Knowledge directory of the AI Assistant.

- Save the Workspace by pressing Ctrl+S.

- Name the project file as Game-Commander.west.

- Copy this file.

- Open VA Framework.

- Open the Settings Panel by clicking on the gear symbol on the top-left.

- Select System and click on Open Working Directory.

- Browse to Knowledge directory and paste the copied file there.

We are now done with the knowledge-base part as the pasted file will be read and imported by the AI Assistant on next restart.

Adding Voice Commands

We shall now continue to add voice commands to the AI Assistant so that we can use speech recognition to trigger the commands we've stored in the Workspace project.

- Open VA Framework.

- Click on the Settings Panel Icon on the top left.

- Select Speech>Voice Commands and click on the + Icon on the bottom right.

- Add the 4 voice commands: Up Arrow, Down Arrow, Left Arrow and Right Arrow.

- Click on the Save button on the bottom-right.

- Restart the Digital Assistant.

Let's Test

We are now done creating the plugin, creating a Workspace Knowledge-base project and setting up Voice Commands.

- Let's open VA Framework.

- Click on the Speech Icon.

- Open up Tetris Online.

- Keep the Browser Window Focused and use voice commands to navigate.
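The reason the browser window must stay focused is that SendKeys always targets the active window. If the keys seem to go nowhere, a quick way to debug is to check which window currently has focus. The sketch below uses the Win32 functions GetForegroundWindow and GetWindowText via P/Invoke, so it is Windows only. The class `ForegroundWindowSketch` and the idea of matching on a title fragment are my own assumptions and not something the plugin does.

```csharp
using System;
using System.Runtime.InteropServices;
using System.Text;

// Hypothetical debugging aid; not part of the article's plugin.
public static class ForegroundWindowSketch
{
    [DllImport("user32.dll")]
    private static extern IntPtr GetForegroundWindow();

    [DllImport("user32.dll", CharSet = CharSet.Unicode)]
    private static extern int GetWindowText(IntPtr hWnd, StringBuilder text, int maxCount);

    // Reads the title of the window that currently has focus (Windows only).
    public static string GetActiveWindowTitle()
    {
        var buffer = new StringBuilder(256);
        GetWindowText(GetForegroundWindow(), buffer, buffer.Capacity);
        return buffer.ToString();
    }

    // Pure helper: does the title contain the expected fragment, ignoring case?
    public static bool TitleMatches(string title, string expectedFragment)
    {
        return title.IndexOf(expectedFragment, StringComparison.OrdinalIgnoreCase) >= 0;
    }
}
```

For example, you could log GetActiveWindowTitle() just before sending keys and check whether it contains the word you expect from your game's browser tab.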

And there we are! We've finally built a custom plugin that enables us to play Tetris using automatic speech recognition (ASR) on our Assistant.

Points of Interest

Unlike my previous articles on bot development, this is the first time that I've written an article on plugin development. I find it exciting to play around with witty software technologies. There's a part that I haven't covered, and that's settings management in VA Framework. I am currently tinkering with it. If I get into something exciting, I shall make this a two-part article later on.

History

- 7th September, 2020 - Initial release