Here we’ll launch our person detection software on a Raspberry Pi device.

In the previous article of this series, we wrote the Python code for detecting persons in images using SSD models. In this article, we’ll install Python-OpenCV on a Raspberry Pi device and then run that code on it.

First, download and install Raspberry Pi OS, the official operating system for the Pi device series. We used Raspberry Pi OS (32-bit) with desktop and recommended software. The OS image can be written to the device’s SD card using a disk imager; on our Windows desktop, we used Win32DiskImager. On the first boot, the system prompts you to update all pre-installed system packages. We recommend that you do this, although the update can take several minutes.

Now it’s time to install Python-OpenCV on the device. There are two main options: compile OpenCV from source on the device, or install a prebuilt package. We chose the latter for simplicity. Fortunately, Python packages are well supported on Raspberry Pi. Before installing the OpenCV library, we need to install some prerequisites, such as the HDF5 and Qt packages. Next, set up a virtual environment for the OpenCV package. We installed the virtualenv and virtualenvwrapper tools, and then created and activated a virtual environment named ‘cv’. Finally, we installed the opencv-contrib-python package in that environment. This package is highly recommended because it bundles the main OpenCV modules together with the contrib modules, which covers everything our code needs.
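Before moving on, it can help to confirm that the package is actually importable from inside the ‘cv’ environment, since a missing system library is a common cause of import failures on the Pi. The following is a minimal sketch, not part of the original code; the `check_opencv` helper and its report keys are our own hypothetical names, and it assumes the model loader relies on OpenCV’s `dnn` module.

```python
import importlib
import importlib.util


def check_opencv():
    """Report whether the cv2 package can be found and imported here."""
    report = {"found": False, "version": None, "has_dnn": False, "error": None}

    # find_spec returns None when no cv2 package is visible to this interpreter
    if importlib.util.find_spec("cv2") is None:
        return report
    report["found"] = True

    try:
        cv2 = importlib.import_module("cv2")
    except ImportError as exc:
        # Typically a missing shared library (one of the prerequisites)
        report["error"] = str(exc)
        return report

    report["version"] = getattr(cv2, "__version__", None)
    # Assumption: the detection code needs the cv2.dnn module
    report["has_dnn"] = hasattr(cv2, "dnn")
    return report


if __name__ == "__main__":
    print(check_opencv())
```

If `found` is false, the script was most likely started outside the virtual environment. If `error` is set, the message usually names the library that still needs to be installed.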

Now we can use the virtual environment to run, on the Pi device, the person detection code that we developed in the previous article. The classes are the same as in the desktop application; only the paths to the DNN model files and the image directories need to change. Here is the code modified for the Pi 3 device:

import os

import cv2

proto_file = r"/home/pi/Desktop/PI_RPD/mobilenet.prototxt"

model_file = r"/home/pi/Desktop/PI_RPD/mobilenet.caffemodel"

ssd_net = CaffeModelLoader.load(proto_file, model_file)

print("Caffe model loaded from: "+model_file)

proc_frame_size = 300

ssd_proc = FrameProcessor(proc_frame_size, 1.0/127.5, 127.5)

person_class = 15

ssd = SSD(ssd_proc, ssd_net)

im_dir = r"/home/pi/Desktop/PI_RPD/test_images"

im_name = "woman_640x480_01.png"

im_path = os.path.join(im_dir, im_name)

image = cv2.imread(im_path)

print("Image read from: "+im_path)

obj_data = ssd.detect(image)

persons = ssd.get_objects(image, obj_data, person_class, 0.5)

person_count = len(persons)

print("Person count on the image: "+str(person_count))

Utils.draw_objects(persons, "PERSON", (0, 0, 255), image)

res_dir = r"/home/pi/Desktop/PI_RPD/results"

res_path = os.path.join(res_dir, im_name)

cv2.imwrite(res_path, image)

print("Result written to: "+res_path)

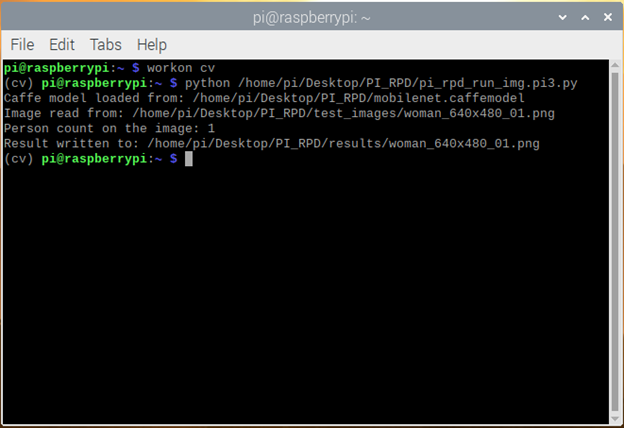
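Because the paths are the only thing that changes between the desktop and the Pi, a mistyped path is the most likely source of trouble. `cv2.imread` typically returns `None` for an unreadable file rather than raising an error, so the failure may only surface later in the detection step. The sketch below checks the paths used in the code above before anything is loaded; `find_missing` is a hypothetical helper of our own, not part of the original classes.

```python
from pathlib import Path


def find_missing(paths):
    """Return the entries of `paths` that do not exist on disk, in order."""
    return [str(p) for p in paths if not Path(p).exists()]


# Paths taken from the Pi version of the code above
base = Path("/home/pi/Desktop/PI_RPD")
required = [
    base / "mobilenet.prototxt",
    base / "mobilenet.caffemodel",
    base / "test_images" / "woman_640x480_01.png",
    base / "results",  # output directory; must exist before writing results
]

missing = find_missing(required)
if missing:
    print("Missing paths:")
    for path in missing:
        print("  " + path)
else:
    print("All paths found")
```

Running this check first gives a clear message about which file or directory is absent, instead of an error raised deep inside the model or image processing code.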

If our code resides in a single Python file, say pi_rpd_run_img.pi3.py, we can launch it from the terminal with two consecutive commands. The first activates the ‘cv’ virtual environment, and the second runs the script:

workon cv

python /home/pi/Desktop/PI_RPD/pi_rpd_run_img.pi3.py

Here is the screenshot of the terminal response:

As you can see from the picture above, the code works on the Pi device the same as it did on a desktop computer. And, as expected, it produces the same person detections in the test images:

So we’ve shown that the SSD model detects persons in images on the Raspberry Pi device with the same precision as on a desktop or laptop computer. The difference is speed: on an edge device, processing time can become an issue.
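To put a number on that difference, the detection call can be timed on both machines. Here is a minimal sketch using only the standard library; `average_seconds` is a hypothetical helper of our own, and the workload in the demo is a stand-in for the real detection call.

```python
import time


def average_seconds(func, repeats=10):
    """Call func() `repeats` times and return the mean wall-clock time per call."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    start = time.perf_counter()
    for _ in range(repeats):
        func()
    return (time.perf_counter() - start) / repeats


if __name__ == "__main__":
    # Stand-in workload; in the detection script this would be
    # something like: lambda: ssd.detect(image)
    seconds = average_seconds(lambda: sum(range(100_000)))
    print("Average time per call: {:.4f} s".format(seconds))
```

Averaging over several calls smooths out one-off costs, such as the first inference after the model is loaded, which is often slower than the following ones.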

Next Steps

In the next article, we’ll test the accuracy and performance of the MobileNet and SqueezeNet models on the Raspberry Pi device. We’ll select the better option and use it for further testing on a video clip, and then in real-time mode.