Here we’ll use YOLO to detect and count the number of people in a video sequence.

So far in the series, we have been working with still image data. In this article, we’ll use a basic implementation of YOLO to detect and count people in video sequences.

Let’s again start by importing the required libraries.

import cv2

import numpy as np

import time

Here, we’ll use the same files and code pattern as discussed in the previous article. If you haven’t read the article, I would suggest you give it a read since it’ll clear the basics of the code. For reference, we’ll load the YOLO model here.

net=cv2.dnn.readNet("./yolov3.weights", "./yolov3.cfg")

classes=[]

with open("coco.names","r") as f:

classes=[line.strip() for line in f.readlines()]

layer_names=net.getLayerNames()

output_layers=[layer_names[i[0]-1] for i in net.getUnconnectedOutLayers()]

Now, instead of image, we’ll feed our model a video file. OpenCV provides a simple interface to deal with video files. We’ll create an instance of the VideoCapture object. Its argument can either be the index of an attached device or a video file. I’ll be working with a video.

cap = cv2.VideoCapture('./video.mp4')

We’ll save the output as a video sequence as well. To save our video output, we’ll use a VideoWriter object instance from Keras.

out_video = cv2.VideoWriter( 'human.avi', cv2.VideoWriter_fourcc(*'MJPG'), 15., (640,480))

Now we’ll capture the frames from the video sequence, process them using blob and get the detection. (Check out the previous article for a detailed explanation.)

frame = cv2.resize(frame, (640, 480))

height,width,channel=frame.shape

blob=cv2.dnn.blobFromImage(frame,1/255,(320,320),(0,0,0),True,crop=False)

net.setInput(blob)

outs=net.forward(outputlayers)

Let’s get the coordinates of the bounding box for each detected object and apply a threshold to eliminate weak detections. Of course, let’s not forget to apply Non-Maximum Suppression.

class_ids=[]

confidences=[]

boxes=[]

for out in outs:

for detection in out:

scores=detection[5:]

class_id=np.argmax(scores)

confidence=scores[class_id]

if confidence>0.5:

center_x=int(detection[0]*width)

center_y=int(detection[1]*height)

w=int(detection[2]*width)

h=int(detection[3]*height)

x=int(center_x-w/2)

y=int(center_y-h/2)

boxes.append([x,y,w,h])

confidences.append(float(confidence))

class_ids.append(class_id)

indexes=cv2.dnn.NMSBoxes(boxes,confidences,0.4,0.6)

Draw the final boundary boxes on the detected objects and increment the counter for each detection.

count = 0

for i in range(len(boxes)):

if i in indexes:

x,y,w,h=boxes[i]

label=str(classes[class_ids[i]])

color=COLORS[i]

if int(class_ids[i] == 0):

count +=1

cv2.rectangle(frame,(x,y),(x+w,y+h),color,2)

cv2.putText(frame,label+" "+str(round(confidences[i],3)),(x,y-5),font,1, color, 1)

We can draw the frame number as well as a counter right on the screen so that we know how many people there are in each frame.

cv2.putText(frame, str(count), (100,200), cv2.FONT_HERSHEY_DUPLEX, 2, (0, 255, 255), 10)

cv2.imshow("Detected_Images",frame)

Our model will now count the number of people present in the frame. For a better estimate of queue length, we can define our region of interest as well prior to processing.

ROI = [(100,100),(1880,100),(100,980),(1880,980)]

And then focus the frame only in that region as follows:

cv2.rectangle(frame, ROI[0], ROI[3], (255,255,0), 2)

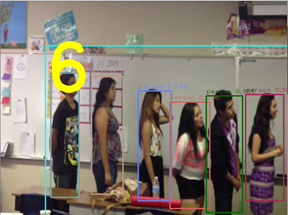

Here’s a snapshot of the final video being processed.

End Note

In this series of articles, we learned to implement deep neural networks for computer vision problems. We implemented the neural networks from scratch, used transfer learning, and used pre-trained models to detect objects of interest. At first glance, it seemed like it might be better to use custom trained models when we’re working with a congested scene where objects of interest are not clearly visible. Custom trained models would be more accurate in such cases, but the accuracy comes at the cost of time and resource consumption. Some state-of-the-art algorithms like YOLO are more efficient than custom trained models and can be implemented in real time while maintaining a reasonable level of accuracy.

The solutions we explored in this series are not perfect and can be improved, but you should now be able to see a clear picture of what it means to work with deep learning models for object detection. I encourage you to experiment with the solutions we went through. Maybe you could fine-tune parameters to get a better prediction, or implement ROI or LOI to get a better estimate of the number of people in a queue. You can experiment with object tracking as well, just don’t forget to share your findings with us. Happy coding!