Here we’ll cover the basic UI setup for an Android application that will use our trained model for lightning detection.

Introduction

In the previous article, we trained a TensorFlow model on our curated dataset using Teachable Machine and exported the trained model in the TFLite format.

Tools to Use

Have a look at the tools and their versions that we’ve used.

| Tool | Version |
| --- | --- |
| IDE: Android Studio | Android Studio 4.0.1; Build #AI-193.6911.18.40.6626763, built on June 24 2020; Runtime version: 1.8.0_242-release-1644-b01 amd64; VM: OpenJDK 64-Bit Server VM by JetBrains s.r.o |
| Testing on an Android device | Samsung SM-A710FD, Android version 7.0; Huawei MediaPad T3 10, Android version 7.0 |
| Java | 8 Update 231 |
| Java SE | 8 Update 131 |

The above specs are not the only ones that will work for our task. Feel free to experiment with alternative tools and versions, such as IntelliJ IDEA as the IDE.

Android Studio Setup

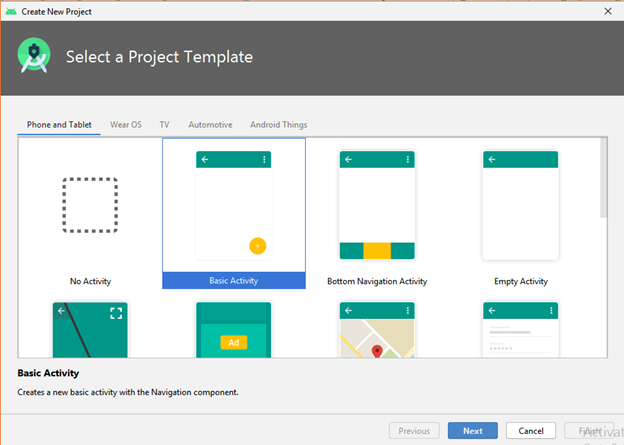

First, let's create a new Android project. Choose the basic activity as shown below.

Then, select "API 23" as Minimum SDK and fill in, other respective fields as follows.

The project hierarchy appears in the Android Studio UI. We'll start with the Gradle files that Android Studio created when it generated the project.

The build.gradle (project level) file is good as is. Just make sure the Android Gradle plugin classpath is up to date and that you've synced the project:

dependencies {

classpath "com.android.tools.build:gradle:4.0.1"

}

In the build.gradle (app level) file, enter this code inside the android block:

aaptOptions {

noCompress "tflite"

}

Then, in the same android block, add this snippet:

compileOptions {

sourceCompatibility JavaVersion.VERSION_1_8

targetCompatibility JavaVersion.VERSION_1_8

}
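The noCompress "tflite" entry keeps the model stored uncompressed inside the APK, which is what allows a loader to read or memory-map it directly by offset and length instead of unpacking it first. The following is a minimal plain-Java sketch of that idea, not code from this project: the class name ModelMapper and the method mapRegion are hypothetical, and a regular file stands in for the APK.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Hypothetical helper that illustrates memory-mapping an uncompressed byte range. */
final class ModelMapper {
    private ModelMapper() {}

    /**
     * Maps `length` bytes of `file`, starting at `offset`, as a read-only buffer.
     * This only yields usable model bytes if the range is stored uncompressed.
     */
    static MappedByteBuffer mapRegion(Path file, long offset, long length) throws IOException {
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            // The mapping stays valid after the channel is closed.
            return channel.map(FileChannel.MapMode.READ_ONLY, offset, length);
        }
    }
}
```

On Android, the offset and length would typically come from the AssetFileDescriptor returned by AssetManager.openFd, which only works for assets that were not compressed at build time.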

Next, add the libraries this project needs, on top of the default ones, to the dependencies block of the build.gradle (app level) file:

implementation 'com.google.mlkit:object-detection:16.2.1'

implementation 'com.google.mlkit:object-detection-custom:16.2.1'

implementation "androidx.lifecycle:lifecycle-livedata:2.2.0"

implementation "androidx.lifecycle:lifecycle-viewmodel:2.2.0"

implementation 'androidx.appcompat:appcompat:1.2.0'

implementation 'androidx.annotation:annotation:1.1.0'

implementation 'androidx.constraintlayout:constraintlayout:2.0.1'

implementation 'com.google.guava:guava:17.0'

implementation 'com.google.guava:listenablefuture:9999.0-empty-to-avoid-conflict-with-guava'

After adding these dependencies, sync the project when Android Studio prompts you.

Now move on to the AndroidManifest.xml file and declare the camera permission and features:

<uses-permission android:name="android.permission.CAMERA" />

<uses-feature android:name="android.hardware.camera" />

<uses-feature android:name="android.hardware.camera.autofocus" />
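With API 23 as the minimum SDK, declaring CAMERA in the manifest is not enough on its own: the user also has to grant the permission at runtime, and the app receives the outcome as an array of integer results. Here is a minimal plain-Java sketch of the check on that array. The class CameraPermissionCheck and its method are hypothetical, and the two constants simply mirror the values of PackageManager.PERMISSION_GRANTED and PackageManager.PERMISSION_DENIED so that the snippet runs outside Android.

```java
import java.util.Arrays;

/** Hypothetical helper for interpreting runtime permission results. */
final class CameraPermissionCheck {
    // Same values as PackageManager.PERMISSION_GRANTED and PERMISSION_DENIED.
    static final int PERMISSION_GRANTED = 0;
    static final int PERMISSION_DENIED = -1;

    private CameraPermissionCheck() {}

    /** Returns true only if at least one result was delivered and every result is "granted". */
    static boolean allGranted(int[] grantResults) {
        if (grantResults == null || grantResults.length == 0) {
            // An empty array means the permission request was cancelled.
            return false;
        }
        return Arrays.stream(grantResults).allMatch(r -> r == PERMISSION_GRANTED);
    }
}
```

In an activity, the array would typically arrive in onRequestPermissionsResult after a call such as ActivityCompat.requestPermissions. If the check fails, the app can show the no_camera_permission message and exit.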

Now let’s dive into the basic setup and the front-end matters. Your activity_main.xml file should look like this:

<?xml version="1.0" encoding="utf-8"?>

<androidx.coordinatorlayout.widget.CoordinatorLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<include layout="@layout/content_main" />

</androidx.coordinatorlayout.widget.CoordinatorLayout>

The content_main.xml file should look as follows:

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout

xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:keepScreenOn="true">

<com.ruturaj.detectlightning.mlkit.CameraSourcePreview

android:id="@+id/preview_view"

android:layout_width="match_parent"

android:layout_height="0dp"

app:layout_constraintTop_toTopOf="parent"/>

<com.ruturaj.detectlightning.mlkit.GraphicOverlay

android:id="@+id/graphic_overlay"

android:layout_width="0dp"

android:layout_height="0dp"

app:layout_constraintLeft_toLeftOf="@id/preview_view"

app:layout_constraintRight_toRightOf="@id/preview_view"

app:layout_constraintTop_toTopOf="@id/preview_view"

app:layout_constraintBottom_toBottomOf="@id/preview_view"/>

</androidx.constraintlayout.widget.ConstraintLayout>

Create a new folder called assets to store our TFLite model and the label file. Unzip the file downloaded from Teachable Machine and copy the model and label files it contains into this folder.
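The label file is plain text with one class per line. Assuming the usual Teachable Machine layout, where each line starts with a numeric index followed by the class name, the names can be recovered with a few lines of Java. This is only a sketch: the class LabelFileParser is hypothetical, and the class names in the usage note are placeholders rather than the labels of the actual model.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical parser for a label file with one "<index> <name>" entry per line. */
final class LabelFileParser {
    private LabelFileParser() {}

    /** Returns the class names in file order, dropping blank lines and numeric prefixes. */
    static List<String> parseLabels(String fileContents) {
        List<String> labels = new ArrayList<>();
        for (String line : fileContents.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue; // skip blank lines
            }
            int space = trimmed.indexOf(' ');
            // Strip the leading index only when the first token is purely numeric.
            if (space > 0 && isDigits(trimmed.substring(0, space))) {
                trimmed = trimmed.substring(space + 1).trim();
            }
            labels.add(trimmed);
        }
        return labels;
    }

    private static boolean isDigits(String s) {
        for (int i = 0; i < s.length(); i++) {
            if (!Character.isDigit(s.charAt(i))) {
                return false;
            }
        }
        return !s.isEmpty();
    }
}
```

For example, passing the text "0 Lightning" and "1 No lightning" on two lines would yield a list containing "Lightning" and "No lightning". Lines without a numeric prefix are kept unchanged.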

We need to add the following to the pre-generated strings in the strings.xml file:

<string name="permission_camera_rationale">Access to the camera is needed for detection</string>

<string name="no_camera_permission">This application cannot run because it has no camera permission. The application will now exit.</string>

<string name="desc_camera_source_activity">Vision detection demo with live camera preview</string>

<string name="pref_category_key_camera" translatable="false">pckc</string>

<string name="pref_category_title_camera">Camera</string>

<string name="pref_key_rear_camera_preview_size" translatable="false">rcpvs</string>

<string name="pref_key_rear_camera_picture_size" translatable="false">rcpts</string>

<string name="pref_key_front_camera_preview_size" translatable="false">fcpvs</string>

<string name="pref_key_front_camera_picture_size" translatable="false">fcpts</string>

<string name="pref_key_camerax_target_resolution" translatable="false">ctas</string>

<string name="pref_key_camera_live_viewport" translatable="false">clv</string>

<string name="pref_title_rear_camera_preview_size">Rear camera preview size</string>

<string name="pref_title_front_camera_preview_size">Front camera preview size</string>

<string name="pref_title_camerax_target_resolution">CameraX target resolution</string>

<string name="pref_title_camera_live_viewport">Enable live viewport</string>

<string name="pref_summary_camera_live_viewport">Do not block camera preview drawing on detection</string>

<string name="pref_title_object_detector_enable_multiple_objects">Enable multiple objects</string>

<string name="pref_key_live_preview_object_detector_enable_multiple_objects" translatable="false">lpodemo</string>

<string name="pref_key_still_image_object_detector_enable_multiple_objects" translatable="false">siodemo</string>

<string name="pref_title_object_detector_enable_classification">Enable classification</string>

<string name="pref_key_live_preview_object_detector_enable_classification" translatable="false">lpodec</string>

<string name="pref_key_still_image_object_detector_enable_classification" translatable="false">siodec</string>

Did You Know…?

Did you know that this project could be developed in a number of ways? Yes, it could! Let's briefly discuss them.

For this project, I've chosen Google ML Kit because it seemed the best fit for building my own demo. Here is the dependency I've used:

implementation 'com.google.mlkit:object-detection-custom:16.2.1'

The ML-related class files were heavily inspired by ML Kit, and I have carefully chosen and organized them for this project.

The MainActivity.java file will be explained in the next article.

You can download the entire project and try building it. A disclaimer: the solution I'm offering is far from "ideal"; it was just expedient and simple to explain. There are multiple ways to bring a trained model into the Android environment. For example, some developers prefer to build the Android project and generate the .apk file with Bazel.

Next Steps

In the next article, we’ll set up the TFLite model in the Android environment and create a working demo application. Stay tuned!