Here we’ll decode the YOLO Core ML Model using array manipulations (vectorization) to get rid of loops. Understanding how it works will allow us to add this logic to the Core ML model in the next article.

Introduction

This series assumes that you are familiar with Python, Conda, and ONNX, as well as have some experience with developing iOS applications in Xcode. You are welcome to download the source code for this project. We’ll run the code using macOS 10.15+, Xcode 11.7+, and iOS 13+.

Decoding the YOLO Output the Right Way

If you had worked with neural networks or arrays before, you most likely cringed seeing our loops over cells and boxes (cy, cx, and b) in the last article. As a rule of thumb, if you need loops when working with arrays, you are doing it wrong. In this particular case, it was intentional, as these loops made it easier to grasp the underlying logic. Vectorized implementations are usually short but not very easy to understand at the first sight.

Note that the notebook in the code download for this article contains both the previous (loop-based) solution and the new one.

To start with vectorized decoding, we need a new softmax function working on 2D arrays:

def softmax_2d(x, axis=1):

x_max = np.max(x, axis=axis)[:, np.newaxis]

e_x = np.exp(x - x_max)

x_sum = np.sum(e_x, axis=axis)[:, np.newaxis]

return e_x / x_sum

Next, to get rid of the cy, cx and b loops, we need a few constant arrays:

ANCHORS_W = np.array([0.57273, 1.87446, 3.33843, 7.88282, 9.77052]).reshape(1, 1, 5)

ANCHORS_H = np.array([0.677385, 2.06253, 5.47434, 3.52778, 9.16828]).reshape(1, 1, 5)

CX = np.tile(np.arange(GRID_SIZE), GRID_SIZE).reshape(1, GRID_SIZE**2, 1)

CY = np.tile(np.arange(GRID_SIZE), GRID_SIZE).reshape(1, GRID_SIZE, GRID_SIZE).transpose()

CY = CY.reshape(1, GRID_SIZE**2, 1)

The ANCHORS array is now split into two: ANCHORS_W and ANCHORS_H.

The CX and CY arrays contain all the cx and cy value combinations, previously generated during the nested loops execution. The shapes of these arrays were set to simplify the subsequent operations.

Now we are ready to implement the vectorized decoding function:

def decode_preds_vec(raw_preds: []):

num_classes = len(COCO_CLASSES)

raw_preds = np.transpose(raw_preds, (0, 2, 3, 1))

raw_preds = raw_preds.reshape((1, GRID_SIZE**2, BOXES_PER_CELL, num_classes + 5))

decoded_preds = []

tx = raw_preds[:,:,:,0]

ty = raw_preds[:,:,:,1]

tw = raw_preds[:,:,:,2]

th = raw_preds[:,:,:,3]

tc = raw_preds[:,:,:,4]

x = ((CX + sigmoid(tx)) * CELL_SIZE).reshape(-1)

y = ((CY + sigmoid(ty)) * CELL_SIZE).reshape(-1)

w = (np.exp(tw) * ANCHORS_W * CELL_SIZE).reshape(-1)

h = (np.exp(th) * ANCHORS_H * CELL_SIZE).reshape(-1)

box_confidence = sigmoid(tc).reshape(-1)

classes_raw = raw_preds[:,:,:,5:5 + num_classes].reshape(GRID_SIZE**2 * BOXES_PER_CELL, -1)

classes_confidence = softmax_2d(classes_raw, axis=1)

box_class_idx = np.argmax(classes_confidence, axis=1)

box_class_confidence = classes_confidence.max(axis=1)

combined_box_confidence = box_confidence * box_class_confidence

decoded_boxes = np.stack([

box_class_idx,

combined_box_confidence,

x,

y,

w,

h]).transpose()

return sorted(list(decoded_boxes), key=lambda p: p[1], reverse=True)

First, to make calculations a little easier, we transpose the raw_preds array by moving the 425 values with the encoded box coordinates and class confidence to the last dimension. Then we reshape it from (1, 13, 13, 425) to (1, 13*13, 5, 85). This way, ignoring batch in the first position (always equal to 0), the order of dimensions matches the previous loops over cy (13), cx (13), and box (5).

Note that we had to use the shape (1, 13*13, 5, 85), instead of a more explicit (1, 13, 13, 5, 85), only because Core ML has some array rank limitations. It means that certain operations lead to exceptions on arrays with too many dimensions. Besides, considering the "hidden" internal sequence dimension, working with arrays in Core ML is not very intuitive.

Working on NumPy arrays, we could use the "longer" shape (1, 13, 13, 5, 85) but, to make operations easily convertible to Core ML, we had to reduce the number of dimensions by one, hence the shape (1, 13*13, 5, 85).

Now, the main change from the previous version is how the tx, ty, tw, th, and tc values, as well as classes_raw, are obtained. Instead of reading separate values corresponding to a single box within a single cell, we obtain an array with all corresponding values in a single step. This supports the following "single step" array operations, which make all the calculations extremely efficient, especially when executed on a chip optimized for array calculations, such as GPU or Neural Engine.

decoded_preds_vec = decode_preds_vec(preds)

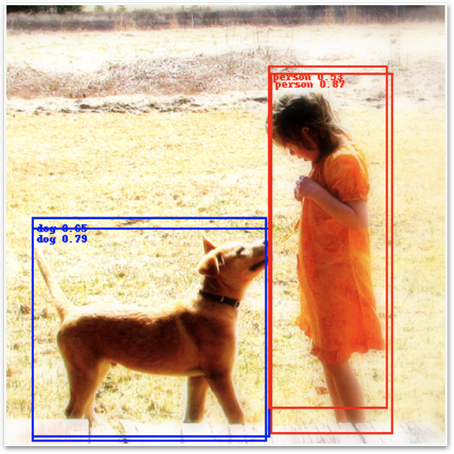

annotate_image(image, decoded_preds_vec)

Here is another example.

Next Steps

We now got the same results as in the previous, loop-based solution. It makes us ready to include detection decoding directly in the Core ML model. This eventually will allow us to use the object detection features of the Vision framework, which significantly simplifies the Swift code of the iOS application.