Here we will learn how to use mouse callbacks in OpenCV to select various objects in images. This functionality is commonly used to prepare test and training datasets, and to indicate detected objects.

In the first article of this series, we learned how to use OpenCV to work with images and video sequences. We learned how to read, write and display images and videos from a webcam and video files.

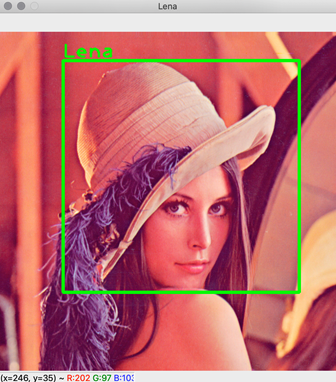

In this article, we will learn how to annotate objects in images with OpenCV, as shown in the image above. You typically need this when training an object detector, to mark the objects in the images of your training and test datasets.

OpenCV provides a mechanism that enables you to select shapes in images. Namely, you can attach a mouse callback to a window that OpenCV displays (a window created with the imshow or namedWindow functions). The callback tells you when the user presses or releases a mouse button and where the pointer is at that moment.

I will show you how to respond to mouse events with OpenCV and Python. You can find the companion source code here.

Common Module

To group the various constants I used in the code, I created the common.py module:

import cv2 as opencv

LENA_FILE_PATH = '../Images/Lena.png'
WINDOW_NAME = 'Lena'

GREEN = (0, 255, 0)
LINE_THICKNESS = 3

FONT_FACE = opencv.FONT_HERSHEY_PLAIN
FONT_SCALE = 2
FONT_THICKNESS = 2
FONT_LINE = opencv.LINE_AA

TEXT_OFFSET = 4

This module defines several constants:

LENA_FILE_PATH – Points to the Lena image location.

WINDOW_NAME – Stores the window name.

GREEN and LINE_THICKNESS – Define the color (in BGR format) and the line thickness used to draw the rectangle.

FONT_FACE, FONT_SCALE, etc. – Parameters for displaying the object label.

TEXT_OFFSET – Defines the gap (in pixels) between the label and the rectangle.
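Because green has the same components in RGB and BGR order, the GREEN constant does not reveal OpenCV's channel order. The sketch below uses a hypothetical helper, rgb_to_bgr (not part of OpenCV or of the companion code), to show how a color written in the more familiar RGB order would be converted before being passed to a drawing function:

```python
def rgb_to_bgr(color):
    """Reorder an (R, G, B) tuple into OpenCV's (B, G, R) channel order."""
    red, green, blue = color
    return (blue, green, red)


# Pure red in RGB order becomes (0, 0, 255) in BGR order.
RED = rgb_to_bgr((255, 0, 0))

# Green is unchanged, because only the first and last channels swap.
GREEN = rgb_to_bgr((0, 255, 0))
```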

Mouse Callback

To handle mouse interaction with the OpenCV window, you set the mouse callback. To do so, you first create the window using the namedWindow function:

import cv2 as opencv
import common

opencv.namedWindow(common.WINDOW_NAME)

Then, invoke setMouseCallback as follows:

opencv.setMouseCallback(common.WINDOW_NAME, on_mouse_move)

The callback should have the following prototype:

def on_mouse_move(event, x, y, flags, param)

These are the five input parameters:

event – One of the mouse events, for example EVENT_LBUTTONDOWN, EVENT_LBUTTONUP, EVENT_MOUSEWHEEL, EVENT_RBUTTONDBLCLK.

x, y – The coordinates of the mouse cursor.

flags – Flags indicating whether a mouse button is down (EVENT_FLAG_LBUTTON, EVENT_FLAG_RBUTTON, EVENT_FLAG_MBUTTON) or whether the user is holding Ctrl (EVENT_FLAG_CTRLKEY), Shift (EVENT_FLAG_SHIFTKEY) or Alt (EVENT_FLAG_ALTKEY).

param – An optional parameter.
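The flags value is a bitmask, so several flags can be set at once (for example, the left button held down while Ctrl is pressed). The following is a minimal sketch of how such a value could be decoded. The MouseFlag enum and the active_flags function are hypothetical helpers, not OpenCV APIs; the numeric values are assumed to mirror OpenCV's EVENT_FLAG_* constants, which you would normally use directly:

```python
from enum import IntFlag


class MouseFlag(IntFlag):
    """Hypothetical helper; values mirror OpenCV's EVENT_FLAG_* constants."""
    LBUTTON = 1    # cv2.EVENT_FLAG_LBUTTON
    RBUTTON = 2    # cv2.EVENT_FLAG_RBUTTON
    MBUTTON = 4    # cv2.EVENT_FLAG_MBUTTON
    CTRLKEY = 8    # cv2.EVENT_FLAG_CTRLKEY
    SHIFTKEY = 16  # cv2.EVENT_FLAG_SHIFTKEY
    ALTKEY = 32    # cv2.EVENT_FLAG_ALTKEY


def active_flags(flags):
    """Return the names of all flags set in the bitmask given to the callback."""
    return [flag.name for flag in MouseFlag if flags & flag]
```

Inside a callback, a single test such as flags & opencv.EVENT_FLAG_CTRLKEY is usually enough; a helper like this is mostly useful for logging what the user was doing.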

Once you set up the callback, OpenCV will invoke it whenever a mouse event fires in that window. To let the user select an object, you can write the on_mouse_move callback like so:

rectangle_points = []

def on_mouse_move(event, x, y, flags, param):
    if event == opencv.EVENT_LBUTTONDOWN:
        rectangle_points.clear()
        rectangle_points.append((x, y))
    elif event == opencv.EVENT_LBUTTONUP:
        rectangle_points.append((x, y))
        display_lena_image(True)

The above function stores two opposite corners of the rectangle selected by the user in the rectangle_points list. When the user presses the left mouse button, the callback invokes two statements:

rectangle_points.clear()
rectangle_points.append((x, y))

The first statement clears the coordinate list. The second appends a new element built from the x and y parameters that OpenCV passes to the callback. These coordinates represent the first corner of the rectangle. The user then holds the left mouse button down and moves the cursor to the opposite corner of the rectangle. Releasing the left mouse button invokes the following statements from the on_mouse_move callback:

rectangle_points.append((x, y))
display_lena_image(True)

The first statement appends the x, y coordinates of the mouse cursor to the rectangle_points list. The second statement displays the Lena image using the following function:

def display_lena_image(draw_rectangle):
    file_path = common.LENA_FILE_PATH
    lena_img = opencv.imread(file_path)

    if draw_rectangle:
        draw_rectangle_and_label(lena_img, 'Lena')

    opencv.imshow(common.WINDOW_NAME, lena_img)
    opencv.waitKey(0)

The display_lena_image function shown above uses OpenCV's imread function to read the image from the file. It then checks whether the input parameter draw_rectangle is True. If so, it draws the rectangle selected by the user (see below) before showing the final image. To display the image, I use the imshow function, followed by waitKey to wait until the user presses any key.
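The user can drag in any direction, so the first stored point is not necessarily the top-left corner of the selection. If you later need the corners in a fixed order (for example, to save the annotation), you could normalize them first. The sketch below is one way to do that; normalize_rectangle is a hypothetical helper that is not part of the companion code:

```python
def normalize_rectangle(points):
    """Return (top_left, bottom_right) for two opposite corners in any order."""
    if len(points) != 2:
        raise ValueError('expected exactly two corner points')

    (x0, y0), (x1, y1) = points
    top_left = (min(x0, x1), min(y0, y1))
    bottom_right = (max(x0, x1), max(y0, y1))
    return top_left, bottom_right


# A drag from the bottom-right to the top-left yields the same rectangle
# as a drag from the top-left to the bottom-right.
top_left, bottom_right = normalize_rectangle([(200, 150), (50, 40)])
```

The function leaves its input untouched, so it can be called with the rectangle_points list once the list holds both corners.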

Drawing the Rectangle and Label

To draw the rectangle selected by the user, I implemented the draw_rectangle_and_label function:

def draw_rectangle_and_label(img, label):
    opencv.rectangle(img, rectangle_points[0], rectangle_points[1],
                     common.GREEN, common.LINE_THICKNESS)

    text_origin = (rectangle_points[0][0],
                   rectangle_points[0][1] - common.TEXT_OFFSET)

    opencv.putText(img, label, text_origin,
                   common.FONT_FACE, common.FONT_SCALE, common.GREEN,
                   common.FONT_THICKNESS, common.FONT_LINE)

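The above function uses two OpenCV functions: rectangle and putText.

The first one, as the name implies, draws the rectangle between the two stored corners.

The second function, putText, writes the label TEXT_OFFSET pixels above the first stored corner. The origin passed to putText is the bottom-left corner of the text.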
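When the selection starts close to the top edge of the image, a label placed above the corner can end up partly outside the image. The following sketch shows one possible way to handle that by moving the label just inside the rectangle. The label_origin function is a hypothetical helper, and it assumes the caller already knows the text height in pixels (OpenCV's getTextSize function can provide it):

```python
def label_origin(corner, text_height, offset=4):
    """Return a bottom-left text origin that keeps the label inside the image.

    corner      -- (x, y) of the rectangle corner the label is attached to
    text_height -- height of the rendered text in pixels
    offset      -- gap in pixels between the label and the rectangle edge
    """
    x, y = corner
    baseline_above = y - offset

    # Enough room above the corner: place the label over the rectangle.
    if baseline_above - text_height >= 0:
        return (x, baseline_above)

    # Otherwise place the label just below the top edge, inside the rectangle.
    return (x, y + offset + text_height)
```

The default offset of 4 matches the TEXT_OFFSET constant from common.py; the returned tuple could replace text_origin in draw_rectangle_and_label.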

Putting Things Together

I combined the above functions into one Python script, annotations.py (see companion code):

import cv2 as opencv
import common

rectangle_points = []

def draw_rectangle_and_label(img, label):
    ...  # body shown in "Drawing the Rectangle and Label"

def display_lena_image(draw_rectangle):
    ...  # body shown in "Mouse Callback"

def on_mouse_move(event, x, y, flags, param):
    ...  # body shown in "Mouse Callback"

opencv.namedWindow(common.WINDOW_NAME)
opencv.setMouseCallback(common.WINDOW_NAME, on_mouse_move)

display_lena_image(False)

To test the app, just run annotations.py. The code will create the window, set the mouse callback, and then display the Lena image without any annotations. You then select the rectangle, and the app will draw it on the image as shown in the introduction.

Wrapping Up

We learned how to use mouse callbacks in OpenCV to select various objects in images. This functionality is commonly used to prepare test and training datasets, and to indicate detected objects. In the next article, we will use drawing functions to depict detected objects.