Here we develop a small computer vision framework that enables us to run various computer vision and Deep Learning models with different parameters and compare their performance.

Introduction

Traffic speed detection is big business. Municipalities around the world use it to deter speeders and generate revenue via speeding tickets. But the conventional speed detectors, typically based on RADAR or LIDAR, are very expensive.

This article series shows you how to build a reasonably accurate traffic speed detector using nothing but Deep Learning, and run it on an edge device like a Raspberry Pi.

You are welcome to download code for this series from the TrafficCV Git repository. We assume that you are familiar with Python and have basic knowledge of AI and neural networks.

In the previous article, we discussed the basic implementation of a vehicle speed detection algorithm using a Haar object detector and an object correlation tracker. Both ran on the CPU and consumed a lot of it. This article focuses on developing a small computer vision framework that can run various Machine Learning and neural network models, like SSD MobileNet, on live and recorded vehicle traffic videos using either the CPU or an Edge TPU. This will let us easily compare the performance of different models and of different formulae for calculating vehicle speed.

Detector: Nuts and Bolts

We identified the tasks our traffic speed detector needs to perform in real time:

- Process a live video or other video source frame-by-frame

- Detect and track vehicle objects

- Measure and estimate vehicle object speeds

- Display object bounding boxes and other information in a video window

From a developer’s point of view, we also need to provide certain facilities:

- Offer a basic user interface including the ability to easily exit from the video processing loop

- Enter essential arguments, like the traffic video source and detector, in the command line

- Enter detector parameters, like the frame counter interval, in the command line

- Print out informational and debugging statements from the program

Our TrafficCV mini-framework consists of two main parts: the CLI and the Detector class. The TrafficCV CLI is the main interface to the program. It allows the user to set arguments and parameters for program execution. The CLI uses the standard ArgumentParser class from the argparse module.

parser = argparse.ArgumentParser()
parser.add_argument("--debug", help="Enable debug-level logging.",
                    action="store_true")
parser.add_argument("--test", help="Test if OpenCV can read from the default camera device.",
                    action="store_true")

The parsed arguments are made available as attributes of the args variable. For example, the --test argument indicates that we only want to test that our video input and video output are working:

if args.test:
    import cv2 as cv
    info('Streaming from default camera device.')
    cap = cv.VideoCapture(0)
    ...
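To make the attribute access concrete, here is a minimal, self-contained sketch that uses only the two flags shown above. The build_parser helper and the prog name are illustrative assumptions and are not part of TrafficCV; passing a list to parse_args simply simulates a command line.

```python
import argparse

def build_parser():
    # Hypothetical helper: recreates only the two flags shown in the article.
    parser = argparse.ArgumentParser(prog="tcv-sketch")
    parser.add_argument("--debug", help="Enable debug-level logging.",
                        action="store_true")
    parser.add_argument("--test", help="Test if OpenCV can read from the default camera device.",
                        action="store_true")
    return parser

# Simulate running the program with only --test on the command line.
args = build_parser().parse_args(["--test"])

# store_true flags default to False and become True when present.
print(args.test, args.debug)  # True False
```

Each option name becomes an attribute of the returned namespace with the leading dashes removed, which is why the CLI code can test args.test directly.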

The CLI also provides a way for the user to break out of any video loop by starting a separate thread that monitors keyboard input:

threading.Thread(target=kbinput.kb_capture_thread, args=(), name='kb_capture_thread', daemon=True).start()

The kb_capture_thread() function in the kbinput module simply waits for the user to press the Enter key and then sets the KBINPUT global flag to True:

KBINPUT = False

def kb_capture_thread():
    # Block until the user presses Enter, then signal the video loop to stop.
    global KBINPUT
    input()
    KBINPUT = True

In our main video processing loop, we test the value of the KBINPUT flag and break out of the loop if it is True.

The --model argument determines the computer vision or Deep Learning model to use. In order to use a model with TrafficCV, you must:

- Provide an implementation of the abstract Detector class

- Add an option handler to enable the model in the CLI

- Provide a way to download the model file and the required additional data
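One way an option handler could map the --model value to a detector is a simple lookup table. The sketch below is an assumption about how such dispatch might look, not the TrafficCV implementation: MODEL_HANDLERS, make_haar, create_detector, and the --model_dir option are hypothetical names, and the factory returns a tuple instead of a real Detector so that the example runs without OpenCV.

```python
import argparse

def make_haar(model_dir, video_source, args):
    # Hypothetical factory: a real handler would construct a Detector subclass here.
    return ("haar", model_dir, video_source)

# Hypothetical registry mapping --model values to factory callables.
MODEL_HANDLERS = {"haarcascade_kraten": make_haar}

def create_detector(argv):
    parser = argparse.ArgumentParser()
    # Restrict --model to the registered names so typos fail early.
    parser.add_argument("--model", choices=sorted(MODEL_HANDLERS), required=True)
    parser.add_argument("--video", default=0)
    parser.add_argument("--model_dir", default="models")  # assumed option name
    args = parser.parse_args(argv)
    return MODEL_HANDLERS[args.model](args.model_dir, args.video, args)

detector = create_detector(["--model", "haarcascade_kraten", "--video", "cars.mp4"])
print(detector)  # ('haar', 'models', 'cars.mp4')
```

With a table like this, enabling a new model would amount to adding one entry alongside its Detector implementation.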

The base Detector class runs the main video processing loop and performs the essential video processing and computer vision tasks. The class relies on Python Abstract Base Classes to define a Python class with abstract methods that must be implemented by the inheriting classes.

import abc
import cv2

class Detector(abc.ABC):
    def __init__(self, name, model_dir, video_source, args):
        self.name = name
        self.model_dir = model_dir
        self.video_source = video_source
        self.video = cv2.VideoCapture(self.video_source)
        self.video_end = False
        # For the default camera, request 1280x720 at 60 fps.
        if self.video_source == 0:
            self.video.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)
            self.video.set(cv2.CAP_PROP_FRAME_HEIGHT, 720)
            self.video.set(cv2.CAP_PROP_FPS, 60)
        # Read back the properties that the capture actually reports.
        self._height = int(self.video.get(cv2.CAP_PROP_FRAME_HEIGHT))
        self._width = int(self.video.get(cv2.CAP_PROP_FRAME_WIDTH))
        self._fps = int(self.video.get(cv2.CAP_PROP_FPS))
        info(f'Video resolution: {self._width}x{self._height} {self._fps}fps.')
        self.args = args

    @abc.abstractmethod
    def get_label_for_index(self, i):
        """Return the text label for a numeric class ID."""

    @abc.abstractmethod
    def detect_objects(self, frame):
        """Return the objects detected in a frame."""

    @abc.abstractmethod
    def print_model_info(self):
        """Print a description of the model."""

The constructor for the base class initializes capture from the video source and, if the source is the default camera (device 0), requests a resolution of 1280 × 720 at 60 fps. It then reads the frame size and frame rate back from the capture. There are three abstract methods: get_label_for_index, detect_objects, and print_model_info. The names are self-explanatory: one method returns the text label for a numeric class ID, one detects objects in a frame, and one prints a description of the model.
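The effect of the abstract methods can be seen in a stripped-down sketch that leaves out the video capture. BaseDetector and DummyDetector are hypothetical names used only for this illustration; the three method names match the ones described above.

```python
import abc

class BaseDetector(abc.ABC):
    """Stripped-down stand-in for the base class, without any video handling."""

    @abc.abstractmethod
    def get_label_for_index(self, i):
        """Return the text label for a numeric class ID."""

    @abc.abstractmethod
    def detect_objects(self, frame):
        """Return the objects detected in a frame."""

    @abc.abstractmethod
    def print_model_info(self):
        """Print a description of the model."""

class DummyDetector(BaseDetector):
    LABELS = {0: "car"}

    def get_label_for_index(self, i):
        return self.LABELS.get(i, "unknown")

    def detect_objects(self, frame):
        return []  # a real detector would run inference here

    def print_model_info(self):
        print("Dummy detector with one class.")

try:
    BaseDetector()  # abstract classes cannot be instantiated
except TypeError as exc:
    print(f"Refused: {exc}")

print(DummyDetector().get_label_for_index(0))  # car
```

Python raises the TypeError at instantiation time, so a subclass that forgets one of the three methods fails before any video is processed.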

The rest of the class implements the common functions that every detector needs, so inheriting classes only have to supply the three abstract methods. For example, the Haar cascade classifier is implemented using this base class:

class Detector(detector.Detector):
    def __init__(self, model_dir, video_source, args):
        super().__init__("Haar cascade classifier on CPU", model_dir, video_source, args)
        self.model_file = os.path.join(model_dir, 'haarcascade_kraten.xml')
        if not os.path.exists(self.model_file):
            error(f'{self.model_file} does not exist.')
            sys.exit(1)
        self.classifier = cv2.CascadeClassifier(self.model_file)
        # Frame dimensions that the detector expects.
        self.video_width = 1280
        self.video_height = 720

The constructor creates a CascadeClassifier instance using the file in our model directory. The video_width and video_height members are the dimensions of the frame the detector expects. The implementation of the detect_objects method looks like this:

def detect_objects(self, frame):
    # Resize to the dimensions the detector expects and convert to grayscale.
    image = cv2.cvtColor(cv2.resize(frame, (self.video_width, self.video_height)), cv2.COLOR_BGR2GRAY)
    cars = self.classifier.detectMultiScale(image, 1.1, 13, 18, (24, 24))
    # Factors that map detector coordinates back to the source video frame.
    scale_x, scale_y = (self._width / self.video_width), (self._height / self.video_height)
    def make(i):
        x, y, w, h = cars[i]
        return Object(
            id=0,
            score=None,
            bbox=BBox(xmin=x,
                      ymin=y,
                      xmax=x+w,
                      ymax=y+h).scale(scale_x, scale_y))
    return [make(i) for i in range(len(cars))]

The code is much shorter than what we had before: all we do is run inference on the provided frame and return the bounding box of each detected object. The only functional change is that we scale each bounding box back to the frame size of the original video, so that it is the correct size for our dlib object tracker. All other tasks, such as creating the object trackers, removing objects with a peak-to-sidelobe ratio (PSR) below seven, and overlaying information onto the frame, are handled by the base Detector class code.

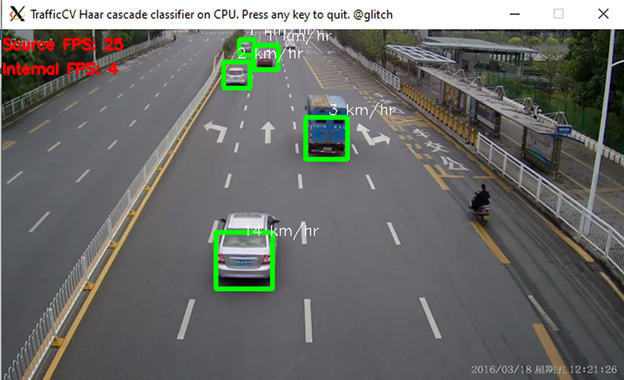
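The rescaling step can be illustrated with a small stand-alone sketch. The BBox class below is an assumed minimal version written for this example (the article does not show the real definition), and to_source_coords is a hypothetical helper. The sizes mirror a 640x360 source video and a detector that works on 1280x720 frames.

```python
from collections import namedtuple

class BBox(namedtuple("BBox", ["xmin", "ymin", "xmax", "ymax"])):
    """Assumed minimal bounding box: corner coordinates in pixels."""
    __slots__ = ()

    def scale(self, sx, sy):
        # Multiply x coordinates by sx and y coordinates by sy.
        return BBox(self.xmin * sx, self.ymin * sy,
                    self.xmax * sx, self.ymax * sy)

def to_source_coords(box, source_size, detector_size):
    """Map a box from detector-frame coordinates to source-frame coordinates."""
    src_w, src_h = source_size
    det_w, det_h = detector_size
    return box.scale(src_w / det_w, src_h / det_h)

box = to_source_coords(BBox(100, 50, 200, 150), (640, 360), (1280, 720))
print(box)  # BBox(xmin=50.0, ymin=25.0, xmax=100.0, ymax=75.0)
```

Because the source video here is smaller than the detector frame, both scale factors are 0.5 and the box shrinks; with a source larger than the detector frame the same code would enlarge it.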

Detector in Action

Once we activate our cv Python virtual environment on Linux or Windows, we can invoke our model from the TrafficCV CLI like this:

tcv EUREKA:0.0 --model haarcascade_kraten --video ../TrafficCV/demo_videos/cars_vertical.mp4

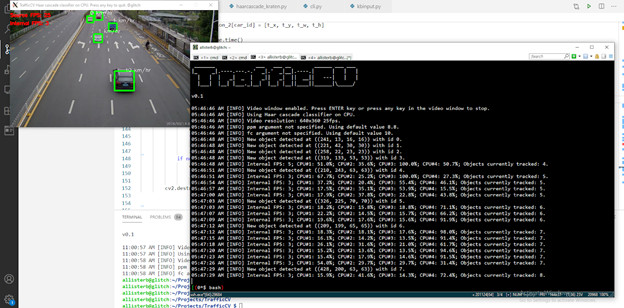

And we should see something like:

The CLI prints out information such as the current video processing FPS and logs each newly detected object, while the video window overlays this information on the original video.

The Detector class also keeps track of the internal FPS and CPU load:

(cv) allisterb@glitch:~/Projects/TrafficCV $ tcv EUREKA:0.0 --model haarcascade_kraten --video ../TrafficCV/demo_videos/cars_vertical.mp4

_______ ___ ___ __ ______ ___ ___

|_ _|.----.---.-.' _|.' _|__|.----.| | | |

| | | _| _ | _|| _| || __|| ---| | |

|___| |__| |___._|__| |__| |__||____||______|\_____/

v0.1

06:45:55 AM [INFO] Video window enabled. Press ENTER key or press any key in the video window to stop.

06:45:55 AM [INFO] Using Haar cascade classifier on CPU.

06:45:55 AM [INFO] Video resolution: 640x360 25fps.

06:45:56 AM [INFO] ppm argument not specified. Using default value 8.8.

06:45:56 AM [INFO] fc argument not specified. Using default value 10.

06:45:57 AM [INFO] New object detected at (241, 13, 16, 16) with id 0.

06:45:57 AM [INFO] New object detected at (221, 42, 30, 30) with id 1.

06:45:57 AM [INFO] New object detected at (258, 22, 23, 23) with id 2.

06:45:57 AM [INFO] New object detected at (319, 133, 53, 53) with id 3.

06:45:57 AM [INFO] Internal FPS: 5; CPU#1: 31.0%; CPU#2: 23.2%; CPU#3: 63.7%; CPU#4: 24.2%; Objects currently tracked: 4.

06:46:00 AM [INFO] New object detected at (210, 243, 63, 63) with id 4.

06:46:00 AM [INFO] Internal FPS: 4; CPU#1: 23.7%; CPU#2: 16.0%; CPU#3: 66.3%; CPU#4: 14.6%; Objects currently tracked: 5.

06:46:03 AM [INFO] Internal FPS: 3; CPU#1: 21.5%; CPU#2: 14.7%; CPU#3: 70.3%; CPU#4: 14.6%; Objects currently tracked: 5.

06:46:06 AM [INFO] Internal FPS: 3; CPU#1: 23.0%; CPU#2: 15.2%; CPU#3: 72.1%; CPU#4: 13.9%; Objects currently tracked: 5.

06:46:09 AM [INFO] Internal FPS: 3; CPU#1: 18.4%; CPU#2: 18.3%; CPU#3: 69.7%; CPU#4: 13.4%; Objects currently tracked: 5.

06:46:12 AM [INFO] New object detected at (326, 225, 70, 70) with id 5.

06:46:12 AM [INFO] Internal FPS: 3; CPU#1: 20.8%; CPU#2: 17.0%; CPU#3: 70.1%; CPU#4: 14.1%; Objects currently tracked: 6.

06:46:16 AM [INFO] Internal FPS: 3; CPU#1: 26.9%; CPU#2: 15.7%; CPU#3: 65.1%; CPU#4: 13.9%; Objects currently tracked: 6.
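An internal FPS figure like the one in this log could be computed by counting frames over a reporting interval. The sketch below is one possible approach under assumptions, not the TrafficCV code: FpsCounter and its method names are hypothetical, and the clock is injectable only so that the arithmetic can be checked without waiting.

```python
import time

class FpsCounter:
    """Counts processed frames and reports the average rate per interval."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock   # injectable so the counter can be tested
        self._start = clock()
        self._frames = 0

    def tick(self):
        """Call once for every processed frame."""
        self._frames += 1

    def read_and_reset(self):
        """Return frames per second since the last read and start a new interval."""
        now = self._clock()
        elapsed = now - self._start
        fps = self._frames / elapsed if elapsed > 0 else 0.0
        self._start = now
        self._frames = 0
        return fps

# Simulated clock: 10 frames are processed during a 2-second interval.
fake_times = iter([0.0, 2.0])
counter = FpsCounter(clock=lambda: next(fake_times))
for _ in range(10):
    counter.tick()
print(counter.read_and_reset())  # 5.0
```

In a real loop, tick would be called after each frame and read_and_reset whenever a statistics line is printed.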

In the previous article, we noted that, when using a remote X display on the Pi, CPU usage was not very high while the FPS remained low. We speculated that the time needed to send each X frame over the network was blocking the video processing. We can use the CLI --nowindow parameter to tell the TrafficCV program not to display the video window at all during video processing:

(cv) allisterb@glitch:~/Projects/TrafficCV $ tcv EUREKA:0.0 --model haarcascade_kraten --video ../TrafficCV/demo_videos/cars_vertical.mp4 --nowindow

_______ ___ ___ __ ______ ___ ___

|_ _|.----.---.-.' _|.' _|__|.----.| | | |

| | | _| _ | _|| _| || __|| ---| | |

|___| |__| |___._|__| |__| |__||____||______|\_____/

v0.1

06:42:50 AM [INFO] Video window disabled. Press ENTER key to stop.

06:42:50 AM [INFO] Using Haar cascade classifier on CPU.

06:42:50 AM [INFO] Video resolution: 640x360 25fps.

06:42:50 AM [INFO] ppm argument not specified. Using default value 8.8.

06:42:50 AM [INFO] fc argument not specified. Using default value 10.

06:42:51 AM [INFO] New object detected at (241, 13, 16, 16) with id 0.

06:42:51 AM [INFO] New object detected at (221, 42, 30, 30) with id 1.

06:42:51 AM [INFO] New object detected at (258, 22, 23, 23) with id 2.

06:42:51 AM [INFO] New object detected at (319, 133, 53, 53) with id 3.

06:42:51 AM [INFO] Internal FPS: 10; CPU#1: 43.3%; CPU#2: 45.4%; CPU#3: 43.3%; CPU#4: 99.0%; Objects currently tracked: 4.

06:42:53 AM [INFO] New object detected at (210, 243, 63, 63) with id 4.

06:42:53 AM [INFO] Internal FPS: 7; CPU#1: 22.8%; CPU#2: 24.2%; CPU#3: 23.8%; CPU#4: 100.0%; Objects currently tracked: 5.

06:42:55 AM [INFO] Internal FPS: 6; CPU#1: 20.4%; CPU#2: 21.5%; CPU#3: 19.5%; CPU#4: 100.0%; Objects currently tracked: 5.

06:42:57 AM [INFO] Internal FPS: 5; CPU#1: 19.9%; CPU#2: 22.2%; CPU#3: 20.3%; CPU#4: 100.0%; Objects currently tracked: 5.

06:42:59 AM [INFO] Internal FPS: 5; CPU#1: 19.1%; CPU#2: 20.5%; CPU#3: 20.9%; CPU#4: 100.0%; Objects currently tracked: 5.

06:43:01 AM [INFO] New object detected at (326, 225, 70, 70) with id 5.

06:43:02 AM [INFO] Internal FPS: 5; CPU#1: 20.7%; CPU#2: 22.2%; CPU#3: 20.3%; CPU#4: 100.0%; Objects currently tracked: 6.

06:43:04 AM [INFO] Internal FPS: 5; CPU#1: 18.1%; CPU#2: 18.6%; CPU#3: 17.2%; CPU#4: 100.0%; Objects currently tracked: 6.

06:43:06 AM [INFO] Internal FPS: 5; CPU#1: 18.0%; CPU#2: 20.7%; CPU#3: 19.0%; CPU#4: 100.0%; Objects currently tracked: 6.

06:43:09 AM [INFO] New object detected at (209, 199, 65, 65) with id 6.

06:43:09 AM [INFO] Internal FPS: 4; CPU#1: 19.1%; CPU#2: 20.8%; CPU#3: 18.4%; CPU#4: 100.0%; Objects currently tracked: 7.

06:43:11 AM [INFO] Internal FPS: 4; CPU#1: 18.5%; CPU#2: 19.9%; CPU#3: 17.9%; CPU#4: 100.0%; Objects currently tracked: 7.

Here we see that one CPU core is pegged at 100% while the FPS is roughly 1.7 to 2 times higher than before: the first reading is 10 FPS instead of 5, and when tracking six objects we get 5 FPS on the Pi with the video window disabled, compared to only 3 FPS with it enabled.

The base Detector class handles all of the tracking, measuring, and printing out of statistics and informational messages. Classes implementing the Detector interface only need to specify how objects are detected in a particular video frame – and the TrafficCV CLI and library functions handle the rest of the tasks. This lets us quickly implement the various models and options and compare results. With a base library in place, we can look at the different ways of measuring vehicle speed and the different Deep Learning models we can use.

Next Step

In the next article, we’ll explore the different ways of measuring vehicle speed and the different Deep Learning models for object detection that can be used in our TrafficCV program. Stay tuned!