A walkthrough of converting a code from parallel C++ ray-tracing code to CUDA, and the work needed to make that CUDA code run on CPU using parallel for_each() and then converted the code to SYCL 2020 via Intel® DPC++. This article is an entry competing in The Great Cross-Architecture Challenge.

Table of Contents

Introduction

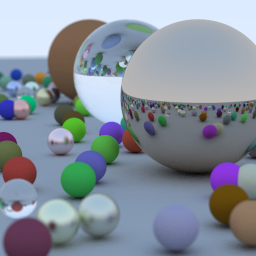

In this article, we will port Raytracing in One Weekend, the already converted parallel code to CUDA, make CUDA run on CPU and then port again to SYCL 2020 via Intel® Data-Parallel C++ (DPC++) toolkit. DPC++ is ISO C++ plus Khronos SYCL with community extensions eventually making their way into the final standard. As time of article writing, the SYCL 2020 specification has been released for public review as a provisional specification for developers to provide their valuable feedback before the final version is published and ratified.

Here is a list of improvements made to the Raytracing in One Weekend from serial code to multi-threaded using C++17 parallel for_each algorithm.

- Convert all raw memory allocation to

shared_ptr to prevent memory leaks. - Replace non-reentrant

rand() with each thread having its copy of the C++11 random number generator using thread_local to avoid sharing and locking. - Replace the plain text PPM image format with Portable Network Graphics (PNG) format using single-header stb_image_write.h library.

- Parallelize with C++17 parallel

for_each, achieving a more than 5X performance on my 6 core CPU.

This article is divided into 2 main sections where the first discusses a pure C++ to CUDA conversion while the second covers the work required for a CUDA to SYCL conversion. For SYCL code, Intel's Data-Parallel C++ compiler is used to compile the code. In the article, host and device refer to CPU and device other than CPU, such as GPU or FPGA. In CUDA case, the device refers exclusively to GPU since CUDA can only run on GPU, specifically Nvidia's. SYCL code can be selected to execute on CPU, GPU or FPGA with its selector. Let's begin our journey of exploring heterogeneous programming with CUDA and SYCL.

Converting to CUDA

In CUDA and SYCL, data structures in buffers must be C++ trivially copyable, which means that an object can be safely copied byte by byte where copy constructors do not need to be invoked. This requirement rules out the use of polymorphism. In the original Raytracing in One Weekend code exists a pure virtual material class and 3 derived material classes of different sizes. We will combine them into 1 non-virtual class to have uniform size to make the array of material easy to allocate on CUDA side. Another reason is because the object of a virtual class has an invisible vptr member. Simply put, vptr is a pointer to vtbl be called in a polymorphic setting. Since vptr and vtbl are not documented and are implemented non-transparently and most likely-than-not differently. It is best not to rely on our CUDA raytracer's virtual functions, since the material objects are instantiated on the host and then passed to the device side.

class material {

public:

virtual bool scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered) const = 0;

};

class lambertian : public material {

public:

lambertian(const vec3& a);

virtual bool scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered) const;

vec3 albedo;

};

class metal : public material {

public:

metal(const vec3& a, float f);

virtual bool scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered) const;

vec3 albedo;

float fuzz;

};

class dielectric : public material {

public:

dielectric(float ri);

virtual bool scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered) const;

float ref_idx;

};

This is our combined material class. We have a material_type member to keep track of what type of material the object is to which scatter() to call. scatter() delegates its work to one of the xxx_scatter() functions. __device__ is a CUDA directive that indicates the function is callable on the CUDA device. On the other hand, __host__ indicates the function is callable on the CPU host. __device__ and __host__ are not mutually exclusive: in other words, a function can be both host and device callable.

enum class material_type

{

lambertian,

metal,

dielectric

};

class material {

public:

material() = default;

material(material_type mtype, const vec3& a, float f, float ri);

__device__ bool scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered, const RandAccessor& rand) const

{

switch (mat_type)

{

case material_type::lambertian:

return lambertian_scatter(r_in, rec, attenuation, scattered, rand);

case material_type::metal:

return metal_scatter(r_in, rec, attenuation, scattered, rand);

case material_type::dielectric:

return dielectric_scatter(r_in, rec, attenuation, scattered, rand);

}

return lambertian_scatter(r_in, rec, attenuation, scattered, rand);

}

__device__ bool lambertian_scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered, const RandAccessor& rand) const;

__device__ bool metal_scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered, const RandAccessor& rand) const;

__device__ bool dielectric_scatter(const ray& r_in, const hit_record& rec,

vec3& attenuation, ray& scattered, const RandAccessor& rand) const;

material_type mat_type;

vec3 albedo;

float fuzz;

float ref_idx;

};

The random numbers are generated beforehand using the PreGenerated class and a RandAccessor class to access them on the device side.

class PreGenerated

{

public:

PreGenerated(size_t size)

{

std::random_device rd;

std::mt19937 gen(rd());

std::uniform_real_distribution<> dis(0.0, 1.0);

vec.resize(size);

for (size_t i = 0; i < size; ++i)

vec[i] = dis(gen);

}

const std::vector<float>& GetVector() const

{

return vec;

}

private:

std::vector<float> vec;

};

class RandAccessor

{

public:

__device__ RandAccessor(size_t offset_,

const float* arr_, size_t size_)

: offset(offset_)

, arr(arr_)

, size(size_)

, index(0)

{}

__device__ float Get() const

{

size_t i = (offset + index) % size;

++index;

return arr[i];

}

private:

size_t offset;

const float* arr;

size_t size;

mutable size_t index;

};

Next, we'll try to compile the code. The pseudo arguments to the CUDA compiler, nvcc.exe, are as simple as follows. CUDA source file has to be in *.cu file extension.

nvcc -o executable source.cu

On Windows, this is not enough. I have to add an argument, -ccbin with a folder path of Microsoft Visual C++'s compiler, cl.exe. Note: Your path might differ from mine, so modify it accordingly.

nvcc -o CUDARayTracer.exe CUDARayTracer.cu -ccbin

"C:\Program Files

(x86)\Microsoft Visual Studio\2019\Community\VC\Tools\MSVC\14.28.29333\bin\Hostx64\x64"

There is a warning that the stack size of raytrace() cannot be statically determined. I do not know exactly what it means, so I ignored it, after all, it is a warning, not an error. But later, I found out that this warning foreshadowed my converted CUDA code is doomed to failure.

ptxas warning : Stack size for entry function '_Z8raytracePfPyP6sphereS0_PjS0_P6cameraPiS6_S6_'

cannot be statically determined

After running the CUDA code, the cudaDeviceSynchronize() returns error number 700 which refers to cudaErrorIllegalAddress described in this page of CUDA errors as The device encountered a load or store instruction on an invalid memory address. This leaves the process in an inconsistent state and any further CUDA work will return the same error. To continue using CUDA, the process must be terminated and relaunched. In short, my CUDA program crashed and I do not know the cause.

By some sheer determination of logic and guesswork, I correctly deduced the root cause to be recursive color() because its recursive levels can be as high as 50, resulting in a stack overflow. Unlike default 1MB stack size in every thread on a CPU process, CUDA kernel running on a CUDA thread has a limited amount of stack size, hence the ptxas warning. My CUDA version 11.1 supports recursion but at the time of writing this article, DPC++ does not support recursive function calls on device, so I decided to convert color() to an iterative version named color_loop().

__device__ vec3 color(const ray& r, sphere* world, size_t world_size,

int depth, const RandAccessor& rand)

{

hit_record rec;

if (world_hit(world, world_size, r, 0.001, FLT_MAX, rec)) {

ray scattered;

vec3 attenuation;

if (depth < 50 && rec.mat->scatter(r, rec, attenuation, scattered, rand)) {

return attenuation * color(scattered, world, world_size, depth + 1, rand);

}

else {

return vec3(0, 0, 0);

}

}

else {

vec3 unit_direction = unit_vector(r.direction());

float t = 0.5 * (unit_direction.y() + 1.0);

return (1.0 - t) * vec3(1.0, 1.0, 1.0) + t * vec3(0.5, 0.7, 1.0);

}

}

By converting color() to the iterative version, color_loop() below, I managed to get rid of the warning and the program runs smoothly to completion.

__device__ vec3 color_loop(const ray& r, sphere* world,

size_t world_size, const RandAccessor& rand)

{

vec3 attenuation_result(1.0,1.0,1.0);

bool assigned = false;

ray temp = r;

for(int i=0; i<50; ++i)

{

hit_record rec;

if (world_hit(world, world_size, temp, 0.001, FLT_MAX, rec)) {

ray scattered;

vec3 attenuation;

if (rec.mat->scatter(temp, rec, attenuation, scattered, rand)) {

temp = scattered;

if(assigned == false)

{

attenuation_result = attenuation;

assigned = true;

}

else

attenuation_result *= attenuation;

}

else

{

attenuation_result = vec3(0, 0, 0);

break;

}

}

else {

vec3 unit_direction = unit_vector(temp.direction());

float t = 0.5 * (unit_direction.y() + 1.0);

attenuation_result *=

(1.0 - t) * vec3(1.0, 1.0, 1.0) + t * vec3(0.5, 0.7, 1.0);

break;

}

}

return attenuation_result;

}

This is raytrace function. The __global__ directive indicates to CUDA compiler that this function is invoked from the host. It delegates the grunt work to raytrace_pixel().

#ifndef NO_CUDA

__global__ void raytrace(float* dev_arr, size_t* dev_arr_size, sphere* dev_sphere,

size_t* dev_sphere_size, unsigned int* dev_pixelsSrc, size_t* dev_pixelsSrc_size,

camera* dev_camera, int* nx, int* ny, int* ns)

{

int x = blockIdx.x * blockDim.x + threadIdx.x;

int y = blockIdx.y * blockDim.y + threadIdx.y;

if (x >= *nx || y >= *ny)

{

return;

}

int thread_index = y * (*nx) + x;

if (thread_index >= *dev_pixelsSrc_size)

{

return;

}

unsigned int& pixel = dev_pixelsSrc[thread_index];

raytrace_pixel(&pixel, dev_arr, dev_arr_size, dev_sphere,

dev_sphere_size, dev_camera, nx, ny, ns);

}

#endif

The definition of raytrace_pixel function.

__device__ void raytrace_pixel(unsigned int* dev_pixel, float* dev_arr,

size_t* dev_arr_size, sphere* dev_sphere, size_t* dev_sphere_size,

camera* dev_camera, int* nx, int* ny, int* ns)

{

int j = (*dev_pixel) & 0xffff;

int i = ((*dev_pixel) & 0xffff0000) >> 16;

RandAccessor rand(i+j*(*nx), dev_arr, *dev_arr_size);

vec3 col(0, 0, 0);

for (int s = 0; s < *ns; s++) {

float u = float(i + rand.Get()) / float(*nx);

float v = float(j + rand.Get()) / float(*ny);

ray r = dev_camera->get_ray(u, v, rand);

col += color_loop(r, dev_sphere, *dev_sphere_size, rand);

}

col /= float(*ns);

col = vec3(sqrt(col[0]), sqrt(col[1]), sqrt(col[2]));

int ir = int(255.99 * col[0]);

int ig = int(255.99 * col[1]);

int ib = int(255.99 * col[2]);

*dev_pixel = (0xff000000 | (ib << 16) | (ig << 8) | ir);

}

Making CUDA Code Run on CPU with C++17 Parallel for_each

CPU code that extensively accesses network and is harddisk bound, cannot be converted to GPU code. Ideally, the CPU code should be compute-bound to maximize the success of conversion. So not every CPU software can run on GPU. It is no secret that the reverse is not necessarily true: GPU code can be run on CPU, provided that the source code is available. Unfortunately, Nvidia does not open source any of its CUDA libraries. Even if they are, they are written not in CUDA but Streaming ASSembly (SASS) which is of little use to our conversion effort. This is why random number generation does not depend on Nvidia's library but is pregenerated on the CPU side, to pave the way to having the one codebase to maintain for both CUDA and CPU. The pure C++ version calls the raytrace_pixel in a lambda in-turn called by C++17 parallel for_each.

std::for_each(std::execution::par, pixelsSrc.begin(), pixelsSrc.end(),

[&](unsigned int& pixel)

{

size_t dev_arr_size = vec.size();

size_t dev_sphere_size = world.size();

raytrace_pixel(&pixel, vec.data(), &dev_arr_size, world.data(),

&dev_sphere_size, &cam, &nx, &ny, &ns);

});

In pure C++, the code must be inside *.cpp, so we create a TestRayTracer.cpp and add in these 6 lines below. All the CUDA related code are guarded by the non-presence of NO_CUDA. Defining NO_CUDA is going to exclude all CUDA code and header. __global__, __host__ and __device__ are purely CUDA directives which can be defined to nothing in pure C++ code. Then CUDARayTracer.cu is included. The work for a pure C++ version is completed. Below is all the code, RayTracing.cpp contains.

#pragma once

#define NO_CUDA

#define __global__

#define __host__

#define __device__

#include "CUDARayTracer.cu"

For benchmarking purposes, the pure C++ CPU version takes 90 seconds on a 6 cores 12 threads Intel® i7 8700 processor and the CUDA version extracted 18X performance on a Geforce 1060. In the next section, we'll take a plunge to convert the CUDA code to SYCL 2020 via Intel® DPC++ and then upload the source code to Intel® DevCloud for compilation and execution.

Converting to SYCL 2020 via DPC++

This section shall try to convert the CUDA code to SYCL 2020 using Data-Parallel C++ (DPC++). DPC++ is Intel's implementation of the SYCL 2020 specification defined by SYCL Working Group within Khronos. An excellent DPC++ ebook is made freely available on the Springer publisher site. I strongly encourage anyone interested in SYCL 2020 to download and study it. The SYCL ray-tracing code is the same as CUDA's except with the CUDA directives such as __host__ and __device__ removed and the CUDA function calls replaced by SYCL equivalents.

The book's code examples make extensive use of C++17's Class Template Argument Deduction (CTAD) to instantiate a template, not every template argument has to be specified. Pre-C++17, vector type has to be specified even though it can be deduced from the argument type in the initializer_list.

std::vector<int> vec{1, 2, 3, 4};

CTAD relaxed this requirement that allows the template type to be omitted.

std::vector vec{1, 2, 3, 4};

However, the SYCL code listing in this section does not use CTAD for data buffers to make it clear to the C++ compiler of the instantiating type. This is just my preference. A simple SYCL code is used to try out and get familiar with the SYCL syntax/compiler before getting into the code conversion process. Read the code comments for more explanation. The code does no useful computation, other than assigning each element of A array to B array.

#include <CL/sycl.hpp>

#include <array>

#include <iostream>

#include <exception>

using namespace sycl;

constexpr int N = 42;

int main(){

std::array<int, N> a, b;

for(int i=0; i< N; ++i) {

a[i] = i;

b[i] = 0;

}

try {

queue Q(host_selector{});

buffer<int, 1> A{a.data(), range<1>(a.size())};

buffer<int, 1> B{b.data(), range<1>(b.size())};

Q.submit([&](handler& h) {

auto accA = A.template get_access<access::mode::read>(h);

auto accB = B.template get_access<access::mode::write>(h);

h.parallel_for<class nstream>(

range<1>{N},

[=](id<1> i) { accB[i] = accA[i]; });

});

Q.wait();

B.get_access<access::mode::read>();

for(int i=0; i< N; ++i) {

std::cout << b[i] << " ";

}

}

catch(sycl::exception& ex)

{

std::cerr << "SYCL Exception thrown: "

<< ex.what() << std::endl;

}

catch(std::exception& ex)

{

std::cerr << "std Exception thrown: "

<< ex.what() << std::endl;

}

std::cout << "\nDone!\n";

return 0;

}

The sycl::queue constructor takes in a selector that indicates the computing device. A list of available selector to choose from.

sycl::queue q(sycl::default_selector{});

sycl::queue q(sycl::host_selector{});

sycl::queue q(sycl::cpu_selector{});

sycl::queue q(sycl::gpu_selector{});

sycl::queue q(sycl::accelerator_selector{});

In the SYCL code below, buffers are created to reference the host data to facilitate the copying to the device later on. The body of raytrace_pixel() is put inside the h.parallel_for lambda. Q.wait() is then called to wait for the work completed. Afterwards, dev_pixelsSrc.get_access() is called to synchronize the pixel data from the device to the host buffer.

try {

using namespace sycl;

queue Q(host_selector{});

buffer<float, 1> dev_arr{ vec.data(), range<1>(vec.size()) };

buffer<sphere, 1> dev_sphere{ world.data(), range<1>(world.size()) };

buffer<unsigned int, 1> dev_pixelsSrc{ pixelsSrc.data(), range<1>(pixelsSrc.size()) };

buffer<camera, 1> dev_camera{ &cam, range<1>(1) };

Q.submit([&](handler& h) {

auto acc_dev_arr = dev_arr.template get_access<access::mode::read>(h);

auto acc_dev_sphere = dev_sphere.template get_access<access::mode::read>(h);

auto acc_dev_pixelsSrc =

dev_pixelsSrc.template get_access<access::mode::read_write>(h);

auto acc_dev_camera = dev_camera.template get_access<access::mode::read>(h);

h.parallel_for<class nstream>(range<1>{pixelsSrc.size()}, [=](id<1> index) {

unsigned int& pixel = acc_dev_pixelsSrc[index];

unsigned int* dev_pixel = &pixel;

int j = (*dev_pixel) & 0xffff;

int i = ((*dev_pixel) & 0xffff0000) >> 16;

RandAccessor rand(i + j * nx, acc_dev_arr.get_pointer(), arr_size);

vec3 col(0, 0, 0);

for (int s = 0; s < ns; s++) {

float u = float(i + rand.Get()) / float(nx);

float v = float(j + rand.Get()) / float(ny);

ray r = acc_dev_camera.get_pointer()->get_ray(u, v, rand);

col += color_loop(r, acc_dev_sphere.get_pointer(), sphere_size, rand);

}

col /= float(ns);

col = vec3(sqrt(col[0]), sqrt(col[1]), sqrt(col[2]));

int ir = int(255.99 * col[0]);

int ig = int(255.99 * col[1]);

int ib = int(255.99 * col[2]);

acc_dev_pixelsSrc[index] = (0xff000000 | (ib << 16) | (ig << 8) | ir);

});

});

Q.wait();

dev_pixelsSrc.get_access<access::mode::read>();

}

catch (sycl::exception& ex)

{

std::cerr << "SYCL Exception thrown: "

<< ex.what() << std::endl;

}

catch (std::exception& ex)

{

std::cerr << "std Exception thrown: "

<< ex.what() << std::endl;

}

The command for compiling the SYCL with the DPC++ compiler is shown below. You can either download the Intel® oneAPI tookit or register an account on Intel® DevCloud to build the SYCL program. Intel® DevCloud is the recommended way to develop, test, and run your workloads for free on the latest Intel® hardware and software.

dpcpp -O2 -g -std=c++17 SYCLTracer.cpp

After logging in the Intel® DevCloud, create a job script named myjob with this contents. PBS_O_WORKDIR environment variable holds the path where the work is done. a.out is the name of executable output by the DPC++ compiler because we did not give it a name and the default name is a.out. Add -o switch followed by your desired executable name in your command above if you wish to change it.

cd $PBS_O_WORKDIR

./a.out

Submit your job for CPU below.

qsub myjob

Submit your job for GPU below.

qsub -l nodes=1:gpu:ppn=2 -d . myjob

Important note: To make your queue to run on GPU, remember to amend this line:

queue Q(host_selector{}); // for running on cpu without OpenCL installed

to this line:

queue Q(gpu_selector{}); // for running on gpu

And compile again with dpcpp.

To get the progress of your work, run qstat command. It returns empty to signify the work completion.

qstat

The output is stored in myjob.oXXXXX and similarly, the error, if any, is recorded in myjob.eXXXXX where XXXXX is a numeric code for the job number. Note: Your output and error filename might not begin with myjob if your job script is not named myjob. In the output, the ray-tracing timing is recorded. This is the CPU timing for 24 cores Xeon processor.

ray_tracer timing:23017ms

This is the GPU timing. It shaves 2 seconds off the CPU timing. The GPU specs are unknown.

ray_tracer timing:21559ms

This is the command to copy the raytraced image from Intel® DevCloud to your local folder. uXXXXX is your Intel® DevCloud user ID, so replace it with yours. The scp command securely copies the ray_trace.png from my DevCloud home folder to my D: drive.

scp devcloud:/home/uXXXXX/ray_trace.png /cygdrive/d/

Conclusion

The article is divided into two main sections. The first section covers the code conversion to Nvidia CUDA. For CUDA and CPU to share the same codebase, some macros are needed to define CUDA directives to empty. To achieve parallelism in the CPU version, C++17 parallel for_each is used. In the second section, it covers the SYCL 2020 conversion, DPC++ compilation and Intel® DevCloud execution. Making SYCL code run on either CPU or GPU, only involves a single line change on the queue instantiation. As mentioned before, DPC++ is Intel's implementation of SYCL 2020. For Nvidia and AMD, there is hipSYCL though not officially supported by both companies. SYCL 2020 is open standard, cross-architecture (CPU/GPU/FPGA) and multi-vendor (Intel/AMD/Nvidia) whereas CUDA is tied exclusively to the Nvidia ecosystem. Whenever the GPU is not supported on SYCL, an option of CPU fallback is always available. In conclusion, SYCL 2020 is currently the best available choice to target/support a wider customer base having different hardware.

Code Repositories

References

History

- 17th January, 2021: First release