oneMD is a new molecular dynamics simulator designed to take advantage of SIMD and GPU and FPGA hardware data parallelism and acceleration using Intel's oneAPI development framework and the DPC++ toolchain which implements the SYCL specification. The simulator is designed to be both cross-platform and cross-arch and can also be built with ordinary C++ toolchains without SYCL like MSVC. This article will be my development journal for the project documenting my journey learning modern C++ and SYCL and using Intel's DevCloud environment for testing oneMD on CPU, GPU, and FPGA hardware.

Introduction

Simulating matter at the atomic and molecular scale is one of the most fascinating applications of HPC and supercomputing and is an essential part of the discovery process in numerous diverse areas of scientific research, from materials science to bio-chemistry to drug discovery. The discovery and development of Newtonian mechanics spurred interest in the time-evolution of N-body Newtonian systems as early as the 18th century, and several numerical methods and techniques used in MD predate the development of high-speed numerical computing and simulation using digital computers. The development of the theory of simularing hundreds of interacting classical particles using numerical methods suitable for implentation by an electronic computer began in the late 1950's, and was preceded by Monte Carlo simulations which were developed shortly after WWII to study nuclear weapons and were the first set of calculations run as a stored program on the ENIAC I computer.

oneMD is a project started as my entry into The Great Cross-Architecture Challenge here on CodeProject. It's a molecular dynamics simulator similar in principle to OpenMM and GROMACS but coded in C++17 using Intel's oneAPI DPC++/C++ compiler which implements the SYCL standard from Khronos Group. oneAPI allows you to target the SIMD capabilities of CPUs as well as GPU and FPGA acceleration for accelerated parallel data processing using a single set of C++ abstractions.

Typically using OpenCL or similar parallel computing frameworks like CUDA in C++ means that you write your kernels in separate source files that have the extension .cl or .cu. (e.g., OpenMM's OpenCL platform) using a variant of C or C++ like OpenCL C or CUDA C++. Each parallel computing framework requires its own language and concepts and each hardware acceleration device has its own architecture that the programmer needs to learn to program effectively.

With DPC++ and SYCL, you use a single source file and only C++ 17 code and encapsulate your SYCL kernel as regular C++ functions or C++ lambdas. You can use the same set of C++ types and abstractions to write generic data-parallel kernels that will run on any hardware acceleration device. You also can write kernels that are specialized for different devices from general purpose CPUs with SIMD vector units to GPGPUs to FPGAs with programmable logic units.

While the single-source model reduces the complexity of writing kernels for different devices significantly, writing DPC++ kernels in C++ is a lot different than general-purpose programming. There is a strict separation between host and device code. and device code uses a constrained device memory model where all device memory buffers or allocations must be declared before use and you must explicitly capture or share any data from regular C++ containers like std:vector and std:array using SYCL buffers.

Given these differences, plus my own desire to start from scratch a project using modern C++ and CMake, it makes sense to attempt a new simulator codebase written in C++17 targetting the DPC++ compiler toolchain and tested on Intel's free DevCloud cloud computing environment with access to multiple hardware accelerator devices. oneMD can also be built for non-SYCL C++ compilers like GCC and MSVC using traditional paralllel computing libraries like OpenMP which allows us to have a performance baseline for data-parallel operations and kernels.

Background

A molecular dynamics simulator is a computer program which simulates how a set of atoms or molecules interact with each other in a specific region using an assumption that the equations of classical mechanics are sufficient to model the particle interactions accurately.

In a molecular dynamics simulation, we have a system of N atoms (or molecules) where each atom follows Newton's law relating the force on an individual atom to its mass and acceleration F=ma. The simulation calculates the momentum and position of each atom at a microscopic level and then converts this information to macroscopic properties like pressure, energy, etc. using statistical mechanics. The thermodynamic or macroscopic state of the system is calculated by statistical averages over the microscopic state of individual atoms defined by their position and momentum.

The basic algorithm for a oneMD simulation of a system of N particles in a cubic box region is:

-

Establish a set of initial conditions by using a random distribution to assign svelocity and position to each atom.

-

For each atom, keep track of neighboring atoms in a neighbor list. Atoms that are initially too far away to interact (according to the model) should be removed from the neighbor list.

-

Iterate through a fixed set of time steps and perform the following calculations ay each step:

-

For each atom, iterate through the neighbor list and calculate the distance between the selected atom and each of its neighbors along with the interaction potential energy using an equation like Lennard-Jones. The force on each atom due to a neighboring atom is found by using the differential of the LJ equation wrt. distance.

-

Use the virial theorem to find the instantaneous kinetic energy of the entire system of atoms as a function of the force due to each neighboring particle and the distance between them.

-

Calculate the instantaneous pressure using the kinetic energy, volume, temperature and Boltzmann's constant.

-

Calculate the next position and velocity of each atom in the next timestep using the Verlet integration method.

Development Environment

Our development environment will be Windows 10 and Visual Studio Code with the C++ extension, CMake extension and the Remote SSH extension connecting to the remote DevCloud Ubuntu environment. This setup lets us have all the conveniences of a C++ IDE while building and testing code on DevCloud.

SSH

Once you signup for DevCloud, you'll be able to access instructions for setting up SSH acccess to your remote environment. The official Intel instructions cover setting up SSH access for Linux or MacOS or a Cygwin environment on Windows but it is possible to use the Windows 10 native SSH client too. First, copy the key file from the Intel instructions page to your Windows account's .ssh folder at %USERPROFILE%\.ssh. Then add the following section to %USERPROFILE%\.ssh\config:

################################################################################################

# oneAPI DevCloud SSH config

################################################################################################

Host devcloud

User <user>

IdentityFile <path to key file>

ProxyCommand C:\Windows\System32\OpenSSH\ssh.exe -T -i <path to key file> guest@devcloud.intel.com

################################################################################################

# SSH Tunnel config

################################################################################################

Host *.aidevcloud

User <user>

IdentityFile <path to key file>

ProxyCommand C:\Windows\System32\OpenSSH\ssh.exe -T devcloud nc %h %p

LocalForward 5022 localhost:22

LocalForward 5901 localhost:5901

################################################################################################

################################################################################################

# DevCloud VSCode config

################################################################################################

Host devcloud-vscode

UserKnownHostsFile /dev/null

StrictHostKeyChecking no

Hostname localhost

User <user>

Port 5022

IdentityFile <path to key file>

################################################################################################

Replace <user> and <path to key file> with your DevCloud user id (uxxx...) and path to your DevCloud key file respectively. Note that in the above config snippet, there is one difference from the official instructions from Intel for Unix environments, where we use the full path to the Windows SSH executable in the ProxyCommand config field.

DevCloud

Once you get a shell on DevCloud, you'll need to use commands like qsub and qstat to setup environments that you can either develop on or run your programs on Intel's hardware like Xeon CPUs and Iris Xe GPUs. These queue commands are inherited from old-school cluster management software where jobs are submitted to be batch processed in job queues so it's easy to find docs and examples for the commands. The official Intel reference doc for submitting and managing DevCloud jobs is here. The following are some essential commands I use for developing a DPC++ project on DevCloud.

For developing, we need to create an interactive job which will allocate memory and CPU resources during the time we are logged in to devcloud. To create an interactive job that runs for 24hrs, use the qsub command with the -I (capital I) option to specify it is an iteractive option the -l (common l) option to set parameters like walltime:

uxxxxx@login-2:~$ qsub -I -l walltime=1:0:0:0

qsub: waiting for job 809147.v-qsvr-1.aidevcloud to start

qsub: job 809147.v-qsvr-1.aidevcloud ready

########################################################################

# Date: Tue Mar 9 11:26:21 PST 2021

# Job ID: 809147.v-qsvr-1.aidevcloud

# User: u61437

# Resources: neednodes=1:batch:ppn=2,nodes=1:batch:ppn=2,walltime=24:00:00

########################################################################

uxxxxx@s001-n045:~$

To see the jobs running, use the qstat command.

uxxxxx@s001-n045:~/oneMD$ qstat

Job ID Name User Time Use S Queue

------------------------- ---------------- --------------- -------- - -----

809147.v-qsvr-1 STDIN u61437 00:00:04 R batch

To kill running jobs, use the qdel command using the job id displayed by qstat.

uxxxxx@s001-n045:~$ qstat

Job ID Name User Time Use S Queue

------------------------- ---------------- --------------- -------- - -----

809147.v-qsvr-1 STDIN u61437 00:00:04 R batch

uxxxxx@s001-n045:~$ qdel 809147

Terminated

uxxxx@s001-n045:~$

qsub: job 809147.v-qsvr-1.aidevcloud completed

uxxxxx@login-2:~$

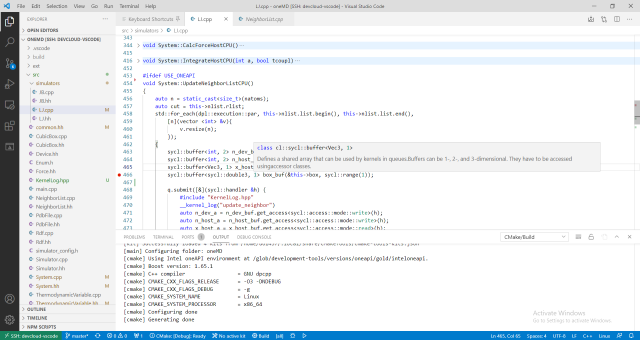

Visual Studio Code

Once we can create interactive jobs on DevCloud, we can connect to the interactive environment using Visual Studio Code's Remote SSH extension. Note that we need two logins - one to the base DevCloud environment to start the job and one to the hostname of our interactive job environment (e.g., s001-n045.aidevcloud) that VS Code will use. Our SSH config file already has the configuration describe above so all we need to say is something like ssh s001-n045.aidevcloud and then start VS Code.

The .vscode folder in the project repo contains files for getting IntelliSense to work and for building and debugging oneMD inside VS Code e.g. the c_cpp_properties.json file contains the paths to the include folders for the project and also for the DPC++ libraries.

With this environment, we can do C++ development on DevCloud as easily as we would using a locally installed toolchain.

CMake

The current version of CMake on DevCloud is 3.10. This is an older version and you may experience problems trying to build other libraries and apps, e.g., the latest SYCL-enabled version of the GROMACS simulator requires CMake 3.20. sudo isn't allowed on DevCloud and any additional programs you need must be installed in your home directory.

Fortunately, it's easy to get a private version of CMake and configure VS Code to use it. You can download the latest version of CMake from Kitware as a binary installer for Linux, e.g., cmake-3.19.5-Linux-x86_64.sh. You then execute this file and it will install CMake wherever is convenient like your home folder. In VS Code, you can then change the path to the CMake executable in your settings.json file. Note that this isn't needed for oneMD as it works fine with the CMake already installed.

Writing Kernels

We can take a brief look at writing kernels in DPC++ compared to using OpenMP. The first iteration of the Lennard-Jones simulator in oneMD is based on the OpenMP LJ simulator by James Wes Barnett. The first kernel in the simulator is the simplest - updating the neighbor list for each atom. For each atom in the simulation we keep track of its position as a 3D vector in a std::vector called x. We want to determine what the neighbouring atoms are within a certain radius called rlist and store the neighboring atoms in a list. This operation can be parallelized since the distance calculations for each atom do not change the atom positions and are independent of one another. This was implemented originally like this:

void NeighborList::Update(vector <coordinates> &x, cubicbox &box)

{

#pragma omp parallel for schedule(guided, CHUNKSIZE)

for (unsigned int i = 0; i < this->list.size(); i++)

{

this->list.at(i).resize(0);

}

#pragma omp parallel for schedule(guided, CHUNKSIZE)

for (unsigned int i = 0; i < x.size()-1; i++)

{

for (unsigned int j = i+1; j < x.size(); j++)

{

if (distance2(x.at(i), x.at(j), box) < rlist2)

{

this->list.at(i).push_back(j);

}

}

}

return;

}

After initializing the neighbor list vector for each atom to size zero, we iterate through each position vector and then iterate again through every subsequent vector calculating the distance between them and adding the second vector to the current atom's neighbor list if the distance is less than the radius threshold. Each operation is preceded by OpenMP #pragma directives for parallelizing the loop using threads on the CPU. Note that this kernel is originally implemented using std::vectors of different sizes for the neighbor list of each atom.

In contrast to the original implementation, the oneMD version can be executed in parallel on different hardware devices. The kernel is implemented in oneMD like this:

void System::UpdateNeighborList() {

dpc_common::TimeInterval timer;

auto n = static_cast<size_t>(natoms);

auto cut = this->conf.nlist;

int* neighbors = sycl::malloc_device<int>(n * n, q);

sycl::buffer<int, 2> n_buf (neighbors, sycl::range(n, n));

sycl::buffer<Vec3, 1> x_host_buf(&x[0], sycl::range(n));

auto k1 = q.submit([&](sycl::handler &h) {

#include "kernel_logger.hpp"

__kernel_logger("update_neighbor")

auto n_a = n_buf.get_access<sycl::access::mode::write>(h);

auto x_host_a = x_host_buf.get_access<sycl::access::mode::read>(h);

auto b = this->box;

h.parallel_for(sycl::range(n, n), [=](sycl::id<2> idx) {

auto i = idx[0];

auto j = idx[1];

if (i < j)

{

auto d = distance2(x_host_a[i], x_host_a[j], b);

if (d < cut)

{

printijdd2(idx, "Including this atom at distance ", d);

n_a[i][j] = 1;

}

else

{

n_a[i][j] = 0;

}

}

});

});

}

In contrast to the OpenMP kernel, we use fixed-size arrarys for the neighbor lists that all have the same size which creates a 2D grid that allows us to more effectively parallelize operations that use the neighbor lists. Memory for the neighbor lists is allocated on the device directly. We create SYCL buffers that allow us to read from the position vector list x and to write to the neighbor list arrays using SYCL accessors inside the kernel definition. We use C++ lambdas to define the kernel and to iterate in parallel over the position vectors x accessed as x_host_a as a 2D grid. We calculate the distance between each pair of atoms and store 1 in the neighbor list for atom x[i] if atom x[j] is within the threshold radius. As in the first we do not double count neighbors and only store neighbors for atom x[i] by considering distances to atom x[j] when i > j.

History