In this article, you will learn what you can do to protect your online privacy when even the most widespread messaging services seem to fall short of this goal.

Keep using your favorite chat application while preserving your privacy!

Introduction

With increasing levels of online data surveillance, user activity tracking and user profiling, threats to online data security and user privacy continue to worry most of "us", normal users. But can we do anything to protect our privacy when even the most widespread tools seem to fall short of this goal? The answer is "yes". The following video inspired me to try to contribute:

ProtonMail, Min. 8:53

After some months of hard work, the result is the multiplatform application shown below, a solution for everyone, which actually works! And to be honest, it works really well, for almost no money besides a few bucks for cables and adapters. Here, you find some videos with examples:

AC4QGP – Video Gallery

AC4QGP (Audio Chat for Quite Good Privacy) - "Tunneled Audio Chat" while Voice Calling with TRIfA

AC4QGP (Audio Chat for Quite Good Privacy) – Main Window

The Problem We Solve: Endpoint Security

Providers of chat applications offer “end-to-end” encryption (E2EE) as the ultimate measure against violation of online privacy. Unfortunately, end-to-end encryption is only as secure as the end-nodes, and most of the end-nodes have massive vulnerability problems. Infections with simple exploits like "key-loggers" give easy access to the initial encryption keys, thus making the end-to-end encrypted communication useless!

Quote:

"Endpoint security: The end-to-end encryption paradigm does not directly address risks at the communications endpoints themselves.

Each user's computer can still be hacked to steal his or her cryptographic key (to create a MITM attack) or simply read the recipients’ decrypted messages both in real time and from log files.

Even the most perfectly encrypted communication pipe is only as secure as the mailbox on the other end.[1]

Major attempts to increase endpoint security have been to isolate key generation, storage and cryptographic operations to a smart card such as Google's Project Vault.[19]

However, since plaintext input and output are still visible to the host system, malware can monitor conversations in real time.

A more robust approach is to isolate all sensitive data to a fully air gapped computer.[20] PGP has been recommended by experts for this purpose:

If I really had to trust my life to a piece of software, I would probably use something much less flashy — GnuPG, maybe, running on an isolated computer locked in a basement.

— Matthew D. Green, A Few Thoughts on Cryptographic Engineering

To deal with key exfiltration with malware, one approach is to split the Trusted Computing Base behind two unidirectionally connected computers that prevent either insertion of malware, or exfiltration of sensitive data with inserted malware.[22]"

https://en.wikipedia.org/wiki/End-to-end_encryption#Endpoint_security

Here is where E-E2EE ("enhanced" end-to-end encryption) comes to help!

How We Solve the Problem of Endpoint Security: E-E2EE

The article cited above already mentions some possibilities to solve the problem with endpoint security. I found that article "after" developing my application, but it pretty much describes the same basic idea.

The current solution does not demand locking your computer in a basement, although you may want to do that in order to increase security even further ;-)

You don't need two additional unidirectionally connected computers either, although that is a simple extension of the current idea which is very easy to implement. In fact, the current version already supports an "intermediate" approach, as shown in Figure 9 further below.

Instead, the current proposal targets a wider audience and focuses on practicality while achieving a giant leap in cybersecurity and online privacy.

Improvements described in the Further Improvements section below may be implemented for users with even more demanding requirements.

By combining encryption with audio modulation for encoding messages, an "enhanced" end-to-end encryption is achieved which enables safer communication, improving confidentiality and integrity of the exchanged information.

E-E2EE is based on the use of an additional isolated device as end-node, and two separate physical unidirectional audio channels used as data-diodes which enable tunneling a communication protocol carrying encrypted data. The encrypted data exchange between the isolated end-nodes prevents malware injection and data leak.

The idea makes use of a technology which is ubiquitous (found everywhere!) namely, the good-old audio interface, and reuses available services and infrastructure. This makes it available for everyone and almost everywhere. A typical use case considers a private chat using two standard devices on each side of the communication, with one of them running AC4QGP under Windows or Linux and the other running your favourite messenger.

You can use, for example, the following devices:

- laptop <--> PC

- laptop <--> cell phone

- laptop <--> laptop

- PC <--> PC

- PC <--> cell phone

... and the list continues: just add tablet, Raspberry Pi, etc. to the list above and combine them the way you want. Millions of people out there have this possibility, and they also want to protect their privacy when they chat with friends and family.

So, how do we solve the problem of end-node "in"-security?

Very simple: in the isolated device, a secret message is encrypted and then converted to a binary format. Then, the ONEs and ZEROs are encoded with two different frequencies, say fOne and fZero.

If fZero = 2*fOne, which simplifies decoding, then a ONE is converted to a sine wave cycle with period 1/fOne and a ZERO is converted to 2 sine wave cycles, each of period 1/fZero.

Each of the encoded bits in the stream consists exactly of N audio samples represented as int16, where N depends on the coding frequency and on the sampling rate.
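
The encoding step described above can be sketched as follows. This is a minimal illustration, not the AC4QGP code: the sampling rate, the tone frequencies, the amplitude and the function names are assumptions chosen so that one bit is exactly one cycle of fOne (N = 40 samples).

```python
from typing import List

import numpy as np

SAMPLE_RATE_HZ = 48000                         # assumed sampling rate
F_ONE_HZ = 1200                                # hypothetical tone for a ONE
F_ZERO_HZ = 2 * F_ONE_HZ                       # fZero = 2*fOne
SAMPLES_PER_BIT = SAMPLE_RATE_HZ // F_ONE_HZ   # N = 40 samples per bit
MAX_AMP = 32767                                # int16 full scale


def bytes_to_bits(data: bytes) -> List[int]:
    """Unpack bytes into bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def afsk_encode(data: bytes, amplitude: float = 0.5) -> np.ndarray:
    """Return int16 samples: one cycle of fOne per ONE, two cycles of fZero per ZERO."""
    t = np.arange(SAMPLES_PER_BIT) / SAMPLE_RATE_HZ
    one = np.sin(2 * np.pi * F_ONE_HZ * t)
    zero = np.sin(2 * np.pi * F_ZERO_HZ * t)
    chunks = [one if bit else zero for bit in bytes_to_bits(data)]
    return np.round(np.concatenate(chunks) * amplitude * MAX_AMP).astype(np.int16)
```

Calling `afsk_encode(b"\xA5")` yields 8 bits of 40 samples each, ready to be written to the audio output.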

Then, the audio output interface of your isolated-device takes the digital samples and converts them to an electrical analog signal which is transmitted over an audio cable (analog channel). The audio interface is used as a data-diode, which by construction allows data to travel in only one direction.

After that, the audio signal could also travel over several different media and may be converted back to digital, compressed, and even encrypted on its way to the target, e.g., when it is transmitted over VoIP.

Note that this encryption comes on top of our own encryption and is applied on the digital samples of the complete audio signal.

The intended recipient of the audio signal, who has the corresponding session key, just reverts the steps above and recovers the original secret message.
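
For the demodulation part of "reverting the steps", one possible approach is to compare, for each bit window, how strongly the samples correlate with each of the two tones. The sketch below assumes the same hypothetical frequencies as before and a stream that is already bit-aligned (in practice, the preamble of the protocol takes care of synchronization); decryption is not shown.

```python
import numpy as np

SAMPLE_RATE_HZ = 48000                         # assumed sampling rate
F_ONE_HZ = 1200                                # hypothetical tone for a ONE
F_ZERO_HZ = 2 * F_ONE_HZ                       # fZero = 2*fOne
SAMPLES_PER_BIT = SAMPLE_RATE_HZ // F_ONE_HZ   # 40 samples per bit


def afsk_decode(samples: np.ndarray) -> bytes:
    """Decode a bit-aligned AFSK stream by per-bit tone correlation."""
    t = np.arange(SAMPLES_PER_BIT) / SAMPLE_RATE_HZ
    # Complex references make the comparison insensitive to the tone phase.
    ref_one = np.exp(-2j * np.pi * F_ONE_HZ * t)
    ref_zero = np.exp(-2j * np.pi * F_ZERO_HZ * t)
    # Keep only whole bytes (8 bits of SAMPLES_PER_BIT samples each).
    usable = len(samples) - len(samples) % (8 * SAMPLES_PER_BIT)
    windows = samples[:usable].astype(np.float64).reshape(-1, SAMPLES_PER_BIT)
    level_one = np.abs(windows @ ref_one)
    level_zero = np.abs(windows @ ref_zero)
    bits = (level_one > level_zero).astype(np.uint8)
    return np.packbits(bits).tobytes()
```

Because only the relative strength of the two tones matters, the decision does not depend on the absolute signal level, which helps when the volume changes along the path.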

The same system is mirrored in order to be able to transmit secret messages from the other side.

The key point is that any person or system "in between" will just see the audio signal carrying the encrypted message, but will have no access to the session key or any resource of the end-nodes, because they are isolated/offline. Therefore, the attacker will not be able to decrypt the message or compromise the end-nodes.

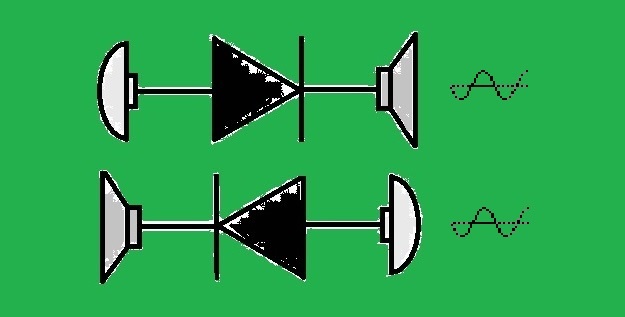

Figure 1 shows the simplest connection example, where two isolated devices A and D are connected over their speaker and microphone interfaces (speaker1 --> mic2, mic1 <-- speaker2), resulting in two separate physical channels, each allowing controlled unidirectional data transmission, thus behaving like 2 data-diodes.

In Figure 2, you find a basic configuration which shows an arrangement of devices which can be used for actual useful purposes.

Figure 1: Device Connection

Warning: Connecting audio interfaces as shown in Figure 1 may damage your device! Although I experienced no problems when doing all sorts of things with several different devices, take care to respect the allowed input levels of the microphone/line-in interfaces. Many devices adapt their impedance automatically depending on the detected audio signal level, so in the general case, no problems will occur.

In any case, avoid connecting a Speaker Output with high volume to a Microphone Input!

Here, you find a list of the connection options I used up to now:

- Speaker -> Line-In (preferred option!).

- Speaker with "low output volume" -> Mic.

- Speaker with "adapter cable with resistors and capacitors" -> Mic (impedance adaptation).

Here you find, for example, a video tutorial to connect headphone output/stereo mix to mic input.

- Speaker with T-Splitter (3.5mm to 2X adapter) with connected headphone -> Mic (impedance adaptation). In case you connect a smartphone, for correct detection, it may be necessary to connect the microphone of the headset as well, but it can be removed or muted afterwards.

The Idea in Short

The idea enables transmission and reception of secret messages between two parties, where low rate, half-duplex communication over an audio channel is established by transmitting encrypted data in a dedicated frequency range.

While data is superposed over voice in the time domain, in the frequency domain it is placed in a particular frequency range that is complementary to (non-overlapping with) the frequencies used by voice. This constructs a side channel with its own characteristics, e.g., low signal power, which allows placing the coding signal at low levels so that it does not interfere with voice communication.

The following arrangement of devices shows the general idea in a basic configuration:

Figure 2: Basic Configuration - Overview

Note: The communication is currently "half-duplex" because this is a limitation of voice-chat in most of the current messengers which we use as "infrastructure"! But of course, the idea can be extended to "full-duplex" communication.

Devices B and C, possibly separated by a long distance, communicate with each other transmitting bidirectional audio information over an open network (e.g., over a public switched telephone network (PSTN), the internet, or a cellular network) and using standard communication protocols like VoIP.

Devices B and C could be two cell phones holding a videoconference or a voice call using, e.g., Skype, or even better, using TRIfA, which is open source under the "GNU General Public License v2.0". Devices B and C could as well be laptops or even landline telephones. In the latter case, when using a normal telephone, you don't need your favourite messenger app anymore, which is used only as infrastructure for AC4QGP anyway.

Devices A and D, which are both offline, could be two PCs or laptops running AC4QGP.

The main assumption is that all these devices make use of standard interfaces and technologies that are vulnerable to exploits of all sorts. These interfaces and protocols are typically "digital".

Encrypted communication between devices A and D, which are offline, is achieved by plugging them into the audio peripherals (e.g., speaker and microphone) of devices B and C, as shown in Figure 2, thus being able to use the audio signal as a transmission medium (carrier) for secret messages.

Before connection establishment, devices A and D shall be isolated, that is, they need to be put offline in order to prevent information leakage. This can be done, e.g., by turning off the following interfaces if available:

- WLAN

- LAN

- Bluetooth

- USB

- GPS

While offline, devices A and D offer almost air-gap conditions, increasing security. That is, during session establishment, the generated or typed keys cannot be intercepted and sent to an attacker, even if the device was already infected with malware.

Besides, the typing of keys and the screen information in A and D cannot be compromised either. This is one of the major advantages of this method. In addition, the identity of the person or system "behind" devices A and D is hidden.

The next diagram shows again the basic configuration, now focusing on the concept of "tunneling".

Figure 3: Basic Configuration – Detail "tunneling messages"

The diagram clearly shows that, while both VPN and the encryption provided by typical messenger services like Skype, Messenger, WhatsApp and Signal are vulnerable to end-node attacks, the AC4QGP information remains secret.

The malware in B and C intercepts the "fake message" transmitted with the standard messenger and forwards it to Eve... as it has been doing for years :-( But now, with AC4QGP, the "real secret message", tunneled over the audio channel and protected with E-E2EE, is safe!

The malware has probably not even noticed our secret communication (emojis at the top), and even if it did, now it has really no chance to decrypt it! (emoji at the bottom in B).

Key Verification

"...‘end-to-end encryption’ is rapidly becoming the most useless term in the security lexicon. That’s because actually encrypting stuff is not the interesting part. The real challenge turns out to be distributing users’ encryption keys securely, i.e., without relying on a trusted, central service.

The problem here is simple: if I can compromise such a service, then I can convince you to use my encryption key instead of your intended recipient’s. In this scenario — known as a Man in the Middle (MITM) attack — all the encryption in the world won’t help you."

https://blog.cryptographyengineering.com/2013/03/09/here-come-encryption-apps/

For this reason, it is important to "verify" the exchanged keys as soon as the session has been established. For this purpose, AC4QGP displays a session code on the top right side of the app.

When you read this value out loud to your communication partner, they can check and confirm whether their session code matches yours. Only in that case can you be sure that you are not a victim of a MITM attack.

For convenience, both session codes are derived (to a much simpler and shorter format) from the session key which in turn is derived from both public keys exchanged during communication setup. The session codes on both sides have to match exactly.
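
The article does not spell out how the session code is computed, so the following is only one plausible way to shorten a session key into something that can be read aloud. The use of SHA-256, the label prefix, the function name and the six-digit length are all assumptions for illustration.

```python
import hashlib


def session_code(session_key: bytes, digits: int = 6) -> str:
    """Derive a short decimal code from the session key (hypothetical scheme)."""
    # Hash with a fixed label so the code does not reveal key bytes directly.
    digest = hashlib.sha256(b"session-code" + session_key).digest()
    number = int.from_bytes(digest[:8], "big") % 10 ** digits
    return f"{number:0{digits}d}"
```

Both sides run the same function on their own copy of the session key. If an attacker substituted a public key during setup, the two derived keys differ, and the codes read over the voice channel will almost certainly not match.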

Note that the voice channel acts as an out-of-band link for verification of the session code. If you instead used the same communication channel (in-band link) for that purpose, the attacker could again arrange things to convince you that you have the correct session code when you don't.

In order to activate voice communication, you shall press the following button:

The button will then turn red to show that you can stop voice communication anytime by pressing it again (toggle on-off = green-red).

Extended Configurations

Assuming the possibility to connect other "trusted networks or devices", devices A and D can be used to receive and send messages from/to other sources, e.g., acting as gateways or data adapters. For more information, check Further Features in AC4PGC.

Communication Protocol

Due to possible loss, corruption or repetition of data during transmission and reception, as well as the need to exchange the public keys in each session, a simple communication protocol is required. The telegrams of the protocol, exchanged as strict request-response sequences, consist of the following fields:

| Field | Number of bytes | Description |
| --- | --- | --- |
| Preamble | 4 - 20 | Synchronization and carrier detection |
| Start byte | 1 | 0xAA or 0x00 or 0xF2 or some useful information like source address |
| Address | 1 | Destination address, e.g. coded as 0:chat, 1:console, 2-255:files |
| Sequence Number | 1 | 0-255 "own" Seq. Nr. (TX) |
| Seq. Nr. ACK | 1 | 0-255 "remote" Seq. Nr. (RX) |
| Command | 1 | Command to be executed |
| Nr. of data bytes | 1 | Size of data field in bytes |
| Data | 0 - 255 | Data |
| End byte | 1 | 0x55 or 0xAA or 0xFF or 0xF2 or ~0xF2 = 0x0D or some useful information |
| Checksum | 1 | XOR of all bytes from Start Byte to last byte of Data |
| Terminator | 1 | Workaround to not corrupt Checksum bits due to signal distortions |
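
To make the field layout concrete, here is a small sketch that assembles and checks a telegram following the table above. The preamble pattern, the chosen start/end byte values and the terminator value are placeholders picked from (or compatible with) the table, and the function names are hypothetical; the real implementation may differ.

```python
from functools import reduce

PREAMBLE = bytes([0x55] * 8)   # placeholder pattern, 8 of the allowed 4-20 bytes
START_BYTE = 0xAA              # one of the start values listed in the table
END_BYTE = 0x55                # one of the end values listed in the table
TERMINATOR = 0x00              # placeholder value


def xor_checksum(block: bytes) -> int:
    """XOR of all bytes in the block."""
    return reduce(lambda acc, byte: acc ^ byte, block, 0)


def build_telegram(address: int, seq: int, seq_ack: int, command: int,
                   data: bytes = b"") -> bytes:
    if len(data) > 255:
        raise ValueError("data field is limited to 255 bytes")
    # The checksum covers Start Byte through the last byte of Data.
    body = bytes([START_BYTE, address, seq, seq_ack, command, len(data)]) + data
    return PREAMBLE + body + bytes([END_BYTE, xor_checksum(body), TERMINATOR])


def parse_telegram(frame: bytes) -> dict:
    content = frame[len(PREAMBLE):]
    if len(content) < 9 or len(content) < 9 + content[5]:
        raise ValueError("telegram too short")
    length = content[5]
    body = content[:6 + length]
    if content[0] != START_BYTE or content[6 + length] != END_BYTE:
        raise ValueError("bad framing")
    if content[7 + length] != xor_checksum(body):
        raise ValueError("checksum mismatch")
    return {"address": content[1], "seq": content[2], "seq_ack": content[3],
            "command": content[4], "data": body[6:]}
```

A single-byte XOR detects any single corrupted byte but can miss some multi-byte errors, which is one reason the protocol also relies on sequence numbers and acknowledgements.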

After entering the message, the chat information will be encrypted and transmitted. On correct reception of data, the receiver will reply with a positive acknowledgement. The acknowledgement may also contain data from the receiver.

On timeout of a retransmission timer or reception of a negative acknowledgement, the sender will retransmit the last telegram. This repeats a maximum number of times, which is configurable (MAX_RESENDS).
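
The retransmission rule can be sketched as a simple loop. Only the name MAX_RESENDS comes from the article; its value here, the callback signatures and the ("ACK"/"NAK", payload) reply format are assumptions for illustration.

```python
from typing import Callable, Optional, Tuple

MAX_RESENDS = 3   # name from the article; the value is a placeholder

Reply = Optional[Tuple[str, bytes]]   # None on timeout, else ("ACK" | "NAK", payload)


def send_with_retries(send: Callable[[], None],
                      wait_for_reply: Callable[[float], Reply],
                      timeout_s: float = 2.0) -> Optional[bytes]:
    """Send once, then resend up to MAX_RESENDS times until a positive reply arrives."""
    for _attempt in range(1 + MAX_RESENDS):
        send()
        reply = wait_for_reply(timeout_s)
        if reply is not None and reply[0] == "ACK":
            return reply[1]        # the acknowledgement may carry data
    return None                    # give up: the caller decides what to do next
```

Treating a timeout and a negative acknowledgement the same way keeps the sender simple: in both cases the last telegram goes out again.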

The complete communication protocol is transmitted modulating the audio signal.

The public cryptographic keys are exchanged during connection establishment. Then, using the derived shared key, symmetric encryption is used to encipher the data field of the telegrams.

Message Embedding

The following diagram shows the detail of message embedding:

Figure 4: Architecture Overview

In Figure 4, a rough overview of embedding and encryption is presented.

The input message mA is entered with a keyboard or touch-screen and then immediately encrypted with the encryption key (Key 4). The result is mAe.

The encrypted message mAe is then encoded applying the channel algorithm which uses Key 3. Key 3 consists of the specific settings used for embedding (like threshold values).

The values of Key 3 and Key 4 may be derived from the session key exchanged during connection establishment.

A simple embedding algorithm based on AFSK (Audio Frequency Shift Keying) converts each of the bits in the input message (mAe) to different frequencies within the bandwidth of the carrier signal.

In order to avoid distortions introduced by the carrier signal, a dedicated/exclusive and sufficiently small frequency range can be used which does not overlap with the frequencies used by the voice.

Note, though, that some carrier content will still remain in the coding frequency range. This is because real filters cannot completely remove signals beyond the defined boundaries. Better filters improve this, but the effect will always set a lower limit on the amplitude needed for the codes.

Note also that filters with sharp frequency cutoffs can produce outputs that ring for a long time when they operate on signals with frequency content in the transition band. In general, therefore, the wider a transition band that can be tolerated, the better behaved the filter will be in the time domain.

Figure 5: AFSK (Audio Frequency Shift Keying)

Because the input voice makes use of the complete bandwidth (e.g., Wideband VoIP: 50Hz - 7kHz), this requires that, before embedding, the carrier signal gets the embedding-frequency-range removed/filtered with a band-stop filter FcA.

Advanced embedding-techniques may take the carrier signal cA in consideration in the process of embedding. That is, the embedding process may depend on the current value of the carrier signal.

The correction factor SA is selected according to the expected or measured channel conditions, especially depending on the Signal-to-Noise ratio (SNR):

The SA value can be selected so the embedded-signal is close to the noise level. Depending on the technique used, the embedded-signal can even be below the noise level, e.g., for steganographic purposes as explained in AC4PGC (Audio Chat for Pretty Good Concealing). Then, with help of a correlation function and further advanced techniques, the message can be recovered.

For standard applications without previous knowledge of the channel conditions, a value of SA = MAX_AMP/1000 proved to give good results. That is, with this value, the embedding was not perceptible by the human ears and it could still be recovered out of the noise present in the carrier signal.

In the present article, with focus on reliability and increased channel capacity, we don't follow that target yet. Instead, extensions to support steganography will be added in future releases of AC4QGP and/or AC4PGC, where embedding will be based, e.g., on spread spectrum techniques, which will allow hiding the signal also in the frequency domain.

As explained before, the carrier signal cA is filtered with a band-stop filter FcA which removes the frequency range used for coding the message. Then, it is multiplied by the factor (1-SA) which adapts the signal to such an amplitude that, when added with the coding-signal in the time domain, it can never exceed the maximum level and saturate.

With this, the signal output to the speaker interface of the device is:

XA = cAf*(1-SA) + mAeS*SA

When considering in addition some noise added in the communication channel, we have:

YA = cAf*(1-SA) + mAeS*SA + nA
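
The two formulas above can be tried out numerically. The sketch below treats all signals as normalized to [-1, 1] and SA as a fraction between 0 and 1, which is an assumption about units. It also uses a brick-wall FFT mask as band-stop filter purely for brevity; a real implementation would use a proper filter with a wider transition band. The coding band limits and function names are hypothetical.

```python
import numpy as np

SAMPLE_RATE_HZ = 48000             # assumed sampling rate
CODE_BAND_HZ = (1000.0, 2600.0)    # hypothetical band reserved for the codes


def band_stop(signal: np.ndarray, low_hz: float, high_hz: float) -> np.ndarray:
    """Remove the band [low_hz, high_hz] with a brick-wall FFT mask (illustration only)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1 / SAMPLE_RATE_HZ)
    spectrum[(freqs >= low_hz) & (freqs <= high_hz)] = 0
    return np.fft.irfft(spectrum, n=len(signal))


def embed(carrier: np.ndarray, code: np.ndarray, sa: float) -> np.ndarray:
    """XA = cAf*(1-SA) + mAeS*SA, with cAf the band-stop filtered carrier."""
    carrier_filtered = band_stop(carrier, *CODE_BAND_HZ)
    # If both inputs stay within [-1, 1], the weighted sum cannot exceed 1.
    return carrier_filtered * (1 - sa) + code * sa


def add_channel_noise(xa: np.ndarray, noise_std: float, seed: int = 0) -> np.ndarray:
    """YA = XA + nA, with nA modelled here as white Gaussian noise."""
    rng = np.random.default_rng(seed)
    return xa + rng.normal(0.0, noise_std, size=len(xa))
```

With a carrier of amplitude 0.8 and SA = 0.1, for example, the mix peaks at no more than 0.8 * 0.9 + 0.1 = 0.82, so there is headroom left before saturation.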

The channel noise may be too strong or there may be other channel disturbances, which affect the data communication. In that case, we rely on the protocol described above, which takes care to retransmit data if required.

We must not forget that all carrier signals inevitably contain some environmental noise, most commonly pink noise. This noise, which cannot be distinguished from actual voice information, has not been shown in Figure 4 and is just considered to be part of cA.

On the top of Figure 4, the inverse process (decoding and extraction) shows how the message can be recovered.

The band-pass filter FYB-pass will give as a result YBf, which contains only the frequency-range of the input signal yB, where the embedded information was transmitted by the other device:

YB = cBf*(1-SA) + mBeS*SA + nB

YBf = mBeS*SA + nB

Then, YBf is multiplied by the factor 1/SA, giving back a close approximation of mBeS (the noise that falls inside the coding band is scaled by the same factor). This approximation can then be decoded and decrypted to give back the exact original message mB.
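
The extraction step can be illustrated in the same spirit as the embedding. Again, this is a sketch under assumptions: signals are normalized, SA is a fraction, the band limits are hypothetical, and the brick-wall FFT mask stands in for the real band-pass filter FYB-pass.

```python
import numpy as np

SAMPLE_RATE_HZ = 48000             # assumed sampling rate
CODE_BAND_HZ = (1000.0, 2600.0)    # hypothetical band reserved for the codes


def band_pass(signal: np.ndarray, low_hz: float, high_hz: float) -> np.ndarray:
    """Keep only the band [low_hz, high_hz] with a brick-wall FFT mask (illustration only)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1 / SAMPLE_RATE_HZ)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0
    return np.fft.irfft(spectrum, n=len(signal))


def extract(received: np.ndarray, sa: float) -> np.ndarray:
    """YBf = band_pass(YB); the estimate of the coding signal is YBf / SA."""
    return band_pass(received, *CODE_BAND_HZ) / sa
```

In a noise-free test with a 300 Hz "voice" tone and a 1200 Hz coding tone, `extract` returns the coding tone at its original amplitude. With noise present, whatever noise lies inside the coding band is amplified by 1/SA as well, which is why very small SA values demand a quiet channel.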

As mentioned before, the actual implementation will not transmit mB directly, as shown in the simplified overview, but it will instead transmit a telegram containing mB.

The telegram supports error detection and retransmission, ensuring consistency, data integrity and freshness. On error detection, the recipient can send back a negative acknowledgement or simply not answer and wait for the retransmission timeout on the sender side to expire.

Finally, the filter FYB-stop will result in YB ~ Voice B which is output to the speaker. You can hear your communication partner talking.

The “Carrier”

The description above defines cA as the “carrier signal”, which is the voice acting as a carrier to transport the embedded data. This is a correct term, especially in the context of steganography.

Nevertheless, this article will refer to another meaning of the term. Sorry for that, I didn't find a better term for now.

In the file audioSettings.py, you find two definitions, CARRIER_FREQUENCY_HZ and CARRIER_AMPLITUDE. These definitions refer to a "trick" of adding a signal, that may be needed in cases where the error rate in the transmission of data over messenger services is too high.

When testing Skype with 2 cellphones, I realized that using my 2 Huawei devices worked extremely well. But as soon as I used my Samsung, the communication in one direction would fail too often.

The audio interface in Android may be opened in the so called COMMUNICATION_MODE or in CALL_MODE. The latter provides high-quality audio which allows our signals to be transmitted without problems.

In COMMUNICATION_MODE, the quality of the signals is sometimes “disastrous”. This is noticeable during voice chats as if the person was in a bathroom. For data communication, that means that half of the telegrams may not make it through.

The origin of the problem is that VoIP was not designed to transport digital data encoded the way we do. This article confirms this problem and provides a good background on the technology.

Yes, what we do is nothing different to what a modem over IP (MoIP) does. At least when using “pass-through” (G.711 VoP channel).

A much better approach would be to have a MoIP solution using Modem-Relay (MR). Unfortunately, after years of standardization, there is still no widespread support of the V.150.1 protocol.

So, how does the trick with the carrier work? Well, I still don't know. I just discovered it by chance after lots of experimentation. My theory is that with help of the “carrier” signal, we force the algorithms, and probably also the Apps, to transmit where they would otherwise stop transmitting. Under certain conditions, our codes may be interpreted as disturbances and get removed automatically.

Several other artifacts may distort our signal in a significant way, e.g., compression artifacts and echo-canceling. In addition, there may be glitches, coupling between audio cables (cross-talk), electromagnetic interference (EMI), etc. I actually noticed all of that. For example, charging my cellphone produces peaks at 2kHz and in spectral-replicas (power at 2kHz: noise without interference = -93 dB, noise with interference = -86 dB).

With all that interference, it seems like a miracle that I managed to lower the error rate down to 5% with this simple trick!

With an error rate of 5%, we will lose on average one out of 20 telegrams. But we will not even notice because the lost telegram will be re-transmitted automatically by the communication protocol.

Some parameters that you may need to adapt in order to have a reliable communication are CHANNEL_DELAY_MS (in my case, 1500 for TRIfA and 1000 for Skype), ADD_CARRIER (True if your cellphone uses COMMUNICATION_MODE), AMPLITUDE and FFT_DETECTION_LEVEL.
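
As a rough picture of what the "carrier" trick amounts to, the sketch below adds a continuous low-level tone to the outgoing samples. The names ADD_CARRIER, CARRIER_FREQUENCY_HZ and CARRIER_AMPLITUDE appear in the article, but the values used here are placeholders, the signal is assumed to be normalized to [-1, 1], and the function itself is hypothetical.

```python
import numpy as np

SAMPLE_RATE_HZ = 48000            # assumed sampling rate
ADD_CARRIER = True                # e.g. when the phone uses COMMUNICATION_MODE
CARRIER_FREQUENCY_HZ = 1000.0     # placeholder, not the value used by the author
CARRIER_AMPLITUDE = 0.05          # placeholder, fraction of full scale


def add_carrier(signal: np.ndarray) -> np.ndarray:
    """Superpose a constant tone so the channel always has something to transmit."""
    if not ADD_CARRIER:
        return signal
    t = np.arange(len(signal)) / SAMPLE_RATE_HZ
    tone = CARRIER_AMPLITUDE * np.sin(2 * np.pi * CARRIER_FREQUENCY_HZ * t)
    # Clip as a safety net in case the sum leaves the valid range.
    return np.clip(signal + tone, -1.0, 1.0)
```

Even during pauses between telegrams, the output then never drops to pure silence, which fits the theory above that the tone keeps the voice processing from suppressing the link.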

Almost all parameters found in configuration.py and audioSettings.py can be comfortably changed on the GUI and stored for later reuse.

A funny thing about the value I currently use for the frequency of the “carrier” is that some may consider it a “magical frequency”. If you are curious, you can read this article for more information: Magical Frequency.

The Code

The code provided in this article can also be found on GitHub (you will probably find a newer version there).

It was developed as a “proof of concept” using Python 3.7 / 3.9 and tested on Windows 10 and Linux Mint 20.

README.txt contains the steps you need to follow in order to install your environment and generate an executable if required.

The following list shows the most important python scripts:

- AC4QGP.py

- audioReceiver.py

- audioSettings.py

- audioTransmitter.py

- configuration.py

- init.py

- soundDeviceManager.py

- updateRequirements.py

- ui/mainWindow.py

Besides, the following files are useful:

- config.ini

- gen_exe_with_pyinstaller.bat

- audit_code.bat

- requirements.txt

- README.txt

Note that this proof of concept focuses on demonstration. That is, the code was written to prove that the idea really works.

For example, for experimentation purposes, many parameters are defined which enable exploring different possibilities in different environments and configurations (e.g., using different messenger Apps or a landline telephone). Check the tabs Settings and Advanced (see Figures 6 and 7 further below). In addition, a plot window can be displayed to show the signal in real time in the time domain or in frequency domain (see Figure 8).

This is the reason why the quality of the code may be low for other goals. For example, it violates intentionally very basic principles such as “data encapsulation/data hiding”, “single-responsibility principle”, just to mention some.

Extreme programming prioritizes simplicity and time over formalities, and allows the use of shortcuts and workarounds. Please take this into account if you intend to "learn" programming. This code is certainly not the best example in that case.

For professional use, a complete refactoring of the code is required, the use of licenses needs to be checked, etc. Especially, the introduction of a properly implemented state machine would be required, polling could be replaced with event-driven methods, etc.

Nevertheless, as a reference implementation, the code provides a good starting point for any serious project. It has a wide functionality and is robust enough to be used even for real purposes.

Figure 6: Tab Settings

Figure 7: Tab Advanced

Figure 8: Plot Window

Some Recommendations

Notice that you may be using the same audio output for data transmission and for the system sounds or audio output of some other application. That is, of course, not a good idea because they could interfere with the data signal. So, adjust your settings as needed.

In general, I recommend disabling all audio effects of all audio interfaces on your system (on Windows/Linux and on Android too!). But sometimes, some of the audio features may actually help, like the microphone booster, which could unfortunately also saturate your device. So, if required, test with care, changing one setting at a time and combining different settings to see their effects.

Set the volumes appropriately, and set the format to “16 Bit, 48000 Hz (DVD-Quality)”.

During initial setup, it usually helps to capture the audio signals in parallel using, e.g., Audacity. There, you can check their levels, their shapes, and then adjust your settings as required.

Although I could not test as much as I wished up to now, of all the messengers I tried, I can certainly recommend using TRIfA, which is free, open source, and works really well.

TRIfA implements the Tox protocol, enabling peer-to-peer messaging and video-calling with end-to-end encryption.

You may need to increase the audio buffer in TRIfA to fit a complete telegram, say to 10240.

The more audio settings you can adjust in your messenger, the more possibilities you have to obtain a more reliable communication.

Unfortunately, most of the messengers are black-boxes offering little to no possibilities to change your audio settings. So far I tried TRIfA, Skype, Messenger, Signal, WhatsApp, Discord and Wire.

Some of the apps worked really badly, depending sometimes on the time of day. Sometimes they work perfectly until, for some reason, they just stop working. Maybe some algorithm in the communication infrastructure is up to no good?

Another thing you should be really careful about is using audio interfaces in your smartphone. Plugging and unplugging stuff, re-configuring, etc. All that can just fail without notice. Apps like the Android-App Lesser AudioSwitch can help with the system configuration and checking the “real” connection status.

Improved Setup

What you can do "outside" AC4QGP in order to increase cybersecurity:

- If possible, keep the end-node-device offline forever, or at least don't connect it to your online-device other than through audio interfaces

- Run AC4QGP stored in an external data storage device (e.g., a USB flash drive or SD card)

- Use a live CD (with or without persistence) to run AC4QGP

- Use a virtual machine (with or without persistence) to run AC4QGP

- Use a trusted and safe VPN in your online-device (be aware of this)

- Split TX, RX (use one online-device for transmission and a different online-device for reception as shown in Figure 9)

Figure 9: Split configuration

In the split configuration shown in Figure 9, B1 could be using Skype to receive information while B2 could be running TRIfA to transmit information.

Notice that AC4QGP already supports such configurations: both physical channels are independent and may be deployed on the same online-device or on different ones. This is transparent for AC4QGP.

In Figure 10, a portable configuration with increased cybersecurity features is shown.

Figure 10: Configuration with increased cybersecurity features

Note that the configuration presented in Figure 10 above is portable in the sense that it is small enough to be carried in your pocket, and it can be connected between any two devices, one acting as the online-device (e.g., with TRIfA running on a smartphone) and the other acting as the offline-device (e.g., any machine able to boot Windows from a Live CD through USB).

Once the chat session is finished, you can just unplug the USB-hub... you will then leave no traces!

So, next time you visit a friend, you may want to try this gadget and check how well it works.

All the equipment you need is two USB flash drives, two USB sound cards, a USB hub, a headset and an audio cable.

Note that you could even plug your headset directly into the laptop, or you could connect the smartphone to the laptop using an audio cable, thus requiring only one USB sound card.

Data Rate

The current implementation encodes and transfers data at a rate of 1200 bits per second using AFSK (transmission in only one direction at a time).

But the real rate is given mostly by the error rate and by the delays introduced in the communication channel.

A realistic rate can be estimated by assuming a channel delay of 400 ms, an error rate of 5% and 16 bytes of data payload in each telegram.

With those figures, we get approximately 16 bytes of payload every second, simultaneously in both directions.
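The estimate above can be reproduced with a rough model. The following is only a sketch under stated assumptions, not the calculation used in AC4QGP: it assumes that one exchange consists of our telegram, the channel delay, the peer's telegram and the channel delay again, that the 5% error rate applies per telegram, and that a damaged telegram is simply repeated in the next exchange. The function name and the `overhead_bytes` parameter (framing bytes around the payload) are hypothetical; the article does not state the telegram overhead, so it defaults to zero here.

```python
def effective_rate(bitrate_bps=1200, payload_bytes=16, overhead_bytes=0,
                   channel_delay_s=0.4, error_rate=0.05):
    """Estimate payload bytes per second in each direction (rough model)."""
    # Time on air for one telegram: payload plus assumed framing overhead.
    telegram_s = 8 * (payload_bytes + overhead_bytes) / bitrate_bps
    # One exchange: our telegram, channel delay, peer's telegram, channel delay.
    cycle_s = 2 * (telegram_s + channel_delay_s)
    # A damaged telegram is repeated, so only (1 - error_rate) of the
    # exchanges deliver new payload.
    return payload_bytes * (1 - error_rate) / cycle_s


rate = effective_rate()
print(f"{rate:.1f} bytes/s per direction")  # prints "15.0 bytes/s per direction"
```

With these assumptions, the model yields about 15 bytes per second in each direction, which is in line with the figure given above. Any real framing overhead lowers the result somewhat.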

Note that when we transmit data, the telegram coming from the other side as a response may contain data as well, and it will also acknowledge the reception of our previous telegram.
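To illustrate how a single telegram can carry data and an acknowledgement at the same time, here is a minimal sketch. The layout (sequence number, acknowledged sequence number, payload length, payload, CRC-32) and the function names are assumptions made for this example only; they are not the telegram format used by AC4QGP.

```python
import struct
import zlib

# Hypothetical header layout: sequence number, acknowledged number, payload length.
HEADER = struct.Struct(">BBB")


def build_telegram(seq, ack, payload=b""):
    """Pack header and payload, then append a CRC-32 for error detection."""
    body = HEADER.pack(seq & 0xFF, ack & 0xFF, len(payload)) + payload
    return body + struct.pack(">I", zlib.crc32(body))


def parse_telegram(raw):
    """Return (seq, ack, payload), or None if the telegram is damaged."""
    if len(raw) < HEADER.size + 4:
        return None
    body, (crc,) = raw[:-4], struct.unpack(">I", raw[-4:])
    if zlib.crc32(body) != crc:
        return None  # the sender gets no acknowledgement and has to retransmit
    seq, ack, length = HEADER.unpack(body[:HEADER.size])
    payload = body[HEADER.size:]
    if len(payload) != length:
        return None
    return seq, ack, payload


# The reply carries its own data and acknowledges telegram number 1.
reply = build_telegram(seq=7, ack=1, payload=b"hi there")
print(parse_telegram(reply))

# A single flipped bit is caught by the CRC check.
damaged = bytes([reply[0] ^ 0x01]) + reply[1:]
print(parse_telegram(damaged))
```

Because the acknowledgement travels inside the next data telegram, no separate acknowledgement telegram is needed as long as both sides have something to send.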

Cybersecurity Considerations

As explained above, the offline-devices should avoid standard digital interfaces like WLAN, LAN, Bluetooth, USB and GPS, all of which are known to be vulnerable to exploits. This dramatically reduces the number of countermeasures required when compared with devices which are online.

Ironically, in the era of "digitalization", a solution based on the "good old analog communication" seems to overcome many of the security problems we face when dealing with digital communication.

In my humble opinion, modern cryptography urgently needs to be complemented by the following two techniques:

The first bullet is covered in this article which makes use of data-diodes. The second bullet is covered in AC4PGC and will be implemented in the next part of this article.

So, how big is the advantage when using E-E2EE as compared to E2EE alone?

The following diagram might help you visualize the answer.

Figure 11: Data Diode

If you have used a well-configured firewall up to now (see vFirewall in Figure 11), which is the best case for normal users, adding E-E2EE based on data-diodes can increase your security and your privacy considerably, without the need for expensive network equipment!

I hope I provided enough evidence and explanations as to why this is true.

But please convince yourself first by doing some research of your own, if you have the time. I encourage you to do so (only convinced users are loyal users).

Applications

The same "ubiquitous" technology may be used for countless applications such as:

- P2PE (Point to Point Encryption) for payment solutions - e.g., using a card-reader on the end-point.

- "true private chat" !!!

- support services (banks, insurance, government...)

- telemedicine

- industrial control

- chatbot services (see above)

This technology is not limited to text messages. For example, with text-to-speech (TTS), it is possible to support things we cannot even imagine today. Watch this to grasp the incredible potential: GPT-3.

Believe it or not, the interface to GPT-3 is a text interface!

Further Improvements

The list is very long, but I will try to summarize the most important improvements that may follow:

- code refactoring

- tests with landline telephony, cellular network, and radio link (adaptations if required)

- improved filters

- encryption of the complete telegram (option)

- steganography

- console access

- socket-Gateway (see Figure 3: Extended Configuration in AC4PGC)

- increased data rate (e.g. 33.6 kbit/s)

- file transfer

- connection monitoring (with exchange of life-sign-telegrams)

- carrier auto detection

- audio monitoring (to detect suspicious audio signals, infiltrations, data-leaks)

- text-to-speech

- HART protocol (for industrial applications)

Links

Here you will find links that I gathered recently on related topics; they may provide orientation on the technologies used and on the available protocols, standards, and tools:

So, is AC4QGP really something new? Well, yes and no.

As I discovered not long ago, the idea of tunneling data over audio has actually been around for a long time:

There are also different ideas which get close to it:

chirp

But as far as I know, there are currently no applications which put everything together the way I did, especially with features that support one of the favorite toys of modern people: the online chat.

Summary

In short, some of the main technical points of the method presented in this article are:

- Isolated device plugged between audio peripherals and unsafe communication device

- Simple communication protocol with error detection and retransmission

- Data encryption in isolated end-node (E-E2EE)

- Channel-encoding based on Audio-FSK in a reduced frequency range:

  - Audio carrier pre-filtered to remove components in coding-frequency-range

  - Coding-signal added to carrier under consideration of relative amplitudes

  - Magic frequency added to cope with issues related to MoIP pass-through

- Dedicated multiplatform chat application implementing the points above
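As an illustration of the Audio-FSK channel encoding named in the list above, here is a minimal modulator sketch. It is not the AC4QGP implementation: the tone frequencies (1200 Hz for a one and 2200 Hz for a zero, as in the Bell 202 modem standard), the sample rate, the bit order and the function name are assumptions. Only the rate of 1200 bits per second comes from this article. The carrier pre-filtering, the mixing with the audio carrier and the magic frequency are not modeled.

```python
import math


def afsk_modulate(data, baud=1200, mark_hz=1200.0, space_hz=2200.0,
                  sample_rate=48000, amplitude=0.5):
    """Turn bytes into AFSK audio samples (floats between -1.0 and 1.0)."""
    samples_per_bit = sample_rate // baud
    phase = 0.0
    samples = []
    for byte in data:
        for bit_index in range(8):  # assumed bit order: least significant first
            bit = (byte >> bit_index) & 1
            freq = mark_hz if bit else space_hz
            step = 2.0 * math.pi * freq / sample_rate
            for _ in range(samples_per_bit):
                samples.append(amplitude * math.sin(phase))
                # Keep the phase running across bit boundaries so that the
                # signal has no jumps when the tone changes.
                phase = (phase + step) % (2.0 * math.pi)
    return samples


audio = afsk_modulate(b"AC4QGP")
print(len(audio), "samples,", len(audio) / 48000, "seconds")
```

Keeping the phase continuous avoids clicks at the bit transitions, which would otherwise spread energy outside the coding-frequency-range.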

The proposed embedding technique as well as many other aspects of the idea can be improved in the future.

Why Do We Need Something like AC4QGP?

Well, because privacy is a human right!

We cannot have a free society without online privacy.

The business model of most of the "free" messengers is getting your private data. That's the only price you have to pay. But, is it a fair price?

These big companies have no interest in securing your app or your device, so you have to do it yourself.

We need to change the status-quo and work towards a free internet, towards a free world.

Tools like AC4QGP and the new-old ideas behind them need to be spread.

AC4QGP could become the first step in an entirely new revolution - one that makes truly private communication a reality.

You can contribute by using it and helping to improve it until we've reached a point where real online privacy is the default feature for everyone!

Using the Code

Besides this article and the links provided in it, you will find a short README.txt file which should help you with the initial steps.

Points of Interest

Borrowing some words from Andy Yen (see link above):

Quote:

"What we have here is just the first step, but it shows that with improving technology, privacy doesn't have to be difficult, it doesn't have to be disruptive. Ultimately, privacy depends on each and every one of us. And we have to protect it now because our online data is more than just fractions of ones and zeros. It's actually a lot more than that. It's our lives, our personal stories, our friends, our families, and in some ways also our hopes and aspirations. So, now it's the time for us to stand up and say: yes, we do want to live in a world with online privacy. And yes, we can work together to turn this vision into reality!"

For a real-life scene about dangers to online-privacy, check this link:

Here is the complete playlist of the tool used above:

Also check out another article with source code: IP Radar 2, for real-time detection and defense against malicious network activity and policy violations (exploits, port-scanners, advertising, telemetry, state surveillance, etc.).

History

- 27th February, 2021: Initial version

- 1st March, 2021: Minor corrections

- 5th March, 2021: Link to IP Radar 2 added

- 7th March, 2021: Figure 8 updated