This article continues building your familiarity with an automated, CI/CD-powered cloud native workflow. It gives you a taste of a workflow where changes are built, tested, and deployed as soon as they're committed to a Git repository.

In part two of this series, we created an Azure Function in Node.js and TypeScript using the default Visual Studio Code template. We also created a build pipeline to build and test the code and transpile the TypeScript to JavaScript. With a release pipeline, we automatically deployed the function to Azure.

In this article, we’ll add a database to our function, then deploy the function, including the required infrastructure, to Development, Testing, Acceptance, and Production (DTAP) environments. By the end of the article, we’ll have a microservice, complete with a database, that can be deployed to every DTAP environment.

Creating a Cosmos DB Account

Let’s start by adding a database to our function. We’re using Azure Cosmos DB with the SQL API, and we have a good reason for that: the Cosmos DB bindings for Azure Functions, which we use in a moment, only support the SQL API. Otherwise, a JavaScript or TypeScript developer might prefer the MongoDB API. With it, you still run Cosmos DB but can connect using any MongoDB library, such as Mongoose. For what we’re showing you next, though, the SQL API is the one we need.

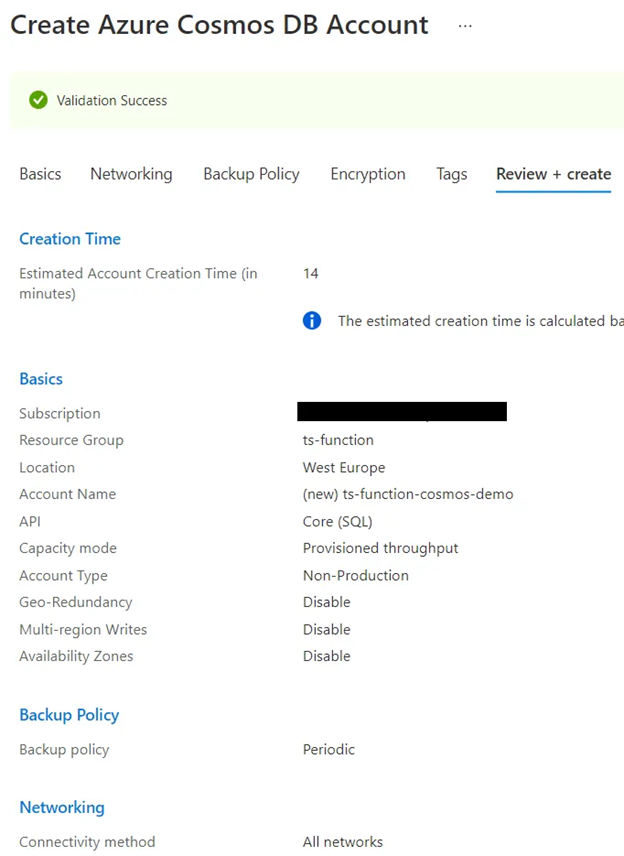

Go to the Azure portal and create an Azure Cosmos DB account. Place it in the same resource group as your function for easy cleanup later, pick a unique name and a region near you, and leave all other settings at their defaults.

Creation can take a while (14 minutes in our case). Meanwhile, we can focus on our function’s code.

Insert Using the Output Binding

To read data from and write data to Cosmos DB, we use Azure Functions bindings. For example, if we configure an input binding with a document ID or a query, the runtime fetches the matching data and hands it to our function automatically. Likewise, if we add an output binding and assign an object to it, the runtime adds that object to Cosmos DB for us. There are bindings for many other services, such as Blob, Table, and Queue storage, Service Bus, and Event Hubs, but here we’ll use the Cosmos DB binding.

Your function has its own folder in your project, and this folder contains a function.json file. When you open this file, you see two bindings, an "in" and an "out" binding. These are your HTTP trigger’s request and response. You’ll also see that the GET and POST methods are currently allowed for your function.

Let’s start by inserting documents into our database. For this, we only need POST, so remove the GET method from the configuration. It’s also nice to add a route to our function, which leaves room for other functions later, so add a route property with the value "person" to the input binding. Finally, add a Cosmos DB output binding to your configuration.

```json
{
  "bindings": [
    {
      "authLevel": "function",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": [
        "post"
      ],
      "route": "person"
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    },
    {
      "name": "personOut",
      "databaseName": "mydb",
      "collectionName": "person",
      "createIfNotExists": true,
      "connectionStringSetting": "CosmosDBConnString",
      "partitionKey": "/partitionKey",
      "direction": "out",
      "type": "cosmosDB"
    }
  ],
  "scriptFile": "../dist/HttpTrigger1/index.js"
}
```
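The code we write next assigns the new person to context.bindings.personOut, so the binding name in function.json and the name in the code have to match exactly. If they don't, the runtime has no binding to route the value to and nothing reaches Cosmos DB. A small check like the sketch below could run as one of the tests in the build pipeline from part two. The BindingConfig and FunctionConfig types cover only the fields read here, and findBinding and assertCosmosOutput are hypothetical helpers, not part of any Azure package.

```typescript
// Minimal shape of the parts of function.json this check reads
// (an assumption, not the full schema).
interface BindingConfig {
  name: string;
  type: string;
  direction: "in" | "out" | "inout";
  [key: string]: unknown;
}

interface FunctionConfig {
  bindings: BindingConfig[];
}

// Hypothetical helper: look up a binding by the name the code uses.
function findBinding(config: FunctionConfig, name: string): BindingConfig | undefined {
  return config.bindings.find((b) => b.name === name);
}

// Throws a readable error if the Cosmos DB output binding is missing or misconfigured.
function assertCosmosOutput(config: FunctionConfig, name: string): void {
  const binding = findBinding(config, name);
  if (!binding) {
    throw new Error(`No binding named "${name}" in function.json`);
  }
  if (binding.type !== "cosmosDB" || binding.direction !== "out") {
    throw new Error(`Binding "${name}" must be a cosmosDB output binding`);
  }
  if (typeof binding.connectionStringSetting !== "string") {
    throw new Error(`Binding "${name}" has no connectionStringSetting`);
  }
}

// A trimmed copy of the function.json above.
const config: FunctionConfig = {
  bindings: [
    { name: "req", type: "httpTrigger", direction: "in", methods: ["post"], route: "person" },
    { name: "res", type: "http", direction: "out" },
    {
      name: "personOut",
      type: "cosmosDB",
      direction: "out",
      databaseName: "mydb",
      collectionName: "person",
      connectionStringSetting: "CosmosDBConnString",
    },
  ],
};

assertCosmosOutput(config, "personOut"); // passes silently when the config is right
```

In a real test you would probably load the file with JSON.parse(readFileSync("HttpTrigger1/function.json", "utf8")) rather than copying it inline.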

Cosmos DB uses a partition key to partition your data. The partition key is mandatory, but its value can be empty or a constant string. We’re using the first letter of the person’s first name. We have to specify the partition key path here because we’re telling the function to create the database and collection if they don’t exist.

Also, open your local.settings.json file and add the CosmosDBConnString setting under Values. You can find the connection string on the Keys page of your Cosmos DB account in the Azure portal.
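The partition key value is simply a property on the document whose name matches the path in the binding, so "/partitionKey" refers to the partitionKey property. The sketch below derives that value from the first name, as the article does, with two variations that are our own assumptions: it upper-cases the initial so "john" and "John" land in the same logical partition, and it rejects an empty name instead of failing on firstName[0]. partitionKeyFor is a hypothetical helper name.

```typescript
// Derive the partition key value from a person's first name.
// Variation (assumption): normalize to upper case so casing doesn't split partitions.
function partitionKeyFor(firstName: string): string {
  const trimmed = firstName.trim();
  if (trimmed.length === 0) {
    throw new Error("firstName is required to derive a partition key");
  }
  // Take the first code point rather than the first UTF-16 unit, so a name
  // starting with a character outside the Basic Multilingual Plane isn't cut in half.
  const initial = Array.from(trimmed)[0];
  return initial.toUpperCase();
}

const key = partitionKeyFor("  john "); // "J"
```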

Fixing Our TypeScript

We can now change our function so it returns the person to the runtime. For the person’s ID, we’re going to use a GUID, so first run npm install guid-typescript. Then, add import { Guid } from "guid-typescript"; to the top of your TypeScript file.

For the person, we define an interface.

```typescript
// Shape of a person document stored in Cosmos DB.
interface Person {
    id: string;
    email: string;
    partitionKey: string;
    firstName: string;
    lastName: string;
}
```

Then, in our function, we create a person and assign it to context.bindings.personOut. The property name must match the name in the output binding configuration, because that name is how the Functions runtime knows it should add this person to Cosmos DB.

Last, we return the person to the caller so they can store the generated ID.

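```typescript
import { AzureFunction, Context, HttpRequest } from "@azure/functions";
import { Guid } from "guid-typescript";

// The Person interface defined above goes here.

const httpTrigger: AzureFunction = async function (context: Context, req: HttpRequest): Promise<void> {
    // Build the document; the partition key is the first letter of the first name.
    const p: Person = {
        id: Guid.create().toString(),
        email: req.body.email,
        partitionKey: req.body.firstName[0],
        firstName: req.body.firstName,
        lastName: req.body.lastName,
    };

    // The runtime writes whatever is assigned to the "personOut" binding to Cosmos DB.
    context.bindings.personOut = p;

    // Return the person, including its generated ID, to the caller.
    context.res = {
        body: p
    };
};

export default httpTrigger;
```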
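The function only assigns values to context.bindings and context.res, so you can exercise its logic without the Functions runtime or a database. The sketch below rests on a few assumptions. FakeContext and FakeRequest are hypothetical stand-ins for the real Context and HttpRequest types from @azure/functions and include only the members used here. Node's built-in crypto.randomUUID replaces guid-typescript so the example has no dependencies. The handler is kept synchronous for easy testing, while the real one is async because the runtime awaits it.

```typescript
import { randomUUID } from "crypto";

interface Person {
  id: string;
  email: string;
  partitionKey: string;
  firstName: string;
  lastName: string;
}

// Hypothetical stand-ins for the members of Context and HttpRequest we use.
interface FakeContext {
  bindings: Record<string, unknown>;
  res?: { status?: number; body?: unknown };
}
interface FakeRequest {
  body?: { email?: string; firstName?: string; lastName?: string };
}

// Same idea as the article's function: build a person, hand it to the output
// binding, and echo it back. The runtime, not this code, writes whatever ends
// up in context.bindings.personOut to Cosmos DB.
function createPerson(context: FakeContext, req: FakeRequest): void {
  const body = req.body ?? {};
  const firstName = body.firstName ?? "";
  const p: Person = {
    id: randomUUID(),
    email: body.email ?? "",
    partitionKey: firstName.charAt(0), // "" instead of a crash when firstName is empty
    firstName,
    lastName: body.lastName ?? "",
  };
  context.bindings.personOut = p;
  context.res = { body: p };
}

const ctx: FakeContext = { bindings: {} };
createPerson(ctx, { body: { email: "john.smith@contoso.com", firstName: "John", lastName: "Smith" } });
const saved = ctx.bindings.personOut as Person;
console.log(saved.partitionKey, saved.id);
```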

Now, fire up Postman, cURL, or any other tool that can send POST requests to HTTP services. Start your function locally (for example, with F5 in VS Code) and copy its URL, which should look like http://localhost:7071/api/person (7071 is the default port), into your tool of choice. Set the Content-Type header to application/json and give the request a body like:

```json
{
  "email": "john.smith@contoso.com",
  "firstName": "John",
  "lastName": "Smith"
}
```
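If you’d rather script the request than click through Postman, the helper below prepares it. It’s a sketch under assumptions: buildCreatePersonRequest is a hypothetical name, and http://localhost:7071 is the host Azure Functions Core Tools normally uses locally. The optional functionKey only matters once the function is deployed, because authLevel is "function". The x-functions-key header is one way Azure Functions accepts a function key, and the code query-string parameter is the other.

```typescript
interface NewPerson {
  email: string;
  firstName: string;
  lastName: string;
}

interface PreparedRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: prepare a POST to the /api/person route.
function buildCreatePersonRequest(baseUrl: string, person: NewPerson, functionKey?: string): PreparedRequest {
  const url = new URL("/api/person", baseUrl).toString();
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (functionKey) {
    // Deployed functions with authLevel "function" expect a key.
    headers["x-functions-key"] = functionKey;
  }
  return { url, method: "POST", headers, body: JSON.stringify(person) };
}

const request = buildCreatePersonRequest("http://localhost:7071", {
  email: "john.smith@contoso.com",
  firstName: "John",
  lastName: "Smith",
});
// With Node 18 or later you could send it with:
// const response = await fetch(request.url, request);
```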

If all goes well, it should return a 200 status and a copy of your person. Since we don’t validate that John Smith already exists, we can keep adding John Smith, but we should get a unique ID every time.
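Because the function doesn’t validate its input, a request without firstName fails on firstName[0], and one without email stores an incomplete document. A check like the sketch below could run before the person is built, with the handler returning a 400 when it reports problems. validatePersonInput and its deliberately loose email pattern are our assumptions, not part of the article’s code.

```typescript
interface PersonInput {
  email?: unknown;
  firstName?: unknown;
  lastName?: unknown;
}

// Deliberately loose: "something@something" with no spaces.
const EMAIL = /^[^@\s]+@[^@\s]+$/;

// Returns a list of problems; an empty list means the input is usable.
function validatePersonInput(body: PersonInput | undefined): string[] {
  if (!body) {
    return ["request body must be a JSON object"];
  }
  const errors: string[] = [];
  for (const field of ["email", "firstName", "lastName"] as const) {
    const value = body[field];
    if (typeof value !== "string" || value.trim() === "") {
      errors.push(`${field} is required`);
    }
  }
  if (typeof body.email === "string" && body.email.trim() !== "" && !EMAIL.test(body.email)) {
    errors.push("email looks invalid");
  }
  return errors;
}

// In the handler, something like:
// const errors = validatePersonInput(req.body);
// if (errors.length > 0) { context.res = { status: 400, body: { errors } }; return; }
```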

To confirm that the documents were really stored, open your Cosmos DB account in the Azure portal and go to Data Explorer.

Read Using the Input Binding

Now, create a second function to read the person from Cosmos DB. In VS Code, go to the Azure tab, click Create Function and pick the HTTP trigger. This creates HttpTrigger2 in a separate folder.

Again, open up the function.json file, but this time remove the POST method and add a route with value person/{partitionKey}/{id}. The Cosmos DB input binding looks a bit like the output binding, except we need to specify an ID and partition key. We use our route parameters here.

```json
{
  "bindings": [
    {
      "authLevel": "function",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": [
        "get"
      ],
      "route": "person/{partitionKey}/{id}"
    },
    {
      "name": "personIn",
      "databaseName": "mydb",
      "collectionName": "person",
      "connectionStringSetting": "CosmosDBConnString",
      "id": "{id}",
      "partitionKey": "{partitionKey}",
      "direction": "in",
      "type": "cosmosDB"
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    }
  ],
  "scriptFile": "../dist/HttpTrigger2/index.js"
}
```

This time, we read the person from context.bindings.personIn, the name of the input binding. The partition key and ID are taken from the URL, so we can get a person by browsing to https://[your URL]/api/person/[initial]/[ID]. The TypeScript is pretty simple.
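To see how {partitionKey} and {id} get their values, here is a deliberately simplified model of route-template matching in which each {name} segment captures one path segment. The Functions host does this matching itself and supports more, such as optional parameters and constraints. matchRoute is a hypothetical illustration, not the host’s implementation, and comparing literal segments case-insensitively is our choice.

```typescript
// Simplified model: split template and path on "/", match literal segments,
// and capture {name} segments. Returns undefined when the path doesn't match.
function matchRoute(template: string, path: string): Record<string, string> | undefined {
  const tParts = template.split("/").filter((s) => s !== "");
  const pParts = path.split("/").filter((s) => s !== "");
  if (tParts.length !== pParts.length) {
    return undefined;
  }
  const params: Record<string, string> = {};
  for (let i = 0; i < tParts.length; i++) {
    const t = tParts[i];
    const p = pParts[i];
    const param = /^\{(\w+)\}$/.exec(t);
    if (param) {
      params[param[1]] = decodeURIComponent(p);
    } else if (t.toLowerCase() !== p.toLowerCase()) {
      return undefined;
    }
  }
  return params;
}

// The binding expressions "{partitionKey}" and "{id}" in function.json
// are then filled in from values like these.
const params = matchRoute("person/{partitionKey}/{id}", "person/J/8d0e9c6a-1b2c-4d3e-9f40-123456789abc");
console.log(params);
```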

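```typescript
import { AzureFunction, Context, HttpRequest } from "@azure/functions";

const httpTrigger: AzureFunction = async function (context: Context, req: HttpRequest): Promise<void> {
    // The runtime has already looked up the document using the {partitionKey}
    // and {id} route parameters and placed it in the "personIn" binding.
    context.res = {
        body: context.bindings.personIn
    };
};

export default httpTrigger;
```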
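One gap remains. If no document matches the partition key and ID, the function above hands back no person and gives the caller no clear signal about why. The sketch below returns 404 in that case. It assumes the Cosmos DB input binding leaves personIn null or undefined when the lookup finds nothing. FakeContext is a hypothetical stand-in for the real Context type that includes only what is used here, and readPerson is our name, not the template’s.

```typescript
interface Person {
  id: string;
  email: string;
  partitionKey: string;
  firstName: string;
  lastName: string;
}

// Hypothetical stand-in for the parts of the Functions Context used here.
interface FakeContext {
  bindings: { personIn?: Person | null };
  res?: { status: number; body: unknown };
}

// Assumption: personIn is null/undefined when no document matches.
function readPerson(context: FakeContext): void {
  const person = context.bindings.personIn;
  if (person == null) {
    context.res = { status: 404, body: { error: "person not found" } };
    return;
  }
  context.res = { status: 200, body: person };
}
```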

So, without writing any Cosmos DB code, we were able to insert documents into the database and read them back.

Fixing Our Release

Unfortunately, this change broke our release. If you push your changes, you’ll see that everything is released and seems to work, until you actually make a request to the URL.

This is because the local.settings.json file isn’t in source control, but that’s where we added the connection string. To confirm this is really your problem, go to your Function App in the Azure portal, open Configuration, and add an application setting named "CosmosDBConnString" with your connection string as the value. The app will restart, and it should work again.

We can make this fix part of our release. In your Azure DevOps release pipeline, open the Deploy Azure Function App task, find App settings, and add -CosmosDBConnString $(CosmosDBConnString).

Now, add a CosmosDBConnString variable, set its value, set its scope to Development, and make it a secret by clicking the lock icon. The release will add the connection string to your Function App, and no one will be able to read the value back from the pipeline (they can only set a new value). Confirm it works by creating a new release.
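App settings reach your code as environment variables, whether they come from local.settings.json on your machine or from the Function App’s configuration in Azure. The bindings read CosmosDBConnString on their own, but a fail-fast guard like the sketch below could make a missing setting show up as an explicit message in your own logs. requireSetting is a hypothetical helper, and the env parameter exists only so it can be tested without touching process.env.

```typescript
// Read a required app setting from the environment. App settings are exposed
// to functions as environment variables; `env` is injectable for testing.
function requireSetting(name: string, env: Record<string, string | undefined> = process.env): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(
      `App setting "${name}" is missing. Add it to local.settings.json (locally) ` +
        `or to the Function App's configuration (in Azure).`
    );
  }
  return value;
}

// Usage at the top of a function, for example:
// const connString = requireSetting("CosmosDBConnString");
```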

Deploying Infrastructure

Everything works fine now. If you make changes to your TypeScript and push them to your repository, they are automatically built, tested, and deployed. However, if we want to create another environment, we have to create a Function App and a Cosmos DB account manually, which is time-consuming and error-prone.

We can create resources in Azure using Azure Resource Manager templates (better known as ARM templates) or with scripts, such as Azure CLI commands run from PowerShell, PowerShell Core, Batch, or Shell. Add a new task to your release pipeline, search for the Azure CLI task, and place it before the Azure Functions task. Set the Azure connection, choose the Shell script type, and set the script location to Inline script.

We can now add Azure CLI commands to our release. You may want to keep these scripts in your repository and reference them instead, but for simplicity we’re using inline scripts.

Let’s start by creating a resource group, then we can add the Function App, which also needs a storage account.

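```bash
# $(...) values are Azure DevOps pipeline variables, substituted before the script runs.

# Resource group that holds everything for this environment
az group create --name $(ResourceGroupName) --location $(Location)

# Storage account required by the Function App
az storage account create --resource-group $(ResourceGroupName) --name $(StorageAccountName) --location $(Location) --sku Standard_LRS

# Function App on a Linux consumption plan running Node.js
az functionapp create --resource-group $(ResourceGroupName) --name $(AppServiceName) --storage-account $(StorageAccountName) --consumption-plan-location $(Location) --functions-version 3 --os-type Linux --runtime node
```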

Now, go into Variables and add ResourceGroupName, Location, and StorageAccountName, plus AppServiceName (your Function App’s name) if you don’t have it yet. Set Location to "westeurope", "westus", or whichever region is close to you.

You can use the name of your existing resource group for ResourceGroupName or pick a new one, preferably ending with "-dev" so you know this is your development environment. If you use a new resource group name, you must also use new names for your Function App and your storage account, because both names must be globally unique.

The storage account name must be unique across all of Azure, must be 3 to 24 characters long, and can only contain lowercase letters and numbers. This is a strict naming policy, but that’s how Azure works.

Make sure all variables except Location are scoped to Development. Once you’ve filled in the values, save, create a new release, and check that it completes without errors. This deployment may take a few minutes because it creates new resources in your Azure subscription.
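Given these rules, it can help to derive the storage account name from a name you already have, such as the resource group name, and check its format before the pipeline runs. The sketch below shows one assumed approach. It lowercases the input, drops anything that isn’t a letter or digit, and truncates to 24 characters. Global uniqueness can only be checked against Azure itself (for example, with az storage account check-name), so this catches format errors only. toStorageAccountName is a hypothetical helper.

```typescript
// Storage account names: 3 to 24 characters, lowercase letters and digits only.
const STORAGE_NAME = /^[a-z0-9]{3,24}$/;

function isValidStorageAccountName(name: string): boolean {
  return STORAGE_NAME.test(name);
}

// Hypothetical helper: turn e.g. "My-Function-RG-dev" into a valid candidate.
function toStorageAccountName(base: string): string {
  const candidate = base.toLowerCase().replace(/[^a-z0-9]/g, "").slice(0, 24);
  if (!isValidStorageAccountName(candidate)) {
    throw new Error(`Cannot derive a valid storage account name from "${base}"`);
  }
  return candidate;
}

console.log(toStorageAccountName("My-Function-RG-dev")); // myfunctionrgdev
```

Truncation can cut off an environment suffix such as "dev" or "test", which would give two environments the same name, so keep base names short.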

Deploying Cosmos DB

If all goes well, add another Azure CLI task to your pipeline and place it after your Azure Functions task. In this task, we’ll create the Cosmos DB account, read its connection string, and place it in the Function App’s configuration. If we kept adding the connection string manually, every new environment would need a release, then a manual lookup and entry of the connection string, and finally a second release. We want it all to work in one go.

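```bash
# Create the Cosmos DB account (the SQL API is the default) in a single region
az cosmosdb create --resource-group $(ResourceGroupName) --name $(CosmosDBAccountName) --locations regionName=$(Location) failoverPriority=0 isZoneRedundant=False

# Read the primary connection string into a shell variable
CONN_STRING=$(az cosmosdb keys list --name $(CosmosDBAccountName) --resource-group $(ResourceGroupName) --type connection-strings --query "connectionStrings[0].connectionString" -o tsv)

# Store it as an app setting on the Function App.
# --output none keeps the command from printing the settings (including the secret) to the pipeline log.
az functionapp config appsettings set --resource-group $(ResourceGroupName) --name $(AppServiceName) --settings "CosmosDBConnString=$CONN_STRING" --output none
```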

The script creates the Cosmos DB account, queries its connection string and stores it in a shell variable, and finally adds CosmosDBConnString to the Function App’s configuration. Add a CosmosDBAccountName variable, scoped to Development, for the account name. Because the script handles all of this, we can deploy whatever we want, change names, change environments, and more, and the Function App will always get the right connection string.
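The --query "connectionStrings[0].connectionString" argument is a JMESPath expression that the Azure CLI applies to the command’s JSON output. The TypeScript below mirrors that step and then splits the resulting string into its AccountEndpoint and AccountKey parts, which can help when debugging a bad setting. The sample JSON is a made-up, abbreviated example of the output shape we expect, with a fake key, and both function names are hypothetical.

```typescript
interface KeysListOutput {
  connectionStrings: { connectionString: string; description?: string }[];
}

// Equivalent of --query "connectionStrings[0].connectionString".
function firstConnectionString(json: string): string {
  const parsed = JSON.parse(json) as KeysListOutput;
  const first = parsed.connectionStrings?.[0]?.connectionString;
  if (!first) {
    throw new Error("No connection strings in az output");
  }
  return first;
}

// Split "Key=Value;Key=Value;" into a map. Each pair is split at the first "="
// because base64 account keys can end in "=" padding.
function parseConnectionString(conn: string): Record<string, string> {
  const parts: Record<string, string> = {};
  for (const pair of conn.split(";")) {
    if (pair.trim() === "") continue;
    const eq = pair.indexOf("=");
    if (eq <= 0) {
      throw new Error(`Malformed connection string segment: "${pair}"`);
    }
    parts[pair.slice(0, eq)] = pair.slice(eq + 1);
  }
  return parts;
}

const sample = JSON.stringify({
  connectionStrings: [
    {
      connectionString: "AccountEndpoint=https://mycosmos.documents.azure.com:443/;AccountKey=ZmFrZWtleQ==;",
      description: "Primary SQL Connection String",
    },
  ],
});
const conn = parseConnectionString(firstConnectionString(sample));
// Log the endpoint only; never print AccountKey in a real pipeline.
console.log(conn.AccountEndpoint); // https://mycosmos.documents.azure.com:443/
```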

You can now remove the connection string from your Azure Functions task and from your variables.

Deploying DTAP

Now, go to your pipeline overview, where you see artifacts and stages. Hover over your Development stage and click the Clone button that appears. This creates the stage "Copy of Development", which you can rename to "Test". The line between the two stages means Test will be deployed after Development. Click the lightning bolt icon on the Test stage and change the trigger to Manual only. This prevents your code from going to your Test environment automatically after every push to your repository. You can also set pre-deployment approvals if you want. The manual trigger is useful if you push often but don’t want to disrupt testers while they’re testing.

The nice part is that your entire Development stage was copied, including its variables, and the copied variables are scoped to Test. This means you only have to change those variables to deploy a completely new environment!

Since we didn’t hard-code any names in the scripts, we don’t even have to look at them. In your variables, just enter new values for the variables scoped to Test. Only Location stays the same, because it’s scoped to the entire release.

Once you’ve set the variables, save, create a new release, deploy the Test stage, and go to the Azure portal to confirm everything worked. Try calling your Azure Functions with Postman to insert and read a person.
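Cloning works because of how release variables resolve. A variable scoped to a stage is used when that stage runs, and a release-scoped variable such as Location fills in anything the stage doesn’t define. The model below is a simplified sketch of that lookup with made-up example values, and it is not Azure DevOps code. It shows why the scripts need no changes, since the same $(ResourceGroupName) expands differently per stage. In this model, a bash command substitution like $(az cosmosdb keys list ...) is left alone because it isn’t a variable name.

```typescript
type Scope = "Release" | "Development" | "Test" | "Acceptance" | "Production";

interface PipelineVariable {
  name: string;
  value: string;
  scope: Scope;
}

// Resolve the variables visible to one stage: release-scoped values first,
// then stage-scoped values, which win on name clashes.
function resolveForStage(vars: PipelineVariable[], stage: Exclude<Scope, "Release">): Map<string, string> {
  const resolved = new Map<string, string>();
  for (const v of vars) {
    if (v.scope === "Release") resolved.set(v.name, v.value);
  }
  for (const v of vars) {
    if (v.scope === stage) resolved.set(v.name, v.value);
  }
  return resolved;
}

// Replace $(Name) macros; names that aren't defined are left untouched.
function expandMacros(script: string, vars: Map<string, string>): string {
  return script.replace(/\$\(([A-Za-z0-9_.]+)\)/g, (match: string, name: string) => vars.get(name) ?? match);
}

const variables: PipelineVariable[] = [
  { name: "Location", value: "westeurope", scope: "Release" },
  { name: "ResourceGroupName", value: "people-api-dev", scope: "Development" },
  { name: "ResourceGroupName", value: "people-api-test", scope: "Test" },
];

const script = "az group create --name $(ResourceGroupName) --location $(Location)";
console.log(expandMacros(script, resolveForStage(variables, "Test")));
// az group create --name people-api-test --location westeurope
```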

Next Steps

In this article, we added Cosmos DB input and output bindings to our Azure Functions. You can, of course, still connect to any database the way you always have, using an npm package. By adding our infrastructure to the release pipeline from the previous article, we were able to spin up a new environment quickly and easily, with no manual intervention and none of the mistakes that creep in when resources are configured by hand.

As you learned in this series, with a little setup, you can automate tasks you used to perform manually. This frees your time to focus on more important projects, like creating new features to wow your customers, while easily scaling as needed to support your growing customer base.

Congratulations! After completing this tutorial, you are now officially cloud native. If you're ready to learn more, check out the intermediate cloud native series that builds upon the skills you've developed here.

To learn more about working with Kubernetes on Azure, explore The Kubernetes Bundle | Microsoft Azure and Hands-On Kubernetes on Azure | Microsoft Azure.