Using both serverless functions and containers in a single cloud-native app is a common use case, so this article shows how that looks in a real application, with skills you can use right away. We also discuss the limits of the serverless functions we've been using thus far.

In a previous article in this series, we created a simple microservice inside an Azure Function. Let’s expand on that concept a little further and construct a microservices-based back-end application using multiple Azure Functions.

We touch a little bit on how to approach our application design, some components we can use, how to build our Functions in TypeScript, and how to deploy Functions with Azure DevOps. By the end of this article, you will have many APIs you can use in an application, all powered by Azure Functions.

Background

For this example, we build out a simple task management application in TypeScript. This includes creating, updating, completing, and deleting tasks, as well as tracking what stage the task is in (Not Started, Doing, or Done).
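To make the task model concrete, here is a minimal sketch of how a task and its stage could be typed. The stage names come from the description above; the `Task` interface, the `nextStage` table, and the `advance` helper are hypothetical names used only for illustration (the Functions later in this article store a simpler `completed` flag instead).

```typescript
// Stage names are taken from the article; everything else here is an assumption.
type TaskStage = "Not Started" | "Doing" | "Done";

interface Task {
  username: string; // owner of the task
  taskID: string;   // unique identifier of the task
  name: string;
  dueDate: Date;
  stage: TaskStage;
}

// Assumed rule for illustration: a task only moves forward, one stage at a time.
const nextStage: Record<TaskStage, TaskStage | null> = {
  "Not Started": "Doing",
  "Doing": "Done",
  "Done": null,
};

// Returns a copy of the task in its next stage, or the task itself if it is already Done.
function advance(task: Task): Task {
  const next = nextStage[task.stage];
  return next === null ? task : { ...task, stage: next };
}
```

Keeping the stages in a union type means the compiler rejects any stage name outside the three the application tracks.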

We won’t worry about the front-end application itself. We just design and deliver the back-end APIs for use within any front-end application. We also won’t be too concerned with implementing user-based security or roles.

We store our code in Azure DevOps and use an automated pipeline to build and deploy our Azure Functions directly to our Azure Tenant.

Design

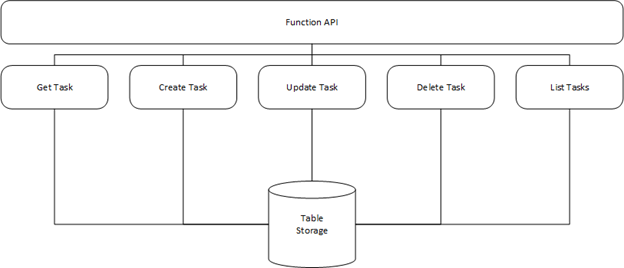

The basic rule of thumb for our application design is one instruction per Function. For our example application, the instructions we develop are:

- Get Task – Retrieves an individual task

- List Tasks – Lists all tasks associated with a user

- Create Task – Creates a new task for the user

- Update Task – Updates an existing task for the user

- Delete Task – Removes the task

This covers all the components of a basic task management application. Once we scope out the basic functionality, we can look at a couple of extra components, such as API management. We won’t implement them, just to keep it simple.
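The one-instruction-per-Function rule can be sketched as a small table that maps each operation to the fields its request must carry. This is only an illustration: the Function names and the required fields for the list, update, and delete operations are assumptions, and `missingFields` is a hypothetical helper rather than part of any Azure library.

```typescript
// One entry per Function; names and field lists are assumptions for illustration.
type Operation = "GetTask" | "ListTasks" | "CreateTask" | "UpdateTask" | "DeleteTask";

const requiredFields: Record<Operation, readonly string[]> = {
  GetTask: ["username", "taskID"],
  ListTasks: ["username"],
  CreateTask: ["username", "name"],
  UpdateTask: ["username", "taskID"],
  DeleteTask: ["username", "taskID"],
};

// Returns the names of required fields that are absent or empty in a request body.
function missingFields(op: Operation, body: unknown): string[] {
  const record = (typeof body === "object" && body !== null ? body : {}) as Record<string, unknown>;
  return requiredFields[op].filter((field) => {
    const value = record[field];
    return value === undefined || value === null || value === "";
  });
}
```

A handler could call `missingFields("GetTask", req.body)` and return a 400 response when the result is not empty.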

With that in mind, the following is a high-level view of the components we will deliver.

Setting up the Project

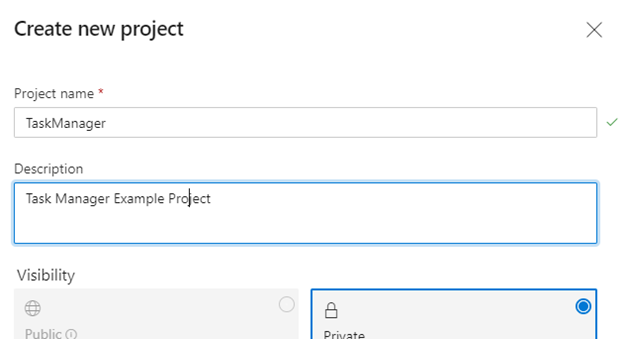

Let’s set up our project in Azure DevOps using Visual Studio Code first. You should already have an Azure DevOps account and Visual Studio Code. First, open Azure DevOps and log in. Create a new project with a unique name (TaskManager, for example), accept the defaults, and click Create.

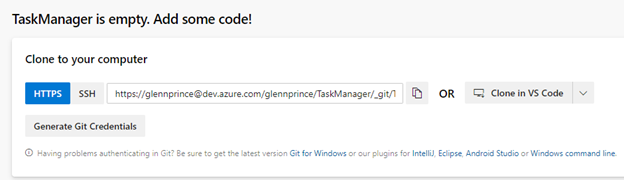

Once your project is created, the option to Clone in VS Code is under Repos > Files. This uses Git to download your project and open the result in VS Code.

When this is complete and your blank project is open, install the Azure Functions extension from Microsoft if you haven't already (search for it in the VS Code extensions view). After you install it, an Azure icon appears on the toolbar on the left, enabling you to create a new Functions project within your current workspace.

Finally, to run Functions locally, install the Azure Functions Core Tools and a local storage emulator. The Storage Emulator should be part of the Azure SDK; Azurite, which we use later in this article, is an alternative.

Saving Data

To begin, we create a new TypeScript Functions project in VS Code and the CreateTask Function. This Function should use an HTTP trigger and be scoped to Anonymous for the Authorization level. This base project has everything we need to deploy a single Azure Function, similar to our previous post. When we run this Function (using F5), we get a default return statement that triggers on GET and POST.

We will modify this function to enable us to create new tasks and store them in Azure Table storage. Before we do that, we need to configure the Function and some dependencies. Run the following npm install commands to install two additional libraries:

```shell
npm install azure-storage
npm install guid-typescript
```

We will also update our function's signature in the function.json file to accept only POST requests. Our function needs to take a task payload and create the task in our Table storage account. The code is:

```typescript
import { AzureFunction, Context, HttpRequest } from "@azure/functions";
import * as AzureStorage from "azure-storage";
import { Guid } from "guid-typescript";

const httpTrigger: AzureFunction = async function (context: Context, req: HttpRequest): Promise<void> {
    context.log('Create Task Triggered');

    // Reject requests with no body or no username before touching storage.
    if (!req.body || !req.body.username) {
        context.res.status(400).json({ error: "No Username Defined" });
        return;
    }

    // With no arguments, createTableService() reads AZURE_STORAGE_CONNECTION_STRING.
    const tableSvc = AzureStorage.createTableService();
    const tableName = "Tasks";
    const taskID = Guid.create().toString();
    const entGen = AzureStorage.TableUtilities.entityGenerator;

    const task = {
        PartitionKey: entGen.String(req.body.username),
        RowKey: entGen.String(taskID),
        name: entGen.String(req.body.name),
        // Date.UTC counts months from 0, so convert the 1-based month in the payload.
        dueDate: entGen.DateTime(new Date(Date.UTC(
            Number(req.body.dueYear), Number(req.body.dueMonth) - 1, Number(req.body.dueDay)))),
        completed: entGen.String(req.body.completed)
    };

    try {
        const value = await apiFunctionWrapper(tableSvc, tableName, task);
        // Return the generated ID; the request body does not contain one.
        context.res.status(201).json({ taskId: taskID, result: value });
    } catch (error) {
        context.log(error);
        context.res.status(500).json({ taskId: taskID, error: error });
    }
};

export default httpTrigger;

// Wraps the callback-based insertEntity call in a Promise so it can be awaited.
function apiFunctionWrapper(tableSvc: AzureStorage.TableService, tableName: string, task: object) {
    return new Promise<any>((res, err) => {
        tableSvc.insertEntity(tableName, task, function (error, result) {
            if (!error) {
                return res(result);
            } else {
                return err(error);
            }
        });
    });
}
```

This function now accepts a payload containing the task information in the body of a POST request, then creates the task in Table storage.
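One detail worth checking in any handler that builds a date from separate year, month, and day fields is that JavaScript's `Date.UTC` counts months from zero, so a payload month of "04" must be passed as 3 to mean April. The sketch below shows one way to do the conversion; `toDueDate` is a hypothetical helper name, and the range checks are deliberately simple (they do not reject dates such as February 31).

```typescript
// Hypothetical helper: converts 1-based date parts from a payload into a UTC Date.
function toDueDate(year: string | number, month: string | number, day: string | number): Date {
  const y = Number(year);
  const m = Number(month);
  const d = Number(day);
  const allIntegers = [y, m, d].every((n) => Number.isInteger(n));
  if (!allIntegers || m < 1 || m > 12 || d < 1 || d > 31) {
    throw new RangeError(`Invalid due date: ${year}-${month}-${day}`);
  }
  // Date.UTC expects a 0-based month, so subtract 1 from the payload value.
  return new Date(Date.UTC(y, m - 1, d));
}

// toDueDate("2021", "04", "01").toISOString() is "2021-04-01T00:00:00.000Z",
// whereas new Date(Date.UTC(2021, 4, 1)) would represent 1 May 2021.
```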

The final step is to provide the connection string for our table storage account. To ensure this function works, we use the Azurite emulator and specify the environment variable AZURE_STORAGE_CONNECTION_STRING in the local.settings.json file.
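When running locally, entries under `Values` in local.settings.json are exposed to the Function as environment variables, and `UseDevelopmentStorage=true` is the shorthand connection string for a local emulator. A missing setting otherwise only surfaces when the first storage call fails, so a small guard can make the problem obvious earlier. The following is a sketch only; `requireSetting` is a hypothetical helper, and the environment is passed in as a parameter so the example stays self-contained.

```typescript
// Hypothetical helper: reads a required setting and fails fast with a clear message.
function requireSetting(name: string, env: Record<string, string | undefined>): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing application setting: ${name}`);
  }
  return value;
}

// Inside a Function, the environment would typically be process.env:
//   const connection = requireSetting("AZURE_STORAGE_CONNECTION_STRING", process.env);
```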

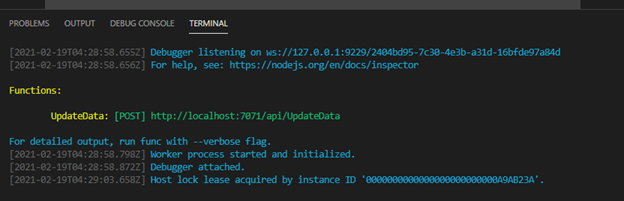

Now, when we run our Function in VS Code (using F5), it starts up and listens on http://localhost:7071/api/CreateTask for a task.

We can test our function, using a tool like Postman, by sending a JSON payload like the following:

```json
{
    "username": "testuser",
    "name": "Finish Function Example",
    "dueYear": "2021",
    "dueMonth": "04",
    "dueDay": "01",
    "completed": "0"
}
```
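If you prefer a script to a GUI tool, the same request can be sketched in TypeScript. This assumes the route matches the Function name (CreateTask); substitute whatever URL your local host prints at startup. `buildCreateTaskRequest`, `createTask`, and the `FetchLike` type are hypothetical names, and the fetch function is injected so the sketch does not depend on a particular runtime (the global `fetch` in Node.js 18 or later should fit).

```typescript
interface CreateTaskPayload {
  username: string;
  name: string;
  dueYear: string;
  dueMonth: string;
  dueDay: string;
  completed: string;
}

// Minimal shape of the fetch function this sketch needs (an assumption, not a full fetch type).
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ status: number; json(): Promise<unknown> }>;

// Builds the URL and request options without sending anything.
function buildCreateTaskRequest(baseUrl: string, payload: CreateTaskPayload) {
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/api/CreateTask`, // assumed route
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(payload),
    },
  };
}

// Sends the request and returns the parsed JSON body on a 201 response.
async function createTask(fetchFn: FetchLike, baseUrl: string, payload: CreateTaskPayload): Promise<unknown> {
  const { url, init } = buildCreateTaskRequest(baseUrl, payload);
  const response = await fetchFn(url, init);
  if (response.status !== 201) {
    throw new Error(`Unexpected status ${response.status}`);
  }
  return response.json();
}

// Example call: createTask(fetch, "http://localhost:7071", payload)
```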

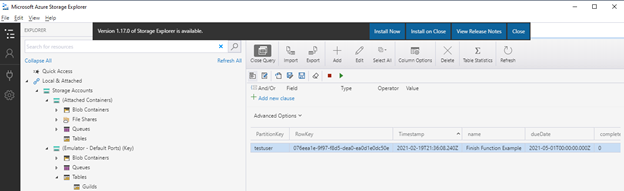

Now when we look at our local storage instance, we see a task entity for our user added to the table.

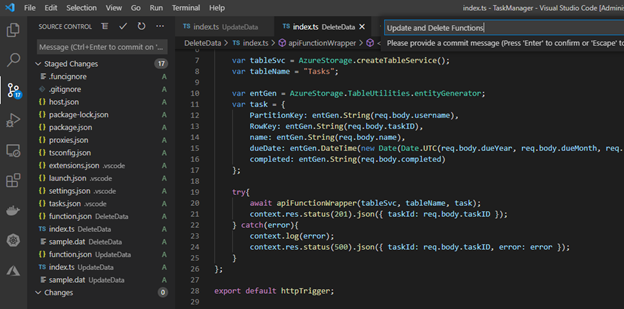

Before we move on to the next Function, let's commit our changes to Azure DevOps so they are saved. Switch to the Source Control section, stage and commit your changes, then push them to Azure DevOps.

Get Functions

Now that we can save data in a data store, let's create a function enabling us to retrieve task data. We create a GetTask function to retrieve a task based on the username and taskID we pass it. First, create a new Function using an HTTP Trigger with the code below:

```typescript
import { AzureFunction, Context, HttpRequest } from "@azure/functions";
import * as AzureStorage from "azure-storage";

const httpTrigger: AzureFunction = async function (context: Context, req: HttpRequest): Promise<void> {
    context.log('Get Task Triggered');

    // Both identifiers are read from the JSON request body.
    if (!req.body || !req.body.username) {
        context.res.status(400).json({ error: "No Username Defined" });
        return;
    }

    if (!req.body.taskID) {
        context.res.status(400).json({ error: "No Task ID Defined" });
        return;
    }

    const tableSvc = AzureStorage.createTableService();
    const tableName = "Tasks";

    try {
        const value = await apiFunctionWrapper(tableSvc, tableName,
            req.body.username, req.body.taskID);
        // Each stored property is wrapped as { _: value }; unwrap it for the response.
        context.res.status(200).json({
            username: value["PartitionKey"]._,
            taskID: value["RowKey"]._,
            name: value["name"]._,
            dueDate: value["dueDate"]._,
            completed: value["completed"]._
        });
    } catch (error) {
        context.log(error);
        context.res.status(500).json({ taskId: req.body.taskID,
            error: "TaskID does not exist for user" });
    }
};

export default httpTrigger;

// Wraps the callback-based retrieveEntity call in a Promise so it can be awaited.
function apiFunctionWrapper(tableSvc: AzureStorage.TableService, tableName: string,
                            username: string, taskID: string) {
    return new Promise<any>((res, err) => {
        tableSvc.retrieveEntity(tableName, username, taskID,
            function (error, result) {
                if (!error) {
                    return res(result);
                } else {
                    return err(error);
                }
            });
    });
}
```

This function follows a similar pattern to the previous function, except this time it uses the retrieveEntity method to return a single task and formats the JSON output to be more readable. This is one of the big benefits of producing microservices this way: the individual components are easy to read and implement as they only do one task.
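Because both functions repeat the same two steps (wrap a callback-style storage call in a Promise, then unwrap the `{ _: value }` properties), one option is to factor them into shared helpers. The sketch below is an illustration under assumptions: `fromCallback` and `flattenEntity` are hypothetical names, and the entity shape is inferred from the `value["name"]._` accesses in the code above.

```typescript
// A Node-style callback: an error first, then an optional result.
type NodeCallback<T> = (error: unknown, result?: T) => void;

// Turns any function that reports through a Node-style callback into a Promise.
function fromCallback<T>(start: (callback: NodeCallback<T>) => void): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    start((error, result) => {
      if (error) {
        reject(error);
      } else {
        resolve(result as T);
      }
    });
  });
}

// Copies every property wrapped as { _: value } into a plain object,
// skipping properties without that wrapper (such as entity metadata).
function flattenEntity(entity: Record<string, unknown>): Record<string, unknown> {
  const flat: Record<string, unknown> = {};
  for (const key of Object.keys(entity)) {
    const wrapped = entity[key];
    if (typeof wrapped === "object" && wrapped !== null && "_" in wrapped) {
      flat[key] = (wrapped as { _: unknown })._;
    }
  }
  return flat;
}

// Possible use inside GetTask (not executed here):
//   const entity = await fromCallback<Record<string, unknown>>((cb) =>
//     tableSvc.retrieveEntity(tableName, username, taskID, cb));
//   context.res.status(200).json(flattenEntity(entity));
```

Note that `flattenEntity` keeps the storage property names (`PartitionKey`, `RowKey`), so a handler that wants `username` and `taskID` in its response would still rename those two keys.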

The other functions that make up our application are pretty similar (you can find the code in the GitHub repository), so let’s move on to deploying our Functions to Azure.

Deploying Functions

Once we have all our functions up and running locally, we can deploy them to Azure. We have a few options (such as Azure Pipelines), but to start, let’s deploy directly from VS Code. Before we do, we need to ensure we have created and configured our Function App and storage.

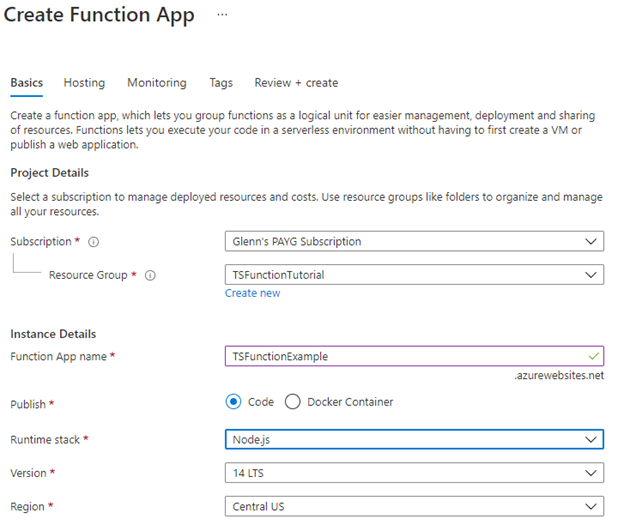

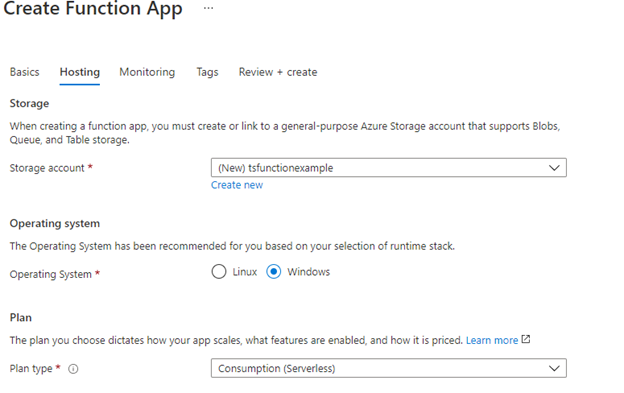

Open your Azure portal, open or create a resource group, and add a new resource. Find Function App and start the creation wizard. Choose an appropriate name and ensure Node.js is selected as the runtime stack.

Click Next, specify a new storage account, and name it. We are reusing the storage account required for Azure Functions, but you may want to create a different storage account to hold just data in most cases.

Click Review and create to create the Function App.

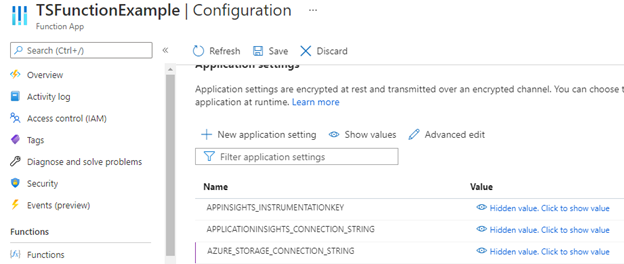

Once the Function application and its resources are created, we need to specify the AZURE_STORAGE_CONNECTION_STRING property like we did locally.

Navigate to the storage account, and under Access Keys, copy the Connection String. Go back to our Function App and under Configuration create a new Application Setting with the name AZURE_STORAGE_CONNECTION_STRING and the connection string we just copied.

When we return to VS Code and click on the Azure extension, we have the option to log into our Azure account. Go through the login steps, and when you return to VS Code, it should list the Azure instances available to your account.

Above this, there is a small, blue, up-arrow icon that enables you to "Deploy to Function App".

Click this icon, select your Azure instance, then select the Function App we just created. This starts the deployment process.

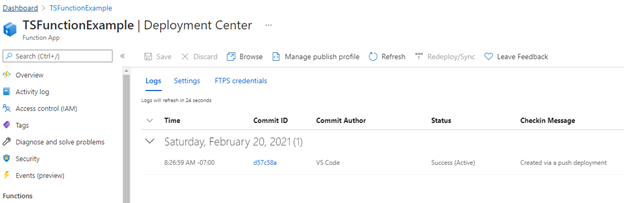

Once complete, if you switch back to your Function App and click on the Deployment Center option, you should see your code deployed. We can now test our API using the publicly available URL.

Next Steps

In this article we took the concept of creating a single function application and built it out to a complete set of Functions for a simple task management application. It’s easy to write event-driven application components in Azure Functions and deploy them quickly.

However, you may run into some challenges that require additional components or alternative solutions. In particular, long-running or intensive processes require more traditional applications that run all the time. Also, some libraries require specific versions or make functions too large to run efficiently. To solve these challenges, mix containerized applications with Functions to create a fully functional, cloud-based application.

In the next few articles, we’ll discuss containerization and how to deploy clusters and containers alongside our application code.