
In the previous article, we looked at how to expand our cloud native application to the Azure Kubernetes Service (AKS) and have Azure DevOps automatically build and deploy both the application and its infrastructure at the same time.

In this article, we take that one step further by building a containerized Node.js application component. We start by building a simple Node.js front end for our Functions, then use Docker to containerize the application and put it into our own private container registry. Finally, we add the container to a pod in our Kubernetes cluster.

By the end of this article, we will have application components for both serverless Azure Functions and more traditional Node.js applications bundled into one code repository and deployed with Azure DevOps.

Building a Node.js Application

Before we begin working with Docker to build a container, let's build a simple Node.js front-end application using the Express.js framework. In this project, we build a single page that retrieves the list of tasks from our Functions, but you can always expand this further.

First, we create a new folder in our codebase called NodeServer. In this folder, we run the following commands to set up our application:

```shell
npm init
npm install express
npm install axios
```

These three commands initialize our application and install the two packages we need: Express, to host web pages, and Axios, to retrieve data from our API. Once this is finished, create a new file called app.js and enter the following code:

```javascript
const express = require('express');
const axios = require('axios').default;

const app = express();
const port = 3001;

app.listen(port, () => {
  console.log(`Example app listening at http://localhost:${port}`);
});

app.get('/', async function (req, res) {
  let returnPage = '<html><body><h1>Task List</h1>';
  try {
    // Call the ListTasks Function; it expects the username in the request body
    const response = await axios({
      method: 'get',
      url: 'https://tsfunctionexample.azurewebsites.net/api/ListTasks',
      data: { username: 'testuser' }
    });

    if (response.status === 200) {
      returnPage += '<table style="width:100%"><tr><th>Username</th><th>Task ID</th><th>Task Name</th><th>Due Date</th></tr>';
      // One table row per task returned by the Function
      response.data.results.forEach(element => {
        returnPage += '<tr><td>' + element.username + '</td><td>' + element.taskID + '</td><td>' + element.name + '</td><td>' + element.dueDate + '</td></tr>';
      });
      returnPage += '</table>';
    } else {
      returnPage += '<p>Error - No Tasks Found</p>';
    }
    console.log(response.data);
  } catch (error) {
    // Network failures and non-2xx responses (which Axios rejects by default) end up here
    console.error(error);
    returnPage += '<p>Error - Could not retrieve tasks</p>';
  }
  returnPage += '</body></html>';
  // Always answer the browser, even when the Function call failed
  res.send(returnPage);
});
```

Stepping through this code briefly: first we load Express and start it listening on port 3001. We then use the .get() function to handle GET requests for the root (home page) path. When one arrives, we use Axios to call our ListTasks API (ours is hosted at https://tsfunctionexample.azurewebsites.net/api/ListTasks), sending the username 'testuser' from earlier as the body payload.

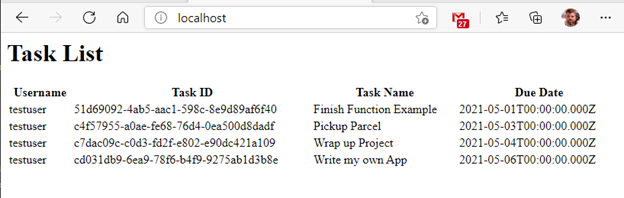

When the response returns, we iterate through all the results in the list, format them into a simple HTML table, and return the page. If we save this file, run it with the command node app.js, and open http://localhost:3001 in a browser, we see the list of tasks returned from our Functions.
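The route handler pastes task fields straight into the HTML, so a task name containing `<` or `&` would break the table or inject markup. A minimal sketch, assuming the same `results` shape used above (`username`, `taskID`, `name`, `dueDate`), moves the rendering into a pure function that escapes each value. `escapeHtml` and `renderTaskPage` are hypothetical helper names, not part of Express or Axios.

```javascript
// Hypothetical helper: escape the characters that are significant in HTML text.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Hypothetical helper: build the task list page from the Function's `results` array.
// Assumes each task has username, taskID, name and dueDate fields, as in app.js.
function renderTaskPage(results) {
  let page = '<html><body><h1>Task List</h1>';
  if (Array.isArray(results) && results.length > 0) {
    page += '<table style="width:100%"><tr><th>Username</th><th>Task ID</th><th>Task Name</th><th>Due Date</th></tr>';
    for (const task of results) {
      // Escape every cell before inserting it into the row
      const cells = [task.username, task.taskID, task.name, task.dueDate]
        .map(v => '<td>' + escapeHtml(v) + '</td>')
        .join('');
      page += '<tr>' + cells + '</tr>';
    }
    page += '</table>';
  } else {
    page += '<p>Error - No Tasks Found</p>';
  }
  return page + '</body></html>';
}
```

In the route handler, `res.send(renderTaskPage(response.data.results))` would then replace the inline string building, and the function can be tested without a network call.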

Note that if you have not used your Functions in a while, this page may take some time to load. Functions that have been idle need time to warm up (a "cold start") before they can respond to the first request.
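If the first request after an idle period fails while the Function app warms up, one option is to retry a few times before showing an error. The sketch below is a generic retry wrapper, not a feature of Axios or Azure Functions; `withRetry` and its `attempts` and `delayMs` options are hypothetical names, and the default values are placeholders to tune against your own cold-start times.

```javascript
// Hypothetical helper: call an async function, retrying on failure with a fixed delay.
async function withRetry(fn, { attempts = 3, delayMs = 2000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await fn(attempt);
    } catch (error) {
      lastError = error;
      console.warn(`Attempt ${attempt} of ${attempts} failed: ${error.message}`);
      if (attempt < attempts) {
        // Pause before the next attempt to give the Function app time to start
        await new Promise(resolve => setTimeout(resolve, delayMs));
      }
    }
  }
  // Every attempt failed: surface the last error to the caller
  throw lastError;
}
```

In app.js, the Axios call could be wrapped as `await withRetry(() => axios({ method: 'get', url: 'https://tsfunctionexample.azurewebsites.net/api/ListTasks', data: { username: 'testuser' }, timeout: 30000 }))`, where `timeout` is Axios's request timeout in milliseconds.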

From here, we could add routes to create, delete, and retrieve individual tasks; however, let's look at containerizing the application as it is.

Docker Containers

Docker enables you to package an application and all its dependencies into a container image. This includes a minimal operating system layer (usually Linux), runtime dependencies such as Node and npm, your application, and its required libraries.

An image is the blueprint: a packaged template built from the instructions in a Dockerfile. A container is a running instance of that image. Let's write a blueprint for our current application, build the image, and run a container locally to test it. Make sure you have Docker installed and running.

First, in the NodeServer folder, create a new file called Dockerfile to define our container blueprint. Open this file and add the following code:

```dockerfile
FROM node:14

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 3001

CMD [ "node", "app.js"]
```

Most Dockerfiles are fairly simple and follow a similar pattern. The first line defines the base image we build from, in this case the official Node.js version 14 image.

Next, WORKDIR sets the directory inside the image where our application code lives; the commands that follow run from there. Because the base image includes both Node and npm, we can install all our dependencies by copying in our existing package files and running npm install.

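Then we copy in our application code by copying the current local directory into the working directory. Copying the package files on their own first means Docker can reuse the cached npm install layer when only the application code changes.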

Finally, EXPOSE documents that the container listens on port 3001 (it does not publish the port by itself), and CMD runs Node with app.js as its parameter.
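One detail worth handling in app.js: with `CMD [ "node", "app.js"]`, Node runs as process ID 1 inside the container, and the kernel does not apply default signal actions to PID 1, so a `SIGTERM` from `docker stop` is ignored unless the app handles it; Docker then waits for its timeout (10 seconds by default) and kills the container. A minimal sketch, assuming you keep the `http.Server` that `app.listen()` returns, closes the server when the signal arrives. `installShutdownHandlers` is a hypothetical helper, and its `exit` parameter exists only so the behavior can be tested.

```javascript
// Hypothetical helper: close the HTTP server cleanly when the container is stopped.
// `exit` is injectable so the behavior can be tested without ending the process.
function installShutdownHandlers(server, exit = code => process.exit(code)) {
  const shutdown = signal => {
    console.log(`Received ${signal}, closing HTTP server`);
    // Stop accepting new connections; exit once existing connections have closed
    server.close(() => exit(0));
  };
  // docker stop sends SIGTERM; Ctrl+C in the terminal sends SIGINT
  process.once('SIGTERM', () => shutdown('SIGTERM'));
  process.once('SIGINT', () => shutdown('SIGINT'));
  return shutdown;
}
```

Because Express's `app.listen()` returns the underlying `http.Server`, usage in app.js would be `const server = app.listen(port, ...); installShutdownHandlers(server);`.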

Before we build and run our Docker image, we should also create an ignore file so our local node_modules folder and npm debug log aren't copied into the image. To do this, create a file called .dockerignore containing the following:

```text
node_modules
npm-debug.log
```

Once this is complete, we now build our Docker image using the command:

```shell
docker build -t <username>/node-server .
```

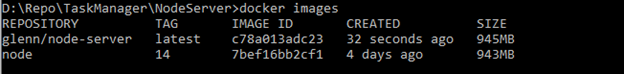

Docker works through each instruction in the Dockerfile, builds the image layers, and saves the image to its local image store. You can list all the images stored locally using the command docker images.

Once we have our Docker image in our local register, we now start a container to run it using the command:

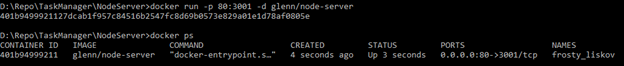

docker run -p 80:3001 <username>/node-server

This command starts a container from the image <username>/node-server, and the -p 80:3001 parameter maps port 80 on the host to port 3001 inside the container, so traffic sent to port 80 reaches our app. Because the command runs in the foreground, open a second terminal (or add -d to run the container in the background) and run docker ps; we should see our container running with the port mapping listed.

When we open a web browser to http://localhost, our task manager application should be displayed.
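To check the running container from a script rather than a browser, a small smoke test can request the page and confirm it returns HTTP 200 with the page heading. This sketch uses only Node's built-in `http` module; `checkTaskPage` is a hypothetical name, and its default URL assumes the container is published on port 80 as in the `docker run` command above.

```javascript
const http = require('http');

// Hypothetical smoke test: GET `url` and resolve with the status code and whether
// the body contains the "Task List" heading that app.js renders.
function checkTaskPage(url = 'http://localhost/') {
  return new Promise((resolve, reject) => {
    const req = http.get(url, res => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', chunk => { body += chunk; });
      res.on('end', () => resolve({
        status: res.statusCode,
        hasHeading: body.includes('<h1>Task List</h1>')
      }));
    });
    req.on('error', reject);
    // Allow for a slow first response while the Functions warm up
    req.setTimeout(60000, () => req.destroy(new Error('Request timed out')));
  });
}
```

Running `checkTaskPage().then(result => console.log(result))` against the local container should report status 200 and `hasHeading: true` once the Functions respond.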

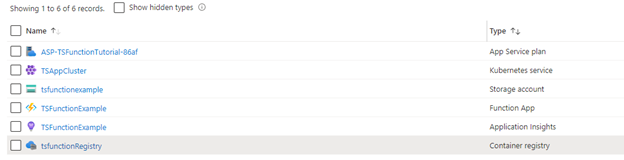

Using the Azure Container Registry

So, we have our application containerized with Docker, the image is in our local image store, and Docker can run the container locally. Now let's build the container image in our pipeline and store it in Azure Container Registry (ACR) so AKS can pull and host the container. First, we need to create a container registry: open the Azure portal, start Azure Cloud Shell, and run the following command:

```shell
az acr create --resource-group TSFunctionTutorial --name tsfunctionRegistry --sku Basic
```
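Registry names must be 5 to 50 alphanumeric characters, and a registry's login server is its name in lowercase followed by `.azurecr.io`. That is why the Kubernetes manifest later in this article refers to `tsfunctionregistry.azurecr.io` even though the registry is created as `tsfunctionRegistry`. A small sketch of that rule; `acrLoginServer` is a hypothetical helper that checks only the character and length rules, since global uniqueness can only be confirmed by Azure.

```javascript
// Hypothetical helper: validate an ACR name and derive its login server.
// Only the naming rule (5-50 alphanumeric characters) is checked here;
// Azure itself decides whether the name is still available.
function acrLoginServer(registryName) {
  if (!/^[A-Za-z0-9]{5,50}$/.test(registryName)) {
    throw new Error(`Invalid registry name: ${registryName}`);
  }
  // Login servers are always lowercase
  return `${registryName.toLowerCase()}.azurecr.io`;
}
```

For example, `acrLoginServer('tsfunctionRegistry')` returns `'tsfunctionregistry.azurecr.io'`, while a name containing a hyphen is rejected.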

Once our container registry exists, we can add another stage to our infrastructure pipeline to build and push our container image.

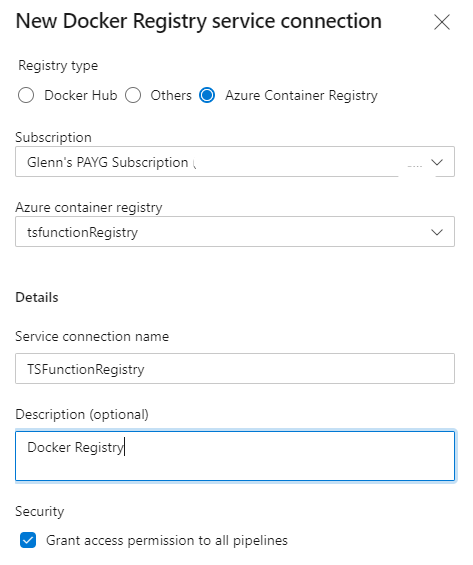

For the pipeline to access the registry, we create an additional service connection: open the project in Azure DevOps, select Project settings, and open Service connections.

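Click New service connection, select Docker Registry from the connection options, choose Azure Container Registry as the registry type, and select the registry from your subscription. Name the connection TSFunctionRegistry, because the pipeline refers to it by that name.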

With the service connection set up, we open our pipelines and edit the Task Manager pipeline we created in the previous article.

After the Deploy Infrastructure stage (the stage containing the Deploy AKS Cluster job), add a new stage using the following code:

```yaml
- stage: BuildContainer
  displayName: Build Container
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: Docker@2
      displayName: Build and push an image to container registry
      inputs:
        command: buildAndPush
        repository: tsfunctionnodeserver
        dockerfile: '**/Dockerfile'
        containerRegistry: TSFunctionRegistry
        # Docker@2's default tag, written out explicitly
        tags: '$(Build.BuildId)'
```

This stage has a single job with a single step, which uses the Docker@2 task to build and push our image. The key inputs are the name of our Docker image (repository), the location of our Dockerfile, and the name of the Docker Registry service connection we created (containerRegistry). By default, Docker@2 tags the image with the build ID, $(Build.BuildId). Save and commit the changes; the pipeline then runs to build and deploy the application components.
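Docker@2 pushes the image as `<login server>/<repository>:<tag>`, and an image reference with no tag is read as `:latest`. The sketch below splits a reference into those parts to make the defaults visible. `parseImageRef` is hypothetical; it follows the common convention that the first path component names a registry only if it contains `.` or `:` or is `localhost`, and it ignores digests (`@sha256:...`) and Docker Hub's implicit `library/` prefix.

```javascript
// Hypothetical parser for image references such as
// "tsfunctionregistry.azurecr.io/tsfunctionnodeserver:1234".
// Digests (@sha256:...) and Docker Hub's implicit "library/" prefix are not handled.
function parseImageRef(ref) {
  let registry = 'docker.io';
  let rest = ref;
  const slash = ref.indexOf('/');
  if (slash !== -1) {
    const first = ref.slice(0, slash);
    // By convention the first component names a registry only if it looks like a host
    if (first.includes('.') || first.includes(':') || first === 'localhost') {
      registry = first;
      rest = ref.slice(slash + 1);
    }
  }
  // A tag is whatever follows the last ':' in the final path component
  const lastSlash = rest.lastIndexOf('/');
  const colon = rest.lastIndexOf(':');
  if (colon > lastSlash) {
    return { registry, repository: rest.slice(0, colon), tag: rest.slice(colon + 1) };
  }
  // No tag given: Docker and Kubernetes both read this as :latest
  return { registry, repository: rest, tag: 'latest' };
}
```

For instance, `parseImageRef('tsfunctionregistry.azurecr.io/tsfunctionnodeserver')` reports the tag `latest`, whereas the pipeline pushes a build-ID tag, so a manifest that names the image without a tag only works if something supplies the pushed tag at deploy time.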

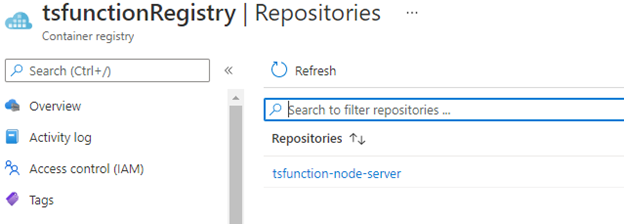

Once the run completes, go to the container registry in the Azure portal and open Repositories; we should see our tsfunctionnodeserver image waiting to be deployed.

Deploying to Kubernetes

Now that our Docker image is built and in the registry, we can deploy our container to the cluster.

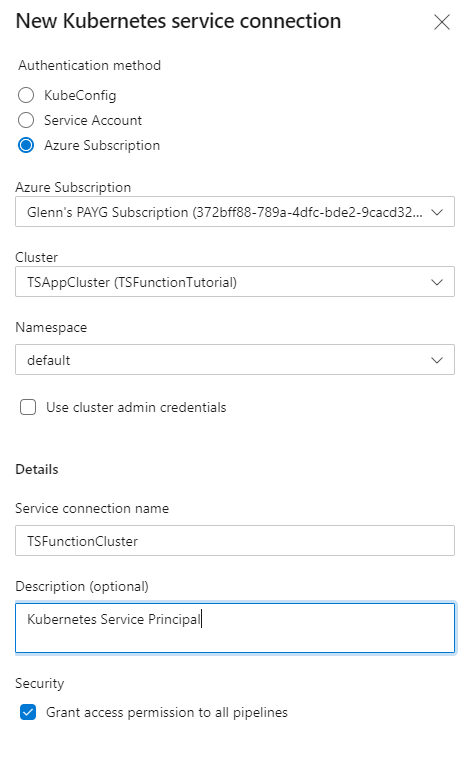

First, we need to create another service connection for our cluster.

Go to Project settings, then Service connections, and create a new Kubernetes service connection. Select the Azure Subscription option, then the AKS cluster and the default namespace. We name the service connection TSFunctionCluster; the pipeline refers to it later.

Now that we have the service connection, we need to create the manifest files that describe how to deploy our container.

At the root of the repository, create a new directory called manifests, and in it a new file called deployment.yaml with the following code:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tsfunctionnodeserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tsfunctionnodeserver
  template:
    metadata:
      labels:
        app: tsfunctionnodeserver
    spec:
      containers:
      - name: tsfunctionnodeserver
        image: tsfunctionregistry.azurecr.io/tsfunctionnodeserver
        ports:
        # app.js listens on port 3001
        - containerPort: 3001
```

This deploys a single replica of our container, with no limits on the resources it can consume, and declares port 3001, the port app.js listens on. Next, in the same directory, create a service.yaml file with the following code:
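A Deployment manages only the pods whose labels match its `spec.selector.matchLabels`, so the selector and the pod template labels have to agree, and a typo in one of them is easy to miss. One way to rule that out is to generate the manifest from a single set of values. The sketch below does this in JavaScript; `buildDeployment` and its parameters are hypothetical, the output is JSON (which `kubectl apply -f` accepts as well as YAML), and it is meant for generating or checking a manifest rather than replacing the deployment.yaml the pipeline uses. The optional `resources` field is where CPU and memory requests and limits would go if you want to restrict what the container can consume.

```javascript
// Hypothetical generator: build a Deployment manifest from one set of values so the
// selector and the pod template labels cannot drift apart.
function buildDeployment({ name, image, containerPort, replicas = 1, resources }) {
  const labels = { app: name };
  const container = { name, image, ports: [{ containerPort }] };
  if (resources) {
    // e.g. { requests: { cpu: '100m', memory: '128Mi' }, limits: { cpu: '250m', memory: '256Mi' } }
    container.resources = resources;
  }
  return {
    apiVersion: 'apps/v1',
    kind: 'Deployment',
    metadata: { name },
    spec: {
      replicas,
      selector: { matchLabels: { ...labels } },
      template: {
        metadata: { labels: { ...labels } },
        spec: { containers: [container] }
      }
    }
  };
}

// Print a manifest equivalent to deployment.yaml
console.log(JSON.stringify(buildDeployment({
  name: 'tsfunctionnodeserver',
  image: 'tsfunctionregistry.azurecr.io/tsfunctionnodeserver',
  containerPort: 3001
}), null, 2));
```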

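```yaml
apiVersion: v1
kind: Service
metadata:
  name: tsfunctionnodeserver
spec:
  type: LoadBalancer
  ports:
  - port: 80
    # Forward to the port app.js listens on inside the pod
    targetPort: 3001
  selector:
    app: tsfunctionnodeserver
```

This component adds networking to our container so we can reach it from outside the cluster. The LoadBalancer type asks Azure for a public IP address, and traffic to port 80 on that address is forwarded to port 3001 in the pod.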
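When a Service port omits `targetPort`, Kubernetes uses the same number as `port`, so traffic arriving on port 80 is sent to port 80 in the pod. Because app.js listens on 3001, the Service needs `targetPort: 3001` (or the app would have to listen on 80). `containerPort` is informational in Kubernetes, but it records where the app listens, so comparing it with `targetPort` catches this kind of mistake. The sketch below is a hypothetical pre-deployment check, `checkServiceTargets`, that takes a Service and a Deployment as plain objects (for example parsed from the YAML) and reports selector or port mismatches; it handles numeric target ports only, not named ones.

```javascript
// Hypothetical pre-deployment check: does the Service select the Deployment's pods,
// and does each Service port forward to a port the pod template declares?
function checkServiceTargets(service, deployment) {
  const problems = [];
  const podLabels = deployment.spec.template.metadata.labels || {};
  for (const [key, value] of Object.entries(service.spec.selector || {})) {
    if (podLabels[key] !== value) {
      problems.push(`selector ${key}=${value} does not match pod labels`);
    }
  }
  // Collect every containerPort declared in the pod template
  const declared = new Set();
  for (const container of deployment.spec.template.spec.containers) {
    for (const p of container.ports || []) declared.add(p.containerPort);
  }
  for (const p of service.spec.ports) {
    // Kubernetes defaults targetPort to the value of port when it is omitted
    const target = p.targetPort === undefined ? p.port : p.targetPort;
    if (!declared.has(target)) {
      problems.push(`service port ${p.port} targets ${target}, which no container declares`);
    }
  }
  return problems;
}
```

An empty array means the Service selects the pods and forwards to a declared port; the original service.yaml, which has no `targetPort`, would be reported as targeting port 80.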

We also need to add one more stage to our pipeline, with two tasks: one creates a Kubernetes secret that lets the cluster pull images from ACR, and the other deploys our manifests. Add the following code to your azure-pipelines.yaml file after the BuildContainer stage:

```yaml
- stage: DeployContainer
  displayName: Deploy Container
  jobs:
  - job: Deploy
    displayName: Deploy Container
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: KubernetesManifest@0
      inputs:
        action: 'createSecret'
        kubernetesServiceConnection: 'TSFunctionCluster'
        namespace: 'default'
        secretType: 'dockerRegistry'
        secretName: 'tsfunction-access'
        dockerRegistryEndpoint: 'TSFunctionRegistry'
    - task: KubernetesManifest@0
      inputs:
        action: 'deploy'
        kubernetesServiceConnection: 'TSFunctionCluster'
        namespace: 'default'
        manifests: |
          $(Build.SourcesDirectory)/manifests/deployment.yaml
          $(Build.SourcesDirectory)/manifests/service.yaml
        # Fully qualified image reference, including the tag Docker@2 pushed in this run
        containers: 'tsfunctionregistry.azurecr.io/tsfunctionnodeserver:$(Build.BuildId)'
        imagePullSecrets: 'tsfunction-access'
```

The first task in this stage creates the secret the cluster needs to pull our image from the registry. It uses the Docker Registry service connection for the registry credentials and the Kubernetes service connection to reach the cluster.

The second task applies the two manifest files to the cluster, substituting the image reference given in containers and adding the tsfunction-access secret to the Deployment's imagePullSecrets.
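Behind the scenes, a Docker registry secret such as the one the first task creates is typically stored with type `kubernetes.io/dockerconfigjson`. Its `.dockerconfigjson` value is a JSON document with one entry per registry under `auths`, where `auth` is the base64 encoding of `username:password`, and Kubernetes base64-encodes the whole document once more in the Secret's `data`. The sketch below builds that structure to show what the cluster receives; `dockerConfigJson` and `dockerRegistrySecret` are hypothetical helpers, and the credentials are placeholders, since in the pipeline they come from the TSFunctionRegistry service connection.

```javascript
// Hypothetical sketch of the .dockerconfigjson payload kept in a docker-registry secret.
// Credentials are placeholders; the pipeline takes them from the service connection.
function dockerConfigJson(server, username, password) {
  const auth = Buffer.from(`${username}:${password}`).toString('base64');
  return JSON.stringify({ auths: { [server]: { username, password, auth } } });
}

// A Kubernetes Secret stores each data value base64-encoded once more
function dockerRegistrySecret(name, server, username, password) {
  return {
    apiVersion: 'v1',
    kind: 'Secret',
    metadata: { name },
    type: 'kubernetes.io/dockerconfigjson',
    data: {
      '.dockerconfigjson': Buffer.from(dockerConfigJson(server, username, password)).toString('base64')
    }
  };
}
```

The pod spec then only names the secret (`imagePullSecrets: [{ name: 'tsfunction-access' }]`), so the credentials never appear in the manifests in your repository.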

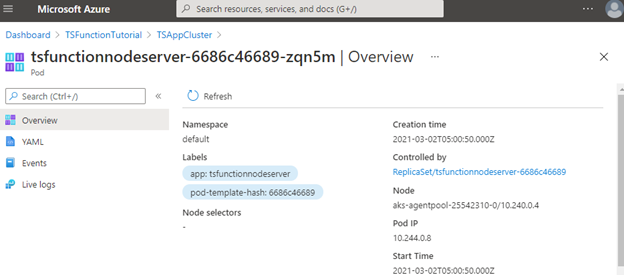

Save these files and check in your changes to run the pipeline. Once the pipeline has deployed, open the cluster in the Azure portal and select Workloads, then Pods; you should see a pod whose name starts with tsfunctionnodeserver. A pod's IP address is reachable only from inside the cluster, so to open the application, select Services and ingresses, find the tsfunctionnodeserver service, and copy its External IP. Browsing to that address should display your task management front-end application.
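Azure assigns the LoadBalancer's external IP a short while after the Service is created. From a terminal with cluster access, `kubectl get service tsfunctionnodeserver -o json` returns the Service object, and the address appears under `status.loadBalancer.ingress`. A sketch that extracts it from that JSON; `externalAddress` is a hypothetical helper, and the objects in the usage note are abbreviated illustrations, not real command output.

```javascript
// Hypothetical helper: pull the public address out of a Service object, as returned by
// `kubectl get service <name> -o json`. Returns null while the address is still pending.
function externalAddress(service) {
  const loadBalancer = service.status && service.status.loadBalancer;
  const ingress = (loadBalancer && loadBalancer.ingress) || [];
  if (ingress.length === 0) return null;
  // Azure load balancers report an IP; some other providers report a hostname instead
  return ingress[0].ip || ingress[0].hostname || null;
}
```

For an abbreviated object such as `{ status: { loadBalancer: { ingress: [{ ip: '<external-ip>' }] } } }` the helper returns the IP string, and while provisioning is still in progress (no `ingress` entries) it returns `null`, which a script can use to keep polling.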

Next Steps

Through this series, we built a simple task management application using a combination of serverless Azure Functions and containerized Node.js application components. All our code, infrastructure configuration, and pipeline configuration are stored together in the Git repository as part of Azure DevOps.

You can take this application further by building out the front end as a single-page application (SPA), adding security, and fully automating deployments. Having worked through this series and seen what Azure can do when teamed with other cloud tools, you can now construct and deploy your own unique serverless application.

If you're ready to do a deeper dive into building cloud native Node.js applications on Azure, check out the next article series.

To learn more about working with Kubernetes on Azure, explore The Kubernetes Bundle | Microsoft Azure and Hands-On Kubernetes on Azure | Microsoft Azure.