Here we set up the CI/CD base – GitHub, Docker, and Google Cloud Platform.

In this series of articles, we’ll walk you through the process of applying CI/CD to AI tasks. You’ll end up with a functional pipeline that meets the requirements of level 2 in the Google MLOps Maturity Model. We assume that you have some familiarity with Python, Deep Learning, Docker, DevOps, and Flask.

In the previous article, we briefly explained CI/CD in the context of Machine Learning (ML). In this one, we’ll set up the environment for our ML pipeline.

Git Repositories

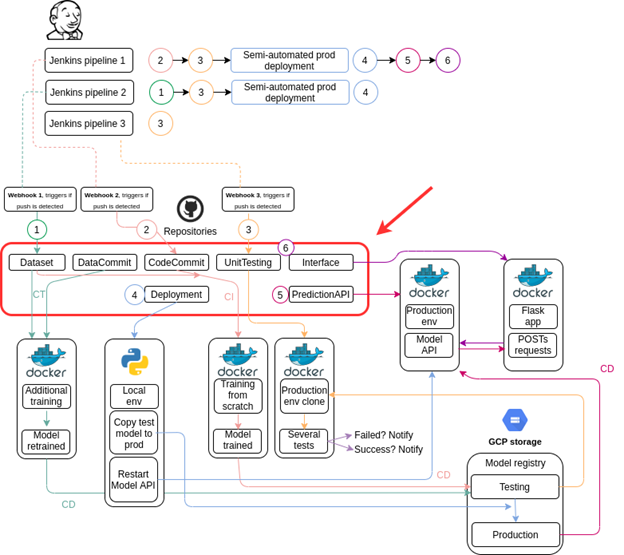

This project will include six mandatory repositories – Dataset, DataCommit, CodeCommit, UnitTesting, PredictionAPI, Deployment – and a "bonus" one, Interface. These are framed in red in the diagram.

- The Dataset repository contains the preprocessed dataset(s) used to train or update models. Whenever the pipeline detects a change in this repository, it will trigger the continuous training step.

- The DataCommit repository contains the code that allows the pipeline to trigger continuous training whenever new data is pushed.

- The CodeCommit repository contains the code that trains the model from scratch to support continuous integration.

- The UnitTesting repository contains the code that enables the pipeline to verify whether the model is available in the testing registry and then run tests against it.

- The PredictionAPI repository contains the code that’s in charge of running the prediction service itself. It loads the model available in the production registry and exposes it through an API.

- The Deployment repository contains the code that copies the model from the testing registry to the production registry (once the model has successfully passed the tests), then gradually loads the new model onto the Prediction API instances with zero downtime.

- The Interface repository contains a very basic Flask web interface that communicates with the API to support prediction requests.

To get access to the above repositories, create a project folder on your computer, open a terminal window from this folder, and run:

git clone https://github.com/sergiovirahonda/AutomaticTraining-Dataset.git

git clone https://github.com/sergiovirahonda/AutomaticTraining-DataCommit.git

git clone https://github.com/sergiovirahonda/AutomaticTraining-CodeCommit.git

git clone https://github.com/sergiovirahonda/AutomaticTraining-UnitTesting.git

git clone https://github.com/sergiovirahonda/AutomaticTraining-PredictionAPI.git

git clone https://github.com/sergiovirahonda/AutomaticTraining-Deployment.git

git clone https://github.com/sergiovirahonda/AutomaticTraining-Interface.git
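If you’d rather not type the seven commands one by one, the same step can be scripted. The following is a minimal sketch, not part of the original repositories: the helper names `clone_url` and `clone_all` are our own, and it assumes that `git` is installed and available on your PATH. It builds the same URLs as the commands above and skips any repository that has already been cloned.

```python
import subprocess
from pathlib import Path

# Account and repository names taken from the git clone commands above.
BASE_URL = "https://github.com/sergiovirahonda"
REPOSITORIES = [
    "Dataset",
    "DataCommit",
    "CodeCommit",
    "UnitTesting",
    "PredictionAPI",
    "Deployment",
    "Interface",
]


def clone_url(name: str) -> str:
    """Build the clone URL for one repository (hypothetical helper)."""
    return f"{BASE_URL}/AutomaticTraining-{name}.git"


def clone_all(target_dir: Path) -> None:
    """Clone every repository into target_dir, skipping existing folders."""
    for name in REPOSITORIES:
        destination = target_dir / f"AutomaticTraining-{name}"
        if destination.exists():
            print(f"Skipping {name}: {destination} already exists")
            continue
        # check=True raises CalledProcessError if git exits with an error.
        subprocess.run(
            ["git", "clone", clone_url(name), str(destination)],
            check=True,
        )


# To clone everything into the current folder, call:
# clone_all(Path.cwd())
```

Save this in your project folder and call `clone_all(Path.cwd())` from there. The result should be the same seven folders that the individual commands create.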

Google Cloud Platform

Note: You can use a cloud provider other than Google. In that case, you’ll need to follow that provider’s procedure.

For Google Cloud Platform (GCP):

Docker

To install Docker on your local machine, follow the instructions in this guide.
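Once the installation finishes, it’s worth confirming that the Docker client is reachable from your terminal. Here is a small sketch of one way to do that from Python. The function name `docker_version` is our own, and the check only runs `docker --version`, so it tells you the client is installed, not that the Docker daemon is running.

```python
import shutil
import subprocess
from typing import Optional


def docker_version() -> Optional[str]:
    """Return the output of `docker --version`, or None if it is unavailable."""
    executable = shutil.which("docker")
    if executable is None:
        # The docker client is not on PATH.
        return None
    result = subprocess.run(
        [executable, "--version"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None
    return result.stdout.strip()


version = docker_version()
if version is None:
    print("Docker was not found on PATH; check your installation.")
else:
    print(version)
```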

Next Steps

Our environment is now ready for the ML pipeline. Time to get into the actual code. In the next article, we’ll implement automatic training. Stay tuned!