Here we configure GKE and prepare all the components to start our pipeline deployment.

In a previous series of articles, we explained how to code the scripts to be executed in our group of Docker containers as part of a CI/CD MLOps pipeline. In this series, we’ll set up a Google Kubernetes Engine (GKE) cluster to deploy these containers.

This article series assumes that you are familiar with Deep Learning, DevOps, Jenkins, and Kubernetes basics.

In the previous article of this series, we set up a GKE cluster. In this one, we’ll work with Kubernetes jobs and deployments. In this project, the jobs – which are intended to complete a task and then terminate – will perform tasks such as model training and testing. The deployment, which never ends unless you explicitly terminate it, will keep our prediction API alive.

Jobs, services, and deployments are declared as YAML files, in the same way we’ve defined secrets.

The Job YAML File

Let’s take a look at the YAML file that handles the AutomaticTraining-CodeCommit job:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: gke-training-code-commit
    spec:
      backoffLimit: 1
      activeDeadlineSeconds: 900
      ttlSecondsAfterFinished: 60
      template:
        spec:
          containers:
          - name: code-commit
            image: gcr.io/automatictrainingcicd/code-commit:latest
            env:
            - name: gmail_password
              valueFrom:
                secretKeyRef:
                  name: gmail-secrets
                  key: gmail_password
            - name: email_address
              value: svirahonda@gmail.com
          restartPolicy: OnFailure

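In the above file:

- kind: "Job" states the execution type.
- name: "gke-training-code-commit" is the name of the job.
- backoffLimit: "1" sets how many retries are allowed before the job is marked as failed.
- activeDeadlineSeconds: "900" terminates the job if it runs for more than 900 seconds.
- ttlSecondsAfterFinished: "60" deletes the job 60 seconds after it finishes.
- image: "gcr.io/automatictrainingcicd/code-commit:latest" indicates what container image from the GCR to use.
- env: passes the password stored in the "gmail-secrets" secret, along with the email address, to our container as environment variables.
- restartPolicy: "OnFailure" restarts the container if it exits with an error.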

The Deployment YAML File

Let’s inspect the most relevant labels in the YAML file for the AutomaticTraining-PredictionAPI deployment.

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: gke-api
      labels:
        app: api
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: api
      template:
        metadata:
          labels:
            app: api
        spec:
          containers:
          - name: api
            image: gcr.io/automatictrainingcicd/prediction-api:latest
            imagePullPolicy: Always
            ports:
            - containerPort: 5000
            env:
            - name: gmail_password
              valueFrom:
                secretKeyRef:
                  name: gmail-secrets
                  key: gmail_password
            - name: email_address
              value: svirahonda@gmail.com
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: gke-api
      labels:
        app: api
    spec:
      clusterIP: 10.127.240.120
      ports:
      - port: 5000
        protocol: TCP
      selector:
        app: api
      type: LoadBalancer

- kind: "Deployment" states the execution type.
- name: "gke-api" is the name of the deployment.
- replicas: "2" defines how many pod replicas will execute the program.
- image: "gcr.io/automatictrainingcicd/prediction-api:latest" indicates what container image from the GCR to use.
- imagePullPolicy: "Always" forces Kubernetes to pull the image from the registry every time a pod starts, instead of reusing a cached copy.
- containerPort: "5000" declares that the container listens on port 5000.
- The env: label passes the info stored in the secrets.yaml file to our container as environment variables.
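Inside the container, the env entries arrive as ordinary environment variables. Here is a minimal sketch of how a Python script might read them, assuming the variable names used in the manifest (gmail_password and email_address). The helper name load_email_settings is hypothetical and is not taken from the project's code:

```python
import os


def load_email_settings(environ=None):
    """Return (email_address, gmail_password) read from environment variables.

    The variable names match the env entries in the manifest; the function
    itself is a hypothetical helper for illustration.
    """
    environ = os.environ if environ is None else environ
    required = ("email_address", "gmail_password")
    missing = [name for name in required if name not in environ]
    if missing:
        # Fail early with a clear message if a value was not injected into the pod.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return environ["email_address"], environ["gmail_password"]
```

Failing early like this makes a missing or misnamed secret visible in the pod logs right away, rather than later when the script tries to send an email.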

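Note that there’s yet another block in this file – a Service of type "LoadBalancer." This service routes traffic to the Deployment pods through a fixed address and port: inside the cluster, the API is reachable at the cluster IP 10.127.240.120 on port 5000. Because the type is LoadBalancer, GKE also provisions an external IP address for it.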
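The Service finds its pods through the label selector: any pod whose labels include every key/value pair in the selector receives traffic. Here is a small sketch of that matching rule in Python, using the labels from the manifests above. The function selector_matches is a hypothetical helper written for illustration, not part of any Kubernetes client library:

```python
def selector_matches(selector, pod_labels):
    """Return True if every key/value pair in the selector appears in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


service_selector = {"app": "api"}  # spec.selector of the gke-api Service
pod_labels = {"app": "api"}        # spec.template.metadata.labels of the Deployment

print(selector_matches(service_selector, pod_labels))
```

If the labels in the Deployment template and the Service selector drift apart, the Service simply has no pods to route to, so this pair of values is worth checking first when the API seems unreachable.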

File Structure

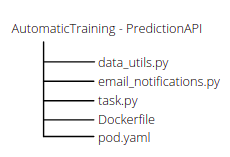

Maybe you’re wondering where these to be passed to Kubernetes, the YAML files should be saved in the same directory where your .py files reside with the .yaml extension, and pushed to the corresponding repository. This way, every repository will have its own .yaml file that will indicate Kubernetes how to act. The AutomaticTraining-PredictionAPI file structure should look as follows:

You can find all the YAML files for our project here: AutomaticTraining-CodeCommit, AutomaticTraining-DataCommit, AutomaticTraining-UnitTesting, AutomaticTraining-PredictionAPI, and AutomaticTraining-Interface (optional).
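Because kubectl accepts JSON manifests as well as YAML, a job manifest can also be generated from code. The sketch below rebuilds the job shown earlier as a Python dictionary. The function name build_job_manifest and its parameters are illustrative assumptions, the default values are copied from the gke-training-code-commit job, and the email address is a placeholder:

```python
import json


def build_job_manifest(name, container_name, image, email_address,
                       backoff_limit=1, deadline_seconds=900, ttl_seconds=60):
    """Build a batch/v1 Job manifest equivalent to the YAML shown earlier."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": backoff_limit,
            "activeDeadlineSeconds": deadline_seconds,
            "ttlSecondsAfterFinished": ttl_seconds,
            "template": {
                "spec": {
                    "containers": [{
                        "name": container_name,
                        "image": image,
                        "env": [
                            # The password comes from the gmail-secrets secret.
                            {"name": "gmail_password",
                             "valueFrom": {"secretKeyRef": {
                                 "name": "gmail-secrets",
                                 "key": "gmail_password"}}},
                            # The address is passed as a plain value.
                            {"name": "email_address", "value": email_address},
                        ],
                    }],
                    "restartPolicy": "OnFailure",
                },
            },
        },
    }


manifest = build_job_manifest(
    name="gke-training-code-commit",
    container_name="code-commit",
    image="gcr.io/automatictrainingcicd/code-commit:latest",
    email_address="you@example.com",  # placeholder address
)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied the same way as a YAML manifest. Whether generating manifests is worth it depends on how much the jobs in your repositories actually differ; for a handful of files, hand-written YAML is perfectly reasonable.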

Next Steps

We’re all set to start deployment on Kubernetes. Once you have built and pushed your containers to GCR, you can deploy applications to GKE by running kubectl apply -f pod.yaml from the corresponding apps’ directories.
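If you prefer to script this step, the command can be wrapped in a few lines of Python. This is only a sketch: it assumes kubectl is installed and already pointed at your GKE cluster, that each app directory contains a pod.yaml, and that the directory names you pass in match your local checkouts. The function names are hypothetical:

```python
import subprocess


def kubectl_apply_command(manifest_name="pod.yaml"):
    """Return the kubectl command that applies a manifest from the working directory."""
    return ["kubectl", "apply", "-f", manifest_name]


def deploy(app_dirs, manifest_name="pod.yaml", execute=False):
    """Plan (and optionally run) `kubectl apply -f <manifest>` in each app directory.

    With execute=False the commands are only collected and returned,
    so the plan can be inspected before anything touches the cluster.
    """
    planned = []
    for app_dir in app_dirs:
        command = kubectl_apply_command(manifest_name)
        planned.append((app_dir, command))
        if execute:
            # check=True raises CalledProcessError if kubectl exits with an error.
            subprocess.run(command, cwd=app_dir, check=True)
    return planned


for directory, command in deploy(["AutomaticTraining-CodeCommit"]):
    print(directory, " ".join(command))
```

Calling deploy with execute=True runs the commands for real.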

In the next article, we’ll set up Jenkins CI for this project in order to start building and automating our MLOps pipelines. Stay tuned!